By: Aaron Bertrand | Comments (5) | Related: > Security

Problem

Row-Level Security, a new feature in SQL Server 2016, allows you to prevent unauthorized users from seeing certain rows in a table, without having to write your own filters. Think of a manager only being able to see their subordinates, or an account manager having access only to their own customers. All of this happens automatically, so it can be a very convenient way to implement security. But at what cost?

Solution

There is a performance penalty that can come into play when using Row-Level Security. I'm going to use a very simple example - an orders table, with three different users responsible for sales:

CREATE DATABASE RLS; GO USE RLS; CREATE USER Sales1 WITHOUT LOGIN; CREATE USER Sales2 WITHOUT LOGIN; CREATE USER Sales3 WITHOUT LOGIN; GO CREATE TABLE dbo.Orders ( OrderID int NOT NULL, SalesPerson sysname, OrderDate date NOT NULL CONSTRAINT df_OrderDate DEFAULT GETDATE(), CONSTRAINT pk_Orders PRIMARY KEY(OrderID), INDEX ix_Orders_sp (SalesPerson) ); GO GRANT SHOWPLAN TO Sales1, Sales2, Sales3; GRANT SELECT ON dbo.Orders TO Sales1, Sales2, Sales3;

Now, to illustrate how this affects performance, we'll insert 2,000 orders for the user Sales1, 20 orders for the user Sales2, and 2 orders for the user Sales3:

INSERT dbo.Orders(OrderID, SalesPerson) SELECT TOP (2000) rn = ROW_NUMBER() OVER (ORDER BY name), N'Sales1' FROM sys.all_columns UNION ALL SELECT TOP (20) 2000 + ROW_NUMBER() OVER (ORDER BY name), N'Sales2' FROM sys.all_objects UNION ALL SELECT 2021, N'Sales3' UNION ALL SELECT 2022, N'Sales3';

How we used to do it

In the old days, we would have relied on things like USER_NAME() or even passing a variable from the application to use in a where clause to produce only the rows the current user should access. That might look like this (and I'll be adding OPTION (RECOMPILE) throughout to ensure there are no tricks like relying on a previously cached plan):

DECLARE @SalesPerson sysname = USER_NAME(); SELECT OrderID, SalesPerson, OrderDate FROM dbo.Orders WHERE SalesPerson = @SalesPerson OPTION (RECOMPILE);

Let's execute this as each of our three users, and see what plans SQL Server comes up with to satisfy the queries:

ALTER DATABASE SCOPED CONFIGURATION CLEAR PROCEDURE_CACHE; DBCC DROPCLEANBUFFERS; DECLARE @sql nvarchar(max) = N' DECLARE @SalesPerson sysname = USER_NAME(); SELECT OrderID, SalesPerson, OrderDate FROM dbo.Orders WHERE SalesPerson = @SalesPerson OPTION (RECOMPILE);'; EXEC(@sql) AS USER = N'Sales1'; EXEC(@sql) AS USER = N'Sales2'; EXEC(@sql) AS USER = N'Sales3';

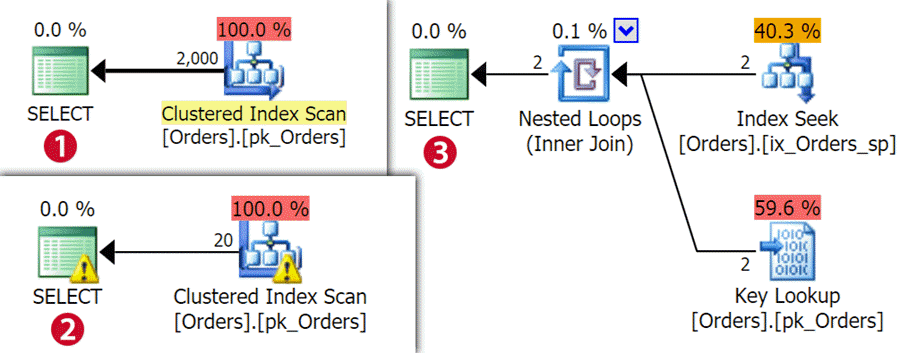

When you look at the execution plans, you can see that both the query for Sales1 (1) and Sales2 (2) have a clustered index scan, while Sales3 (3) - with only 2 rows - opted for an index seek plus a key lookup:

Now, there is some information in the properties for those operators, but runtime

performance is what most people care about. I ran the following batch 1,000 times

for each salesperson, clearing the cache and buffers again each time. Note that

I can't use OPTION (RECOMPILE) here, otherwise I wouldn't be able to

collect query stats (and that wouldn't be in typical production workloads anyway):

ALTER DATABASE SCOPED CONFIGURATION CLEAR PROCEDURE_CACHE; DBCC DROPCLEANBUFFERS; DECLARE @sql nvarchar(max) = N'DECLARE @OrderID int; SELECT @OrderID = OrderID FROM dbo.Orders WHERE SalesPerson = USER_NAME();'; EXEC(@sql) AS USER = N'Sales1'; -- run again for Sales2, Sales3 GO 1000 SELECT * INTO #MS1 -- run again for #MS2, #MS3 FROM sys.dm_exec_query_stats AS qs CROSS APPLY sys.dm_exec_sql_text(qs.plan_handle) AS t WHERE t.[text] LIKE '%Sales'+'Person%';

We'll come back and look at those runtime metrics later.

Now, with Row-Level Security

First, we need a function that can allow SQL Server to filter out rows a given salesperson should not have access to, and then a policy to associate the function with our orders table:

CREATE FUNCTION dbo.LimitOrders(@SalesPerson sysname)

RETURNS TABLE

WITH SCHEMABINDING

AS

RETURN

(

SELECT [Go] = 1 FROM dbo.Orders

WHERE @SalesPerson = USER_NAME()

OR IS_SRVROLEMEMBER(N'sysadmin') = 1

);

GO

CREATE SECURITY POLICY OrderPolicy

ADD FILTER PREDICATE dbo.LimitOrders(SalesPerson) ON dbo.Orders

WITH (STATE = ON);

Now, we can run our queries again, this time without the manual filters:

EXEC(N'SELECT * FROM dbo.Orders OPTION (RECOMPILE);') AS USER = N'Sales1'; EXEC(N'SELECT * FROM dbo.Orders OPTION (RECOMPILE);') AS USER = N'Sales2'; EXEC(N'SELECT * FROM dbo.Orders OPTION (RECOMPILE);') AS USER = N'Sales3';

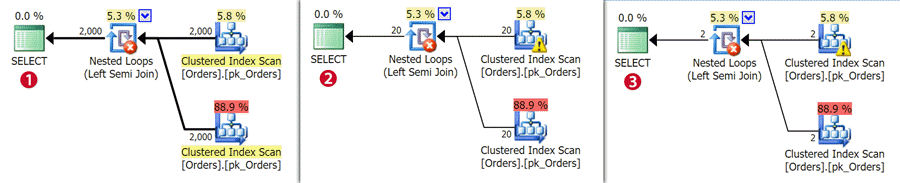

This time, the results show the same plan for all three queries, with two clustered index scans:

The expensive scan retrieves all the data, while the cheaper one finds all the

rows where SalesPerson = USER_NAME(). I could play with hinting to

see if there is any way to make SQL Server the index on SalesPerson

(without simplifying the function to remove the sysadmin check), but for now I'll

leave that as an exercise to the reader.

Again, I'm going to save off the results of a batch of 1,000 executions so we can look at performance metrics:

ALTER DATABASE SCOPED CONFIGURATION CLEAR PROCEDURE_CACHE; DBCC DROPCLEANBUFFERS; DECLARE @sql nvarchar(max) = N'DECLARE @OrderID int; SELECT @OrderID = OrderID FROM dbo.Orders WHERE SalesPerson = USER_NAME();'; EXEC(@sql) AS USER = N'Sales1'; -- run again for Sales2, Sales3 GO 1000 SELECT * INTO #PS1 -- run again for #PS2, #PS3 FROM sys.dm_exec_query_stats AS qs CROSS APPLY sys.dm_exec_sql_text(qs.plan_handle) AS t WHERE t.[text] LIKE '%Sales'+'Person%';

With Context

Another way you can write the function is to make use of SESSION_CONTEXT()

- we can simply change the filter to check the value of a specific session context

value we've set. The function would change like this (which can be done online):

ALTER FUNCTION dbo.LimitOrders(@SalesPerson sysname)

RETURNS TABLE

WITH SCHEMABINDING

AS

RETURN

(

SELECT [Go] = 1 FROM dbo.Orders

WHERE @SalesPerson = SESSION_CONTEXT(N'UserName')

OR IS_SRVROLEMEMBER(N'sysadmin') = 1

);

GO

Then we can change our queries slightly to see what those plans look like:

EXEC(N'DECLARE @u sysname = USER_NAME(); EXEC sys.sp_set_session_context @key = N''UserName'', @value = @u; SELECT @OrderID = OrderID FROM dbo.Orders;') AS USER = N'Sales1'; -- repeat for Sales2, Sales3

And here we can see that shifting from the direct check against USER_NAME()

to using SESSION_CONTEXT() actually provides the optimizer a little

leeway again:

One more time, we'll clear the procedure cache and drop all the clean buffers, run the query 1,000 times, and then save off the query stats metrics for further analysis:

EXEC(N'DECLARE @u sysname = USER_NAME();

EXEC sys.sp_set_session_context @key = N''UserName'', @value = @u;

DECLARE @i int = 1, @OrderID int;

WHILE @i <= 1000

BEGIN

SELECT @OrderID = OrderID FROM dbo.Orders;

SET @i += 1;

END') AS USER = N'Sales1'; -- repeat for Sales2, Sales3

SELECT * INTO #CX1 -- run again for #CX2, #CX3

FROM sys.dm_exec_query_stats AS qs

CROSS APPLY sys.dm_exec_sql_text(qs.plan_handle) AS t

WHERE t.[text] LIKE '%Sales'+'Person%';

Performance Comparison

With performance data already collected, it was easy to see how the different options performed at scale:

SELECT Iteration, rows_per = total_rows/execution_count, total_elapsed_time, avg_time = CONVERT(decimal(10,2),total_elapsed_time*1.0/execution_count), total_logical_reads FROM ( SELECT Iteration = 'Manual-Sales1' ,* FROM #MS1 UNION ALL SELECT 'Manual-Sales2' ,* FROM #MS2 UNION ALL SELECT 'Manual-Sales3' ,* FROM #MS3 UNION ALL SELECT 'Policy-Sales1' ,* FROM #PS1 UNION ALL SELECT 'Policy-Sales2' ,* FROM #PS2 UNION ALL SELECT 'Policy-Sales3' ,* FROM #PS3 UNION ALL SELECT 'Context-Sales1',* FROM #CS1 UNION ALL SELECT 'Context-Sales2',* FROM #CS2 UNION ALL SELECT 'Context-Sales3',* FROM #CS3 ) AS x ORDER BY RIGHT(iteration,1), avg_time;

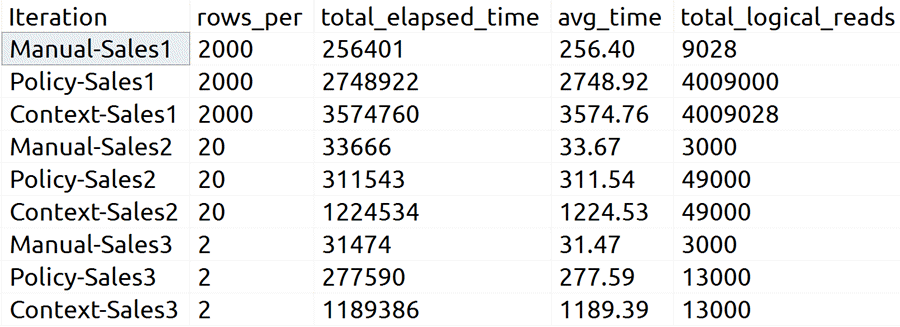

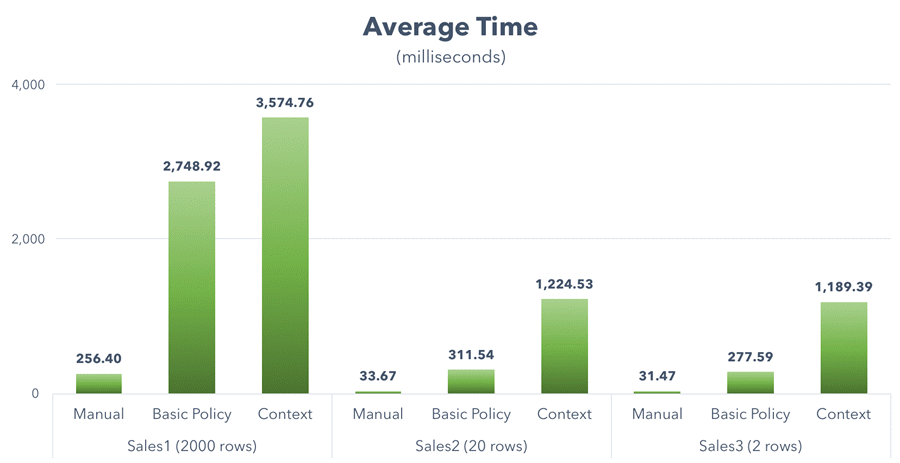

And here were the results in my tests:

The optimizer freedom afforded by the use of SESSION_CONTEXT(),

as it turns out, made average performance even worse than the standard policy, which

used the same plan throughout all tests. Here is average time on a graph:

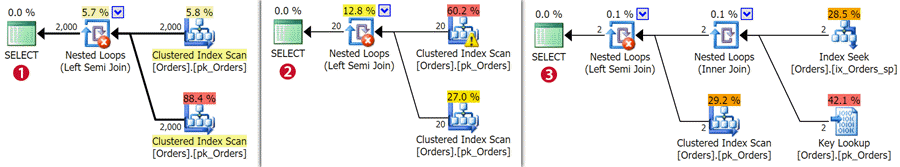

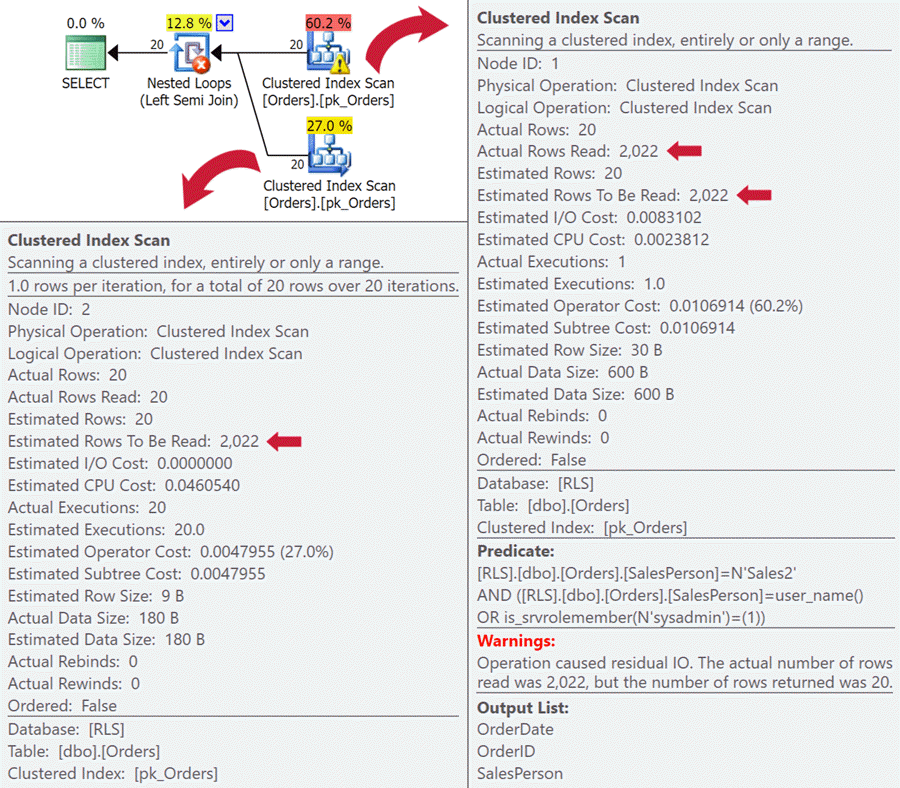

Also, when execution plans look alike, you should investigate them more deeply than just the graphical plans. In this case, it is obvious that the runtime performance has been drastically impacted by the introduction of the predicate function, even though the graphical execution plan gave no indication. If you dig deeper, though, you can see that in some cases there are bad estimated number of rows to be read - it's almost as if the security predicate is applied way too late to avoid parameter sniffing or just escalating to the whole table:

Similar phenomena exist in other plans above, but it is clear that the RLS functions - at least when relying on data in the table - add a performance impact for some kind of read operation that can't be folded into the original table access (be it a seek or a scan).

Summary

Row-Level Security introduces a very convenient way to implement proper access

controls on your data, with minimal impact to existing code. However, there are

performance implications that you should consider and test with a realistic data

set and workload before arriving at a solution. One thing I want to investigate

is how to use SESSION_CONTEXT() to avoid some of those OR

conditionals that are eventually going to make their way into your RLS policy functions,

and perhaps also design suggestions to avoid two trips to the base table. For future

tips, maybe.

Next Steps

- If you are planning on implementing Row-Level Security, be sure that you test out performance. The overhead may be worth the convenience, but you don't want this to be a surprise.

- See these previous tips:

- SQL Server 2016 Row Level Security Introduction

- Implement Row Level Security Natively with SQL Server 2016

- SQL Server 2016 Row Level Security Example

- Test Performance Overhead of SQL Server Row Level Security

- SQL Server 2016 Row Level Security Limitations, Performance and Troubleshooting

- Beware of Side-Channel Attacks in Row-Level Security in SQL Server 2016

About the author

Aaron Bertrand (@AaronBertrand) is a passionate technologist with industry experience dating back to Classic ASP and SQL Server 6.5. He is editor-in-chief of the performance-related blog, SQLPerformance.com, and also blogs at sqlblog.org.

Aaron Bertrand (@AaronBertrand) is a passionate technologist with industry experience dating back to Classic ASP and SQL Server 6.5. He is editor-in-chief of the performance-related blog, SQLPerformance.com, and also blogs at sqlblog.org.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips