By: John Miner

Problem

Today, many companies are living in a hybrid world in which data resides not only on-premises in a local data center, but also in the cloud at a Microsoft Azure data center. There is an increasing need to share data not only with internal resources, but also with our customers. How can we keep an on-premises SQL Server database in synch with an Azure SQL database?

Solution

Microsoft SQL Server has supported replication services for almost two decades. Transactional replication allows a database administrator to keep two databases in synch with minimal delay. Support for push replication from on-premises to an in-cloud Azure SQL database was added in November 2015. See this old announcement for details.

Business Problem

This tip assumes you know how to create an Azure SQL Server and Azure SQL Database. If you are not familiar with these tasks, please see my earlier tip that goes over these basic steps using PowerShell.

There are many financial companies that invest in the stock market via mutual funds. Our fictitious company named Big Jon Investments is such a company. They currently track the S&P 500 mutual fund data for the last 12 months in a STOCKS table in the ACTIVE schema. Older data is rolled off monthly to a matching table in the HISTORY schema.

Our task is to replicate the active data from an on-premises SQL Server database to an in-cloud Azure SQL database. Our boss has asked us to work on this proof of concept and report back to him/her with our results.

SQL Databases

This article assumes you have read my previous tip on “Using Snapshot Replication with an Azure SQL Database”. I would like to point out that either a virtual or physical machine can be used for the publisher. The only requirement is a working install of SQL Server Agent. I chose to use an Azure Virtual machine named sql16tips and the Azure SQL Server named mssqltips17. Finally, push snapshot replication exists between the publisher (sql16tips) and the subscriber (mssqltips17).

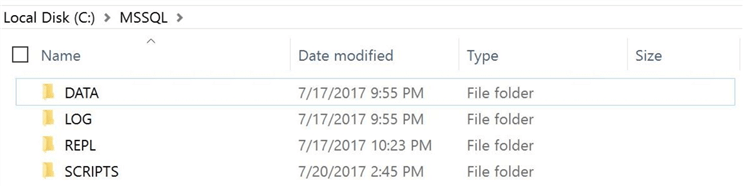

I am going to connect to the Azure Virtual machine using the Remote Desktop Protocol (RDP). This article assumes you have the following directory structure and the database engine has full access to read/write files.

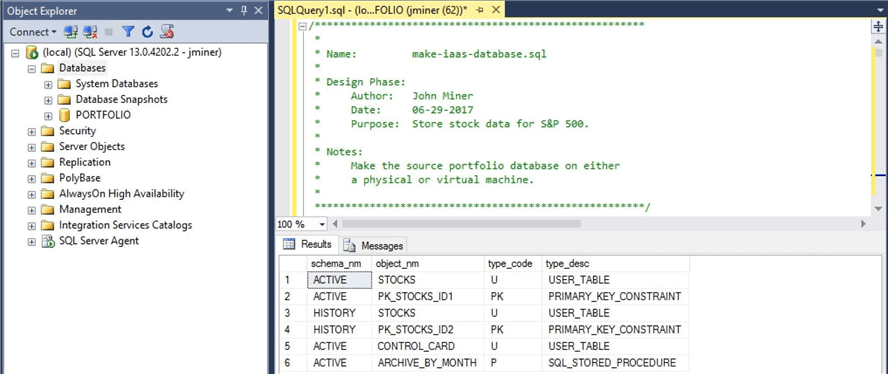

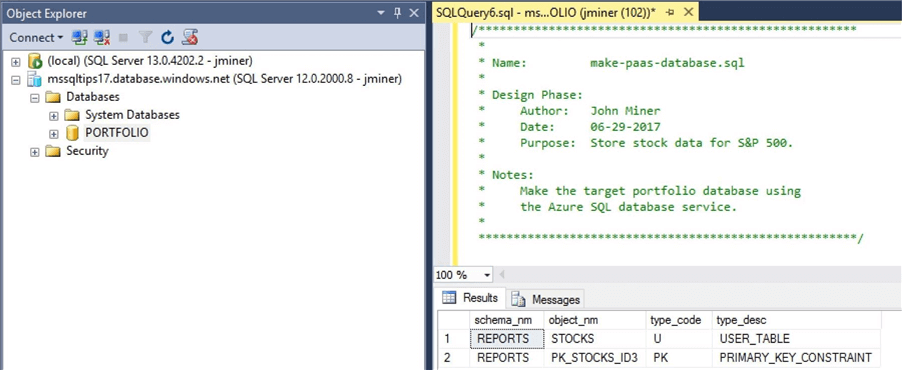

The database engine contains system catalog views that can be used to display information about user defined objects. The T-SQL snippet below displays the schema name, object name, object type and object type description for each object that was not shipped from Microsoft.

--

-- Show user defined objects

--

SELECT s.name as schema_nm, o.name as object_nm, o.type as type_code, o.type_desc

FROM sys.objects o LEFT JOIN sys.schemas s ON o.schema_id = s.schema_id

WHERE o.is_ms_shipped = 0;

GO

Launch SQL Server Management Studio (SSMS) and log onto our local database server named sql16tips using the jminer account. Let us look at the schema of the publisher database. If you successfully executed the snippet above, you should see the following results in the query output window. Three tables, two primary keys and one stored procedure currently exist in the source database.

Open another database connection to our remote database server named mssqltips17 using the jminer account. Let’s examine the schema of the subscriber database. If you successfully executed the snippet above, you should see the following results in the query output window. One table and one primary key exist in the target database.

Our next task is to remove the existing replication and clear the STOCKS tables of any data.

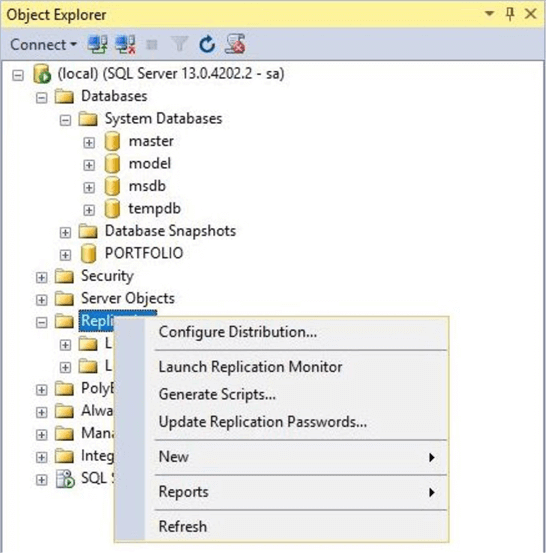

Removing Replication

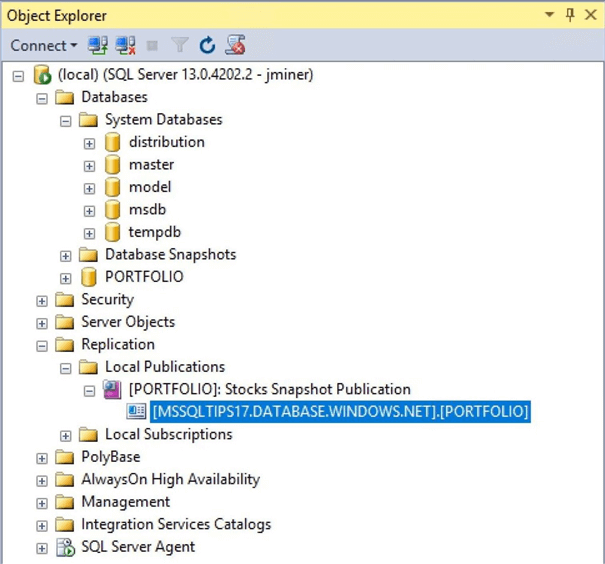

If we expand the items in the object explorer in SQL Server Management Studio (SSMS), we can see the existing subscriber for the Stocks Snapshot Publication. Right click on the subscriber and select delete. Repeat this process for the publisher. When this task is complete, no items should exist under the local publications tab.

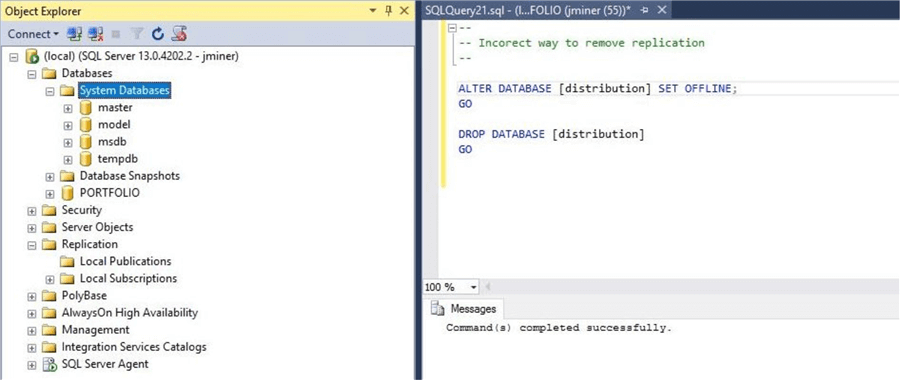

We can see from the image above that the distribution database created by replication still exists after removing the local publication. Let’s say a junior database administrator puts this database in an OFFLINE state and DROPS the database from the server. See image below for details.

Is this the correct way to remove the last remains of replication? Halt! Step away from Management Studio. This is not the correct way to remove replication.

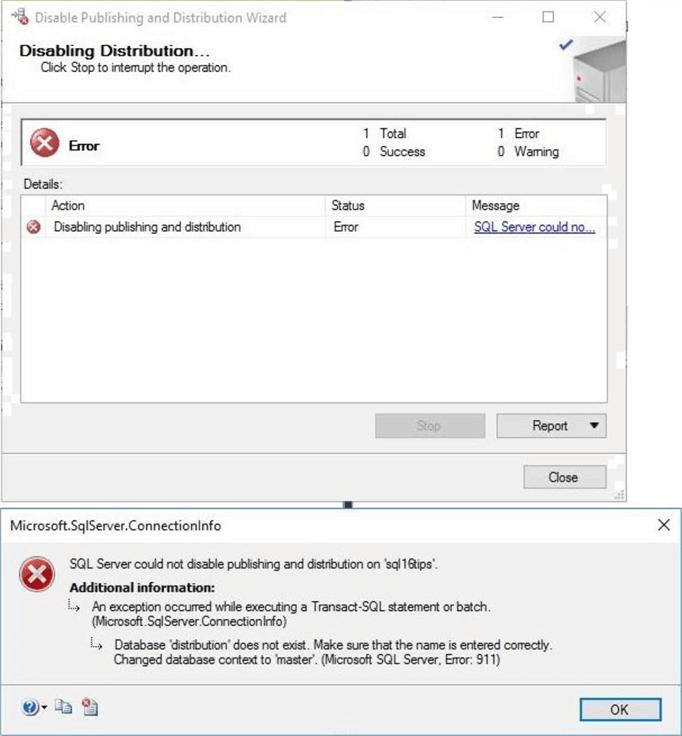

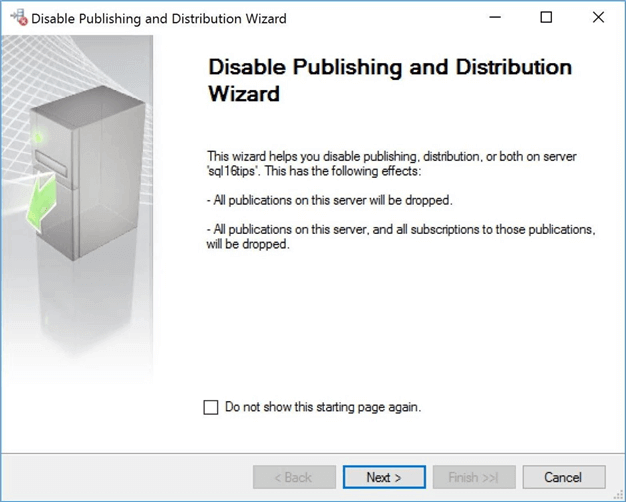

You should use the disable publishing and distribution wizard to clean up the server. Even the wizard can’t help us when the distribution database has been dropped. We are lucky that the database engine designers have planned for this incorrect user action.

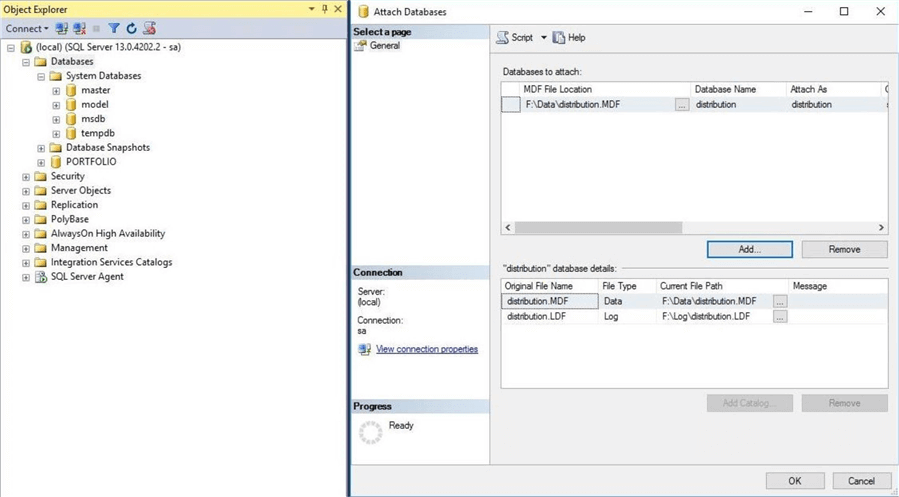

The distribution database still exists on the file system. We just need to re-attach the database using the system administrator account. The image below shows how to perform this action using SQL Server Management Studio. Just right click the database node in the object explorer to start the attach databases task via a dialog box.

The correct way to remove replication from a server is to use the wizard. Optionally, you can look at the Transact-SQL code generated by the wizard. I suggest this option for users who want to become experts.

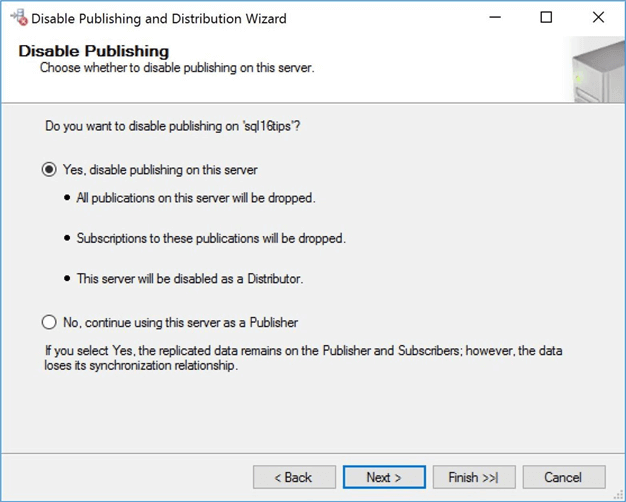

The wizard lists the actions that will happen if you choose yes. Click the Next button to continue.

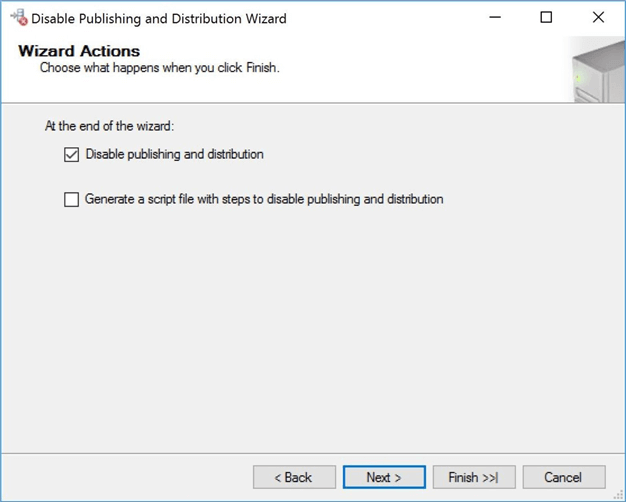

The next screen allows you to disable publishing and distribution or generate a script file. Choose the default action and click the Next button to advance.

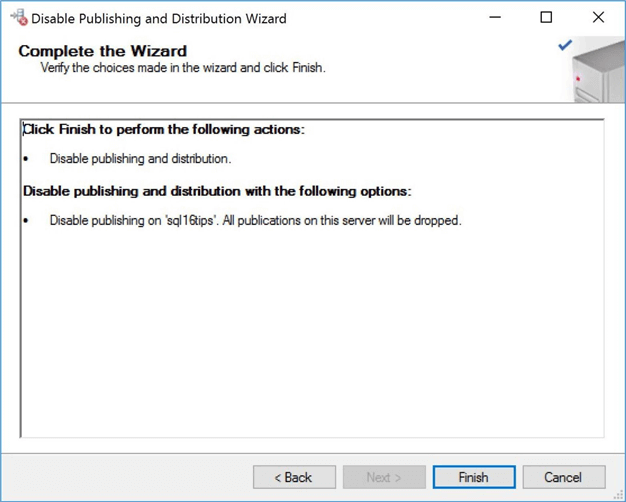

Like most wizards, you are prompted with a final list of choices that were selected during the process. Either exit the screen by hitting the Cancel button or perform the actions by clicking the Finish button.

If you successfully remove replication, the option for the wizard will disappear from the right click menu. The image below shows a screen shot of Object Explorer after all remnants of replication have been removed.

To recap this section, always use the right click wizard to remove subscriptions, publications and replication. The use of Transact SQL (T-SQL) to manage replication is an advanced topic which will not be covered today. If you are interested, here is a link to the stored procedure that can be used to remove a subscription. Likewise, here is a link to a stored procedure that can be used to remove a publication. Use the scripts generated by the replication wizards as a starting point of your T-SQL journey.
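For readers who want a feel for what such a script looks like, here is a minimal sketch of removing the snapshot subscription and publication with the system stored procedures. It is not the script the wizard generates. The fully qualified subscriber name is an assumption (use whatever name was registered when the subscription was created), and the calls are meant to run at the publisher in the publication database.

```sql
-- Run at the publisher (sql16tips) in the publication database.
USE [PORTFOLIO];
GO

-- Remove the push subscription for every article in the publication.
-- The subscriber name below is an assumption; use the registered name.
EXEC sp_dropsubscription
    @publication = N'Stocks Snapshot Publication',
    @article = N'all',
    @subscriber = N'mssqltips17.database.windows.net',
    @destination_db = N'PORTFOLIO';
GO

-- Remove the publication itself.
EXEC sp_droppublication
    @publication = N'Stocks Snapshot Publication';
GO

-- Turn off the publish option once no publications remain in the database.
EXEC sp_replicationdboption
    @dbname = N'PORTFOLIO',
    @optname = N'publish',
    @value = N'false';
GO
```

Disabling publishing and distribution on the server involves further procedures such as sp_dropdistpublisher, sp_dropdistributiondb and sp_dropdistributor. The script option in the wizard shows the exact calls and their order for your server.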

Reloading test data

Right now, we have two databases named PORTFOLIO in two different environments. The on-premises database is considered the source or publisher. The in-cloud database is considered the target or subscriber. Replication uses the concept of publishers and subscribers when defining objects. Before we can start working with transactional replication, we need to clear the STOCKS table of data in both environments.

How can we work on two different servers using one query window?

This is a great question! A great question is one for which I have already written a tip. One of my very first tips for www.mssqltips.com was “Connect an Azure SQL database to an On-Premises SQL Server”. This article creates a linked server to an Azure SQL Server database. The enclosed T-SQL script named make-linked-server creates a linked server named TIPS17.
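If you do not have the enclosed script handy, a linked server of this kind can be sketched as follows. This is an illustration and not the contents of make-linked-server: the OLE DB provider name, the fully qualified server name and the password placeholder are all assumptions that you must replace with your own values.

```sql
-- Run at the on-premises server (sql16tips).
USE [master];
GO

-- Define the linked server that points at the Azure SQL database.
-- The provider and the fully qualified server name are assumptions.
EXEC sp_addlinkedserver
    @server = N'TIPS17',
    @srvproduct = N'',
    @provider = N'SQLNCLI',
    @datasrc = N'mssqltips17.database.windows.net',
    @catalog = N'PORTFOLIO';
GO

-- Map every local login to a SQL login on the Azure side.
-- Replace the placeholder with the real password.
EXEC sp_addlinkedsrvlogin
    @rmtsrvname = N'TIPS17',
    @useself = N'FALSE',
    @locallogin = NULL,
    @rmtuser = N'jminer',
    @rmtpassword = N'<your password here>';
GO

-- Quick smoke test using 4-part notation.
SELECT COUNT(*) AS SUBSCRIBER_RECORD_CNT
FROM [TIPS17].[PORTFOLIO].[REPORTS].[STOCKS];
GO
```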

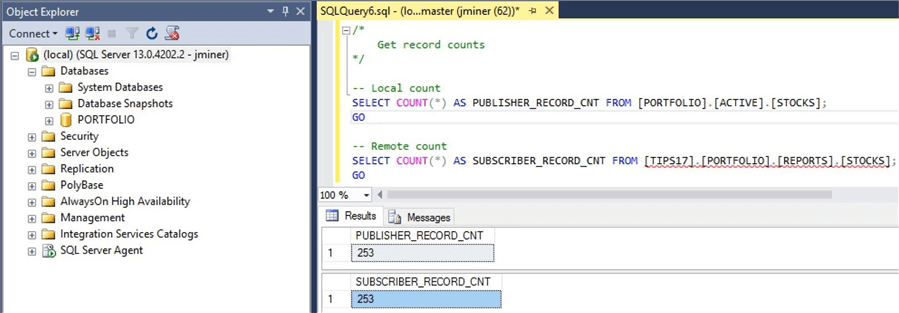

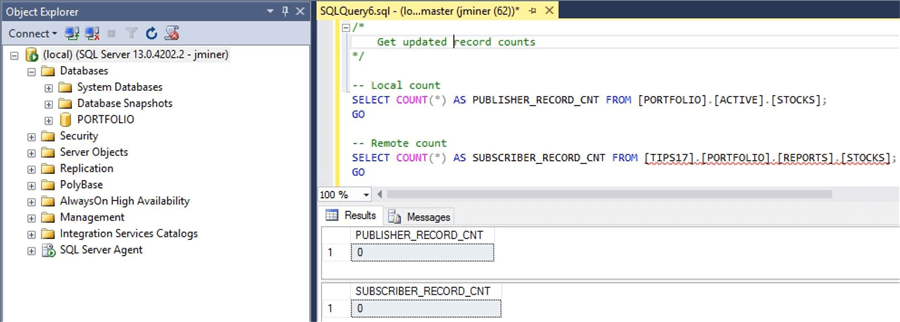

The T-SQL code below gets record counts from the STOCKS table in both the on-premises and in-cloud databases. Then, it deletes all records from those tables. Please note that the remote query uses 4-part notation which consists of the following: linked server name, database name, schema name and table name.

/*

Get record counts

*/

-- Local count

SELECT COUNT(*) AS PUBLISHER_RECORD_CNT FROM [PORTFOLIO].[ACTIVE].[STOCKS];

GO

-- Remote count

SELECT COUNT(*) AS SUBSCRIBER_RECORD_CNT FROM [TIPS17].[PORTFOLIO].[REPORTS].[STOCKS];

GO

/*

Clear tables

*/

-- Local delete

DELETE FROM [PORTFOLIO].[ACTIVE].[STOCKS];

GO

-- Remote delete

DELETE FROM [TIPS17].[PORTFOLIO].[REPORTS].[STOCKS];

GO

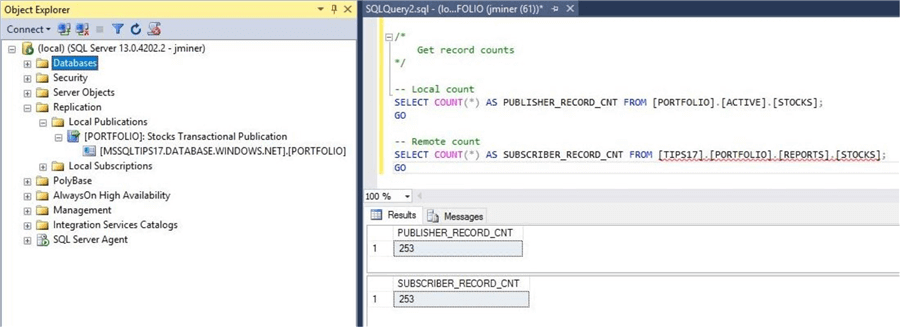

The screen shot below displays record counts from STOCKS tables located inside the publisher and subscriber databases. This execution took place before the data was cleared.

The screen shot below displays record counts after the data was cleared from the tables. Of course, a zero-record count is what we are looking for. The expected results are shown below.

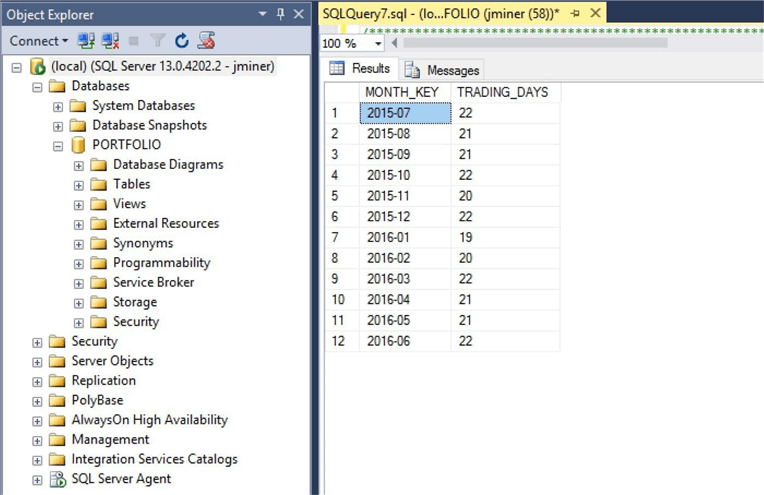

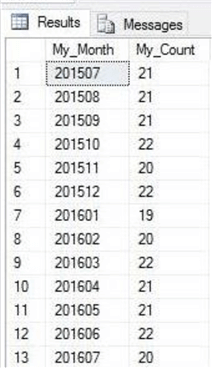

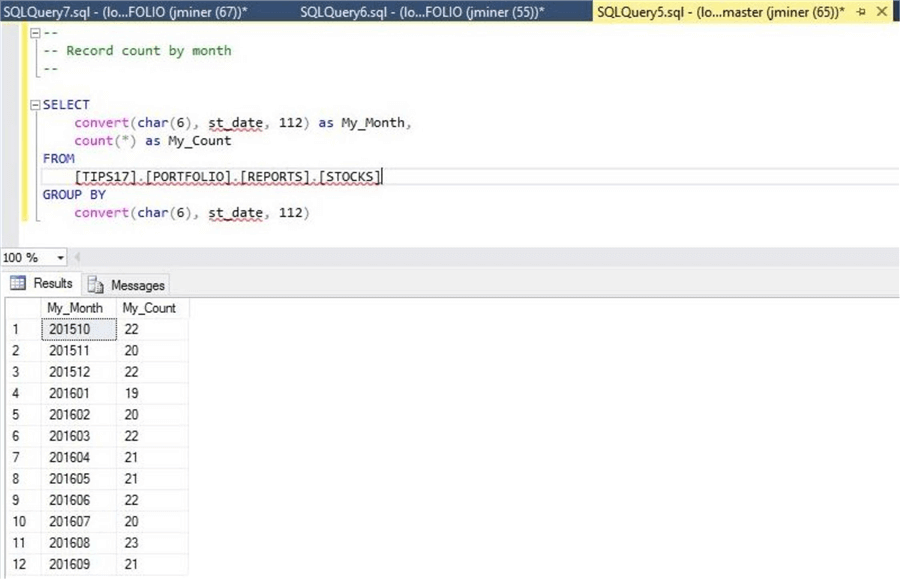

Please execute the T-SQL script named data-set-one to load the publisher with data. A simple group by query can be used to get record counts by month for the STOCKS table. The image below shows the number of trading days per month between July 2015 and June 2016. This investment company has a fiscal year that starts in July and runs to June. The same fiscal year is used at Microsoft to report earnings.
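The group by query is not part of the enclosed scripts, so here is one possible version. It assumes the trading date is stored in the ST_DATE column, which is the column used by the archive procedure later in this tip. The output column names are my own.

```sql
-- Record counts by year and month for the publisher table.
SELECT
    YEAR([ST_DATE]) AS STOCK_YEAR,
    MONTH([ST_DATE]) AS STOCK_MONTH,
    COUNT(*) AS RECORD_CNT
FROM [PORTFOLIO].[ACTIVE].[STOCKS]
GROUP BY YEAR([ST_DATE]), MONTH([ST_DATE])
ORDER BY STOCK_YEAR, STOCK_MONTH;
GO
```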

At this point, we have setup both our publisher and subscriber databases. Now we are ready to build our replication objects. Before we begin, let us have a high-level chat about the Microsoft SQL Server Transactional Replication architecture.

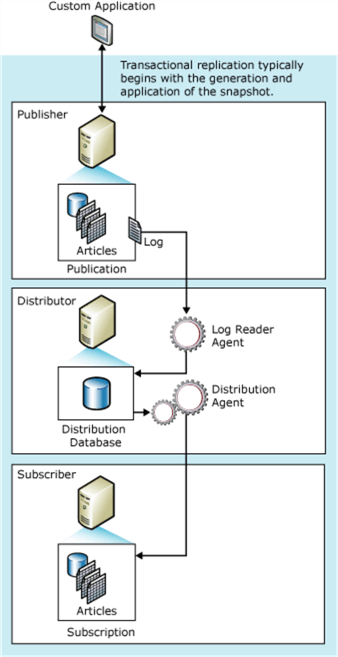

Transactional Replication Architecture

Microsoft replication services allows the database administrator to create a transactional replication process which keeps two databases in synch with minimal delay. The cool thing about this service is that not a single line of code needs to be written. If you were designing a transactional replication process by hand, a bunch of ETL code would need to be written to accomplish the same feat.

So, how does the transactional replication process work?

The process begins with generating and applying a snapshot to the subscriber. The Snapshot Agent generates snapshot files containing schema and data of published database objects, stores the files in the snapshot folder, and records synchronization jobs in the distribution database on the Distributor. The distribution agent applies the schema and data to the subscriber upon (re)initialization. This process allows the subscriber database to get caught up with the publisher database for a given point in time.

There are two key components to the transactional replication process.

The Log Reader Agent monitors the transaction log of each database configured for transactional replication. It copies the transactions marked for replication from the transaction log on the publisher into the distribution database. Any INSERT, UPDATE or DELETE commands that have been committed and pertain to a published article are moved to the distribution database. To summarize, the distribution database is a store and forward queue for the transactions. Usually, the log reader agent is set to run continuously.

The distribution agent can be set to run continuously or periodically. I suggest running this agent continuously for minimal delay in data arrival. This agent executes the recorded commands in order at each subscriber. These commands can be translated into either dynamic T-SQL or calls to stored procedures.
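Once replication has been configured, the agents described above can be listed from the system tables in the distribution database. The query below is a small sketch that assumes the distribution database uses the default name of distribution and that you are connected to the distributor.

```sql
-- Run at the distributor (sql16tips in this tip).
USE [distribution];
GO

-- One row per replication agent known to the distributor.
SELECT 'snapshot' AS agent_type, name, publisher_db, publication
FROM dbo.MSsnapshot_agents
UNION ALL
SELECT 'log reader', name, publisher_db, publication
FROM dbo.MSlogreader_agents
UNION ALL
SELECT 'distribution', name, publisher_db, publication
FROM dbo.MSdistribution_agents;
GO
```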

If you are truly interested in the finer details of this process, please look at this TECHNET article that does a great job of explaining the actual process. The image to the left shows a conceptual process executed by transactional replication.

I am using the wizard to generate and apply the initial snapshot. However, if you decide to become a replication guru who writes their own T-SQL, there are several more ways to execute the snapshot process. The list includes a recent FULL backup, custom ETL using a tool like SSIS, and/or promoting a warm stand by server for larger databases.

Publication Wizard

Microsoft has provided a wizard to help you fill in the blanks when defining a new publication. Navigate to the replication node under the object explorer in SSMS for the local server named sql16tips. Drill into the Local Publications entry. Right click to bring up the actions menu.

Select the new publication option to start the wizard. After choosing an option in each dialog box that is presented, click the Next button to continue. Eventually, you will end up with a Finish button that will execute all the actions that were selected.

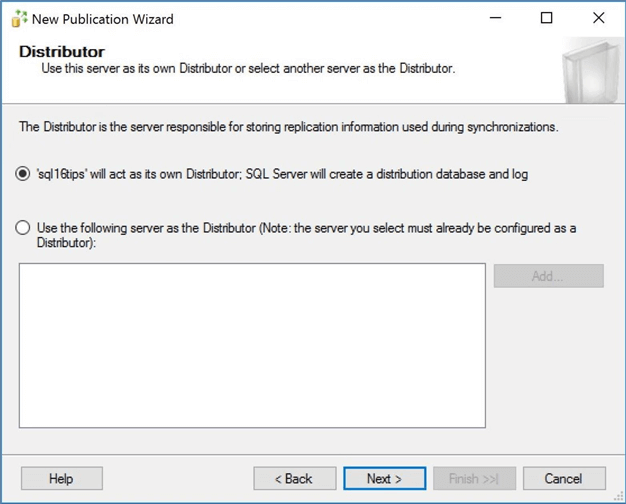

The distribution database will be defined on the local SQL Server named sql16tips.

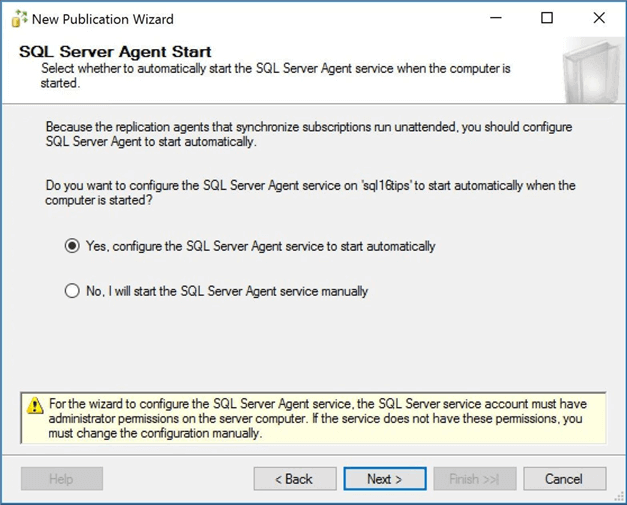

The SQL Server Agent plays a key part in replication services. The wizard has detected that this service is in manual start mode. It is suggesting that we change this setting to automatic start.

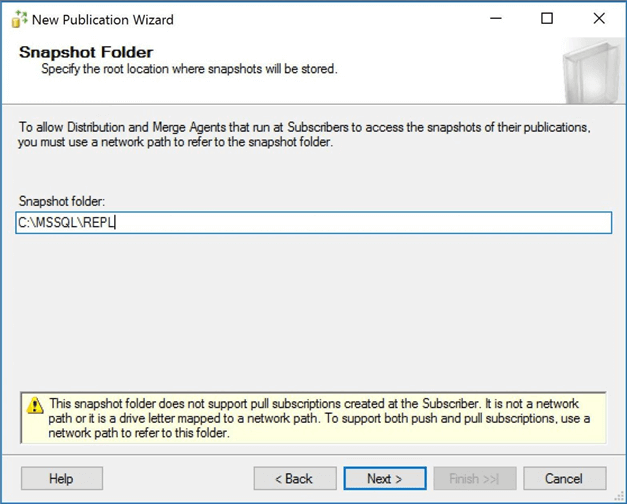

For each article in the publication, the snapshot agent generates schema and data files. The distribution agent reads this information and performs the correct actions to the subscriber. I chose to have the snapshot folder located in my user defined directory structure.

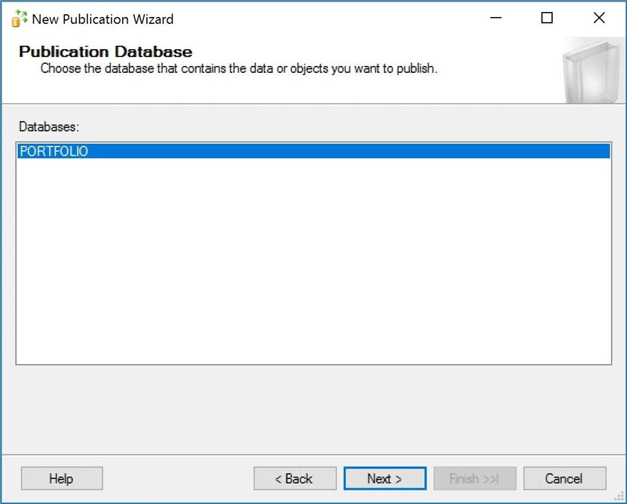

Choose the PORTFOLIO database as the source of our publication data.

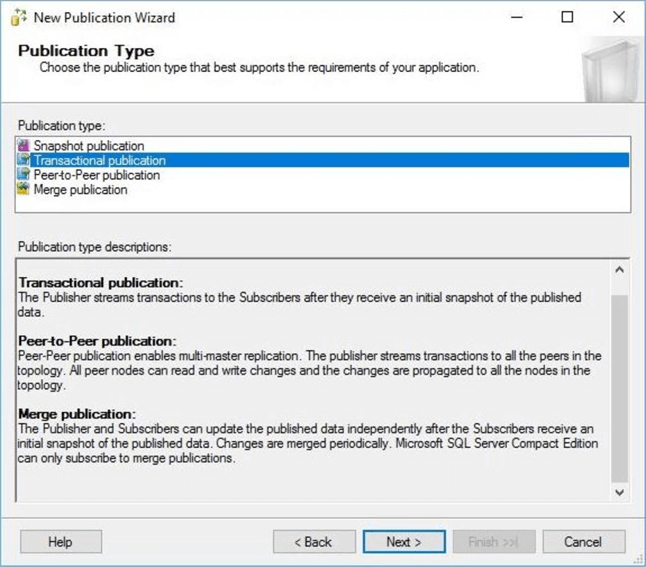

There are four different publication types. Only snapshot and transactional replication are supported with an Azure SQL database as a push subscriber. Today, we are talking about transactional replication. Choose this option as the publication type.

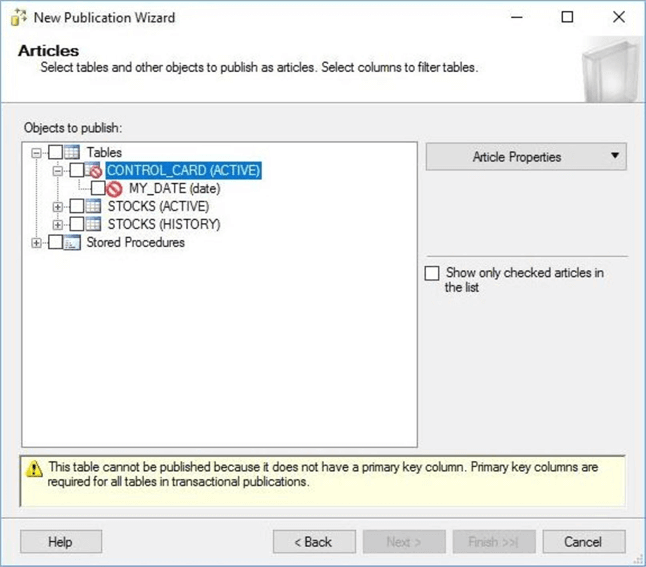

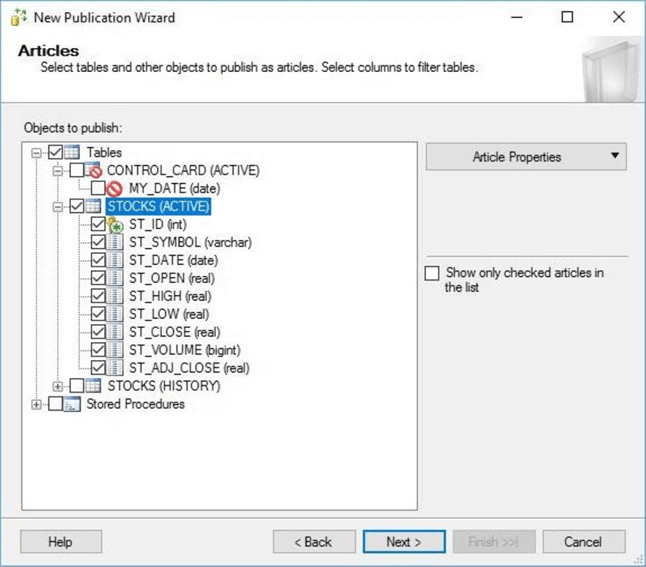

Transactional replication executes update and delete statements against the articles, tables and materialized views. With this said, each eligible table needs to have a primary key column defined. The wizard is smart enough to know that the CONTROL_CARD table does not satisfy this requirement and is ineligible for selection.
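If you want to know ahead of time which tables the wizard will reject, the catalog views can tell you. The query below is a sketch that lists user tables without a primary key in the publisher database.

```sql
-- Run at the publisher in the database you plan to publish.
USE [PORTFOLIO];
GO

-- User tables that have no primary key and therefore
-- cannot be published as transactional articles.
SELECT s.name AS schema_nm, t.name AS table_nm
FROM sys.tables t
JOIN sys.schemas s ON t.schema_id = s.schema_id
WHERE t.is_ms_shipped = 0
  AND OBJECTPROPERTY(t.object_id, 'TableHasPrimaryKey') = 0;
GO
```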

Select the ACTIVE.STOCKS table as the source article. If we choose a subset of the table columns, we are performing vertical partitioning.

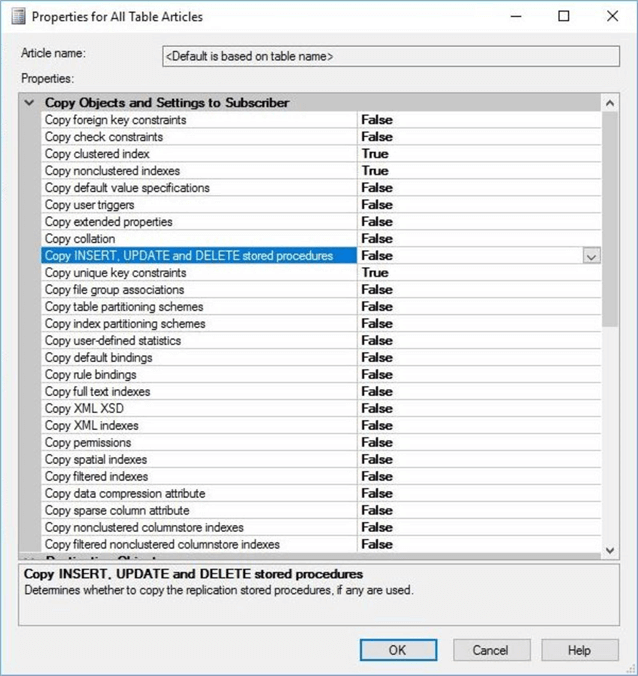

Right click the article to change the table properties. Since our target or subscriber database schema is already created, set the property of each copy option from TRUE to FALSE. The snapshot agent can copy many objects that might be associated with a table; however, we are choosing to not re-invent the wheel each time we synchronize the databases.

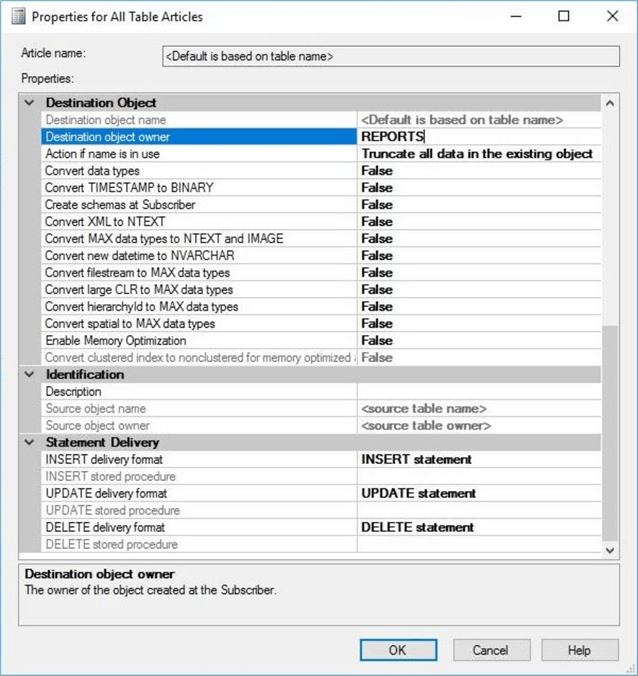

Use the scroll bar on the right side of the dialog box to see other options. We want to change the destination object owner to REPORTS which is the target or subscriber schema name. We also want to change the action to perform if the object exists to truncate table. Last but not least, change the delivery format of statements to dynamic T-SQL.
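In T-SQL terms, these article properties map onto parameters of the sp_addarticle system stored procedure. The call below is a sketch rather than the script that the wizard saves. It assumes the publication already exists under the name chosen at the end of this wizard, and it leaves out the @schema_option bitmask that corresponds to the copy options, so take that value from the generated script.

```sql
-- Run at the publisher in the publication database.
USE [PORTFOLIO];
GO

-- Add ACTIVE.STOCKS as an article that lands in REPORTS.STOCKS.
-- @schema_option is intentionally omitted (see the note above).
EXEC sp_addarticle
    @publication = N'Stocks Transactional Publication',
    @article = N'STOCKS',
    @source_owner = N'ACTIVE',
    @source_object = N'STOCKS',
    @type = N'logbased',
    @destination_owner = N'REPORTS',     -- subscriber schema
    @destination_table = N'STOCKS',
    @pre_creation_cmd = N'truncate',     -- action if the object exists
    @ins_cmd = N'SQL',                   -- deliver INSERTs as T-SQL statements
    @upd_cmd = N'SQL',                   -- deliver UPDATEs as T-SQL statements
    @del_cmd = N'SQL';                   -- deliver DELETEs as T-SQL statements
GO
```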

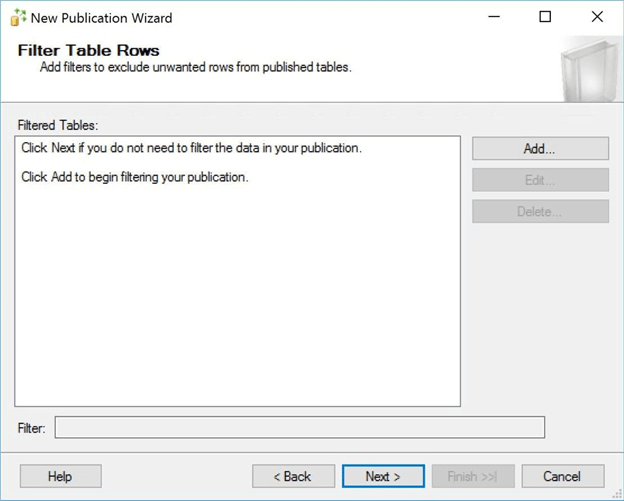

The next screen asks us if we want to filter the table data of the published articles. This action is considered horizontal partitioning.

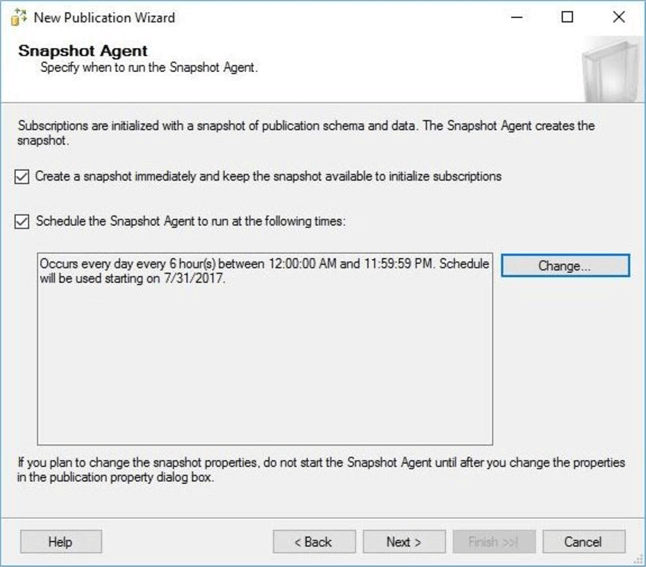

An instance of the snapshot agent is executed by a SQL Server agent job. We need to define an execution schedule for this job. I want to execute the job right now to have an initial publication for any subscribers. Also, I want to generate snapshot files every 6 hours. This schedule could be hourly, daily, weekly or monthly to meet your business needs.

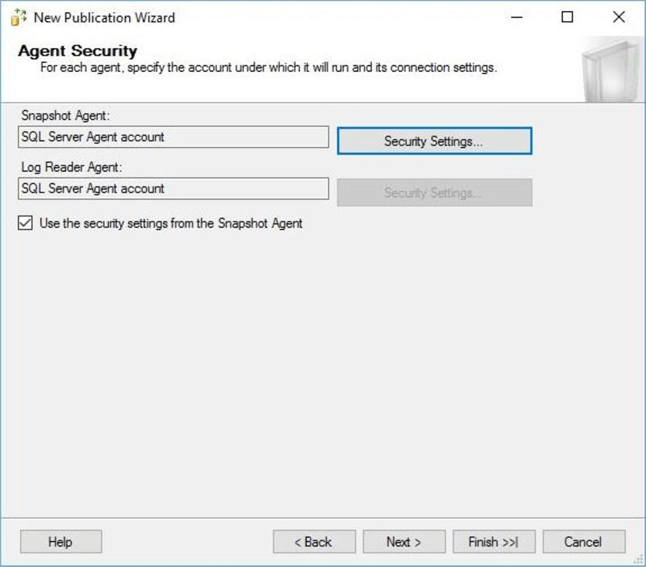

Security for the snapshot and log reader agents needs to be defined. Since this is just a proof of concept, I am going to run the job under the SQL Server Agent service account. Also, I am going to use this account to connect to the publisher database. This is not a recommended security best practice.

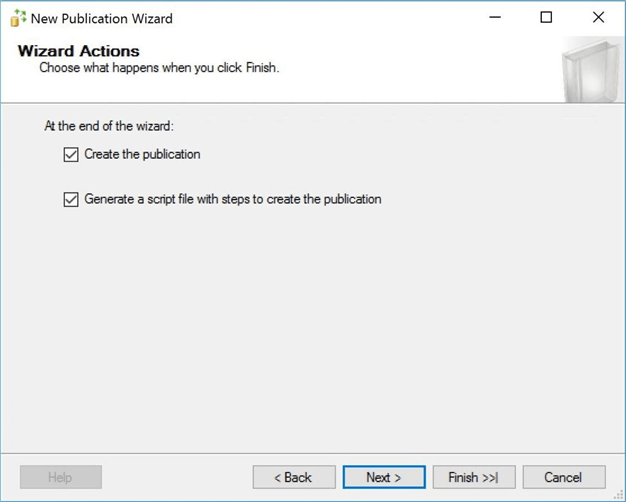

There are two actions that can be performed by the wizard: create the publication or create a publication script. I am going to select both actions. The T-SQL script should be saved in a source control system so that the publication can be rebuilt from scratch if necessary.

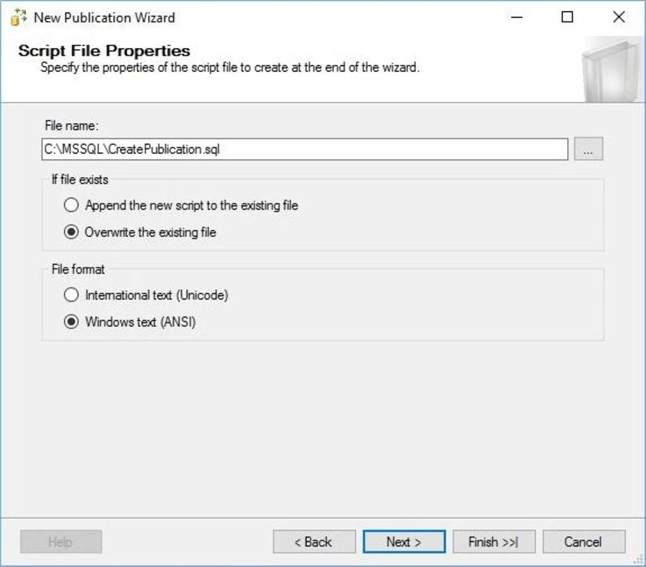

I am going to save the script in the root directory of my user defined storage space on the local hard drive. I want to overwrite any existing files and save it as ANSI text.

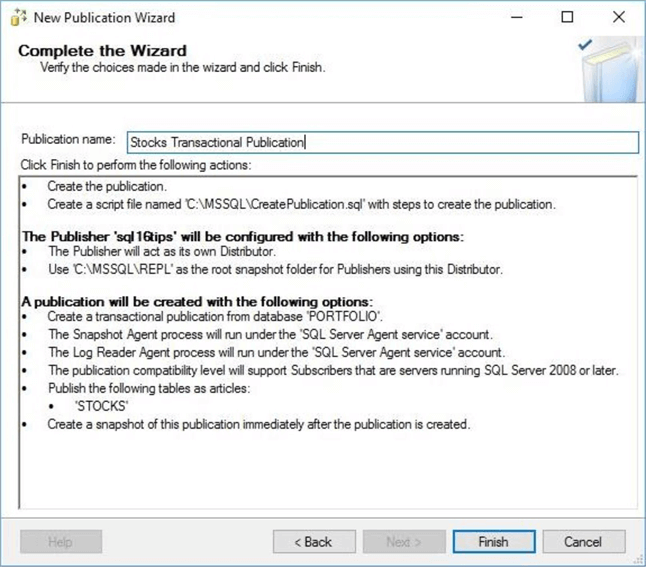

Finally, you are prompted with the laundry list of choices that were selected during each step. You must now name your publication. I chose Stocks Transactional Publication as the name of my publication. Click the Finish button to execute the specified actions.

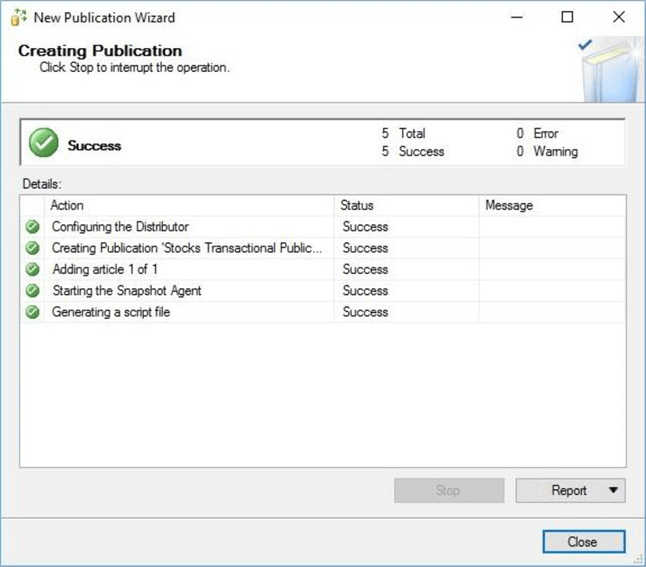

There are five actions that will be completed by the wizard. A progress bar will increment during the execution of the tasks. At the end of the day, you want to have a green success check mark next to each action.

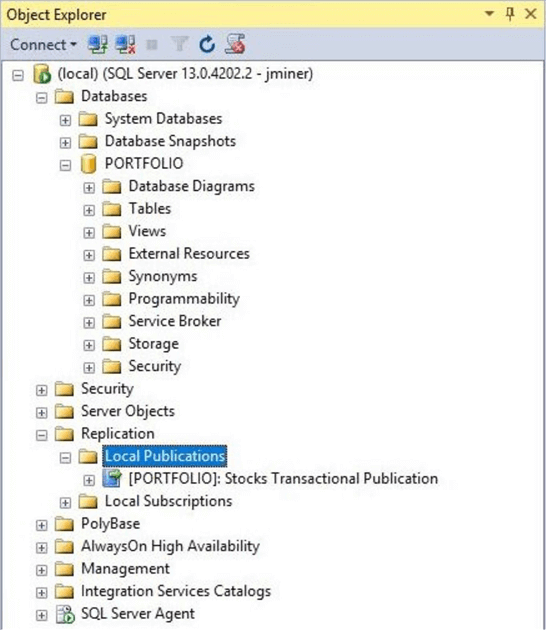

The object explorer in SQL Server Management Studio can be used to look for existing publications. We now see our new publication in the list.

Subscription Wizard

Microsoft has provided a wizard to help you fill in the blanks when defining a new subscription. Navigate to the replication node under the object explorer in SSMS for the local server named sql16tips. Drill into the Local Publications entry. Select the Stocks Transactional Publication as the publication. Right click to bring up the actions menu.

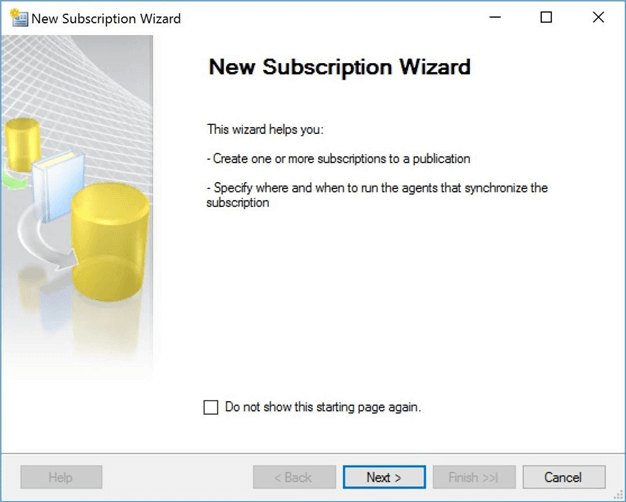

Select the new subscription option to start the wizard. After choosing an option in each dialog box that is presented, click the next button to continue. Eventually, you will end up with a Finish button that will execute all the actions that were selected.

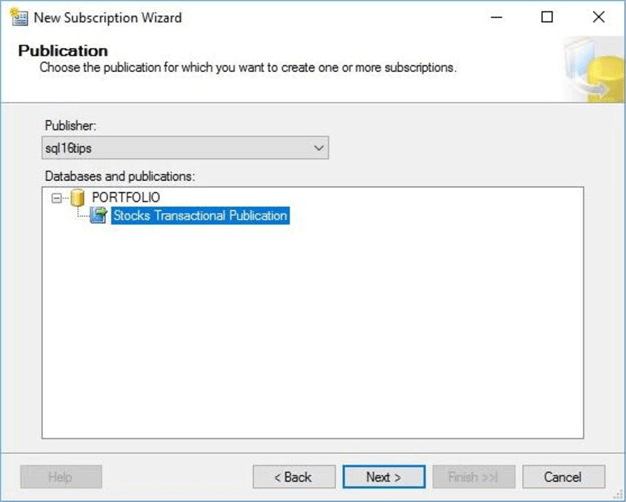

Since we only have one publication, just select the highlighted item and click next.

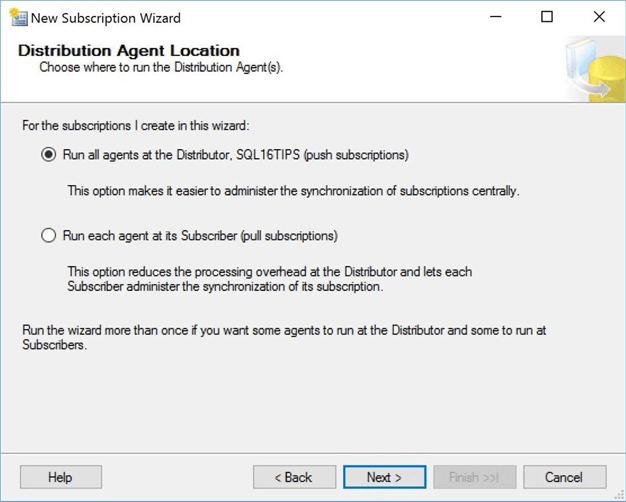

When designing replication between on-premises databases, the distribution database can be located with the publisher, with the subscriber or on another machine. In our case, we are replicating from an on-premises database to an in-cloud database using a push subscription. Choose the first option.

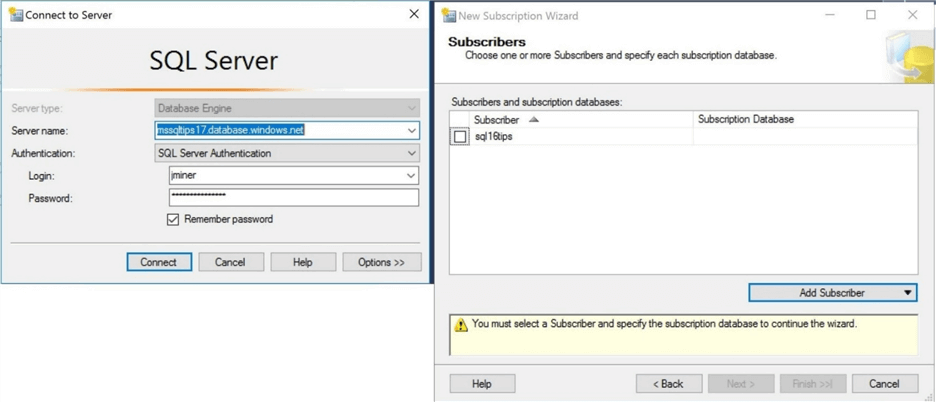

A single transactional publication can have multiple subscribers. Click the add subscriber button to enter the credentials of the Azure SQL server named mssqltips17.

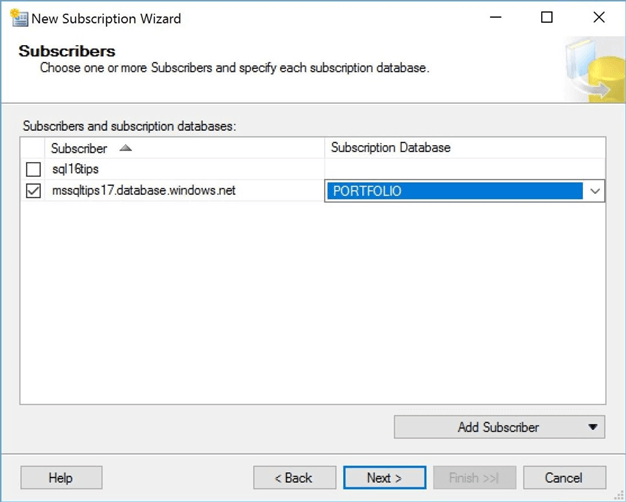

The server we just selected should show up as the only subscription in the list. Since we have only one subscriber, it is time to move onto the next dialog box.

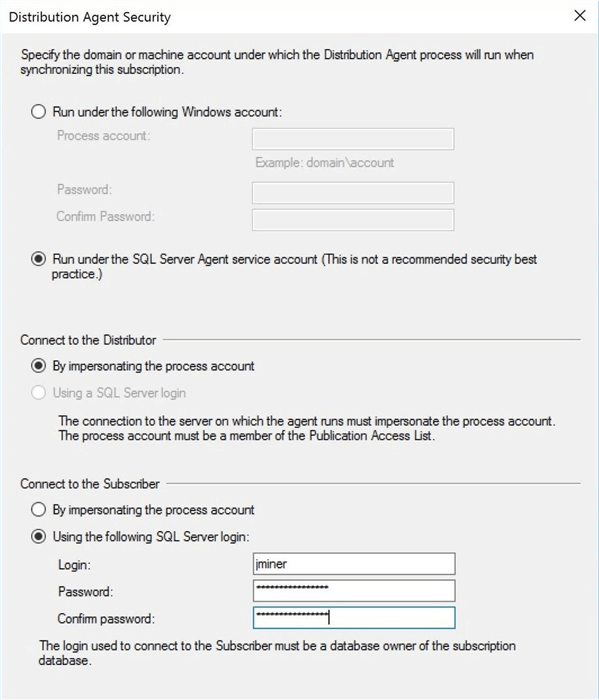

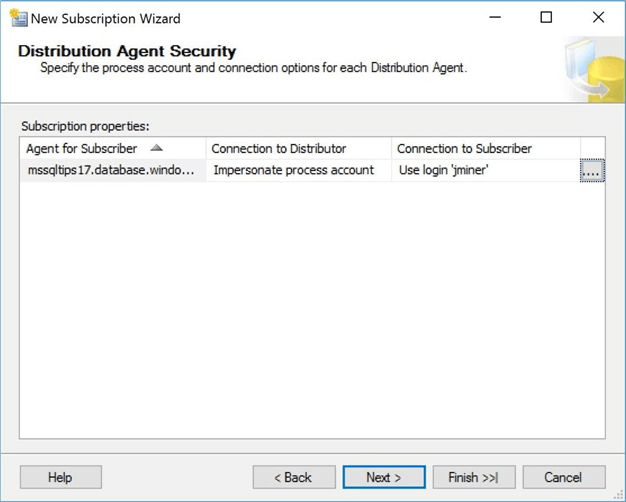

Security is always a consideration when specifying the distribution agent. Since this is just a proof of concept, I am going to run the job under the SQL Server Agent service account. Also, I am going to use this account to connect to the distribution database. Just like the warning says, this is not a recommended security best practice. To wrap up our entries, I am going to use the jminer account to connect to the subscriber and to execute replication (T-SQL) commands. Make sure this account has read and write privileges on the target tables. If you are dropping and creating the database objects from scratch, you need to give this account ownership privileges.
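The privileges mentioned above can be granted with a few statements at the subscriber. This is a sketch that assumes a database user named jminer already exists in the subscriber database. The ALTER permission is included because the article was set to truncate the target table, and TRUNCATE TABLE requires ALTER permission on the table.

```sql
-- Run in the PORTFOLIO database on the Azure SQL server (mssqltips17).

-- Read and write access to every table in the target schema.
GRANT SELECT, INSERT, UPDATE, DELETE ON SCHEMA::[REPORTS] TO [jminer];
GO

-- Needed for the truncate table action chosen for the article.
GRANT ALTER ON SCHEMA::[REPORTS] TO [jminer];
GO

-- Only if replication drops and re-creates the objects from scratch.
ALTER ROLE [db_owner] ADD MEMBER [jminer];
GO
```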

The wizard will confirm the choices that you made for the distribution agent. If you want to modify the choices, just select the button with the ellipses.

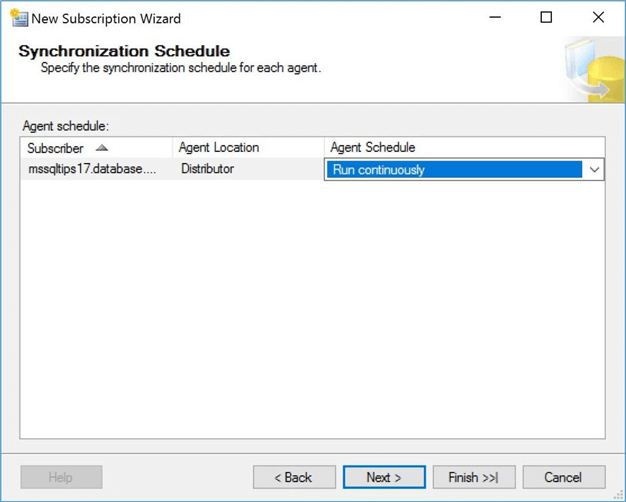

An instance of the distribution agent is executed by a SQL Server agent job. We need to define an execution schedule for this job. I want to continuously run this agent. When a new subscription is published, I want the distribution agent to update the subscriber right away. This includes both the initial snapshot and any transactional commands.

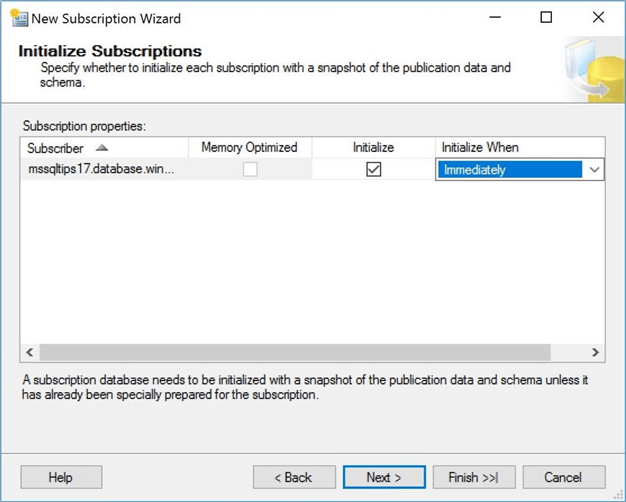

Right now, the subscriber database is empty. Unless we are near one of the 6 hour scheduled intervals, we will have to wait for the synchronization to happen. Select the initialize immediately option to force the distribution agent to run right away. There is a newer option for memory optimized tables at the subscriber. Just ignore it since we are not using this feature.

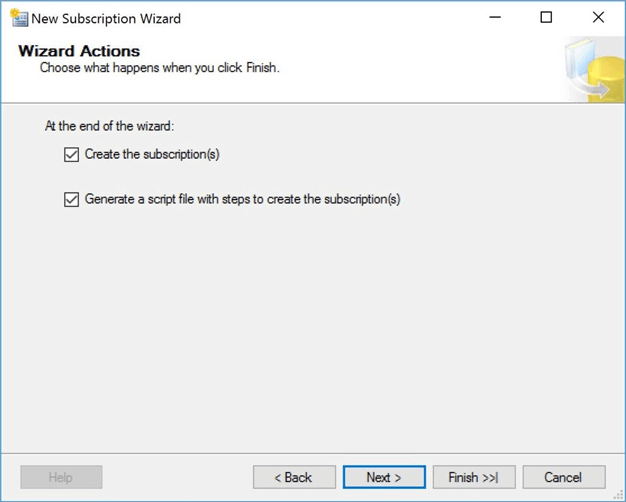

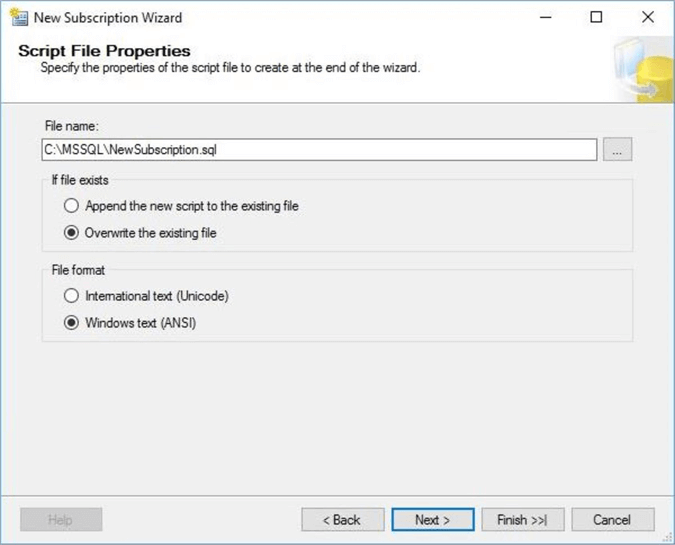

There are two actions that can be performed by the wizard: create the subscription or create a subscription script. I am going to select both actions. The T-SQL script should be saved in a source control system so that the subscription can be rebuilt from scratch if necessary.

I am going to save the script in the root directory of my user defined storage space on the local hard drive. I want to overwrite any existing files and save the content as an ANSI text file.
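The saved script is built around two system stored procedures, sp_addsubscription and sp_addpushsubscription_agent. The calls below are a simplified sketch of that pair, not a copy of the generated file. The fully qualified subscriber name and the password placeholder are assumptions, and many optional parameters are left at their defaults.

```sql
-- Run at the publisher in the publication database.
USE [PORTFOLIO];
GO

-- Register the Azure SQL database as a push subscriber.
EXEC sp_addsubscription
    @publication = N'Stocks Transactional Publication',
    @subscriber = N'mssqltips17.database.windows.net',
    @destination_db = N'PORTFOLIO',
    @subscription_type = N'Push',
    @sync_type = N'automatic',           -- initialize from the snapshot
    @article = N'all',
    @update_mode = N'read only',
    @subscriber_type = 0;                -- SQL Server subscriber
GO

-- Create the distribution agent job for the push subscription.
-- The job runs under the SQL Server Agent service account by default.
EXEC sp_addpushsubscription_agent
    @publication = N'Stocks Transactional Publication',
    @subscriber = N'mssqltips17.database.windows.net',
    @subscriber_db = N'PORTFOLIO',
    @subscriber_security_mode = 0,       -- SQL Server authentication
    @subscriber_login = N'jminer',
    @subscriber_password = N'<your password here>',
    @frequency_type = 64;                -- 64 = autostart
GO
```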

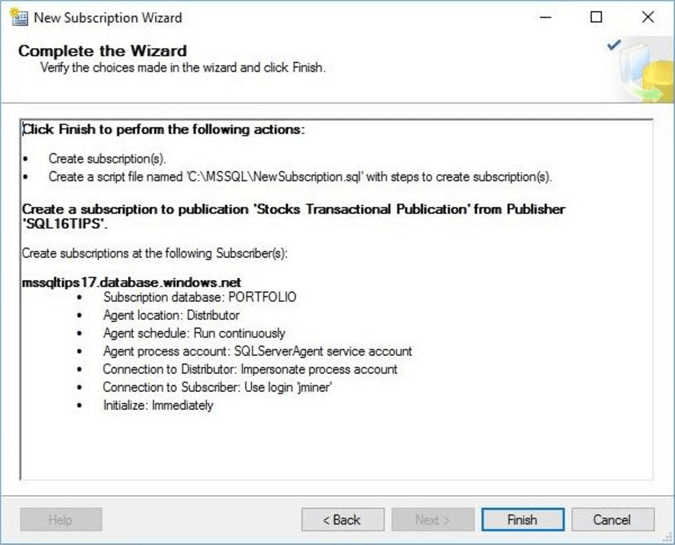

Finally, you are prompted with the laundry list of choices that were selected during each step. Click the finish button to execute the specified actions.

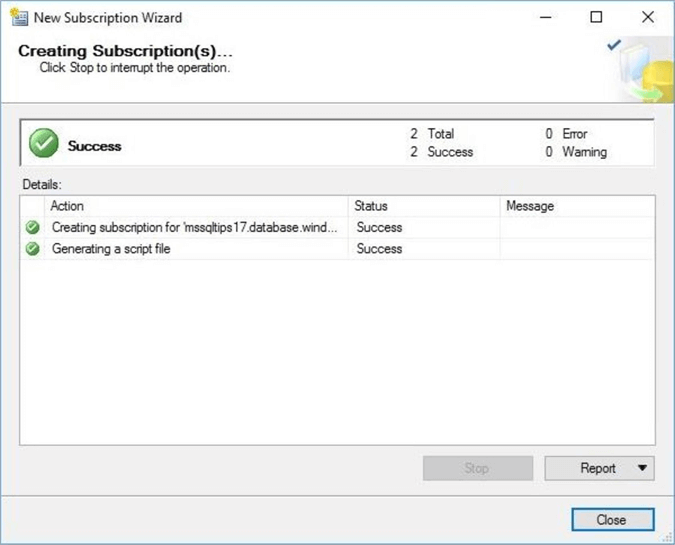

There are two actions that will be completed by the wizard. A progress bar will increment during the execution of the tasks. At the end of the day, you want to have a green success check mark next to each action.

The object explorer in SQL Server Management Studio can be used to look for existing subscriptions associated with our Stocks Transactional Publication. We now see our new subscriber as the Azure SQL Server named mssqltips17 and Azure SQL database named PORTFOLIO.

All this information is very interesting. But we are really interested in whether the actual transactional replication process worked. We can compare the total number of records in the publication versus the number of records in the subscriber. We see that they match exactly and that the process worked exactly as designed.
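One way to make this comparison in a single query window is to reuse the TIPS17 linked server from earlier in this tip. The sketch below returns both counts side by side.

```sql
-- Run at the publisher (sql16tips); TIPS17 is the linked server to Azure.
SELECT
    (SELECT COUNT(*) FROM [PORTFOLIO].[ACTIVE].[STOCKS])
        AS PUBLISHER_RECORD_CNT,
    (SELECT COUNT(*) FROM [TIPS17].[PORTFOLIO].[REPORTS].[STOCKS])
        AS SUBSCRIBER_RECORD_CNT;
GO
```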

Transactional Replication Testing

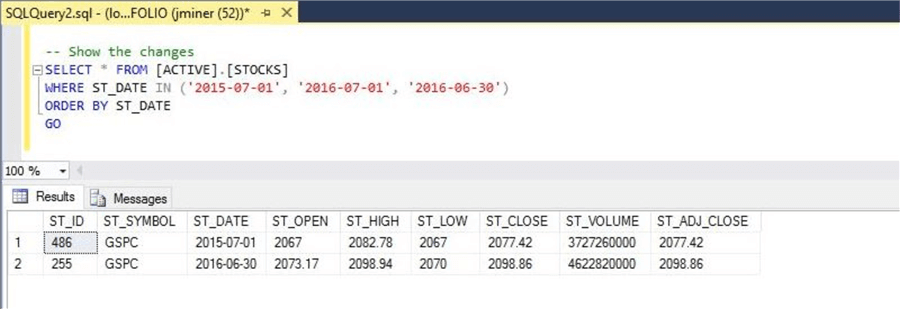

Testing is always important when verifying the expected results of a process. Execute the following T-SQL first on the publication database to show the initial state of the table. Please see the image below.

There are three Data Manipulation Language (DML) statements that can affect our table data: INSERT, UPDATE and DELETE. The enclosed test script deletes the oldest record, updates the newest record, and inserts a record which is newest by date. Execute the first three statements on the publication database. Other SELECT statements in the file are used to look at the status of the databases.

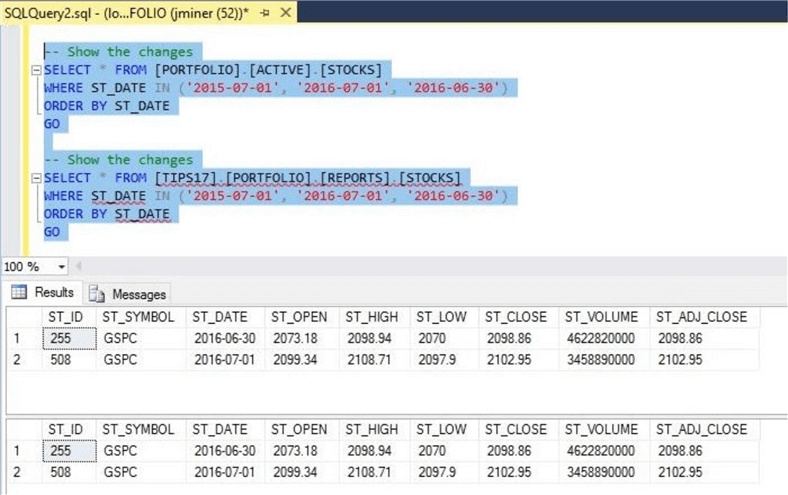

Currently, we have made changes to the publisher. Both the log reader and distribution agent are running continuously. New transactions automatically flow from the publisher to the subscriber. Just wait a few seconds for the transactions to appear. The screen shot below shows the expected outcome of executing the three DML statements.

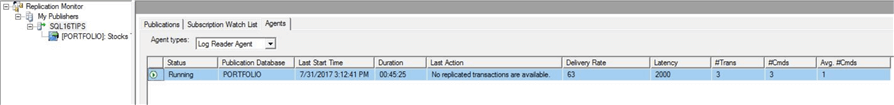

The replication monitor is an important tool for any database administrator who manages replication. Right click on the replication node in the SSMS object explorer to bring up an actions menu. Choose the replication monitor to launch the tool. Find our transactional publication and right click again. Find the publications tab and choose the log reader agent. We can see that three commands were processed by the agent.

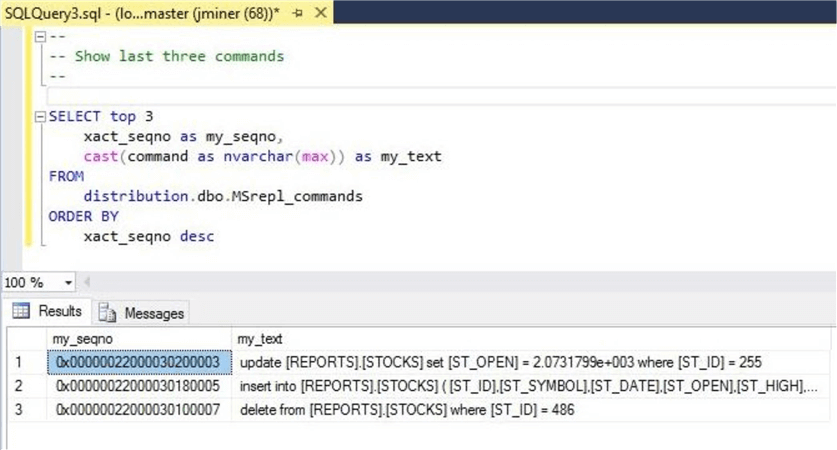

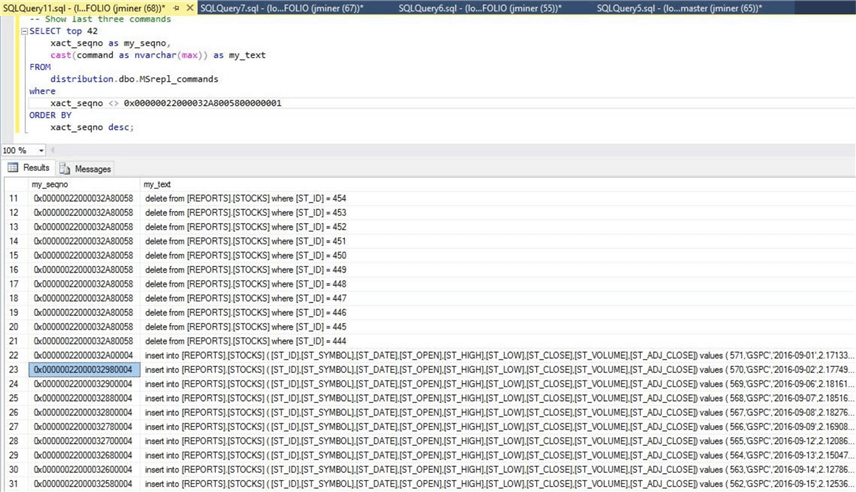

There are many supporting system tables associated with Microsoft Replication Services inside the distribution database. One important table is the MSrepl_commands which keeps track of transactions. This is the store and forward table that I talked about during the architectural review.

I am interested in the transaction sequence number and the actual Transact SQL command. Since the command is stored as a VARBINARY data type, we need to cast it to NVARCHAR to see the data. The above results show me that three separate transactions were executed against the STOCKS table. The Transact SQL statements have been transformed to use the correct target schema, REPORTS.
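A query along these lines can be written as shown below. It is a sketch that assumes the distribution database uses the default name of distribution. The cast can leave a few unreadable header characters at the start of each command, so treat the output as a quick look rather than a clean script.

```sql
-- Run at the distributor (sql16tips in this tip).
USE [distribution];
GO

-- Show queued commands in the order they will be applied.
SELECT
    xact_seqno,
    command_id,
    CAST(command AS NVARCHAR(MAX)) AS command_text
FROM dbo.MSrepl_commands
ORDER BY xact_seqno, command_id;
GO
```

The system stored procedure sp_browsereplcmds, which also lives in the distribution database, returns the same commands in a readable format and is worth a look for larger investigations.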

In a nutshell, transactional replication keeps the publisher and subscriber in synch with minimal time delay. In fact, this might be even considered near real time if your current system uses a daily batch type process.

Archiving Historical Data

The Transact SQL script that built the publisher database contains an ARCHIVE_BY_MONTH stored procedure. One of the business requirements of Big Jon Investments is to only publish a rolling 12 months of data to their cloud database. This means we will call the stored procedure below on the first of the month to move data from ACTIVE to HISTORY.

The following list describes the important concepts implemented by the code snippet below. The list number is shown in the code for clarity.

1. The new DROP PROCEDURE IF EXISTS clause is used to remove the existing stored procedure.

2. Both parameters to the stored procedure use default values. This allows us to detect a call to the procedure with no input.

3. I am using a verbose parameter. This does add overhead to the code, but it allows us to leave optional informational messages in the code.

4. The insert, update and delete statements have a little-known clause named OUTPUT. This allows us to delete records from one table and insert them into another within the same statement, and therefore within the same transaction.

/*

Archive historical data

*/

-- 1 - Delete the existing stored procedure.

DROP PROCEDURE IF EXISTS [ACTIVE].[ARCHIVE_BY_MONTH]

GO

-- Create the new stored procedure.

CREATE PROCEDURE [ACTIVE].[ARCHIVE_BY_MONTH]

@VAR_STOCK_MONTH DATE = '01/01/1900',

@VAR_VERBOSE_IND TINYINT = 1

AS

BEGIN

-- Declare variables

DECLARE @VAR_START DATE;

DECLARE @VAR_END DATE;

DECLARE @VAR_RECS INT;

-- 2 – No input detected.

IF (@VAR_STOCK_MONTH = '01/01/1900')

BEGIN

PRINT '[ARCHIVE_BY_MONTH] - please select a valid month to archive active table data.'

PRINT ' '

RETURN

END

-- Do not display counts

SET NOCOUNT ON

-- Set variables

SET @VAR_START = @VAR_STOCK_MONTH;

SET @VAR_END = DATEADD(M, 1, @VAR_STOCK_MONTH);

-- 4 - Move data from active 2 history

DELETE FROM [ACTIVE].[STOCKS]

OUTPUT DELETED.*

INTO [HISTORY].[STOCKS]

WHERE ([ST_DATE] >= @VAR_START) AND ([ST_DATE] < @VAR_END);

-- Grab # of records moved

SET @VAR_RECS = @@ROWCOUNT;

-- 3 - Show info if requested

IF (@VAR_VERBOSE_IND = 1)

BEGIN

PRINT '[ARCHIVE_BY_MONTH] - ' + FORMAT(@VAR_RECS, 'G') + ' records were moved from active to history table for given month ' + FORMAT(@VAR_START, 'd', 'en-us') + '.'

PRINT ' '

END

END

GO

I purposely did not add error checking to the stored procedure. I want to have the errors flow up to the SQL Agent job.

/*

Archive job – Step 1

*/

-- Get control card

DECLARE @VAR_CARD DATE;

SELECT @VAR_CARD = [MY_DATE] FROM [ACTIVE].[CONTROL_CARD];

-- Move the data

EXEC [ACTIVE].[ARCHIVE_BY_MONTH] @VAR_STOCK_MONTH = @VAR_CARD, @VAR_VERBOSE_IND = 1;

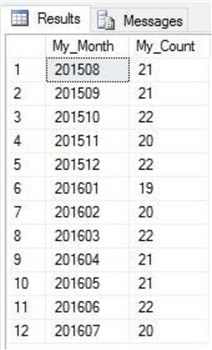

I broke the job into two steps for easy maintenance. The CONTROL_CARD table keeps track of the earliest month (date) in the ACTIVE.STOCKS table. Just restart the job at the step that failed to get back to a consistent state. When developing an ETL process, it is always important to think about rollback and/or restarting the job. I leave the task of creating the archival job on the publisher for you to complete. For more information on SQL Server Agent Jobs, please see this MSDN article.

/*

Archive job – Step 2

*/

-- Get control card

DECLARE @VAR_CARD DATE;

SELECT @VAR_CARD = [MY_DATE] FROM [ACTIVE].[CONTROL_CARD];

-- Update the card

UPDATE [ACTIVE].[CONTROL_CARD] SET [MY_DATE] = DATEADD(M, 1, @VAR_CARD);

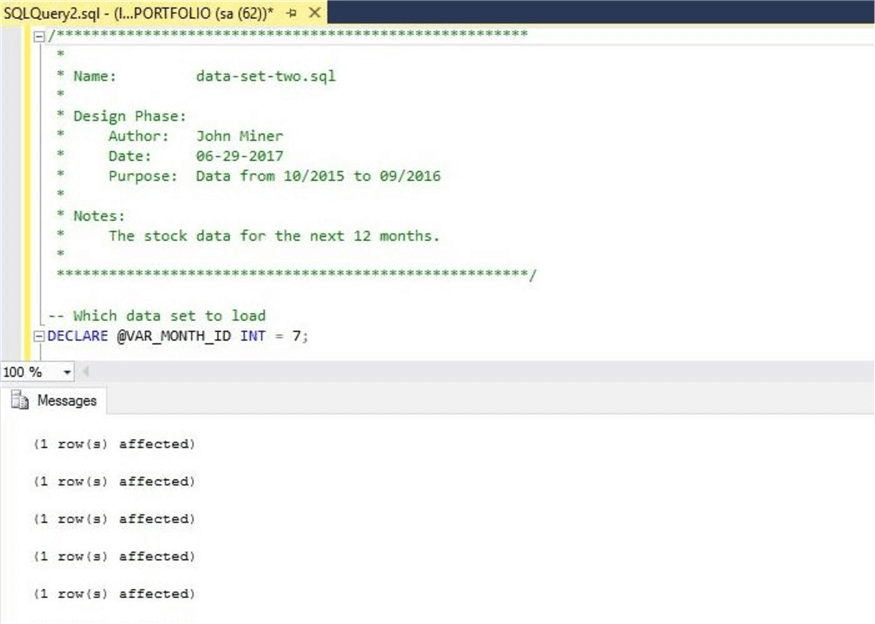
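If you would rather script the archival job than click through the SSMS dialogs, the SQL Server Agent procedures in msdb can be used. The following is one possible sketch. The job name, step names, schedule name and 1:00 AM start time are my own choices, and the step commands are the two snippets shown above.

```sql
-- Run at the publisher (sql16tips).
USE [msdb];
GO

EXEC dbo.sp_add_job
    @job_name = N'Archive Stocks By Month';
GO

-- Step 1: move the oldest month from ACTIVE to HISTORY.
EXEC dbo.sp_add_jobstep
    @job_name = N'Archive Stocks By Month',
    @step_name = N'Step 1 - move data',
    @subsystem = N'TSQL',
    @database_name = N'PORTFOLIO',
    @command = N'DECLARE @VAR_CARD DATE;
SELECT @VAR_CARD = [MY_DATE] FROM [ACTIVE].[CONTROL_CARD];
EXEC [ACTIVE].[ARCHIVE_BY_MONTH] @VAR_STOCK_MONTH = @VAR_CARD, @VAR_VERBOSE_IND = 1;',
    @on_success_action = 3;       -- 3 = go to the next step
GO

-- Step 2: advance the control card by one month.
EXEC dbo.sp_add_jobstep
    @job_name = N'Archive Stocks By Month',
    @step_name = N'Step 2 - update control card',
    @subsystem = N'TSQL',
    @database_name = N'PORTFOLIO',
    @command = N'DECLARE @VAR_CARD DATE;
SELECT @VAR_CARD = [MY_DATE] FROM [ACTIVE].[CONTROL_CARD];
UPDATE [ACTIVE].[CONTROL_CARD] SET [MY_DATE] = DATEADD(M, 1, @VAR_CARD);';
GO

-- Run on day 1 of every month at 01:00:00.
EXEC dbo.sp_add_jobschedule
    @job_name = N'Archive Stocks By Month',
    @name = N'First of the month',
    @freq_type = 16,              -- 16 = monthly
    @freq_interval = 1,           -- day of the month
    @freq_recurrence_factor = 1,  -- every month
    @active_start_time = 10000;   -- HHMMSS
GO

-- Target the local server so the job can run.
EXEC dbo.sp_add_jobserver
    @job_name = N'Archive Stocks By Month';
GO
```

Because the stored procedure has no error handling, a failure in step 1 stops the job before the control card is advanced, which matches the restart approach described above.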

We need to add data to the publication STOCKS table to test our archival process. I am enclosing the data-set-two script for adding data by month. Just change the VAR_MONTH_ID variable to load the next month’s worth of data.

After adding data for the month of June in the year of 2016, we can execute a simple group by query to get record counts by month for the STOCKS table. We see there are 13 months of data.

Execute the archival job to move the data from the ACTIVE to HISTORY schema. Now we see that the last month has rolled off to our archive table.

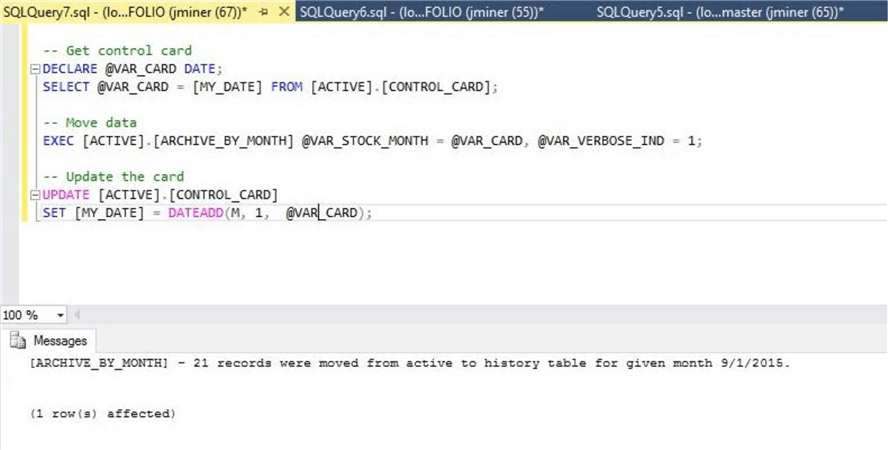

Let’s examine what would happen if we ran the job on October 1st, 2016. If you have been trying the example code, you will need to execute both the data-set-two script and archival job several more times. To make things simple, I converted the job back to a TSQL script. We can see that 21 records were moved to the HISTORY table.

If we look at record counts on the subscriber, we have data from October 2015 to September 2016. These are the exact results we are looking for.

One might ask: what transactions were sent to the subscriber during the archival process executed on October 1st, 2016?

We can retrieve that information by querying the MSrepl_commands table. If we start digging through the table by xact_seqno number, we can see that 42 changes were made. It is interesting to note that all the DELETE commands have the same transaction number. That is a result of the DELETE statement with the OUTPUT clause executing as a single transaction. We can see that each insert statement is a single transaction. You can add BEGIN TRANSACTION and COMMIT TRANSACTION statements to the data-set-two script to force all INSERTS to happen within the same transaction if you want.
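A quick way to see this grouping is to count the commands per transaction sequence number. The sketch below assumes the default distribution database name and that the commands have not yet been purged by the distribution clean up job.

```sql
-- Run at the distributor (sql16tips in this tip).
USE [distribution];
GO

-- Number of replicated commands in each transaction.
SELECT xact_seqno, COUNT(*) AS command_cnt
FROM dbo.MSrepl_commands
GROUP BY xact_seqno
ORDER BY xact_seqno;
GO
```

A transaction that carries many commands corresponds to the single DELETE statement issued by the archive procedure, while each single-row INSERT shows up as its own transaction with one command.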

In a nutshell, all business requirements have been satisfied. We just need to summarize what we learned for our boss.

Summary

Microsoft replication services allows the database administrator to create a transactional process which synchronizes two databases in near real time. The cool thing about this service is that not a single line of code needs to be written. It is important to script out and save the choices that you make in a source control system. This allows you to rebuild the process if needed in a timely manner.

Instead of writing code, you will need to use the publication and subscription wizards to configure the snapshot, log reader and distribution agents. Since Azure SQL Database does not have a SQL Server Agent, all subscriptions must be defined as push subscriptions.

Like anything in life, there are PROS and CONS associated with using transactional replication with an Azure SQL database.

First, you need to consider the latency of the connectivity between the on-premises and in-cloud databases. If you are not using ExpressRoute or a VPN and the size of the database is extremely large, it might take a while to (re)initialize a subscriber.

Second, transactional replication always starts with synchronizing the publisher and subscriber with some form of a snapshot. Snapshot replication in its simplest form brings over all table data. I am assuming you did not choose either horizontal or vertical partitioning during the configuration. With that said, a large static table will transfer all table records.

Third, there is always a tiny time delay between the publisher and subscriber tables (articles). Any new transactions must commit to the database log file. The log reader picks up and converts the transactions to T-SQL statements which are stored in the distribution database. Last, the distribution agent executes these actions against the subscriber.

In short, the ease with which a transactional replication process can be created is why I would use this process to off load some work, such as reporting, to the Azure cloud.

If you want to read more about this topic, please check out this MSDN article.

Next Steps

- Investigate how horizontal partitioning can be used to send over a subset of the table records.

- Investigate how vertical partitioning can be used to send over a subset of the table columns.

About the author

John Miner is a Data Architect at Insight Digital Innovation helping corporations solve their business needs with various data platform solutions.

This author pledges the content of this article is based on professional experience and not AI generated.
