By: Ameena Lalani | Comments (1) | Related: 1 | 2 | 3 | 4 | > Temporal Tables

Problem

In previous tips we look at some of the benefits of using Temporal Tables. In this tip we look at some of the benefits of using Temporal Tables with memory optimized tables

Solution

In Part 1, Part 2 and Part 3 of this tip series, several benefits of temporal tables were discussed. This is the last tip of this series. In high online transaction processing systems (OLTP), keeping historical data becomes challenging due to the nature of time it takes to write to the disk. But combined with other technologies such as in-memory OLTP, Temporal tables solve this particular problem. Let’s learn about some of the key benefits of using temporal tables with memory optimized tables.

SQL Server Temporal Tables with Memory-Optimized Tables

Before we talk about how temporal tables and memory-optimized tables work together, let's get an understanding of Memory-Optimized tables.

SQL Server Memory Optimized Tables

The in-memory OLTP feature was introduced with SQL Server 2014 and it has 2 parts; memory-optimized tables and natively complied stored procedures. The main benefit of memory-optimized tables are that rows in the table are read from and written to memory which results in non-blocking transactions at super-fast speed. The second copy of the data is stored on the disk and during database recovery, data is read from the disk-based table. Memory-optimized tables are for specific types of workloads such as high volume OLTP applications. Discussing Memory Optimized Tables (MOT) is beyond the scope of this tip and you can learn more this feature in the Next Steps section. But we will discuss some important points about MOT in our examples.

Example with SQL Server Temporal Tables and Memory-Optimized Tables

We will create a test database with one additional filegroup marked for memory optimized objects and will add a data file to it. Then we have to turn the Memory optimization configuration at the database level. Finally, when we create a memory optimized table, we have to define Memory_Optimized = ON. Here is the code we are going to run to setup our example.

USE master GO CREATE DATABASE [TestTemporal] ON PRIMARY ( NAME = N'TestTemporal', FILENAME = N'C:\MSSQL\Data\TestTemporal.mdf' ) LOG ON ( NAME = N'TestTemporal_log', FILENAME = N'C:\MSSQL\Log\TestTemporal_log.ldf' ) GO -- Add memory optimized filegroup and a file ALTER DATABASE [TestTemporal] ADD FILEGROUP [Optimized_FG] CONTAINS MEMORY_OPTIMIZED_DATA GO ALTER DATABASE TestTemporal ADD FILE ( NAME = N'Optimized_Data', FILENAME = N'C:\MSSQL\Data\Optimized_Data.ndf') TO FILEGROUP [Optimized_FG] GO -- Turn memory optimization feature on at the database level ALTER DATABASE TestTemporal SET MEMORY_OPTIMIZED_ELEVATE_TO_SNAPSHOT = ON GO -- Create a Customer temporal table without Memory optimization and insert 3 rows USE TestTemporal GO CREATE TABLE Customer ( CustomerId INT IDENTITY(1,1) PRIMARY KEY ,FirstName VARCHAR(30) NOT NULL ,LastName VARCHAR(30) NOT NULL ,Amount_purchased DECIMAL NOT NULL ,StartDate datetime2 generated always as row START NOT NULL ,EndDate datetime2 generated always as row END NOT NULL ,PERIOD FOR SYSTEM_TIME (StartDate, EndDate) ) WITH(SYSTEM_VERSIONING = ON (HISTORY_TABLE = dbo.CustomerHistory)) GO INSERT INTO dbo.Customer( FirstName, LastName, Amount_Purchased) VALUES ( 'Frank', 'Sinatra',20000.00),( 'Shawn', 'McGuire',30000.00),( 'Amy', 'Carlson',40000.00) GO -- Now create a memory optimized temporal table Customer2 and insert the same 3 rows CREATE TABLE Customer2 ( CustomerId INT IDENTITY(1,1) ,FirstName VARCHAR(30) NOT NULL ,LastName VARCHAR(30) NOT NULL ,Amount_purchased DECIMAL NOT NULL ,StartDate datetime2 generated always as row START NOT NULL ,EndDate datetime2 generated always as row END NOT NULL ,PERIOD FOR SYSTEM_TIME (StartDate, EndDate), CONSTRAINT [PK_CustomerID] PRIMARY KEY NONCLUSTERED HASH (CustomerId) WITH (BUCKET_COUNT = 131072) ) WITH(MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_AND_DATA, SYSTEM_VERSIONING = ON (HISTORY_TABLE = dbo.Customer2History)) GO INSERT INTO dbo.Customer2 ( FirstName, LastName, Amount_Purchased) VALUES ( 'Frank', 'Sinatra',20000.00),( 'Shawn', 'McGuire',30000.00),( 'Amy', 'Carlson',40000.00) GO -- Let's select data from both table with Actual Execution plan mode on. SELECT * FROM dbo.Customer GO SELECT * FROM dbo.Customer2 GO

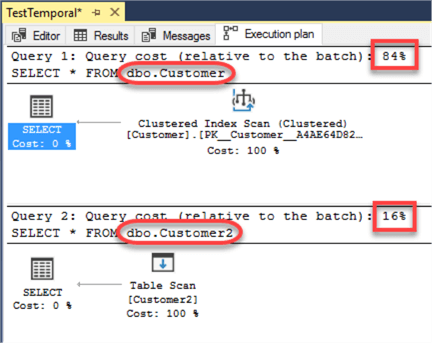

Execution plans of both select statements are shown below. Here we are querying and comparing the generated plans of the two temporal tables. It shows the performance difference between SELECT statements of the normal Temporal table “Customer” and a Memory optimized Temporal table “Customer2” very clearly. Obviously, any operation done in memory is going to be much faster than retrieving from disk.

Now let’s make some updates to these 2 temporal tables so we can check out history tables.

-- Make some updates to both temporal tables and then check out both history tables with Actual Execution Plan mode on UPDATE dbo.Customer SET Amount_purchased = '50000.00' WHERE FirstName ='Amy' AND LastName = 'Carlson' UPDATE dbo.Customer2 SET Amount_purchased = '50000.00' WHERE FirstName ='Amy' AND LastName = 'Carlson' GO SELECT * FROM dbo.CustomerHistory GO SELECT * FROM dbo.Customer2History GO

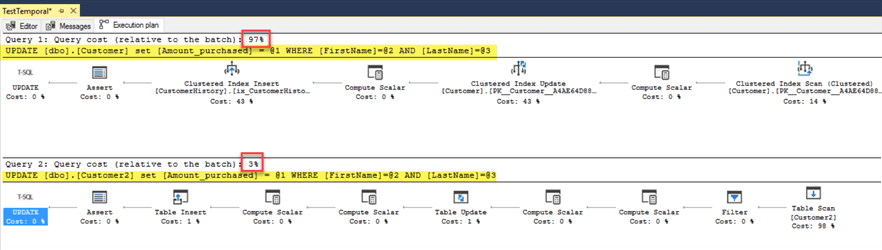

Again, execution plans of Update operations on these 2 Temporal tables shows that performance is blazing fast on MOT.

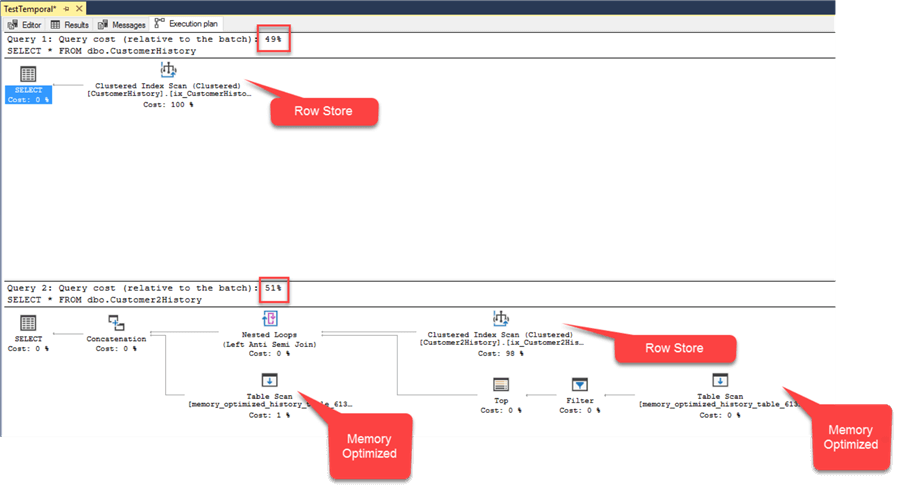

But a query to SELECT data from these 2 history tables: “CustomerHistory” and “Customer2History” does not show any performance difference between the 2 tables. The reason is discussed below.

For each Memory optimized temporal table, two history tables get created, one in memory (called staging history table that contains the most recent changes) and other is the disk based (row store) history table with the same history table name that was provided at the time of the temporal table creation. The querying of historical data returns the union of in memory staging table and the disk based (row store) table without duplicates. Data from the internal staging memory table is regularly moved to the disk-based history table by an asynchronous data flush task. According to BOL, the data flush task is activated regularly with a schedule that varies based on the existing workload. With a heavy workload, as frequent as every 5 seconds, and with a light workload, as infrequent as every 1 minute. One thread is spawned for each internal memory-optimized staging table that needs cleanup.

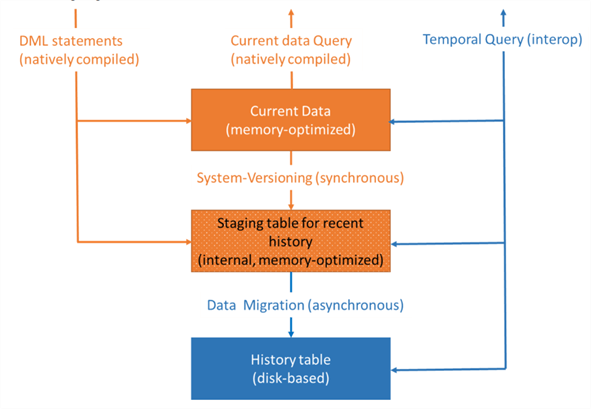

The following figure is from a Microsoft article. This describes the arguments we made in the above paragraph regarding the operation of temporal tables in the in-memory OLTP setup.

The orange part represents the in-memory OLTP functionality and blue portion describes the Temporal table functionality.

A normal temporal table work like this:

- Data updates > Temporal Table > Old Data > History Table

A memory optimized temporal table works like this:

- Data updates > Memory optimized Temporal Table > Old Data > In-memory staging History Table > Older data after flush > Disk Based History Table

Summary

In this tip, we covered how Memory Optimized Table and Temporal Table features can work together to solve the problem of high OLTP applications to be able to store historical audit data efficiently. This is a complex undertaking and although the example discussed in this tip was simplistic, it does convey the potential of the benefits of OLTP applications with temporal tables.

Next Steps

- Read this Microsoft documentation to learn more about Temporal Tables.

- Check out other tips in this series here.

- Download this free book on In-Memory OLTP to learn everything about this feature.

- Check out all of the SQL Server In-Memory OLTP tips.

About the author

Ameena Lalani is a MCSA on SQL Server 2016. She is a SQL Server veteran and started her journey with SQL Server 2000. She has implemented numerous High Availability and Disaster Recovery solutions.

Ameena Lalani is a MCSA on SQL Server 2016. She is a SQL Server veteran and started her journey with SQL Server 2000. She has implemented numerous High Availability and Disaster Recovery solutions.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips