By: Gauri Mahajan | Comments (2) | Related: > SQL Server 2017

Problem

Data exploration and aggregation are two significant aspects of data analysis while processing with transactional data stored in SQL Server with R for statistical inferences. For data exploration using data science languages like R, data often needs to be filtered, re-ordered, transformed, aggregated and visualized. There are many possibilities for achieving these functionalities. This often involves using a number of libraries, especially for data manipulation, which also requires developers to learn all those libraries. For beginners, it would make a data science developer's job easier if there is a versatile package that can act like a Swiss knife and can perform multiple data transformation functionalities in the same library. In this two-part tip, we will learn in detail about one such package.

Solution

dplyr is a package in R that provides a flexible option for data manipulation, which are particularly useful in data science projects. It contains a set of verbs that are helpful in data wrangling, cleaning, visualization and analysis. R programming language in dplyr package can be leveraged to accomplish such tasks. When working with massive datasets, it is not a good practice to store them in R, the best bet is to collect the data in SQL Server and use an R library like dplyr to process the data.

Outline

The intent of this two-part tip is to get you started with basic data exploration, manipulation and simple visualizations using dplyr R package in SQL Server. We are going to cover a range of data munging capabilities of dplyr package using SQL Server as well as R.

- Part 1 of this series will focus on the initial set-up, configuration of R in SQL Server, setting up data and basic functions for selecting, filtering and re-ordering data supported by the dplyr package.

- Part 2 we will learn about some more advanced functions supported by dplyr package related to data aggregation, function chaining and basic data exploration graphs for data analysis.

Prerequisites

The first step is to ensure that your system has all the required software components like SQL Server 2017, SSMS, SQL Server R services (in-database). You can learn more about the installation and setup from this SQL Server R Tutorial.

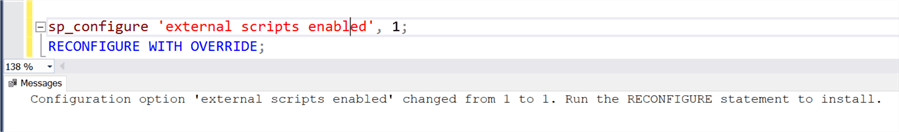

After installing the above mentioned services, we need to install the 'dplyr' package in R using the command install.packages("dplyr") in the R console. In case, you are unfamiliar with the process of installing R packages, I would recommend to have a look at this URL to learn how to install R packages on an R server. Once the dplyr package is successfully installed, we will make use of this R package for data munging and wrangling in SSMS. However, before we begin, we will have to enable the option 'external scripts enabled' to execute external scripts with a remote language extension (R in this case), to do so, open SSMS and execute the below code.

sp_configure 'external scripts enabled', 1;

RECONFIGURE WITH OVERRIDE;

After executing the above code, restart SQL Server to make this change effective. Also, make sure the 'SQL Server Launchpad (MSSQLSERVER)' service is in the running mode in SQL Server Configuration Manager. This is basically a new service released by Microsoft (for SQL Server 2016 and higher) to start Advanced Analytics Processes necessary for integration of T-SQL and Microsoft R Open.

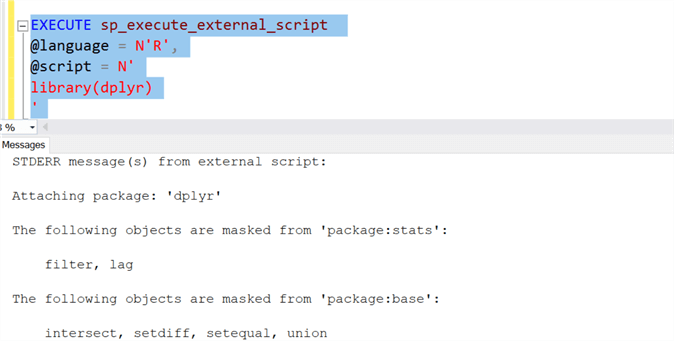

Open a new query window and execute the below code. If you notice, it refers to the newly installed dplyr package. Successful execution of this code would ensure that the dplyr package is installed successfully and SQL Server environment is able to consume the package.

EXECUTE sp_execute_external_script

@language = N'R',

@script = N'

library(dplyr)

'

Let's go through each step executed in the code above:

- The Stored Procedure sp_execute_external_script is used to execute external scripts with R as an input parameter for the language and R code to be executed as an input parameter for the script.

- The library function references dplyr package to access functionality of the package.

- Attaching package: 'dplyr' in the result window confirms that the installation of this package has been done successfully and is accessible in SQL Server.

Setting up data to work with dplyr package

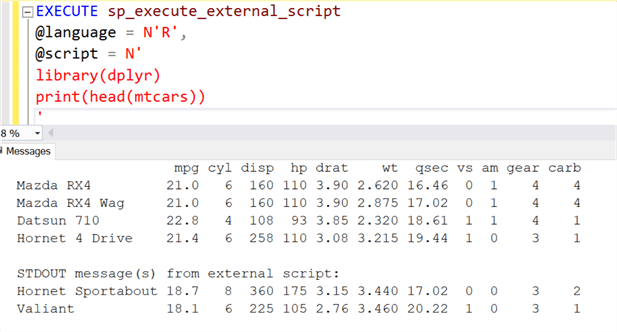

We will need some data to evaluate how the dplyr package actually works. We will employ multiple datasets here to cover different functions of this package. mtcars is one of the widely used datasets in R, and comprises of several attributes like miles/gallon (mpg), hp (horsepower), weight, etc. related to 32 cars. We will also use other similar external datasets in R (like flights, airports, airlines, weather etc.) present in the package nycflights13. This package provides all the relevant information for all the flights that departed NYC in 2013. Install the nycflights13 package and refer to appropriate datasets in order to access data from this package.

Execute the below code in a new query window to show the mtcars data. We are using the head() function to return the first six rows of the mtcars dataset and the print() function to display the result.

EXECUTE sp_execute_external_script

@language = N'R',

@script = N'

library(dplyr)

print(head(mtcars))

'

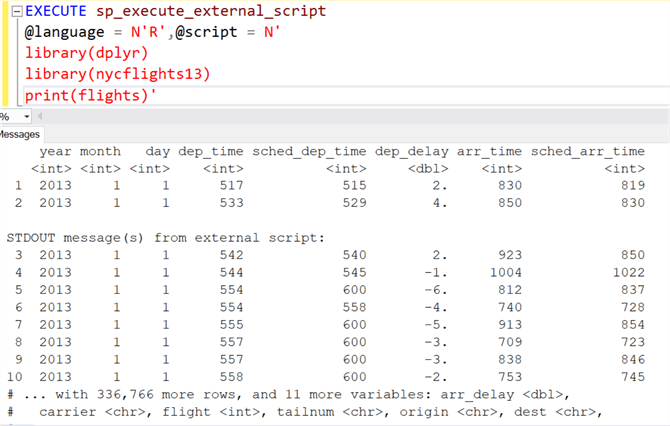

We will now execute the code below that loads the nycflights13 package and displays flight data using the print() function. This flights dataset contains approximately 340,000 records and 19 variables. One important thing to note here in the result window below is the flights dataset in this package is a tibble (or tbl_df) which is nothing more than a modern reshape of the data frame and is considered useful when there is a large dataset as it returns the first few rows only with rows count and variables names. Also, the data type of each column is enclosed in the angle brackets right below the column name as shown below.

EXECUTE sp_execute_external_script

@language = N'R',@script = N'

library(dplyr)

library(nycflights13)

print(flights)'

Now that we are ready with the initial setup and the required datasets, let's dive into the workings of the dplyr package.

Functions in dplyr package

This package provides a separate function for each basic data manipulation and transformation operation in T-SQL. We will start with the basic functions (like select(), filter(),arrange(), etc.) and move up to a few advanced functions (like mutate(), group_by(), summarise(), pipe operator, etc.) in the next part of the tip.

Please note – We will be returning a few rows in the result by using either head() or tail() functions wherever necessary for the sake of readability.

Selecting columns with select() using dplyr - SELECT equivalent in T-SQL

We often work with huge datasets with numerous columns, but in actuality only a few are of interest to us. In such scenarios, the select() function comes in handy and is used to select a set of column(s) from the data frame. Let's jump to SSMS and execute the below code to see how select() works.

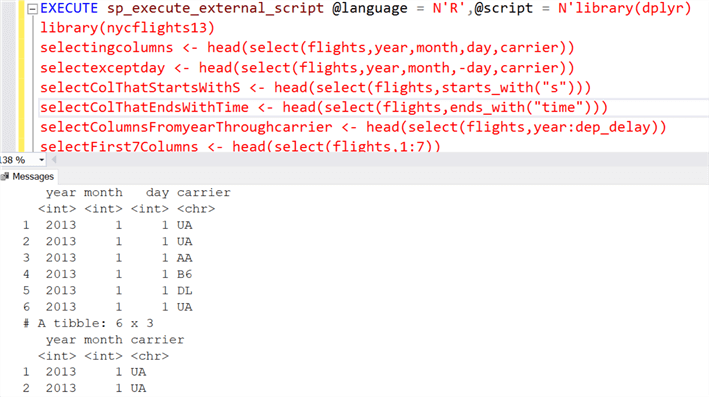

EXECUTE sp_execute_external_script@language = N'R',@script = N'library(dplyr)

library(nycflights13)

selectingcolumns <- head(select(flights,year,month,day,carrier))

selectexceptday <- head(select(flights,year,month,-day,carrier))

selectColThatStartsWithS <- head(select(flights,starts_with("s")))

selectColThatEndsWithTime <- head(select(flights,ends_with("time")))

selectColumnsFromyearThroughcarrier <- head(select(flights,year:dep_delay))

selectFirst7Columns <- head(select(flights,1:7))

selectColumnsThatContainsdelay <- head(select(flights,contains("delay")))

print(selectingcolumns)

print(selectexceptday)'

Let's understand the code we executed above. The select() function offers many ways of column selection from the dataset. For instance, to select a set of columns except specific column(s), we can use "-" the subtraction operator to show columns except the ones listed with "-" .This is called negative indexing. Also, we can make use of ":" the colon operator to select a range of columns both by name (e.g. year:dep_delay) and numeric (like 1:7, this is called sequential numeric indexing).

Additionally, there are a couple of helper functions that can be used within select(), like starts_with(), ends_with() ,contains(), etc. to select columns based on specific criteria. These functions help to quickly return the set of the columns that meets some particular conditions.

The first argument in the select() function is the dataset itself (flights in our case) and the succeeding arguments are either the column names or the helper functions explained above. For the sake of brevity, we will be showing results of the first two commands below. You can also try to display results for other statements in the code by using print() for a better understanding.

Updating column name(s) with rename() using dplyr - ALTER equivalent in T-SQL

We will make use of the airports dataset in nycflights13 package to demonstrate how we can rename a column. rename() is used to alter column names by passing 'newcolname = oldcolname' followed by the dataset. We can also rename more than one column as shown in the following screenshot. Here, we are updating variable 'name' with 'airportname' and variable 'tzone' with 'timezone'.

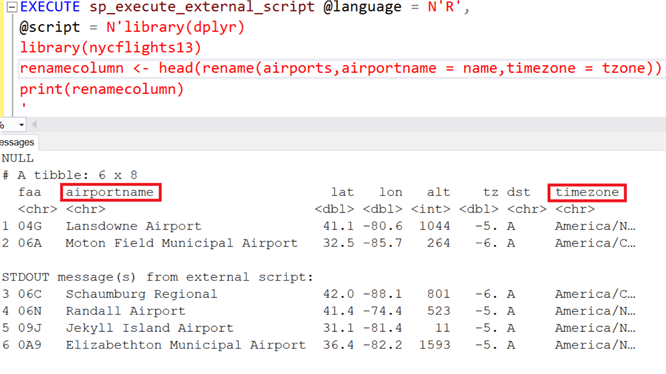

EXECUTE sp_execute_external_script@language = N'R',

@script = N'library(dplyr)

library(nycflights13)

renamecolumn <- head(rename(airports,airportname = name,timezone = tzone))

print(renamecolumn)

'

One interesting point to note is we can also use the select() function to rename variables, but the downside of using select() is it drops all the variables that are not explicitly mentioned. For example, if you execute using select() in order to rename 2 variable names - select(airports, airportname = name, timezone = tzone), you will notice, only airportname and timezone are returned by this command and all other column names are dropped. In such scenarios, it is more useful to make use of the rename() function than select().

Sorting data with arrange() using dplyr - ORDER BY equivalent in T-SQL

The arrange function helps to order rows by particular variable(s). It works similarly to T-SQL 'ORDER BY' clause. Let's try to arrange rows using arrange() based on maximum departure delay (in mins) and then in descending order of year and month.

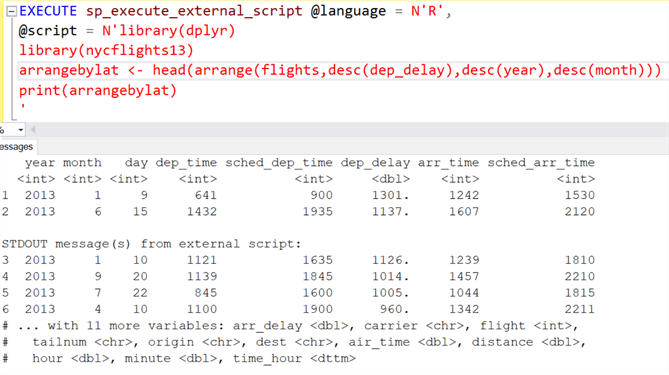

EXECUTE sp_execute_external_script@language = N'R',

@script = N'library(dplyr)

library(nycflights13)

arrangebylat <- head(arrange(flights,desc(dep_delay),desc(year),desc(month)))

print(arrangebylat)

'

Filtering rows with filter() using dplyr - WHERE equivalent in T-SQL

filter() allows a subset data row-wise by using logical expressions and returning rows that meet the conditions. As used for the other functions, we will have to pass a dataset as the first argument and subsequent arguments contain logical conditions on variables that filter the dataset. This is an equivalent to the WHERE clause in T-SQL. To see this in action, let's refer to the weather dataset and execute the below code that selects all flights that originated from JFK airport with a temperature of more than 95F using filter().

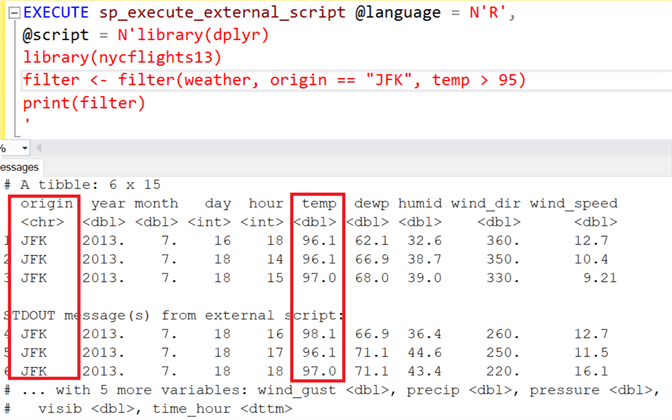

EXECUTE sp_execute_external_script@language = N'R',

@script = N'library(dplyr)

library(nycflights13)

filter <- head(filter(weather, origin == "JFK", temp > 95))

print(filter)

'

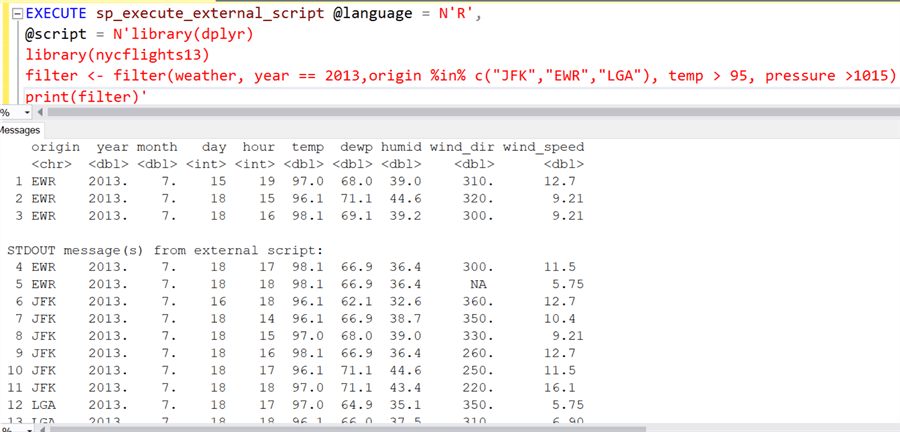

Filtering multiple values on columns using '%in%' - IN equivalent in T-SQL

When dealing with larger datasets, we often come across an urge to filter data with multiple values on variables. This can be easily implemented using '%in%' operator, that works similar to the IN operator in T-SQL. Let's say we want to see what flights took off from JFK, EWR and LGA airports in 2013 and with a temperature more than 95F and pressure exceeding 1015mb. We will execute the code below that uses %in% and returns 13 rows.

EXECUTE sp_execute_external_script@language = N'R',

@script = N'library(dplyr)

library(nycflights13)

filter <- filter(weather, year == 2013,origin %in% c("JFK","EWR","LGA"), temp > 95, pressure >1015)

print(filter)'

These functions can help a data science developer shape the data per their needs from the machine learning model or different data science use-cases, with a concise amount of code.

Summary

We can simplify our code with these various elementary verbs offered by the dplyr package with the functionality comparable to those available in T-SQL. This package makes data exploration and data manipulation intuitive and much faster. We will learn a set of more powerful functions that comes in handy for data aggregation as well as manipulation with the dplyr package in the next tip on this topic.

Next Steps

- Stay tuned for the next block of more advanced functions in dplyr and data analysis with visualizations using dplyr in SQL Server 2017.

- Install dplyr package and try some hands-on practice of the exercises illustrated in this tip for a better grip on the concepts.

- For more information on SQL Server and R related topics, refer to these other tips.

About the author

Gauri Mahajan is very passionate about SQL Server Reporting Services, R, Python, Power BI, the Database engine, etc. and enjoys writing.

Gauri Mahajan is very passionate about SQL Server Reporting Services, R, Python, Power BI, the Database engine, etc. and enjoys writing.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips