By: Fikrat Azizov | Comments (3) | Related: > Azure Data Factory

Problem

In these series of posts, I am going to explore Azure Data Factory (ADF), compare its features against SQL Server Integration Services (SSIS) and show how to use it towards real-life data integration problems. In previous posts, we have built pipelines with copy activities. Many data integration scenarios require data transformation and processing capabilities, in addition to copy tasks. In this post, we will discuss transformation related pipeline activities.

Solution

Azure Data Factory Transformation Activities

Transformation activities transform and process data in different computing environments such as SQL Server, Azure HDInsight cluster or an Azure Batch. In other words, ADF pipelines play an orchestrator role, initiating tasks within different engines. The following transformation types are available:

- Stored Procedure Activity

- HDInsight Hive activity

- HDInsight Pig activity

- HDInsight MapReduce activity

- HDInsight Streaming activity

- HDInsight Spark activity

- Machine Learning activities

- Data Lake Analytics U-SQL activity

- Databricks Notebook activity

- Databricks Jar activity

- Databricks Python activity

- Custom activity

In this post, we will be focusing on using Stored Procedure Activity.

Stored Procedure Activity can be used to invoke a stored procedure in one of the following data stores in your enterprise or on an Azure virtual machine (VM):

- Azure SQL Database

- Azure SQL Data Warehouse

- SQL Server Database on on-premises machine on Azure VM. In this case, you will need to use Self Hosted Integrated Service, which we discussed in earlier posts.

Creating Stored Procedure Activity in Azure Data Factory

Stored Procedure Activity could be used to run regular batch processes, to log pipeline execution progress or exceptions. We will create a simple stored procedure in the DstDb database to store pipeline name, pipeline run ID and sample text. Let's run the following queries to create ExceptionLogs table and Usp_ExceptionLog stored procedure, using below script under DstDb database context:

CREATE TABLE [dbo].[ExceptionLogs](

[PipelineName] [varchar](100) NULL,

[RunId] [varchar](100) NULL,

[TableName] [varchar](100) NULL

)

GO

CREATE PROCEDURE Usp_ExceptionLog

(@PipelineName varchar(100), @runid varchar(100),@TableName varchar(100))

AS

BEGIN

INSERT INTO ExceptionLogs VALUES(@PipelineName,@runid,@TableName)

END

GO

Most of activities we created in previous tips had two datasets - source and sink (destination) datasets. In contrary, like most transformation activities, Stored Procedure Activity has no datasets at all. However, we still need to have a linked service pointing to the server or service hosting the database, where the stored procedure is located.

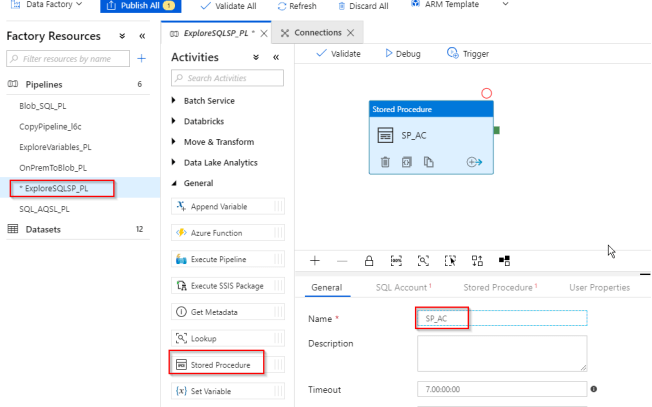

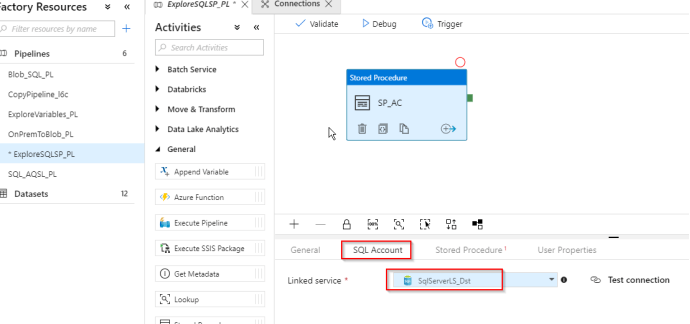

I have created pipeline ExploreSQLSP_PL for the purpose of this exercise and will use SqlServerLS_Dst linked service, which is pointing to the DstDb database.

Creating Stored Procedure Activity is a straightforward process and here are the required steps:

- Select the pipeline that will host this activity, expand the General category on the Activities panel, drag-drop it to the central panel and assign the name (SP_AC in this example):

- Switch to SQL Account tab and select the required linked service (SqlServerLS_Dst in this case):

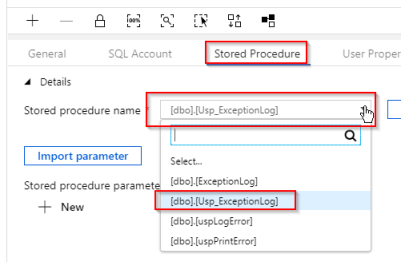

- Switch to the Stored Procedure tab and select the required procedure (Usp_ExceptionLog in this case):

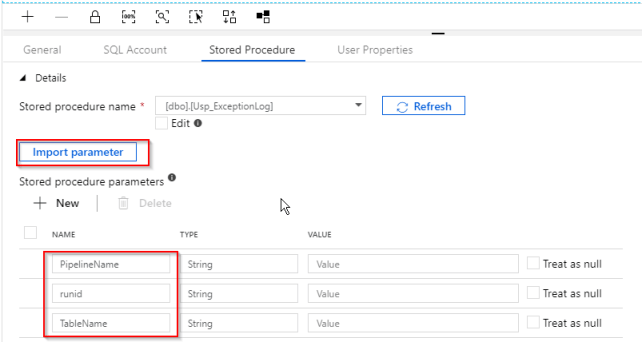

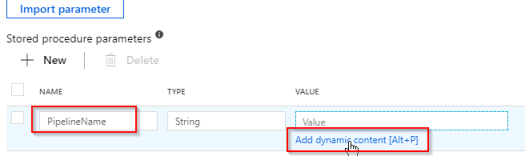

- Click the Import parameter button to fetch the procedure parameters:

- Now, let's assign a system variable to the first parameter, by using the Add dynamic content link:

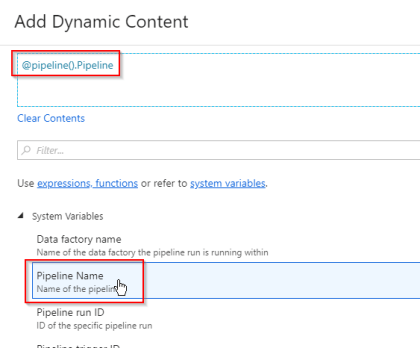

- The Add Dynamic Content window allows you to use a combination of system variables and ADF functions to build complex expressions interactively. Select Pipeline Name under the System Variables group and ensure that ADF has added the new expression to the expression box:

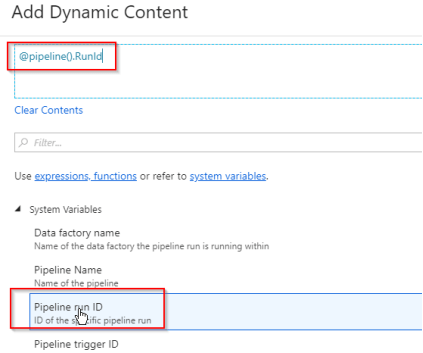

- Follow similar steps to assign Pipeline run ID system variable to runid parameter:

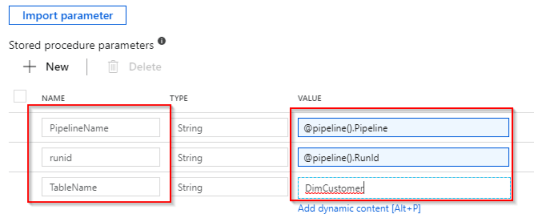

- Assign static string DimCustomer to the TableName parameter. Here' how your screen should look at the end of this exercise:

Here is JSON script behind this pipeline:

{

"name": "ExploreSQLSP_PL",

"properties": {

"activities": [

{

"name": "SP_AC",

"type": "SqlServerStoredProcedure",

"policy": {

"timeout": "7.00:00:00",

"retry": 0,

"retryIntervalInSeconds": 30,

"secureOutput": false,

"secureInput": false

},

"typeProperties": {

"storedProcedureName": "[dbo].[Usp_ExceptionLog]",

"storedProcedureParameters": {

"PipelineName": {

"value": {

"value": "@pipeline().Pipeline",

"type": "Expression"

},

"type": "String"

},

"runid": {

"value": {

"value": "@pipeline().RunId",

"type": "Expression"

},

"type": "String"

},

"TableName": {

"value": "DimCustomer",

"type": "String"

}

}

},

"linkedServiceName": {

"referenceName": "SqlServerLS_Dst",

"type": "LinkedServiceReference"

}

}

]

}

}

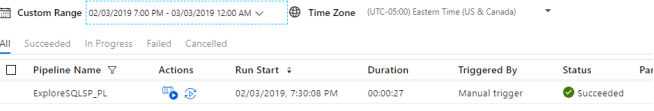

Finally, let's publish all changes and start this pipeline manually. As you can see from ADF monitoring page's screenshot, the execution was successful:

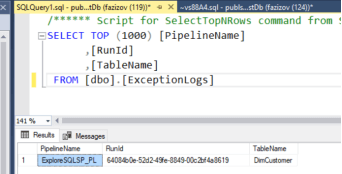

Let's validate the results by querying the target table:

Conclusion

Stored Procedure Activity in ADF could be used for running batch processes and storing a pipeline's intermediate results in a way that resembles SSIS's OLE DB Command functionality. The other types of transformations I mentioned require knowledge of specific technology and we will look at them in future posts. We will be exploring another type of activity Control Flow activities in the next few posts.

Next Steps

- Read: Transfer On-Premises Files to Azure Blob Storage

- Read: Transform data in Azure Data Factory

- Read: Transform data by using the SQL Server Stored Procedure activity in Azure Data Factory

About the author

Fikrat Azizov has been working with SQL Server since 2002 and has earned two MCSE certifications. He’s currently working as a Solutions Architect at Slalom Canada.

Fikrat Azizov has been working with SQL Server since 2002 and has earned two MCSE certifications. He’s currently working as a Solutions Architect at Slalom Canada.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips