By: Eli Leiba | Comments (9) | Related: > Performance Tuning

Problem

I need to create a method of reporting the SQL Server database data and log files that have latency issues. The criteria for measuring the IO busy levels is presented as "average total latency" and is calculated for each file as a ratio between the IO stalls and the sum of read and write requests as shown below.

Solution

My solution involves creating a T-SQL stored procedure in the SQL Server master database, called dbo.usp_FindErrorsInDefTrace that will collect the needed IO information from SQL Server for the database and transaction log files.

The statistics information about all of the files is exposed through the sys.dm_io_virtual_file_stats system function. This function gets a file_id and/or a database_id as parameters and provides cumulative IO statistics like the number of reads and writes for each database file and for each transaction log file. It also reports IO stall time between the various requests. From these columns the average total latency can be computed.

The IO stall metric is considered to be a general indicator of performance problems. High stall times show I/O problems, which can point to busy physical disks or queries that return large data sets to the client.

IO Stalls are affected mostly by three reasons:

- A poorly performing disk subsystem

- Badly defined queries

- An overloaded disk subsystem

The procedure takes an integer parameter (@N) that indicates how many files are displayed in the result set.

The procedure joins the results from the sys.dm_io_virtual_file_stats system function with the system view sys.master_files (joined by the file and database id columns) and reports the database name, the average total latency, the file size, the file name and path.

SQL Server Stored Procedure to Show Latency for Database Files

USE master

GO

-- =================================================================================

-- Author: Eli Leiba

-- Create date: 07-2019

-- Procedure Name: dbo.usp_FindTopVirtualFilesStatsIOLatency

-- Description:

-- This procedure finds the name and paths for the top N data or log files

-- on the Server with the most high value of average total IO latency.

-- ==================================================================================

CREATE PROCEDURE dbo.usp_FindTopVirtualFilesStatsIOLatency (@N INT = 3)

AS

BEGIN

SET NOCOUNT ON

SELECT TOP (@N) DB_NAME (a.database_id) AS dbname,

a.io_stall / NULLIF (a.num_of_reads + a.num_of_writes, 0) AS average_tot_latency,

Round ((a.size_on_disk_bytes / square (1024.0)), 1) AS size_mb,

b.physical_name AS [fileName]

FROM sys.dm_io_virtual_file_stats(NULL, NULL) AS a,

sys.master_files AS b

WHERE a.database_id = b.database_id

AND a.FILE_ID = b.FILE_ID

ORDER BY average_tot_latency DESC

SET NOCOUNT OFF

END

GO

Example Stored Procedure Execution and Results

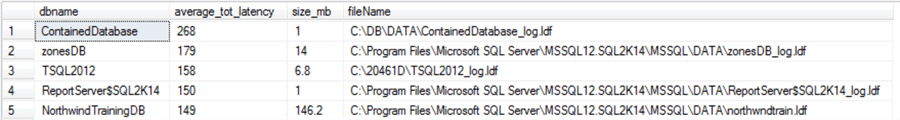

Report the top five average total latency files on my SQL Server disk system:

USE master GO EXEC dbo.usp_FindTopVirtualFilesStatsIOLatency @N=5 GO

And the results are (on my server):

From the above screen shot we can see the transaction log file for database ContainedDatabase has the highest latency based on the calculation. Also, we can see that all of the above files are the transaction log files by looking at the file extension on the files.

Next Steps

- You can create and compile this simple procedure in your master database and use it as a simple tool for finding out information about the top N average total IO latency transaction log or database files on your SQL Server.

- The data gathered from this stored procedure can lead to allocating new hardware resources in addition to performance tuning of queries for databases residing on the files reported by the procedure.

- The procedure was tested for SQL Server 2014 and 2017, but should work with SQL Server 2005 - 2017.

About the author

Eli Leiba is a senior application DBA, a teacher and a senior database consultant with 19 years of RDBMS experience.

Eli Leiba is a senior application DBA, a teacher and a senior database consultant with 19 years of RDBMS experience.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips