By: Simon Liew | Comments | Related: > Performance Tuning

Problem

Our SQL Server database developers inherited an intensive T-SQL workload script which performs many continuous small transactions. For testing purposes, our developers executed the workload script on the [TempDB] database. To their surprise, they found that the execution duration was greatly reduced with no code change in the workload script.

Why would the performance difference be so drastic just executing the same script on [TempDB] versus a user database?

Solution

[TempDB] is categorized as a system database in SQL Server and its behavior and characteristics are different from a user database.

One deviation is TempDB does not honor the durability setting or commit semantics and this behavior cannot be changed.

This means workload performance that are throttled due to constant log flush waits (typically due to many continuous small transactions) whether intentionally or unintentionally can get performance benefits similar to enabling delayed durability when executed on [TempDB].

Setting up a Test Environment

To illustrate the scenario in the problem statement, we will create and configure a new user database [UserDB] with the T-SQL script below. The script also makes sure that the delayed durability feature is disabled on both the [UserDB] and [TempDB].

The script below was executed on SQL Server 2016 Developer Edition.

USE [master]

GO

CREATE DATABASE [UserDB]

GO

ALTER DATABASE [UserDB] SET RECOVERY SIMPLE WITH NO_WAIT

GO

ALTER DATABASE [UserDB] MODIFY FILE ( NAME = N'UserDB', SIZE = 1024000KB )

GO

ALTER DATABASE [UserDB] MODIFY FILE ( NAME = N'UserDB_log', SIZE = 1024000KB )

GO

ALTER DATABASE [UserDB] SET DELAYED_DURABILITY = DISABLED WITH NO_WAIT

GO

ALTER DATABASE [TempDB] SET DELAYED_DURABILITY = DISABLED WITH NO_WAIT

GO

Test 1: Benchmark small transaction workload between [TempDB] and [UserDB]

To simulate a workload with many small transactions, we will use a very simple T-SQL script which performs just continuous inserts of 200K rows into a physical table as shown below. Note that each row insertion is encapsulated in an explicit transaction.

-- Continuous row insertion code ...

IF OBJECT_ID('dbo.ContinuousRowInsert') IS NOT NULL

DROP TABLE dbo.ContinuousRowInsert;

CREATE TABLE dbo.ContinuousRowInsert

(col1 INT IDENTITY(1,1)

, col2 datetime DEFAULT GETDATE()

, col3 CHAR(20) DEFAULT 'a');

SET NOCOUNT ON;

DECLARE @i INT = 1, @start DATETIME = GETDATE();

WHILE @i <= 200000

BEGIN

BEGIN TRAN

INSERT INTO dbo.ContinuousRowInsert DEFAULT VALUES

SET @i += 1

COMMIT TRAN

END

PRINT 'ContinuousRowInsert duration: ' + CONVERT(VARCHAR(10), DATEDIFF(SS, @start, GETDATE()))

GO

Perfmon is enabled and running in the background prior to kicking off the script. Two performance counters, shown below, were set to capture every second and filtered on [TempDB] and [UserDB] database.

- \SQLServer:Databases\Log Flush Waits/sec

- \SQLServer:Databases\Transactions/sec

The same row insertion script will be executed in the context of [TempDB] and [UserDB] database one after another with a 5-second wait between each execution.

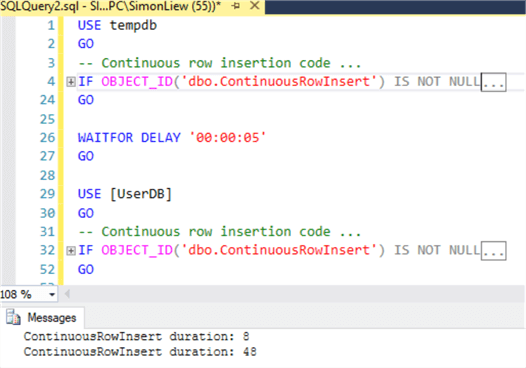

The structure of the code is as below. The "-- Continuous Row insertion code ..."is the full T-SQL script of the 200K rows insertion above.

USE tempdb

GO

-- Continuous row insertion code ...

WAITFOR DELAY '00:00:05'

GO

USE [UserDB]

GO

-- Continuous row insertion code ...

Script Execution Duration

The T-SQL script completed 6 times faster in the context of [TempDB] database as compared to the execution duration in the context of [UserDB] database.

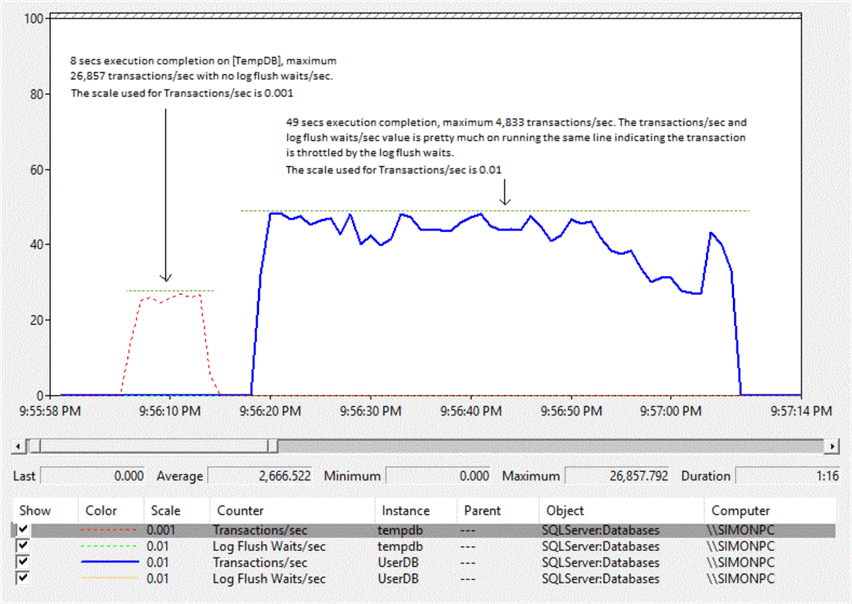

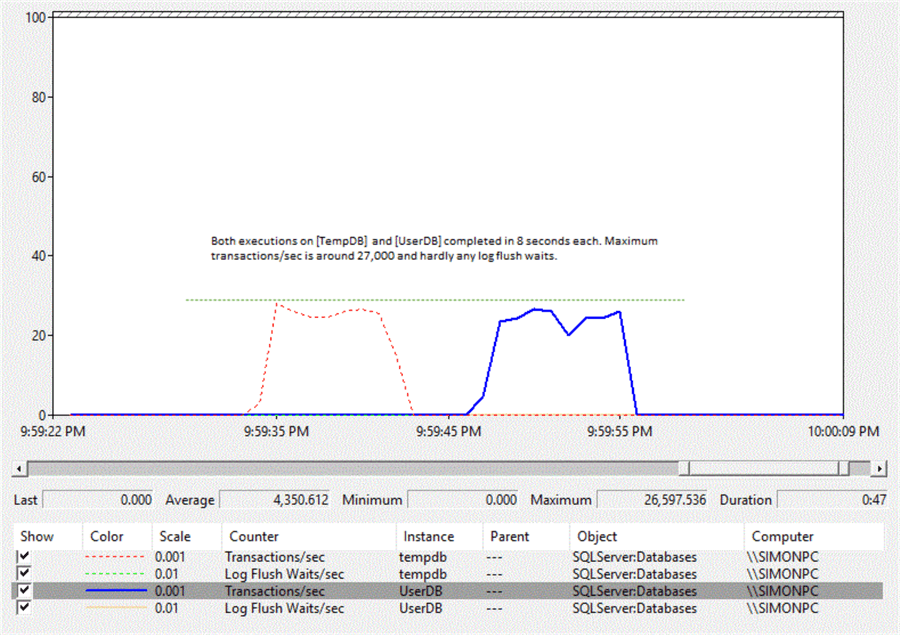

Perfmon Output

We will be looking at the Perfmon output in two different views

- Line graph view and compare the maximum counter value of Transactions/sec

- Report view and compare the average counter value of Transactions/sec and Log Flush Waits/sec

The maximum Transactions/sec counter value on [TempDB] is 26,857 whilst the same counter is 4,833 on [UserDB]. The reason in taking the maximum value of Transactions/sec counter is for simplicity because this value roughly represents the Transactions/sec counter over time as per the horizontal dotted green line drawn in the graph.

Disregard the fluctuation, dividing the two maximum Transactions/sec counter values between [TempDB] and [UserDB] also shows that Transactions/sec on [TempDB] is roughly 6 times higher than on [UserDB].

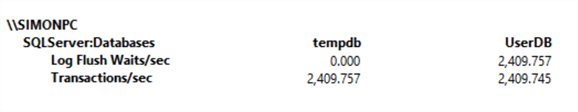

Looking at the Perfmon counter in Report view, there are barely any log flush waits for the execution in the context of [TempDB] database. This behavior is consistent with the effect of the Delayed Durability feature.

On [UserDB], the Log Flush Waits/sec and Transactions/sec counter value is very close to each other indicating each transaction commits only upon the completion of the log flush. Note that the counter values shown in Report view are the average value.

Test 2: Benchmark small transaction on [UserDB] with Delayed Durability

As a complement scenario, would we expect to see the same behavior and execution duration should delayed durability be enabled on [UserDB] database?

We will test with the script below to enable delayed durability on the [UserDB] database.

USE [master]

GO

ALTER DATABASE [UserDB] SET DELAYED_DURABILITY = FORCED WITH NO_WAIT

GO

ALTER DATABASE [TempDB] SET DELAYED_DURABILITY = DISABLED WITH NO_WAIT

GO

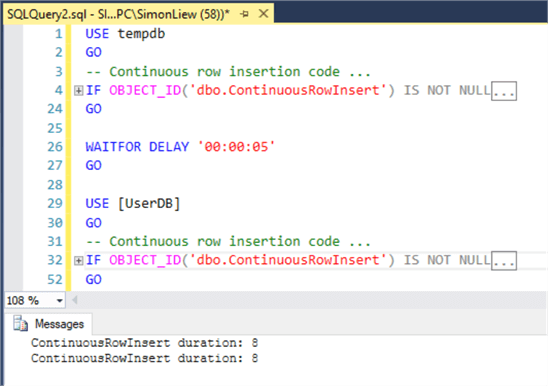

After the script execution above, the workload script in Test 1 is re-executed in the database context of [TempDB] and [UserDB] one after another with the same 5-seconds wait between each execution.

Script Execution Duration

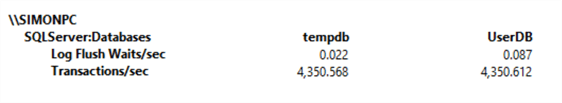

As expected, both executions completed with the same duration of 8 seconds.

Perfmon Output

Looking at the Perfmon output in Line graph, the max Transactions/sec counter value is approximately 27,000 for both [TempDB] and [UserDB] database as per the horizontal dotted green line.

In Perfmon Report view, log flush waits were barely observed in both [TempDB] and [UserDB] and the counter Transactions/sec average values were both close to each other between both databases.

Conclusion

The delayed durability feature is coded directly into [TempDB], hence workloads which are throttled by high log flushes waits would benefit from this feature.

In the context of a user database, this does not mean delayed durability should be enabled whenever high log flushes waits are observed. The feature carries a risk of data loss as it allows transactions to commit pending changes to be hardened in the transaction log.

The delayed durability feature can provide a significant performance gain when implemented for the right workload, like when a developer can leverage this behavior for a complex continuous calculation that requires it to be done in many small transactions. The calculation in small transactions can be done against the full set of data in a temp table, at the completion of the calculation the result can be updated to the main physical table in a single batch operation.

Next Steps

- Control Transaction Durability

- Reduce SQL Server WRITELOG waits using Delayed Durability

- Applying DELAYED_DURABILITY = FORCED on TEMPDB

- Performance Monitor

- Analyze Windows Performance Monitor Counters Using PerfMon

- Download the T-SQL script used in this tip

- Download the Perfmon output

About the author

Simon Liew is an independent SQL Server Consultant in Sydney, Australia. He is a Microsoft Certified Master for SQL Server 2008 and holds a Masterís Degree in Distributed Computing.

Simon Liew is an independent SQL Server Consultant in Sydney, Australia. He is a Microsoft Certified Master for SQL Server 2008 and holds a Masterís Degree in Distributed Computing.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips