By: John Miner | Comments | Related: 1 | 2 | 3 | > Azure Integration Services

Problem

There are existing on premises SQL Server Integration Services (SSIS) packages that use the Azure Blob Download and Azure Blob Upload tasks to move files between on premises and cloud storage systems. Other packages might move data around on local file systems. If you company is thinking of lifting and shifting these packages to Azure, how can you replicate these file management tasks using SSIS?

Solution

Microsoft has been supplying the SSIS developer with a set of tools for Azure since 2012. The most recent Azure Feature Pack for Visual Studio 2019 was released in November, 2019. Most systems designed for Azure use two types of storage: Blob Storage and Data Lake Storage. The Flexible File Task is the next evolution in managing files regardless where they are stored. This control will work with local, blob and data lake generation 2 storage systems.

Today, we are going to investigate how the Flexible File Task can replace existing file management code used with Azure Blob Storage.

Business Problem

There are three use cases that in which the Flexible File Task can come in handy.

| Use Case | Feature |

|---|---|

| 1 | Copying and deleting files saved in local storage. |

| 2 | Copying and deleting files saved in blob storage. |

| 3 | Copying and deleting files saved in data lake storage. |

Because this Flexible File Task works in a variety of storage layers, replacing existing code with this new control might be a good idea. This will ease any future migrations to the cloud. For each storage layer, it is important to know both older controls used in current systems and newer controls provided with the most recent feature pack. A comparison between the Azure Blob Download Task, the Azure Blob Upload Task and the Flexible File Task is useful for understanding the different design patterns that will work with Azure Blob Storage.

It is assumed that a development machine with Visual Studio 2019, SSIS extensions and the Azure Feature Pack has been created. The focus of this article will be on using the new control with Azure Blob Storage.

Use Case #2 – Azure Blob Storage

The Azure Feature package has had controls to upload and download files to blob storage for some time. The second business case is to copy data from on premises to the cloud. Many companies are finally implementing logical data lakes in the Azure cloud. These actions might come in handy for new projects.

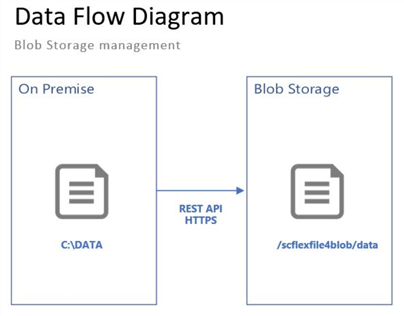

The image below shows the data flow (file movement) between on premise and blob storage. Most everything in Azure boils down to a REST API call over the HTTPS protocol. While I am only focused on uploading files, the Flexible File Task can be used to download files and/or move files around within Azure Blob Storage.

At this time, let us create a blob storage account and a blob storage container. Please adopt a naming convention for your company. I am using the abbreviation "sa" for storage account and "sc" for storage container. The image below shows a storage account on the default dashboard in the Azure Portal.

Stage Data in Blob Storage

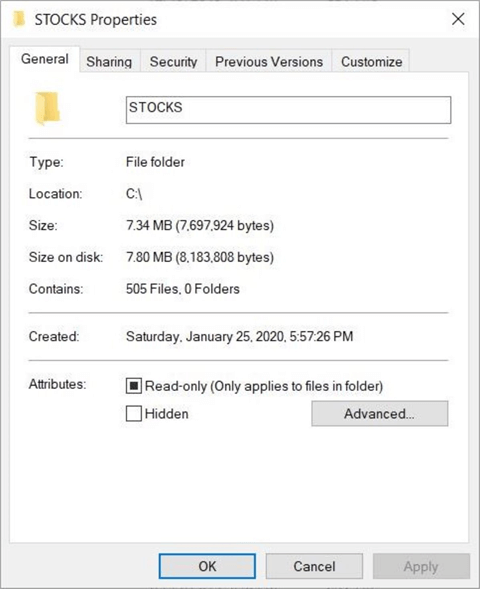

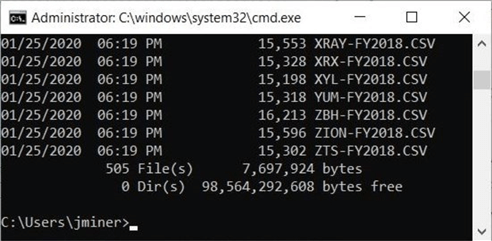

When creating an article (tip), the data for the examples must be staged. In real life, your company might get data copied to a blob container on regular basis. The image below shows the 505 files that reflect daily stock trading data in 2018. These files represent each company in the S&P 500 list.

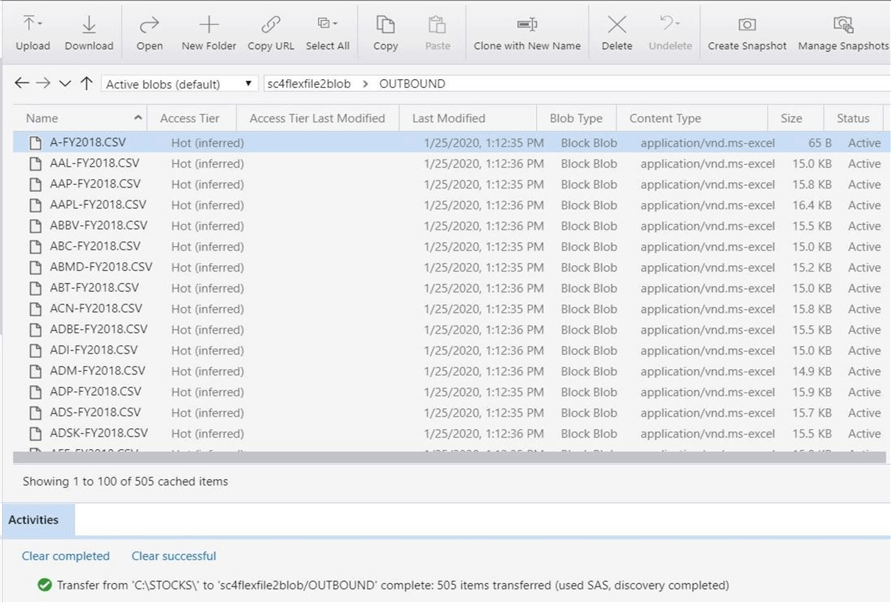

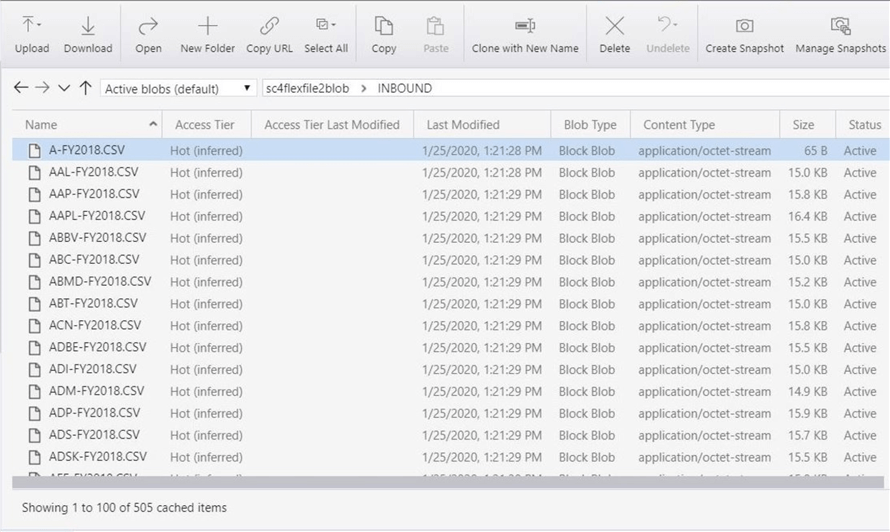

Azure Storage explorer is still a key tool to use when managing storage on the Azure platform. We can upload these files from a local directory into a logical directory in Blob Storage. The image below shows a successful upload of the 505 files.

The actual file path (directory) is just meta data that is associated with a file. There is a limit of 1024 characters when defining this meta data. A company might require the copying of files from one path to another path in blob storage. How can this SSIS package be created with the Azure Blob Upload and Azure Blob Download tasks?

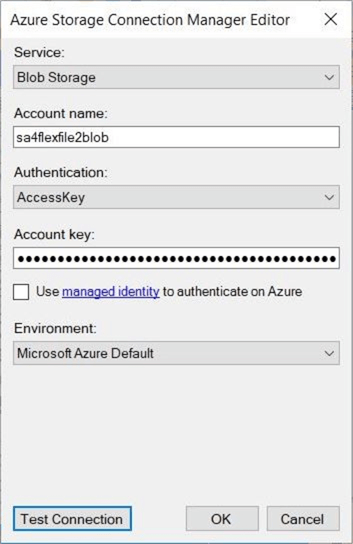

Blob Storage Connection

All tasks in SSIS start with definition the source and destination connections. Please create an Azure Storage Connection via the connection manager. There are two types of security that can be used with blob storage: Access Key or Service Principle. I will be using the storage account access key that can be captured from the Azure portal and should be rotated on a scheduled basis. The image below shows the connection information for the blob storage connector. Please test the connection before moving onto the next step.

Blob Download and Upload Tasks

Today, we want to implement a copy action between two logical file paths. From now on, I will use the shorter name (blob storage) for the full name (Azure Blob Storage). Unfortunately, the older controls do not support files actions within blob storage. The work around is to use the download task to copy files from blob storage to a local storage. The upload task will copy files from the local storage to the new file path in blob storage. I suggest using the %TEMP% environment variable as the local directory. This variable is not only defined for every Windows operating system but it is defined within the Azure SSIS Integration Runtime. Leveraging this path will make package portable.

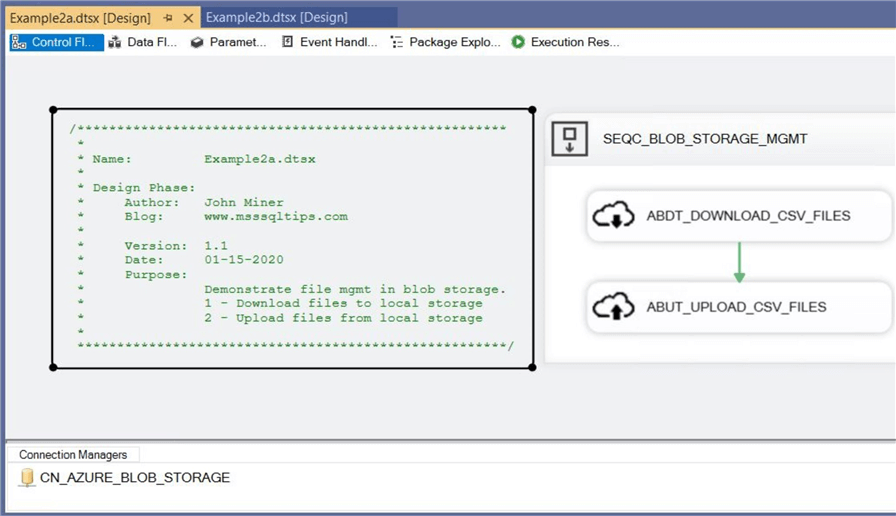

The image below shows the completed package named "Example2b.dtsx". Naming conventions have been used for the sequence container, the blob storage connection, the blob download task and the blob upload task. The precedence constraint is used to guarantees that the download action is completed before the upload action. The addition of documentation using the annotate control completes the package design.

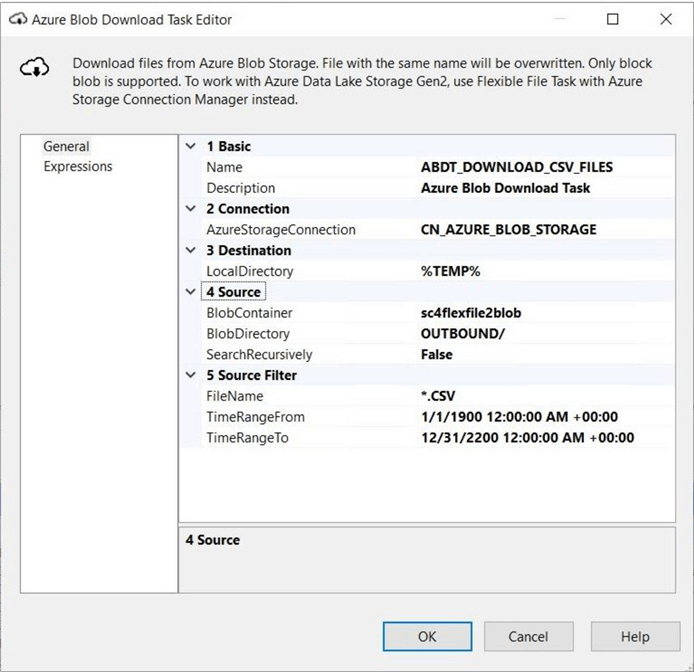

Both the download and upload tasks do not need a local file connections. This is nice since it eliminates a lot of coding. The image below shows the completed data entry for blob download task. The name of the Azure Storage Connection, local destination directory, remote source container, remote source directory and file filter are shown below. The next step is to fill in similar information for the upload task.

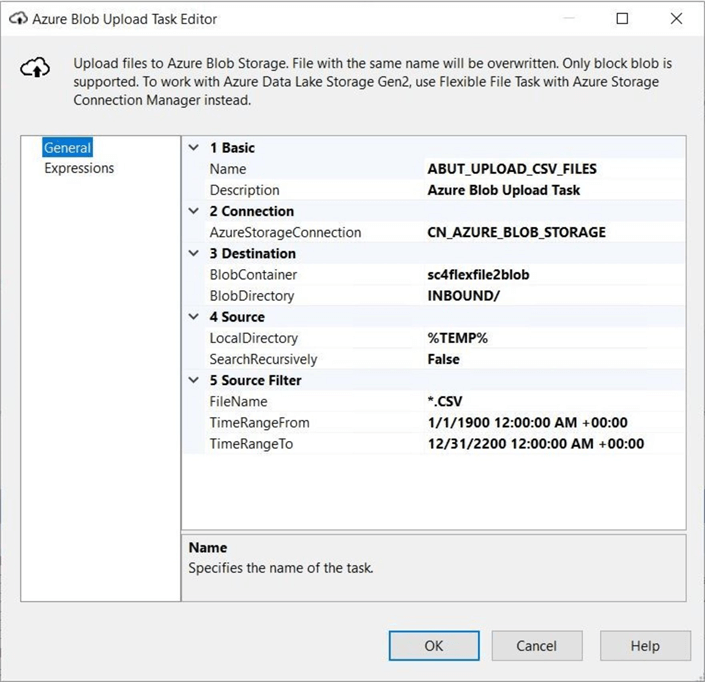

The upload task requires almost the same parameters as the download task. See the image below for details. The main difference is the download task is pulling from the OUTBOUND logical directory and the upload task is pushing to the INBOUND logical directory.

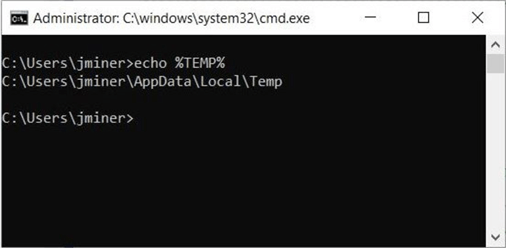

The cool thing about SSIS is the ability to disable a task within the control flow. If we just execute the download action, we can take a look at the TEMP directory. The image below shows a command line window. The echo command can show the full path to the %TEMP% directory. These environmental settings can be changed to your liking using the control panel.

A directory listing of all the comma separated files (CSV), shows the correct number of files have been download to the %TEMP% directory.

The upload and download control automatically overwrite the destination files. If we run the fully enabled package to completion, we can verify that the correct number of files were landed in the logical destination folder. See the image below for algorithm verification.

The Azure Blob Download Task and the Azure Blob Upload Task are focused on copying many files from on premise to in cloud or vice versa. These controls lack the functionality to manage files directly in Azure Blob Storage. The Flexible File Task overs come this functionality. Since companies are moving to the cloud, can we run the resulting package in Azure using the SSIS Integration Engine.

Package Execution Using Azure Database Catalog

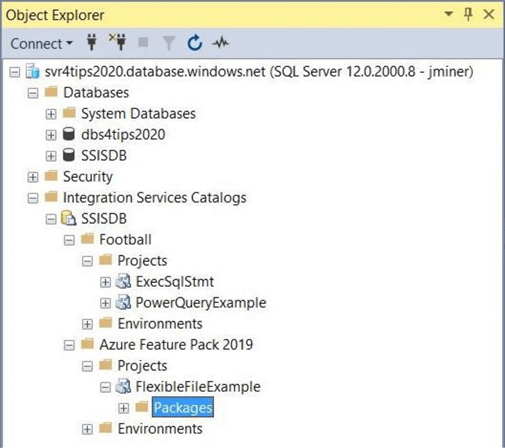

This next section assumes that you have an existing Azure Logical Server named srv4tips2020.database.windows.net. An Azure Data Factory named adf4tips2020 has been deployed and a Azure SSIS Integration Runtime named irs4tips2020 has been configured. Please see this prior article that goes over the deployment of the SSIS catalog using Azure SQL database.

The above image shows the Integration Services Catalog viewed from the Object Explorer. Please make sure you are using the latest version of the SQL Server Management Studio to achieve full functionality. I manually created a new folder within the catalog named "Azure Feature Pack 2019". I used Visual Studio 2019 to deploy the project to this given folder.

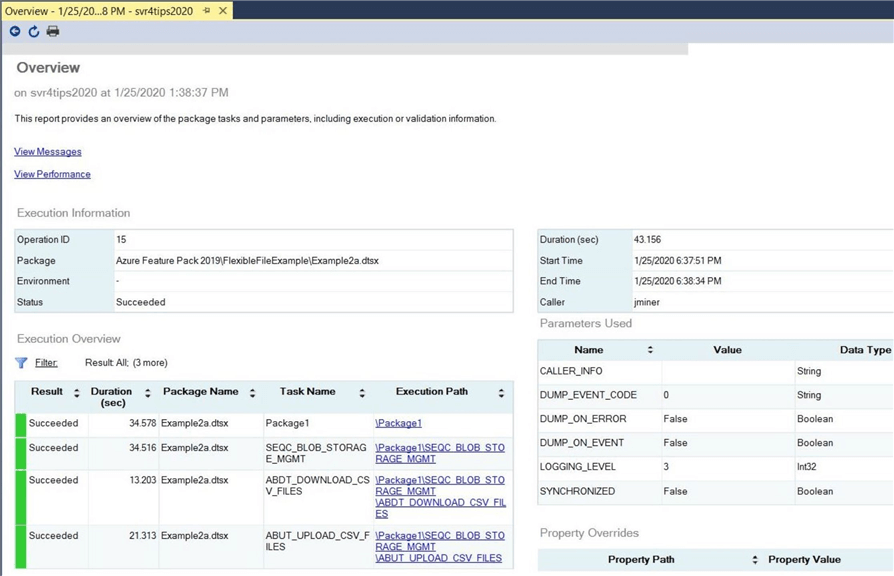

Right click the package name in the catalog and choose the execute option. This action brings up a dialog box. Please check the 32-bit runtime option under the advanced tab. Click the okay button to start the package execution. The user is prompted to view the execution report. This is a real time view of all the messages or steps that the package performs. If you choose the verbose logging option, a lot of information will be shown.

The above image shows the successful execution of our package. Lifting and shifting packages to the Azure Cloud is easy. Again, the download and upload tasks lack the ability to delete files from Azure Blob Storage. Next, we will review a new control that overcomes that issue.

SSIS Flexible File Task

Today, we want to execute a simple test of the COPY and DELETE operations using Azure Blob Storage and the Flexible File Task. In this test, we copy one file to blob storage. We reverse this action by deleting the file from blob storage. I leave it to the reader to turn this package into a MOVE operation. This can be easily done by changing the DELETE operation to work with local storage.

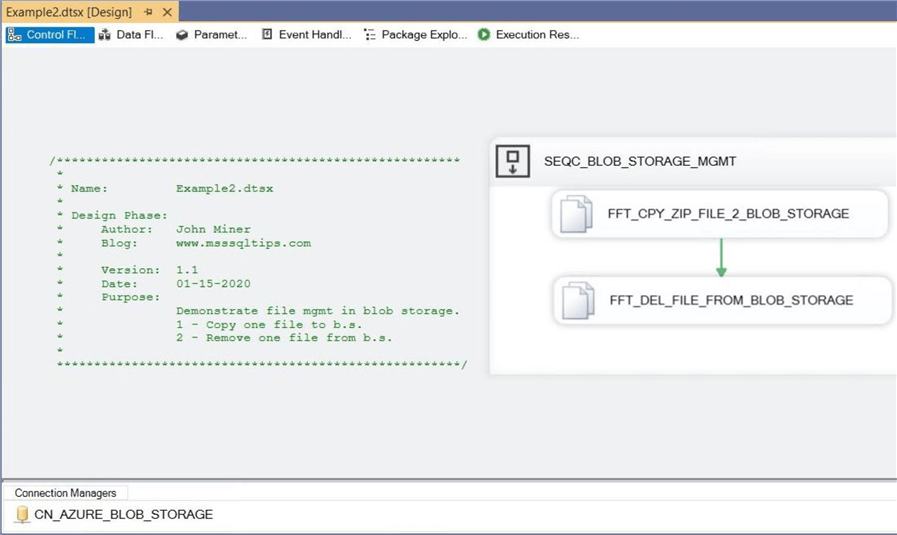

The image below shows the finished package. We will be using the Azure Blob Storage Connection that we defined above. Repeat these steps for this newly package. The following steps can be used to create your own version of the package. First, create a sequence container. Within the container, place two Flexible File Tasks that are joined by a success precedence constraint. The package is correctly named "Example2.dtsx" after our second use case. Commenting and naming conventions allow for easy understanding of the logic many months after the project has been deployed.

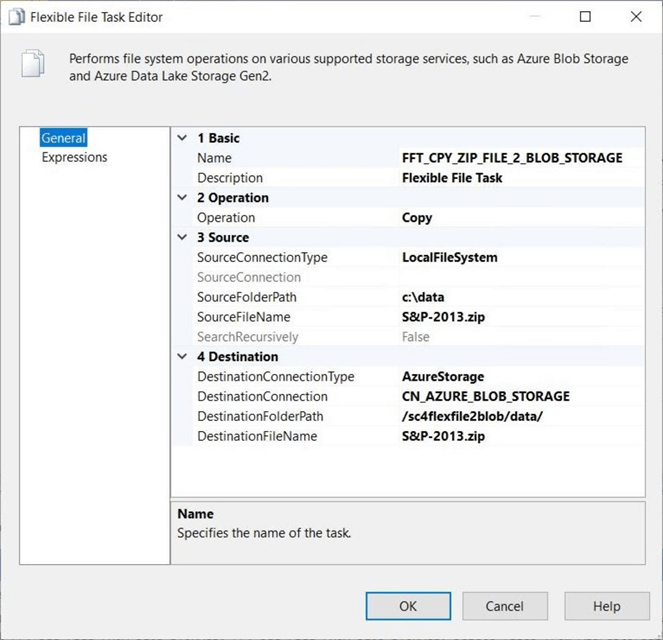

The devil is always in the details. Looking at the first flexible file task below, we can see that the file is being copied from the data directory to the blob storage path named /sc4flexfile2blob/data/. All the paths and file names are hard coded within the control. In real life, would want to use package variables mapped to control properties for a dynamic program. Change the inputs (variables) to the package would change the outcomes (actions).

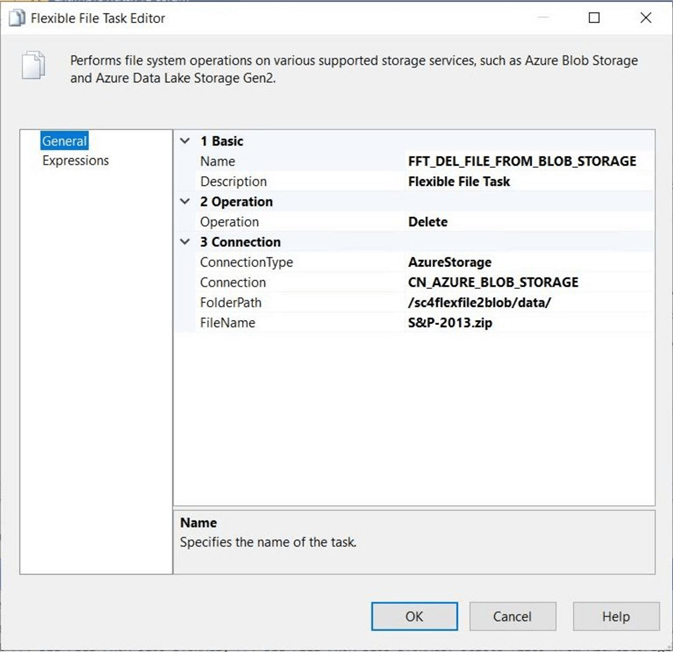

The second task shown below deletes the file from blob storage. We could have deleted the source file from the local file system and completed the logical move action. However, we already verified the execution of this local pattern in the first use case. Testing the deletion of a file from Blob Storage completes our overall test plan.

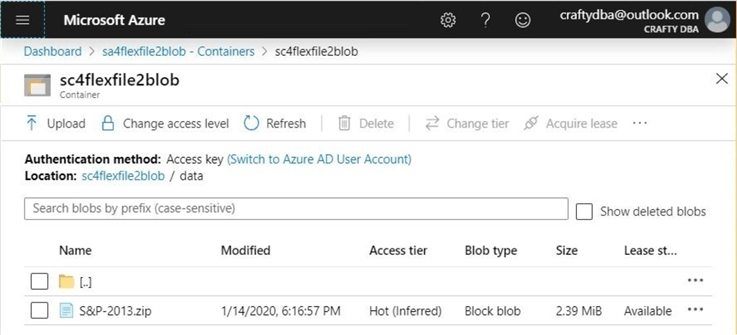

Within Visual Studio, the package designer has the ability to enable or disable tasks in the control flow. If we disable the delete task, we can see that the zip file uploads correctly to blob storage. The image below was captured from the Azure Portal.

To recap, the flexible file task can copy and delete files to, from, and within Azure Blob storage.

Summary

The new Flexible File Task is the next evolution in controlling the copying and deleting of files regardless if they exist in local, blob storage or data lake storage. Today, we compared and contrasted the old controls versus the new control. The Azure Blob Upload and Azure Blob Download task are very powerful since they perform a bulk operation on a set of files. However, these controls lack the ability to remove files from blob storage or copy files between logical folders within blob storage.

The Flexible File Task adds value by allowing the following three copy patterns: copy file from local to blob storage; copy file from blob to local storage; copy file from blob folder A to blob folder B. These actions can be wrapped within a For Each Loop Container to iterate over a set of files. To complete the analysis, the Flexible File Task allows for two delete patterns: delete file from local storage; and delete file from blob storage. To recap, a for each loop container and blob storage file enumerator need to be used to have the deletions be performed on a set of files.

Existing packages will need some mitigation before lifting and shifting to Azure SSIS integration runtime. The %TEMP% directory environment variable can be used for packages that need local storage during execution. Unfortunately, if earlier package C saves a file to disk for later package D to consume, this work around might fail. This is due to the fact that the Azure SSIS integration runtime can be housed on multiple nodes. This makes the service highly available. Also, there is also no documentation on when garbage collection on the temporary directory is perform, if ever.

In short, the Flexible File Task adds some benefits when working with Azure Blob Storage. Next time, we will investigate how to manage files in Azure Data Lake Storage Generation 2 with the Flexible File Task.

Next Steps

- What is new in the Azure Feature Pack for SSIS 2019

- Use the Flexible File Task with Azure Data Lake Storage

- Use the Azure Data Lake File System Task in a control flow

- Use the Flexible File Source in a data flow

- Use the Flexible File Destination in a data flow

About the author

John Miner is a Data Architect at Insight Digital Innovation helping corporations solve their business needs with various data platform solutions.

John Miner is a Data Architect at Insight Digital Innovation helping corporations solve their business needs with various data platform solutions.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips