By: John Miner | Updated: 2023-02-06 | Comments | Related: > Cloud Strategy

Problem

Going back 30 years, when I started in Information Technology, there were only a few choices to store data in digital form: in a Binary or ASCII file on a storage system. If you were lucky, you had a relational database management system (DBMS) for the entire enterprise. At my first job, we used the MS DOS operating system. Code was written in Microsoft Basic to write flat files and/or Btrieve files. Today, there are so many more choices. How can we know which data platform solution is correct for our company?

Solution

Today, we will review the high-level architectures for Relational Databases, NoSQL platforms, Graph Databases, Apache Spark, and Data Warehouses. Each of these systems can be used to store your company's collection of data. We will review the pros and cons of each platform. The three big cloud providers are Azure Cloud Computing Services (Azure), Amazon Web Services (AWS), and Google Cloud (GCP). Each vendor supports a variety of data platform products, and links to documentation will be provided to assist with your evaluation of the services. At the end of each section, I will list the pros and cons of each data platform and links to popular products. This is not meant to be a complete list of products or features, but an introduction to the technology.

I always like referring to articles from the Gartner Group when deciding on a new product. Their experts analyze current players in a given market, and access to their information is by subscription only. They have coined the term "magic quadrant chart," categorizing the vendors into niche players, visionaries, challengers, and leaders. This is a good starting point for an IT manager when they are trying to pick a new product.

In this tutorial, we will look at:

- Relational Databases

- NoSQL Databases

- Graph Databases

- Apache Spark

- Data Warehouses

Relational Databases

A relational database management system (RDBMS) is based upon E.F. Codd's paper written in 1970. A record of data within the table is represented as a tuple in which each column has domain integrity. Domain integrity ensures that a column defined as an integer has a certain range of values. Trying to store a larger or smaller value outside this range will result in an error. Primary Keys are used to uniquely identify each record and enforce entity integrity. It is very hard to update a record when it is not unique, like changing an IRS record without knowing the social security number. The foreign keys relate one table to another and enforce referential data integrity. One example of this integrity is the inability to insert an order detail record if the order header record does not exist. This logically makes sense.

Most database vendors adhere to the core constructs in the ANSI SQL definition. However, some vendors like Microsoft and Oracle have deviated from the standard for specific use cases. For instance, creating a Common Language Runtime (CLR) function is only defined by Microsoft SQL Server.

The Structured Query Language (SQL) statements can be divided into three groups. The Data Manipulation Language (DML) statements are related to CRUD. CRUD is an acronym for creating, reading, updating, and deleting records. These actions are directly related to the INSERT, SELECT, UPDATE, and DELETE statements. The Data Definition Language (DDL) defines static objects in the database. Statements that support the CREATE, ALTER, and DROP keywords fall into this category. The CREATE TABLE statement is part of the DML specification. The Data Control Language (DCL) supports security keywords such as GRANT, REVOKE, and DENY. This language is used to give users rights to database objects. The GRANT ALL statement is part of the DCL specification.

Relational databases have been popular for the last 50 years. They have been used to solve a variety of business problems. If you look around your organization, you will find one or more relational database products being used.

Before we talk about the pros and cons of databases, we need to understand that things have changed over time. In the past, we installed the database software on physical hardware. Before the cloud became popular, we consolidated larger numbers of small to medium-sized machines using products such as VM Ware and/or Hyper-V. These products support the deployment of virtual machines. We can go over the number of logical computing objects as long as we never fully use all the physical computing objects. Cloud vendors support Infrastructure as a server (IaaS), aka virtual machines, and Platform as a Service (PaaS), aka cloud services.

When considering physical hardware or IaaS environments, it is a fact that RDBMSs are not scalable on demand. Database administrators can add more resources to the server when a performance problem is encountered. However, this is usually a manual action that requires downtime. This task is considered vertical scaling or scale-up action. Some relational database vendors, such as Microsoft, have tried to solve part of the BIG data problem by allowing multiple read-only secondary replicas. However, only the primary replica can accept write actions.

The PaaS environments do offer some advantages over their IaaS siblings. First, all maintenance and disaster recovery are handled by the service. Second, some cloud vendors implemented scaling with limited effort and downtime. Microsoft does support scaling of Azure SQL database. Thus, if you need more CPU cores or disk space, pick a different tier. Some vendors even support serverless features. The service will pause, so the user is only billed for storage when computing power is not required.

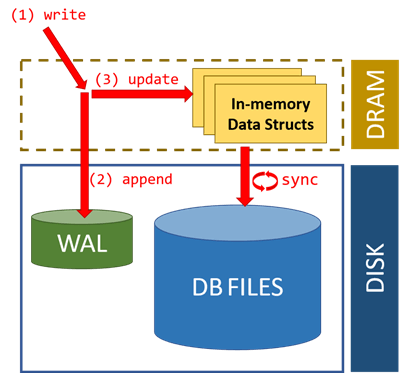

There are many different database flavors; however, most vendors use write ahead logging to maintain the ACID (Atomic, Consistent, Independent, Durable) properties. The straightforward image below depicts write ahead logging. Reading is simple: pull pages from disk to memory, apply any SQL transformations, and return a result set to the user. Writing is a whole different story. When making a logical change to the data, the data page is modified and considered dirty since it has not been written to the database. A log entry has to be added before the data can be persisted to the database file at the end of a transaction. This step allows for recovery when a crash might happen during a transaction. Completed transactions can be rolled forward, and uncompleted transactions can be rolled back.

| Considerations | Description |

|---|---|

| PROS | Very easy to install. Many different product choices. Well-known technology. |

| CONS | Physical limit on computing power. Scaling usually means downtime. |

| FLAVORS | DB2, MariaDB, MySQL, Oracle, PostgreSQL, SQL Server |

| AZURE | Azure Databases (Cloud Database) |

| AWS | Amazon Databases (Cloud Database) |

| GCP | Google Databases (Cloud Database) |

NoSQL Databases

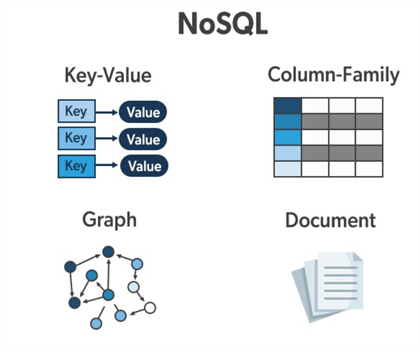

Truly different from other storage options, NoSQL databases supply the developer with data storage and retrieval patterns that differ from the relational calculus theory used in RDBMS. Four common ways to store and retrieve data in these databases are key-value pair, wide column store, document store, and graph database as seen in the image below from the Geeks-For-Geeks website.

Source - https://www.geeksforgeeks.org/types-of-nosql-databases/

NOTE - I decided to give the graph database its own section below for discussion since it is truly different from all the other storage options.

The key-key pair paradigm can be considered a dictionary or hash table. The table is automatically indexed on the key. This storage pattern is the most simplistic of all systems to be covered today. The typical CRUD operations are supported at a programming layer.

The document database has become very popular with programmers that use a code-first methodology. Given that most WEB APIs (application programming interfaces) use representative state transfer (REST) architecture, the management of JSON documents lends itself to this technology. JSON is how data is transferred between the client and the server. If you consider the JSON document as a single value, then the key is the field in the document that is indexed and partitioned. It is not surprising that the Azure COSMOS database uses the same storage engine for both databases.

What is a wide column store database? It is a collection of rows and columns grouped as a single table. What is different about the table? Unlike a relational database, the number and names of the columns can be different between rows. This database system is sometimes referred to as a two-dimensional key value store. The idea of column families is used to group rows with similar schemas. The data for a given column family is stored row by row.

What do all these storage systems have in common?

Not only SQL databases (NoSQL) support very fast read and write operations. That is why they are popular among developers. Thus, web applications that need high performance use these storage systems. Many times, the stored data can be geo-replicated. Unlike databases that ensure strict consistency between replicas, these systems loosen their restrictions to keep performance high. That means a record stored in the North America Database will eventually end up in the Asian Database. This is called eventual consistency. Partitioning plays an important role in the performance of these storage systems. For instance, the Cosmos database allows you to select a logical partition, but the physical implementation is managed by the system.

Most of these modern NoSQL systems do support a SQL syntax for querying. However, advanced SQL features like common table expressions are probably not supported. Features such as triggers, stored procedures, and functions might use JavaScript for definition. The underlying system executes these scripts during record processing. The table below lists the pros, cons, types, and some popular products on the market.

| Considerations | Description |

|---|---|

| PROS | Very fast read/write operations. Supports many levels of consistency. |

| CONS | Supports a subset of SQL Syntax. Slower at aggregating data. |

| FLAVORS | Big Table, Cosmos DB, Couch DB, Dynamo DB, Document DB, Firestore, and Mongo DB |

| AZURE | Azure Databases |

| AWS | Amazon Databases |

| GCP | Google Databases |

Graph Databases

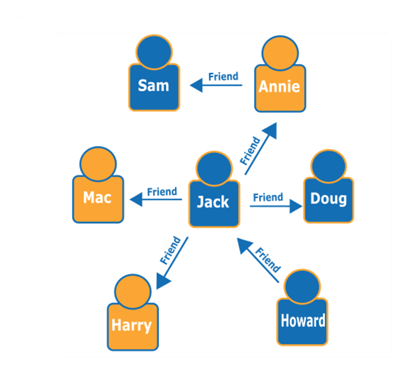

A graph database consists of three objects. A node represents a logical item, such as a person. An edge represents a relationship between two nodes. Directed edges might have different meanings than undirected edges. Properties are attached to the node to describe it further. The image below was taken from the AWS website. It is a social relationship graph within a local chess club. Let's assume that the person at the end of a directed edge can be asked to play a game and has a high probability of the request being approved. Thus, Howard can ask Jack for a game of blitz chess. However, the opposite is not true.

Gartner Group suggests there are five main problems that graph databases can solve: social graph – the connections between people, intent graph – what motivates human beings, consumption graph – the spending patterns of individuals, interest graph – what are the hobbies of individuals, and mobile graph – it is comprised of data from phones.

For each of these problems, I can list a widely used cloud application. LinkedIn is a good example of a social graph in which new connections are suggested to users. Twitter is a good example of an intent graph in which tweets can be related to emotions. Microsoft advertising uses interest graphs to suggest solicitations to users viewing a web page via Bing. The Amazon website uses a consumption graph to offer products that might interest a buyer. Finally, Google Maps keeps track of the location data on your mobile phone and charts this data on a world map over time.

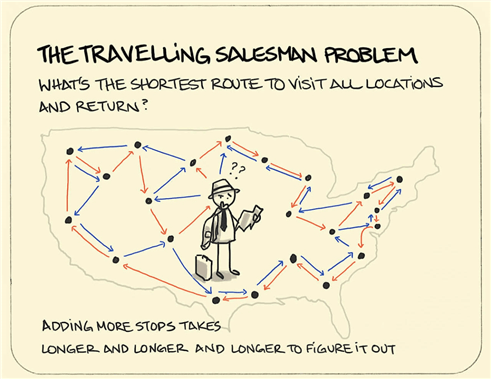

Do all problems fit into these categories? The answer is "not exactly." A famous computer science problem is the traveling salesperson. The image below was taken from an IBM partner using the CPLEX optimization studio software. Please see the association from computer machinery (ACM) for the actual publication of the article in October 1960. The edges might contain additional information such as traveling distance, customer priority, fuel cost, etc. Miller, Tucker, and Zemlin devised an algorithm to solve this problem in record time. Since locations can now be represented by GPS coordinates, we can classify this historical problem as a mobile graph.

How is the data stored in a graph database? It all depends on the product. Some products abstract the graph data so that it can be stored in a relational database. Other products choose to save the graph data as documents in a NoSQL database. In short, check the product documentation to be used.

Do graph databases have a common query language? The answer is "maybe in the near future." Before 2019, there were a myriad of languages created by product vendors. After 2019, national standards organizations such as ISO came together to propose and validate a language called Graph Query Language (GQL). However, this language may or may not be implemented by your vendor. The snippet below might be a Cypher query to return data for the traveling salesperson. It shows who the customer is and what city/state they live in.

MATCH (p:Customer)-[:WORKS_IN]->(c:City) RETURN p.first_name, p.last_name, c.name, c.state

To summarize, graph databases are a real niche area of computer science. They come in handy when you have a use case that can be represented as a graph.

| Considerations | Description |

|---|---|

| PROS | Data must have nodes, properties, and edges. |

| CONS | Not suitable for OLTP or OLAP use cases. |

| FLAVORS | Database: Cosmos, DataStax, Neo4j, Neptune. Query Language: Cypher, GSQL, Gremlin, PGQL, and Morpheus |

| AZURE | Azure Databases |

| AWS | Amazon Databases |

| GCP | Google Databases |

Apache Spark

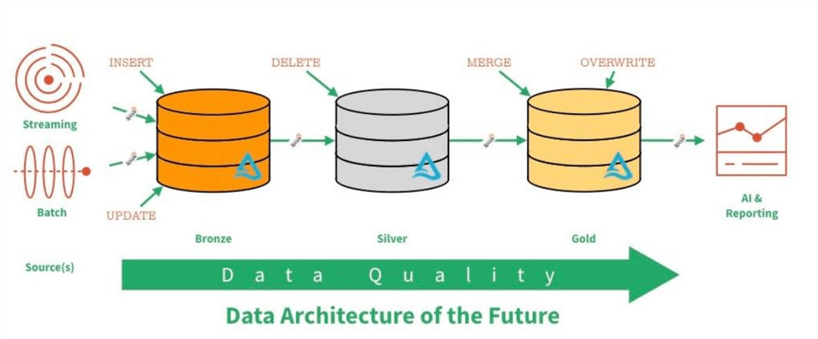

The Apache Spark ecosystem solves big data problems by scaling out the hardware as a cluster. Regardless of the chosen cloud vendor, data is stored in a data lake. Therefore, it is important to understand quality zones and how to reduce surface attack areas.

The image taken from Databricks below shows a typical Lamba architecture. The bronze or raw zone will contain an unmodified copy of the file. I suggest using strong file formats such as Apache parquet that have both the schema embedded in the file and the ability to be partitioned. The silver or refined zone might perform some type of data processing. Some typical transformations are removing duplicate data, adding surrogate keys, and/or correcting invalid data such as addresses. The gold or curated zone is where the data is ready for the end user.

Each zone should have security to reduce the surface area that a hacker can attack. For instance, in Azure, we can place the data in a separate storage container and/or storage account. Always use the principle of least privilege. Thus, the Azure Data Factory (ADF) pipeline does need access to the bronze zone, and the Power BI (PBI) service will need access to the gold zone.

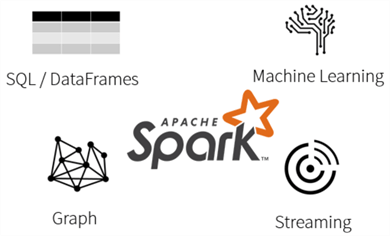

The Spark ecosystem can solve a variety of problems. The data engineer can use Spark SQL and/or dataframes to read, transform, and write data. Streaming jobs can be used to ingest data from event hubs or remote storage if needed in real-time. Graph problems can be represented and solved using Apache Graph Frames. Machine learning problems can be solved with typical packages such as Scikit-Learn, XG Boost, etc.

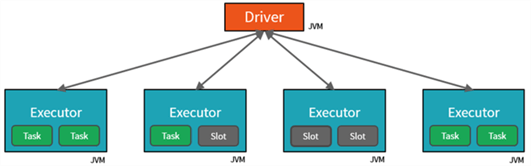

How does the Spark engine work? It is based on distributed processing. At the heart of the Spark ecosystem are thousands of lines of Scala Code. Scala is an object-oriented Java Language. The image below shows a 5-node cluster. Each node is a java virtual machine running on a Linux OS. The high-level spark notebook written in your favorite language, such as Python, is compiled by the optimizer into Java Byte Code. Depending upon the partitioning of the data file and the physical execution plan created by the optimizer, a given number of slots are chosen to execute the code on the worker nodes. The driver node coordinates the processing of the code and returns the results if required.

Unlike map reduce, Spark uses resilient distributed datasets (RDD) in memory for processing. The elimination of constant reading and writing to disk has reduced the usage of Hadoop on new projects. However, programming with RDDs is tedious and not optimized. Therefore, most big data programmers use dataframes for data engineering tasks. Please see this article that compares how RDDs perform compared to dataframes.

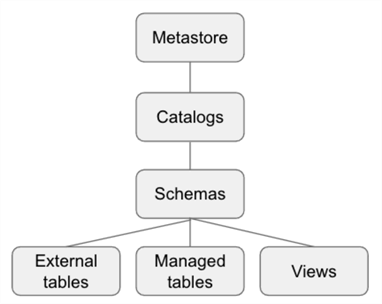

The hive catalog is key to representing files as tables. The image below was taken from the Microsoft website for the unity catalog. The central meta storage allows sharing of data between spark deployments. Within a hive catalog, a schema is considered a database. External tables (unmanaged) are files stored in remote storage. Dropping an external table result in metadata being removed from the catalog. Local tables (managed) are files stored with the catalog. Dropping a managed table results in both data and metadata being permanently removed.

Only the spark.read and spark.write methods are written for distributed processing. Therefore, many developers read a file into a dataframe and convert it to a temporary view using the createOrReplaceView method. After that action, Spark SQL can join and transform one or more views. Use the spark.sql method to capture the output of the data engineering into a dataframe which can be written to storage. In short, if you use the Python language in your notebooks, you should get familiar with the PySpark Library.

The Apache Spark ecosystem has so much more to offer, but unfortunately, it cannot be fully covered, even at this high level. It gets even more complicated if you use Python since that language has many pre-built, open-source libraries. Remember, any library that is not distributed processing aware runs at the single driver node. Thus, a large job might run slow in this situation. One solution to this problem is to create a single node cluster and scale up the computing power.

The table below shows that each cloud vendor has their own adaption of Apache Spark. However, Databricks is the only vendor that runs on all three clouds.

| Considerations | Description |

|---|---|

| PROS | Works with unstructured, semi-structed, and structured data. |

| CONS | Must know a programming language. Might be slower for small data requests. |

| FLAVORS | Azure Synapse, Amazon EMR, Google Dataproc, Databricks (cross cloud platforms) |

| AZURE | Azure Spark |

| AWS | Amazon Spark |

| GCP | Google Spark |

Data Warehouses

Large data warehouses started as on-premises appliances that use massively parallel processing (MPP) architectures to provide high-query performance and platform scalability. Most appliances implement a "shared-nothing architecture," where each server operates self-sufficiently and controls its own memory and disk space. The distribution of data evenly across the servers allows the DW appliance to resolve a relational query by scanning data in parallel. The divide-and-conquer approach delivers high performance and scales linearly as new servers are added to the architecture. In the past, vendors that supplied DW appliances were Exadata, Netezza, PDW, and Teradata. Many of these vendors have transitioned to cloud products.

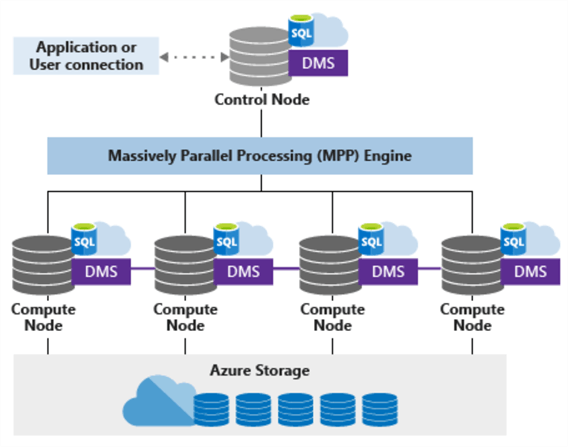

Some of today's Cloud Data Warehouses follow the architecture of their on-premises predecessors. Most important for these systems is how the data is distributed between the logical servers. For this topic, we will discuss Azure Synapse Analytics, dedicated SQL pool, as shown in the image below. We can see that each compute and control node is independent of each other.

However, when joining two tables or aggregating data, the actual data distribution is important. Three different distributions strategies can be defined for a table:

- Hash distribution – a column is used to spread the data between the nodes,

- Round robin distribution – the data is randomly distributed between the nodes and

- Replicated tables – key reference data is duplicated between all nodes.

The round robin distribution is great for loading data quickly but is not typically used for production tables. A hash distribution might be ineffective if the column is not used in the JOIN, GROUP BY, DISTINCT, OVER, and HAVING clauses. Please see the documentation for details. This performance condition is called shuffling, in which data has to be moved from one node to another. The data movement service (DMS) is not a smart utility; it will always expand the varchar buffer to the max allocation. Thus, right sizing your variable character fields is very important. Replicated tables can speed up processing when the reference data is used extensively in queries.

Not all products are made equal. Therefore, it is important to consider the following features when selecting a vendor:

- Separate Storage: Some products separate storage from compute. This saves the customer when computing can be paused during off hours.

- Scalability: The ease in which the product can scale is important. Some products are very hard to change once they are deployed.

- Replication: Most products support replication. This feature is necessary if you want the data close to the end users or application. Also, this eliminates egress charges between data center zones.

- Cloning: Some products support cloning technology. This technology is not limited to data warehouses. For instance, Databricks supports both shallow and deep delta clones.

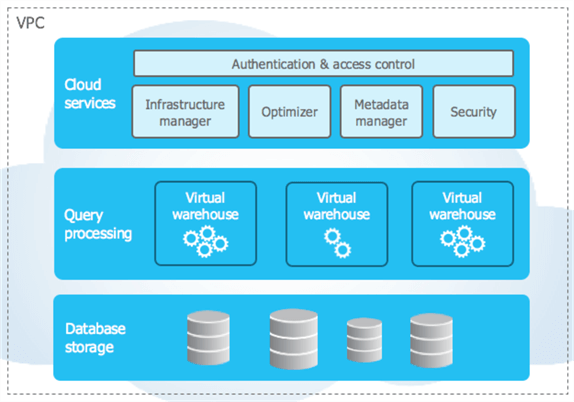

The image below shows the architectural diagram of the Snowflake Data Warehouse. It is unique because it is the only vendor supporting all three clouds.

There are a couple of interesting features that make it stand out:

- Uses micro partitions to store data. Each partition is up to 16 MB of compressed data. The technology uses a hybrid column storage format, including column statistics stored in the header. With these statistics, the engine can use partition elimination with searching for data.

- Second, any changes to the table data in an existing micro partition via a DELETE or UPDATE action causes the whole partition to be re-written to disk. That is why time travel is possible with the data.

- Worries about data distribution. Therefore, you do not have to worry about how to hash the table data across the compute nodes.

Now that you understand how a Data Warehouse works, what SQL operations does the software handle well? A Data Warehouse is not meant to be an Online Transaction Processing (OLAP) system. Many small INSERTS, UPDATES, and/or DELETES will not perform well. Single SELECT statements will perform okay. However, DW software excels at aggregating enormous amounts of data. If we stored all the transactional data for Amazon purchases in the data warehouse, we could easily aggregate the data by vendor and product to get sales totals by year, month, and day.

The table below shows that each cloud vendor has their own proprietary data warehouse software. However, Snowflake is the only vendor that runs on all three clouds.

| Considerations | Description |

|---|---|

| PROS | Loads and aggregates large structure data efficiently. |

| CONS | Does not support single execution of CRUD statements quickly. |

| FLAVORS | Big Query, Redshift, Synapse Analytics, Snowflake |

| AZURE | Azure Databases |

| AWS | Amazon Databases |

| GCP | Google Databases |

Summary

In this article, we reviewed five modern data platforms. I hope the architectural discussion of these technologies gave a broad knowledge of their use. At this point, let's review what we learned.

First, relational databases have been around since the 1970s. They are used in many different applications. For instance, FedEx shipping software uses the express edition of Microsoft SQL server to store data locally. Relational databases use ANSI SQL to manage access, objects, and data. The main drawback of relational databases is that they scale vertically. If you need more computing power, downtime must be scheduled to add hardware. This downtime has been reduced or eliminated with cloud systems. However, there is still a limit on the total number of cores and memory a virtual machine might have.

Second, not only SQL databases (NoSQL) use different storage patterns than databases that use logical tables stored in files. The key-value pair is the easier storage pattern. A document storage engine can be simplified by considering the index column as the key and the JSON document as the value. Last, the wide column storage pattern is a two-dimensional key value store in which different record types can be stored in the same logical table. Almost all of these products support a limited version of ANSI SQL. They are very popular since they are extremely fast, can be geo-replicated, relax consistency rules between regions, and map directly to a code first application approach.

Third, graph database technology is a very niche area of computer science. The five categories of graphs are social, intent, consumption, interest, and mobile. Computer scientists have been thinking about solutions to graph problems for years. The traveling sales problem was brought up in a paper to the ACM in 1960. While these problems can be solved using brute force coding methods, the graph database supplies the end user with prebuilt algorithms. This type of database does not lend itself to OLTP and OLAP workloads.

Fourth, the spark ecosystem has become very popular with companies starting to leverage the data lake. There are four computing problems that the spark engine can solve: data engineering, streaming data, graphing problems, and machine learning. This technology solves big data problems by scaling out computing power using a cluster. The code in the spark notebooks is compiled into Java Byte code that runs on Java (Linux) Virtual Machines. Only the spark library is distributed processing aware. Using additional libraries results in the code being executed at one node, the driver. It is very important to organize your data files into quality zones. For the end users, hive tables are preferred over spark dataframes. In short, spark works well with unstructured, semi-structured, and structured data.

Fifth, the data warehouse technology started as on-premises specialty appliances. With the mass migration of new projects to the cloud, many of these products have been transitioned to cloud services. They all have a shared-nothing architecture. That means a given node or server has its own CPU, RAM, and DISK. It is very important to distribute the data evenly across the servers. Query performance can be degraded when huge amounts of data need to be shuffled from one node to another. A common storage system saves the data when the computing power is paused. Look for products that scale on demand; have a separation of computing power and disk storage; allow for replication and cloning of data; and fit your company's budget. Data warehouses work well with ingesting and aggregating large amounts of structured data. Single operations on these servers might not perform well.

Today, there are many choices when choosing a data platform for your new project. This article has given you the knowledge to narrow down your search. Happy hunting for your new data platform.

Next Steps

- Here is some additional reading:

About the author

John Miner is a Data Architect at Insight Digital Innovation helping corporations solve their business needs with various data platform solutions.

John Miner is a Data Architect at Insight Digital Innovation helping corporations solve their business needs with various data platform solutions.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2023-02-06