By: Ron L'Esteve | Updated: 2022-07-15

Problem

This article is a continuation of the two-part series on A Cloud Data Lakehouse Success Story. Please refer to Part 1 of the series to gain a deeper understanding of the existing on-premises challenges, business goals, strategy & solution approach, and the Cloud Data Lakehouse solution's capabilities. As organizations go deeper into their evaluation of cloud platform adoption, they are interested in learning more about architectural patterns and components of success. Although the solution described has been implemented on Azure, customers are also interested in understanding the capability of implementing similar architectural paradigms on other leading cloud provider platforms. Before embarking on their cloud adoption journey, customers are also interested in learning about expected business outcomes and lessons learned from similar cloud platform adoption success stories.

Solution

In this final part of the series on A Cloud Data Lakehouse Success Story, you will learn more about the architecture of a successfully implemented Cloud Data Lakehouse on the Azure Platform. You will also learn about corresponding architectures and technology components on other leading cloud platforms including AWS and GCP. Finally, you will learn about the expected business outcomes and lessons learned from this Cloud Data Lakehouse Success Story.

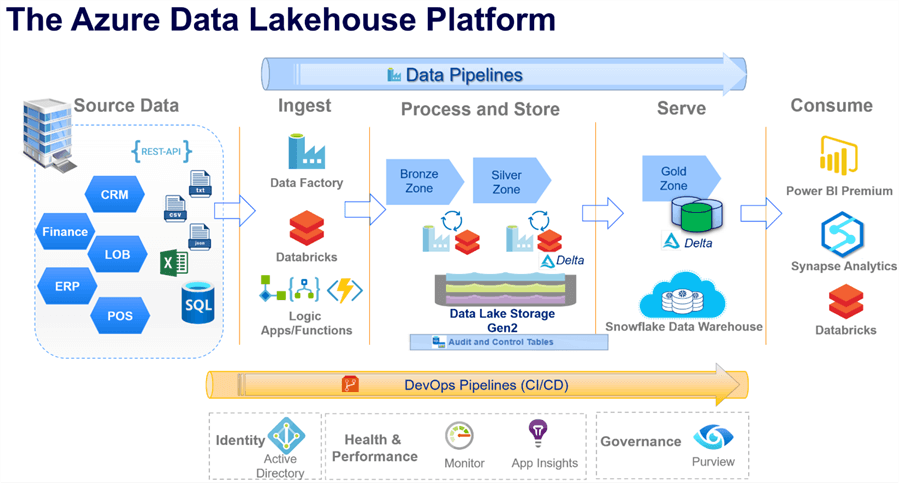

Azure Data Platform Architecture

While this solution was primarily built on the Azure Platform, as shown in the figure below, it also integrated many non-Azure native technologies including Snowflake as a data warehouse serving layer, and non-Azure DevOps CI/CD, orchestration, and credential management tools. This multi-technology integration demonstrated that a cloud provider's native services can be combined with non-native technologies, a capability that many organizations are interested in. With this Data Lakehouse in place, the organization was able to leverage Databricks, Synapse Analytics, and Power BI to build advanced analytics use cases and deliver quick value, ROI, and business outcomes.

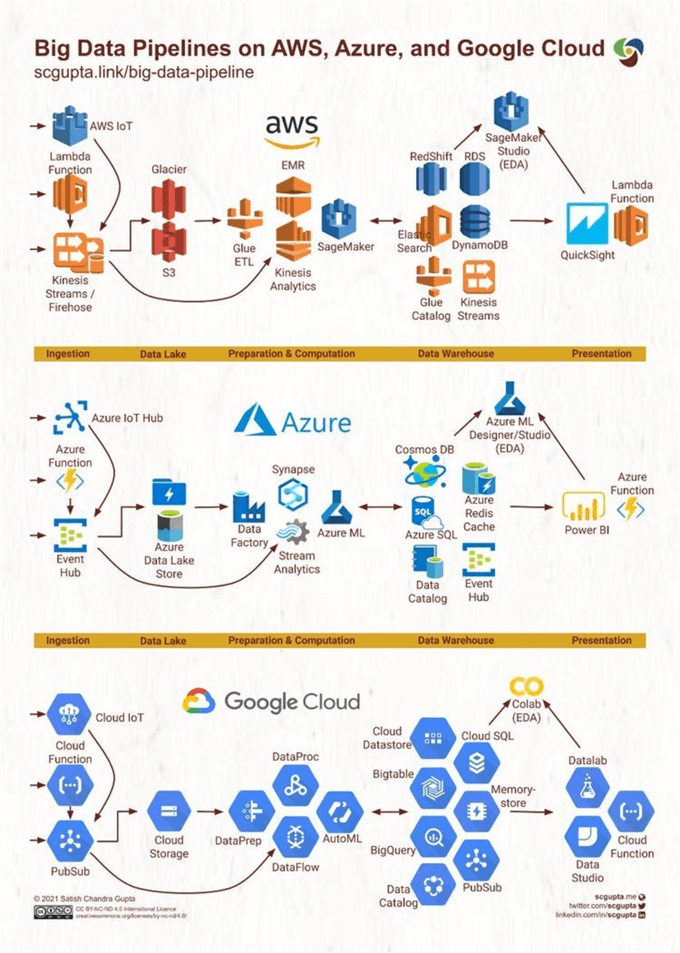

Multi-Cloud Data Platform Architecture

While this section is not intended to deep dive into the pros, cons, and trends of multi-cloud, it is worth mentioning that other major cloud providers including Amazon (AWS) and Google (GCP) have their own corresponding architectural paradigms and technologies, similar to Azure. There is an equivalent service offering for data ingestion, processing, storing, serving, and consuming across all three major cloud providers. The figure shown below illustrates this end-to-end comparison of Cloud Data Platform architectures for AWS, Azure, and GCP. This shows that although this Cloud Data Platform success story is tightly coupled with Azure, similar benefits and implementation victories can also be accomplished with other major cloud providers. Having the flexibility to choose between cloud providers for some or all of the Cloud Platform implementation components is powerful and shows the provider-agnostic capabilities that are available to evaluate, implement, and deliver value-added outcomes to business stakeholders.

Expected Business Outcomes

The new Modern Cloud Data Platform was able to instantly deliver value through real-time DevOps continuous integration and deployment best practices, along with access to Advanced Analytics capabilities. Customer Lifetime Value (CLV) Machine Learning models, which were previously outsourced to vendor consultancies, were brought in-house to be developed in Databricks, a universal Data and Advanced Analytics Platform which integrates well with multiple cloud providers. The CLV ML models helped identify the organization's most valuable and longest-lifetime customers, which led to prioritizing resources and setting spending limits for unprofitable customers to help improve the ROI of marketing programs.
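The CLV models used in this project are not described in detail here. As a rough illustration of how a CLV score can drive prioritization, the following minimal sketch uses a simple heuristic formula (average order value × purchase frequency × expected lifetime × margin) rather than an ML model. The function name, the formula choice, and all figures are assumptions made for illustration only.

```python
def simple_clv(avg_order_value, orders_per_year, expected_years, margin):
    """Heuristic CLV: expected gross profit over the customer's lifetime.

    This is a textbook-style approximation, not the ML approach used
    in the project, and every input here is a hypothetical value.
    """
    return avg_order_value * orders_per_year * expected_years * margin


# Hypothetical customers: (avg order value, orders/year, years, margin)
customers = {
    "customer_a": (50.0, 12, 5, 0.5),
    "customer_b": (200.0, 1, 2, 0.25),
}

# Score every customer, then rank from most to least valuable.
scores = {name: simple_clv(*inputs) for name, inputs in customers.items()}
ranked = sorted(scores, key=scores.get, reverse=True)

for name in ranked:
    print(name, scores[name])
```

A ranking like this is what allows marketing spend to be weighted toward high-value customers and capped for low-value ones.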

With Databricks' Solution Accelerators, a number of Machine Learning (ML) notebooks supplemented and accelerated the model development efforts. Data was sourced from both on-premises and cloud systems through re-usable ELT pipelines driven by Audit, Balance, and Control (ABC) Frameworks which landed data into a Medallion Architecture with multiple zones. Snowflake served as a data warehouse with consumption-ready data. The Data Lake Gold zone contained curated, consumption-ready data in Delta format, an open-source data storage format that addresses the fundamental principles of ACID compliance within a Data Lake. With re-usable data engineering pipelines, the client was empowered to build self-service data engineering workloads and robust reporting capabilities through self-service BI. The overall impact of the solution included a highly secure, performant, and cost-efficient infrastructure, along with quicker time to insights resulting from near-real-time advanced analytics, CI/CD, and self-service BI.
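To make the Audit, Balance, and Control idea more concrete, here is a minimal sketch of what one such check might look like between two zones of a Medallion Architecture. It is not the framework used in the project: the `LoadAudit` schema, the function names, the pipeline names, and the row counts are all hypothetical, and a real framework would typically persist the audit records and reconcile more than row counts.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class LoadAudit:
    """One audit record for a single pipeline run (hypothetical schema)."""
    pipeline: str
    source_rows: int
    target_rows: int
    loaded_at: str
    balanced: bool


def balance_check(pipeline, source_rows, target_rows):
    """Audit and balance: record both counts and whether they reconcile."""
    return LoadAudit(
        pipeline=pipeline,
        source_rows=source_rows,
        target_rows=target_rows,
        loaded_at=datetime.now(timezone.utc).isoformat(),
        balanced=(source_rows == target_rows),
    )


def control_gate(audit):
    """Control: decide whether the load may be promoted to the next zone."""
    return "promote" if audit.balanced else "quarantine"


# Illustrative numbers only, not taken from the project.
ok = balance_check("customers_bronze_to_silver", 1000, 1000)
bad = balance_check("orders_bronze_to_silver", 1000, 990)

print(ok.pipeline, control_gate(ok))
print(bad.pipeline, control_gate(bad))
```

Keeping the check separate from the gate means the same audit record can feed both pipeline control and reporting.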

Lessons Learned

As your foundational Cloud Platform MVP project comes to a close, every player and stakeholder of the project team will have learned a valuable lesson that is worth sharing in a retrospective discussion. Different personas bring their perspective on lessons learned from a foundational Cloud Platform implementation MVP Project. The perspective explained in this section is from a Data Consultant's point of view.

1 - Clearly outline your MVP Project's risks, dependencies, goals, and timelines.

A Minimum Viable Product (MVP) is merely a pilot which introduces the foundational Cloud Platform to the organization and demonstrates immediate business value by solving a specific use case defined by business stakeholders. Stakeholders will obviously be interested in a mature Cloud Platform; however, this may take many months or even years to achieve. When outlining your MVP project's intended deliverables, be sure to factor in any potential risks and dependencies while setting clear timelines and milestones along the way. Most likely, you will have a Project Manager or Scrum Master assigned to the project who can help with this, keep track of project status, and report out to business stakeholders at least weekly, if not more frequently. Once the MVP project is delivered and proves out the value generated, future iterations and roadmap plans for the Cloud Platform will continue to mature the platform and onboard additional products, customers, and use cases.

2 - Evangelize Cloud-native technologies that play well with each other.

There is room for iterative improvements post-MVP. During an MVP, given the innovative nature of the digital transformation pilot project, organizational leadership may require integrating the foundational Cloud Platform with existing Cloud or on-premises tools used for reporting, source systems, credential management, data governance, orchestration, continuous integration and deployment, and more. While there is a corresponding cloud solution for each one of these, there is value in leveraging the MVP project to test integration capabilities with one or many of these existing tools. On the other hand, since this is a digital innovation and transformation project, there is also value in showing how easily similar cloud-native technologies integrate with each other, and in demonstrating improved performance, lowered costs, simpler maintenance, less custom development time, and increased customer support. By evangelizing the capabilities of these cloud-native technologies early, you may just obtain buy-in to go forth with implementing a fully cloud-native platform for the MVP solution. If the direction is to proceed with integrating the Cloud Platform with technologies that are already being used at the organization, then ensure that the relevant skills and capacity are available to be onboarded to the project. Even for this scenario, there is value in evangelizing cloud-native technologies if issues with the existing tools are uncovered.

3 - Architect an MVP solution that addresses immediate business needs to deliver value.

Before embarking on the Cloud Platform adoption journey, an organization might have been building a backlog of business questions they want to answer to deliver value and outcomes. This might include building a dimensional data model, designing complex reporting visualizations, or sharing data with vendors efficiently. Deep value can also be uncovered from predictive and prescriptive analytics, so another candidate is a Data Science use case that delivers ML propensity models, which support the analysis of a customer's future behavior based on historical trends. By carefully understanding the immediate business needs, the MVP will champion a targeted solution that consistently drives a roadmap towards answering questions to deliver value and outcomes for the organization.

4 - Carefully design and implement security models which protect PII data.

Most descriptive, diagnostic, predictive, and prescriptive analytics require access to sensitive data such as customer information. The Cloud Platform must therefore be designed to meet regulatory and organizational data governance requirements. Security models which protect sensitive data should be treated as a priority, and they require careful design and implementation so that the foundational platform is securely built and governed as it scales.
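One common building block of such a security model is pseudonymizing PII columns before data reaches analysts. The following is a minimal sketch of that idea using a keyed hash from the Python standard library. It is not the security model used in the project: the column names, the `protect_record` helper, and the hard-coded key are hypothetical, and in practice the key would come from a credential management tool rather than source code.

```python
import hashlib
import hmac

# Hypothetical list of columns classified as PII.
PII_COLUMNS = {"email", "phone"}


def pseudonymize(value, key):
    """Replace a value with a keyed SHA-256 digest (deterministic per key)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def protect_record(record, key):
    """Return a copy of the record with PII columns pseudonymized."""
    return {
        col: pseudonymize(str(val), key) if col in PII_COLUMNS else val
        for col, val in record.items()
    }


# Assumption: a real key would be fetched from a secrets store.
demo_key = b"demo-key-for-illustration-only"
raw = {"customer_id": 42, "email": "pat@example.com", "segment": "gold"}
protected = protect_record(raw, demo_key)

print(protected["customer_id"], protected["segment"])
print(protected["email"])
```

Because the digest is deterministic for a given key, pseudonymized columns can still be used for joins and counts without exposing the underlying values.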

5 - Clearly estimate and monitor costs to prevent overcharges.

While certain components of a foundational Cloud Platform are intended to save costs, others can quickly rack up costs that are far greater than existing on-premises infrastructure costs, and get the attention of concerned business stakeholders. Cloud providers offer a variety of cost analysis and monitoring tools to help with anticipating and controlling costs. Pricing calculators support cost analysis from the perspective of estimating costs per cloud technology across a number of optional criteria such as 'pay as you go vs. reserved', estimated usage duration, anticipated storage and compute, customer support service level agreements (SLAs), and more. Pricing calculators are great at estimating costs for a specified duration. As the cloud platform gets built, cost analysis and monitoring tools can be used to prevent overcharges. Custom chargeback processes and models can be implemented to ensure that various departments would be able to segregate their various charges by the applicable cost centers. With out-of-the-box and custom cost analysis capabilities, cost thresholds and alerts can be scheduled for delivery to platform administrators. Additionally, cost metrics can be integrated with reporting dashboards. Compute and storage costs can also be contained by creating appropriate security models and policies which govern access to these storage and compute resources. By having a sound handle on costs, your MVP project will always remain in 'Green' status and your stakeholders will remain happy.
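The chargeback and threshold ideas described above can be sketched in a few lines. This is a minimal illustration assuming usage data has already been exported as rows tagged with a cost center. The row layout, the function names, and all amounts are hypothetical; in practice the cloud provider's own cost management tooling would supply the data and deliver the alerts.

```python
from collections import defaultdict


def chargeback(usage_rows):
    """Sum cost per cost center from exported usage rows."""
    totals = defaultdict(float)
    for row in usage_rows:
        totals[row["cost_center"]] += row["cost"]
    return dict(totals)


def over_budget(totals, budgets):
    """Return cost centers whose spend exceeds their budget threshold.

    Cost centers with no configured budget are never flagged.
    """
    return sorted(
        cc for cc, spent in totals.items()
        if spent > budgets.get(cc, float("inf"))
    )


# Hypothetical exported usage rows and monthly budgets.
usage = [
    {"cost_center": "marketing", "service": "compute", "cost": 120.0},
    {"cost_center": "marketing", "service": "storage", "cost": 80.0},
    {"cost_center": "finance", "service": "compute", "cost": 40.0},
]
budgets = {"marketing": 150.0, "finance": 100.0}

totals = chargeback(usage)
alerts = over_budget(totals, budgets)

print(totals)
print("Over budget:", alerts)
```

The same totals can feed a reporting dashboard, while the alert list is what would be sent to platform administrators.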

Summary

Cloud adoption certainly has several benefits. Many of the leading cloud providers that are gaining adoption and market share in the space offer compatible services and tools which enable Cloud Platforms ranging from foundational to mature. Becoming a cloud data-driven organization is an investment, requiring commitment from various stakeholders. The modern cloud platform can solve problems experienced with legacy platforms, software, and infrastructure to enable growth and promote self-service, enhanced capabilities, improved performance, and lowered costs.

A foundational Data Lakehouse empowers the journey to maturity on single-cloud, hybrid-cloud, or multi-cloud platforms with its bleeding-edge capability offerings. As customers begin considering the cloud as their new home platform, most leading cloud providers offer a Cloud Adoption Plan for defining their cloud strategy and migration plan in detail. As the journey begins, a clearly defined MVP project which demonstrates immediate business value by solving for certain use cases and desired outcomes will serve as an entry point for organizations that are strongly considering fully adopting the cloud as their new home. As the platform delivers value, it can be matured to the point of taking on more complex use cases such as advanced analytics, ML, and AI workloads, and can continue delivering business outcomes by solving predictive and prescriptive analytics challenges.

In this article, you learned about a Cloud Data Lakehouse success story, along with real-world lessons learned for organizations that are embarking on their cloud journey and realizing tremendous benefits.

Next Steps

- Read more about the Differences and Comparisons between Power BI and Tableau

- Explore Databricks' Solution Accelerator for Customer Lifetime Value (CLV)

- Read more about How to Get Started with Databricks Machine Learning

- Read more about the Databricks SQL Workspace and Data Science & Engineering Workspace

- Read more about the Benefits and Limitations of Multi-Cloud

- Read more about AWS vs Azure vs GCP

About the author

Ron L'Esteve is a trusted information technology thought leader and professional Author residing in Illinois. He brings over 20 years of IT experience and is well-known for his impactful books and article publications on Data & AI Architecture, Engineering, and Cloud Leadership. Ron completed his Master's in Business Administration and Finance from Loyola University in Chicago. Ron brings deep tec

This author pledges the content of this article is based on professional experience and not AI generated.

