By: Rick Dobson | Comments (9) | Related: 1 | 2 | 3 | More > Import and Export

Problem

My company uses a vendor-supplied application for loading data into a specialized SQL Server OLTP database that has a matching highly integrated enterprise application. The input application has a large set of data layouts for inputting different kinds of records into the OLTP database. The wide range and sometimes complex layout for inputting text files makes it difficult for our company to track down the source of errors, such as data issues, bad data formats, or even faulty application configuration settings. Are there any simple SQL Server T-SQL capabilities for validation of text files, such as those required by our input application?

Solution

The BULK INSERT statement is especially designed for inputting bulk data sources, such as large text files, into a SQL Server table. After the contents of the text file are in a table, you have the full power of T-SQL available for validating the contents of the text file that is copied into the table. This tip illustrates with a pair of easy-to-follow examples how to take advantage of the BULK INSERT statement for inputting text files into a SQL Server table and then using T-SQL to validate the input from one or more text files that are imported to SQL Server.

The BULK INSERT statement is a moderately rich T-SQL statement with an array of parameters to assist you with configuring its functionality. However, the statement's default settings are appropriate without any tweaking for inputting a text file. Therefore, after specifying a database to hold a text file's contents, all you have to do is indicate the name and path to the file to be imported to a target table in SQL Server.

Steps for inputting a text file to a SQL Server table

With three easy steps, you can import a text file into a SQL Server instance via the BULK insert command.

- First, designate the database holding the target table to be populated with the contents of the text file.

- Second, create a fresh copy of the table to receive the file's contents.

- Third, invoke the BULK INSERT statement so that it points at text file (C:\foo\SampleTextFile.txt) as its input and the target table as its output.

The following short script illustrates the application of these three steps. The USE statement designates the MSSQLTips database as the container for the target table to hold the text file's contents.

There are at least a couple of different strategies to prepare a target table for holding the text file's contents. In the following script, an EXISTS operator tests for the existence of a previously created target table (the ImportedFileTable table in the default schema, such as dbo, of the MSSQLTips database). If the object exists, the table is dropped so that a fresh copy can be created by the following CREATE TABLE statement. The target table in this example has a single column up to the maximum width for a varchar column. This approach of stuffing each line from a text file into a single column is especially appropriate when the location of field values are positionally specified within the text file. Non-default BULK INSERT configuration settings can accommodate requirements when input column values are delimited by a special character, such as a comma (,) or a pipe (|).

USE MSSQLTips

GO

-- Remove prior ImportedFileTable

if EXISTS (SELECT * FROM sys.objects WHERE name = 'ImportedFileTable' and TYPE = 'u')

DROP TABLE ImportedFileTable

-- Create ImportedFileTable

CREATE TABLE ImportedFileTable(

textvalue varchar(max)

)

GO

-- Import text file

BULK INSERT ImportedFileTable

FROM 'C:\foo\SampleTextFile.txt'

Validating a Text File in a SQL Server Table

After you get your text file into a SQL Server table, then you can use queries to verify the contents within the table. The queries that you run depend on the specifications for the input file and type of test that your organization thinks is most appropriate.

The following pair of scripts illustrates two very simple data profiling scripts for the contents of a text file in the ImportedFileTable. The first query lists the rows in the table. Whether or not you display all the rows in a table will likely depend on the number of rows in a table. However, it is often helpful to see some subset of rows when attempting to validate data.

The second query counts the rows based on the first two characters of a row. In the context of the sample application, these first two characters denote the record type. For example, 00 is for a header row and 99 is for a trailer row.

-- List file contents SELECT * FROM ImportedFileTable -- Report count of record types SELECT LEFT(textvalue,2), COUNT(*) record_type_count FROM ImportedFileTable GROUP BY LEFT(textvalue,2) ORDER BY LEFT(textvalue,2)

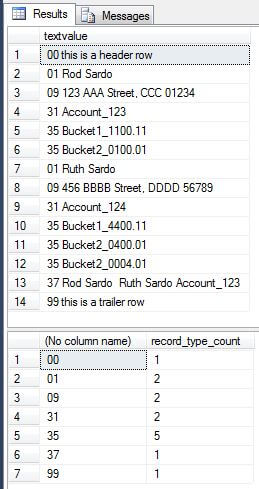

The following screen shot displays the two result sets output by the preceding script. Notice from the first result set that each line from the input file starts with a two-character number. This number designates the type of record type: 01 is for a record with information about a debtor, 31 is for information about a specific debt, 35 is for information about amounts associated with a debt, and 37 references the owners for a debt when there is more than one owner of a debt.

The second result set shows the count for each record type. It is common to confirm transfers by verifying that the number of output records matches the number of input records.

Validating a Change to a Text File in a SQL Server Table

Let's say that the creator of the input file incorrectly specified a 37 record for account number 123. This situation could be fixed by creating a new input file. The new input file will be without a 37 record for account number 123. Otherwise, the two files will be identical. By a relatively simple extension of the approach for inputting the first file, you can load the corrected file and even verify that it satisfies the requirement of no 37 record.

The following code excerpt validates the change to the text file. The script starts by preparing a second table for the second corrected text file. Then, it uses the BULK INSERT statement to load the file (C:\foo\SampleTextFile_wo_37.txt) into the table (ImportedFileTable_wo_37).

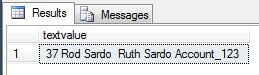

Next, an EXCEPT operator sandwiched between two SELECT statements returns all rows from the original file that are missing from the second corrected file. The screen shot after the following script verifies that there is just one record in the original file which is missing from the second file. This missing record is the erroneous 37 record for account number 123.

-- Drop and re-created wo_37 file

if EXISTS (SELECT * FROM sys.objects WHERE name = 'ImportedFileTable_wo_37' and TYPE = 'u')

DROP TABLE ImportedFileTable_wo_37

-- Create a table for the second text file

CREATE TABLE ImportedFileTable_wo_37(

textvalue varchar(max)

)

GO

-- Import second, corrected file

BULK INSERT ImportedFileTable_wo_37

FROM 'C:\foo\SampleTextFile_wo_37.txt'

-- Rows in SampleTextFile.txt missing from SampleTextFile_wo_37.txt

SELECT * FROM ImportedFileTable

EXCEPT

SELECT * FROM ImportedFileTable_wo_37

Next Steps

- The BULK INSERT statement is both a simple and powerful tool for importing text files into a SQL Server database. This tip focuses on how easy it can be to perform common kinds of data validation.

- The associated file for this tip includes both the T-SQL code as well as same text files used in the tip's description. For security reasons, you'll need to set up your own foo directory or a directory of your choice for storing the text files.

- This tip closes with a link for learning more about the BULK INSERT command. The MSDN site (http://msdn.microsoft.com/en-us/library/ms188365.aspx) takes its classic approach of systematically describing all the configuration parameters along with a few selected examples.

- Check out these related resources:

About the author

Rick Dobson is an author and an individual trader. He is also a SQL Server professional with decades of T-SQL experience that includes authoring books, running a national seminar practice, working for businesses on finance and healthcare development projects, and serving as a regular contributor to MSSQLTips.com. He has been growing his Python skills for more than the past half decade -- especially for data visualization and ETL tasks with JSON and CSV files. His most recent professional passions include financial time series data and analyses, AI models, and statistics. He believes the proper application of these skills can help traders and investors to make more profitable decisions.

Rick Dobson is an author and an individual trader. He is also a SQL Server professional with decades of T-SQL experience that includes authoring books, running a national seminar practice, working for businesses on finance and healthcare development projects, and serving as a regular contributor to MSSQLTips.com. He has been growing his Python skills for more than the past half decade -- especially for data visualization and ETL tasks with JSON and CSV files. His most recent professional passions include financial time series data and analyses, AI models, and statistics. He believes the proper application of these skills can help traders and investors to make more profitable decisions.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips