By: Jeffrey Yao | Comments (2) | Related: More > Import and Export

Problem

I frequently need to import data from some CSV files into my various databases on different systems using BCP.exe or BULK Insert. Sometimes I just need to import a few fields of a file for one table into a database and a few other fields for a few table columns in another table (in another system) out of a same CSV file with 30+ fields, I usually have to generate a Format file with bcp and then modify the format file to adjust to my needs. But this process is tiresome and error-prone especially when the number of source file fields is large, like 40+, is there any easy way to address this issue? One thing to add is that all my csv files are pure ASCII files.

Solution

In SQL Server Books Online (BOL), there is a detailed example about using a format file to map table columns to the data file fields. I personally do not like to use XML format files, because of two reasons as stated in BOL and shown below (see section "Using an XML Format File" in BOL for more info).

- You cannot skip a column when you are using BCP command or a BULK INSERT statement to import data directly

- You can use Insert .. SELECT .. from OPENROWSET(BULK..) with XML format file, but you need to explicitly provide the column names in the SELECT list, this is an unnecessary overhead "cost" from a coding perspective.

So for these reasons, I prefer a non-XML format file.

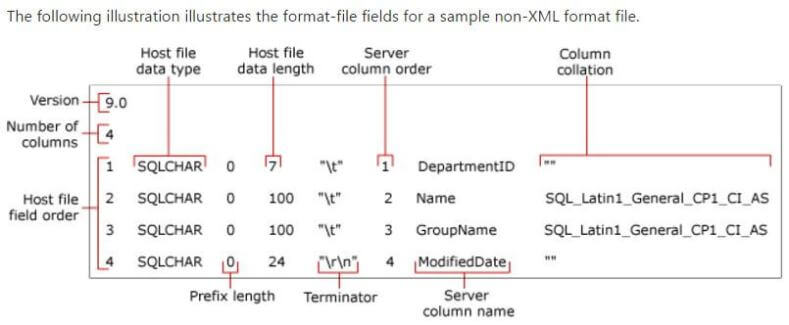

The detailed format file structure specification can be found from Microsoft Books Online here. The following image is copied from the link.

Figure 1 - Format Fields for Sample Non-XML Format File

I just want to make a few points here. The assumption is that the format file is an ASCII delimited source data file, not a source file of SQL Server native data.

- The rows in the format file do not necessarily need to be vertically aligned.

- The blank spaces between fields in the format file are flexible, i.e. you can have [X] blank spaces, where X can be any number larger than 1.

- [Server column name] field is not important in that you can put a fake name there, but the [Server column order] field is important.

- Since my csv source files are not fixed-length for each field, I can set [Host file data length] and [Prefix length] fields to 0.

Now to generate a non-XML format file, here is the "algorithm" (please refer to Figure 1 for component names in the format file).

- [Version] can be set to any number >= 9.0, so any bcp utility of SQL Server 2005+ can use this format file. I will set it to 10.0 as I do not have any SQL Server 2005 in my environment.

- [Number of columns]=# of fields in the data source file, we will calculate the # of fields by reading the first line of the source file.

- [Host file field order]=1 to [Number of columns].

- [Host file data type]='SQLCHAR', this is a fixed value as we are dealing with ASCII data file only.

- [Prefix length]=0, as we are dealing with ASCII data file only.

- [Host file data length]=0 as said in BOL "If you are creating a non-XML format file for a delimited text file, you can specify 0 for the host file data length of every data field.".

- [Terminator]= value from an input parameter, such as "|" or comma ",".

- [Server column order]: 0 = the column is ignored, N = the nth column of the destination table.

- [Server column name]: the target table's column name. This seems not used by BCP utility, instead, BCP utility uses [Server column order] to determine the column position.

- [Column collation]: only used for columns with char or varchar datatype, default to the collation setting of the database.

We also need to design the input parameters to generate a desired non-XML format file and this is actually simple and straight-forward.

Here are the parameters:

- [ServerInstance]: target SQL Server instance for data importation, default is the current machine name

- [Database]: target database for data importation

- [Schema]: schema name of the target table for data importation

- [Table]: target table name

- [FieldTerminator]: separator for fields in the source data file, defaults to '|', can be anything such as ';' or ',' or '#'

- [Mapping]: Field sequence number mapping to table column name in the format of (Field Position Number='column name'; ...), example (1='id'; 2='firstName') etc.

- [SourceFile]: UNC path to the source data file, we need to read at least one line from this file to retrieve information such as # of fields

- [FormatFile]: the format file to be generated, this is an ASCII text file, e.g. c:\temp\MyFormatFile.fmt

Source Code

The following is the PowerShell code to create a format file:

# Function: to generate a BCP format file so we can populate

# some destination table columns with some fields in a source data file

# assume you have SQL Server PS module (sqlps) installed, this sqlps module is included in sql server 2012+ version.

push-location;

import-module sqlps -DisableNameChecking;

Pop-Location;

#requires -version 3.0

function Create-BCPFormatFile {

<#

.Synposis

Generate a bcp format file based on parameter values

.Description

Generate a BCP format file based on source data file and destination table so we can bulk insert into some destination table columns with some corresponding fields in a source data file

.Parameter

ServerInstance: target SQL Server instance, string value, default to current Machine Name

Database: target database name, string value, mandatory.

Schema: schema name of the target table, string value, default to 'dbo'

Table: target table name, string value, mandatory

Mapping: a hashtable to link the field in data source file with the table column

Source File: UNC path for the source file

Format File: a UNC path for the generated format file, which can be used by BCP directly.

.Example

Create-BCPFormatFile -database tempdb -table t -Mapping @{2='account'; 4='balance'; 5='credit'} -FormatFile 'C:\temp\MyFmt.fmt' -SourceFile 'C:\temp\source.txt'

#>

[CmdletBinding()]

param (

[Parameter ( Mandatory=$False, HelpMessage='SQL Server Instance where the Destination table resides')]

[string] $ServerInstance = $env:ComputerName,

[Parameter ( Mandatory=$true)]

[string] $Database,

[parameter (mandatory=$false)]

[string] $schema='dbo',

[parameter (mandatory=$True)]

[string] $table,

[parameter (mandatory=$false, HelpMessage="Field Terminator in the source data file, default to | ")]

[string] $FieldTerminator='|',

[parameter (Mandatory=$true, HelpMessage="Field position number mapping to Column Name, such as (1='id', 2='firstname')etc")]

[hashtable] $Mapping = @{},

[parameter (Mandatory=$true, HelpMessage="The full UNC name of the source data file, such as c:\temp\Source.csv")]

[string] $SourceFile,

[parameter (Mandatory=$true, HelpMessage="The full UNC name of the format file, such as c:\temp\MyFmt.fmt")]

[string] $FormatFile

)

if ( -not $ServerInstance.contains('\'))

{ $ServerInstance = $ServerInstance+"\default";}

$svr = get-item sqlserver:\sql\$serverInstance;

$db = $svr.Databases.Item($Database);

$tbl = $db.tables.Item($table, $schema);

$columns = $tbl.Columns.name.toUpper();

#find the longest column name and get its lenth

[int] $max_col_len = 0;

$columns | % { if ($_.length -gt $max_col_len) { $max_col_len = $_.length;}};

$max_col_len += 2; # for adding additional two spaces here

#find the database collation

[string]$db_collation = $db.Collation;

#find the column numbers

[int] $cnt = (get-content -totalcount 1 -Path $SourceFile).split($FieldTerminator).count

[string]$space_6 = " "*6;

[string]$col_name = '';

[int] $col_pos = 0;

[string] $col_collation='';

if (test-path -Path $FormatFile)

{ Remove-Item -Path $FormatFile -Force;}

"10.0" | Out-File -FilePath $FormatFile -Force -encoding ascii ; # change "10.0" = SQL 2K8, "9.0"= SQL 2K5, "12.0"=SQL2012 etc

"$cnt" | Out-File -FilePath $FormatFile -Append -encoding ascii;

for($i =1; $i -le $cnt; $i++)

{

[string]$i_str = ($i.ToString() + ' '*4).Substring(0,4);

if ($Mapping.Keys -contains $i)

{

#check the column sequence position and the data type of the corresponding column

if ($columns -icontains $Mapping[$i])

{

[string]$val = $Mapping[$i].ToString().ToUpper();

$col_pos = $columns.IndexOf($val)+1; #Arrary is 0-based while in format file, column position is 1 based

$col_name = $Mapping[$i];

#now we need to find the char data type,

if ($tbl.columns[$col_pos-1].datatype.Name.Contains('char'))

{

$col_collation = $db_collation;

}

else

{

$col_collation = '""';

}

}

else

{ write-error "CANNOT find column $($Mapping[$i]), please correct your input parameter `$Mapping before continues";

return;

}

#make $col_name to be of the same length

$col_name = ($col_name + ' '*$max_col_len).Substring(0, $max_col_len);

#make $col_pos to be of the same lentgh

[string]$col_pos_str = ($col_pos.ToString() + ' '*4).Substring(0,4);

if ($i -lt $cnt)

{ "$i_str $space_6 SQLCHAR $space_6 0 $space_6 0 $space_6 ""$FieldTerminator"" $space_6 $col_pos_str $space_6 $col_name $space_6 $col_collation" | Out-File -FilePath $FormatFile -Append -encoding ascii; }

else # $i -eq $cnt

{ "$i_str $space_6 SQLCHAR $space_6 0 $space_6 0 $space_6 ""\r\n"" $space_6 $col_pos_str $space_6 $col_name $space_6 $col_collation" | Out-File -FilePath $FormatFile -Append -encoding ascii; }

} # $Mapping.Keys -contains $i

else

{

$col_name = ('xyz' + ' '*$max_col_len).Substring(0, $max_col_len) #make it to be 32 characters long

if ($i -lt $cnt)

{"$i_str $space_6 SQLCHAR $space_6 0 $space_6 0 $space_6 ""$FieldTerminator"" $space_6 0 $space_6 $col_name $space_6 `"`"" | Out-File -FilePath $FormatFile -Append -encoding ascii; }

else

{"$i_str $space_6 SQLCHAR $space_6 0 $space_6 0 $space_6 ""\r\n"" $space_6 0 $space_6 $col_name $space_6 `"`"" | Out-File -FilePath $FormatFile -Append -encoding ascii; }

}

}#end for loop

} # Create-BCP_FormatFile

Here is an example, but first let's prepare the environment.

We first create a target table as follows:

use tempdb

if object_id('dbo.t', 'U') is not null

drop table dbo.t;

go

create table dbo.t (id int identity --no import

, account varchar(200), balance decimal(10,2) -- yes, import

, credit bit default 1 -- no import

, Dt_transaction datetime -- yes, import

, OperationUser varchar(60) default suser_sname() -- yes, import

);

GO

Second, let's say we have the following CSV source data that needs to be imported, the data file is at c:\temp\source.txt.

col_1|account|col_2|balance|credit|Date|Col_3|col4|col5|col6|col7|col8|col9|name no_use_1|A-001|no_use_2|111.01|1|2016-01-01|no_use_3||2||||xyz|jeff no_use_1|B-002|no_use_2|222.02|0|2016-02-02|no_use_3||3||||abc|jane no_use_1|C-003|no_use_2|333.03|1|2016-03-03|no_use_3||11|||||bob no_use_1|D-004|no_use_2|444.04|0|2016-04-04|no_use_3||222||||def|dell

Our requirements are as follows:

- Populate table [tempdb].[dbo].[t] for columns [account], [balance], [dt_transaction] and [OperationUser]

- The source data will come from c:\temp\source.csv from columns 2, 4, 6 and 14 respectively

So here are the detailed steps:

Run the following command to generate a format file:

Create-BCPFormatFile -database tempdb -table t -Mapping @{2='account'; 4='balance'; 6='dt_transaction'; 14='OperationUser'} -FormatFile 'c:\temp\Import-T.fmt' -SourceFile 'C:\temp\source.txt'

Once the format file c:\temp\import-t.fmt is generated, we can run the following query in SSMS:

bulk insert tempdb.dbo.t from 'C:\temp\source.txt' with (formatfile='C:\temp\import-t.fmt', firstrow=2);

Or if we want to run bcp.exe utility, we can do this in a command window:

bcp dbo.t in c:\temp\source.txt -S . -d tempdb -T -f c:\temp\import-t.fmt

Here is what the c:\temp\import-t.fmt looks like:

10.0 14 1 SQLCHAR 0 0 "|" 0 xyz "" 2 SQLCHAR 0 0 "|" 2 account Latin1_General_CI_AS 3 SQLCHAR 0 0 "|" 0 xyz "" 4 SQLCHAR 0 0 "|" 3 balance "" 5 SQLCHAR 0 0 "|" 0 xyz "" 6 SQLCHAR 0 0 "|" 5 dt_transaction "" 7 SQLCHAR 0 0 "|" 0 xyz "" 8 SQLCHAR 0 0 "|" 0 xyz "" 9 SQLCHAR 0 0 "|" 0 xyz "" 10 SQLCHAR 0 0 "|" 0 xyz "" 11 SQLCHAR 0 0 "|" 0 xyz "" 12 SQLCHAR 0 0 "|" 0 xyz "" 13 SQLCHAR 0 0 "|" 0 xyz "" 14 SQLCHAR 0 0 "\r\n" 6 OperationUser Latin1_General_CI_AS

Note those 'xyz' strings are just a place holder for columns that do not have corresponding source fields. Actually, you can put any string there.

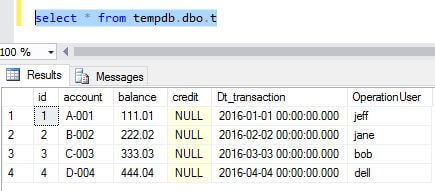

We will get the following result after the Bulk Insert.

Summary

This tip provides a quick way to generate a customized BCP format file when you need to extract a few source fields into a few columns of a target table. It currently only deals with ASCII type source file, which is arguably the most common type, not the native format source file.

This was used and tested with SQL Server 2008 and higher versions.

Next Steps

You can read the following articles to better understand how to use bulk insert methods in a SQL Server environment:

- BCP XML Format Files with SQL Server 2005

- Adding more functionality to SQL Server BCP with PowerShell

- Different Options for Importing Data into SQL Server

About the author

Jeffrey Yao is a senior SQL Server consultant, striving to automate DBA work as much as possible to have more time for family, life and more automation.

Jeffrey Yao is a senior SQL Server consultant, striving to automate DBA work as much as possible to have more time for family, life and more automation.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips