By: Rajendra Gupta | Comments (9) | Related: > SQL Server 2017

Problem

In my previous tip, SQL Server 2017 Differential Backup Changes I outlined how to effectively issue a differential backup based on the percentage changed in the data since the last full backup. SQL Server 2017 RC2 also offers changes for transaction log backups as well. In this tip we will explore how to effectively issue transaction log backups with SQL Server 2017.

Solution

SQL Server DBAs often keep databases in the Full Recovery Model in order to issue transaction log backups. This helps to be able to recover the database using point in time recovery.

Normally we create a SQL Server Agent Job to run at a specific duration depending upon the criticality of the database. However, as you know the number of transactions or database load is not the same at all times. Sometimes the database is very busy causing the transaction log to automatically grow frequently thus increasing the virtual log file count. Since our transaction log backup schedule is based on the interval i.e. every 1 minute or every 15 minutes, it is independent of the transaction log activity. Due to this we see a variation in the size of the transaction log backups. The transaction log backup size also varies due to the transactions happening during that time interval. The problem with this approach is that it doesn't take into consideration transaction log activity, so we see frequent auto growth events occurring in the database.

Pre SQL Server 2017 Transaction Log Backups

Let's walk through an example of transaction log backups prior to SQL Server 2017. First let's create a database and insert some records into a table.

CREATE DATABASE SmartTrasactionLogBackup

GO

USE [SmartTrasactionLogBackup]

GO

/****** Object: Table [dbo].[TestTable] ******/

SET ANSI_NULLS ON

GO

SET QUOTED_IDENTIFIER ON

GO

CREATE TABLE [dbo].[Customers]

(

[custid] [int] IDENTITY(1,1) NOT NULL,

[custname] [nvarchar](50) NOT NULL

) ON [PRIMARY]

GO

INSERT INTO Customers VALUES ('MSSQLTIPS')

GO 1000

Let's take a Full backup followed by a transaction log backup.

--Full database backup BACKUP DATABASE [SmartTrasactionLogBackup] TO DISK = N'C:\Backup\SmartTrasactionLogBackup.bak' WITH FORMAT, NAME = N'SmartTrasactionLogBackup-full Database Backup', STATS = 10 GO --Transaction log backup BACKUP LOG [SmartTrasactionLogBackup] TO DISK = N'C:\Backup\SmartTrasactionLogbackup.trn' WITH FORMAT, NAME = N'SmartTrasactionLogBackup-log Database Backup', SKIP, NOREWIND, NOUNLOAD, STATS = 10 GO

In the below script I will run the transaction log backup every 1 minute. During this time frame the workload will vary i.e. the database will be idle sometimes while very active at others.

SET NOCOUNT ON WHILE (1=1) BEGIN BACKUP LOG [SmartTrasactionLogBackup] TO DISK = N'C:\Backup\SmartTrasactionLogbackup.trn' WITH FORMAT, NAME = N'SmartTrasactionLogBackup-log Database Backup', STATS = 10 WAITFOR DELAY '00:01:00' --Timer to run the script for backup every 1 min end GO

I have waited for some log backups to finish and then started this transaction to insert records.

INSERT INTO Customers VALUES ('MSSQLTIPS')

GO 10000000

Before we move on, let me introduce a new SQL Server 2017 DMF - sys.dm_db_log_stats. This DMF returns summary level attributes and information on transaction log files for databases.

Below is the syntax for sys.dm_db_log_stats.

sys.dm_db_log_stats ( database_id )

This DMF provides very important and useful output. Some of the important output columns are:

- recovery_model: Current database recovery model i.e. Full, Bulk-logged, Simple.

- total_log_size_mb: Total transaction log size in MB.

- active_log_size_mb: Total active transaction log size in MB.

- active_vlf_count: Total number of active VLFs in the transaction log.

- log_truncation_holdup_reason: Log truncation holdup reason. The value is same as log_reuse_wait_desc column of sys.databases.

- log_backup_time: Last transaction log backup time.

- log_since_last_log_backup_mb: Log size in MB since last transaction log backup LSN.

We will create a table to insert the output from our DMF every 30 seconds to see the transaction log details.

CREATE TABLE DBLogStats

(

DBname varchar(50),

Recovery_model varchar(20),

total_log_size_mb float,

active_log_size_mb float,

active_vlf_count int,

log_truncation_holdup_reason nvarchar(100),

log_backup_time datetime,

log_since_last_log_backup_mb int

)

GO

SET NOCOUNT ON

WHILE (1=1)

BEGIN

INSERT INTO DBLogStats

SELECT

DB_NAME(database_id) AS DBName,

recovery_model,

total_log_size_mb,

active_log_size_mb,

active_vlf_count,

log_truncation_holdup_reason,

log_backup_time,

log_since_last_log_backup_mb

FROM sys.dm_db_log_stats(DB_ID())

WAITFOR DELAY '00:00:30'

END

GO

Also, I have captured the auto growth event using the following query:

DECLARE @trcfilename VARCHAR(1000);

SELECT @trcfilename = path FROM sys.traces WHERE is_default = 1

SELECT StartTime,

DB_NAME(databaseid)as DatabaseName,

Filename,

SUM ((IntegerData*8)/1024) AS [Growth in MB],

(Duration/1000)as [Duration in seconds]

FROM ::fn_trace_gettable(@trcfilename, default)

WHERE (EventClass = 92 OR EventClass = 93)

GROUP BY StartTime,Databaseid, Filename, IntegerData, Duration

ORDER BY StartTime

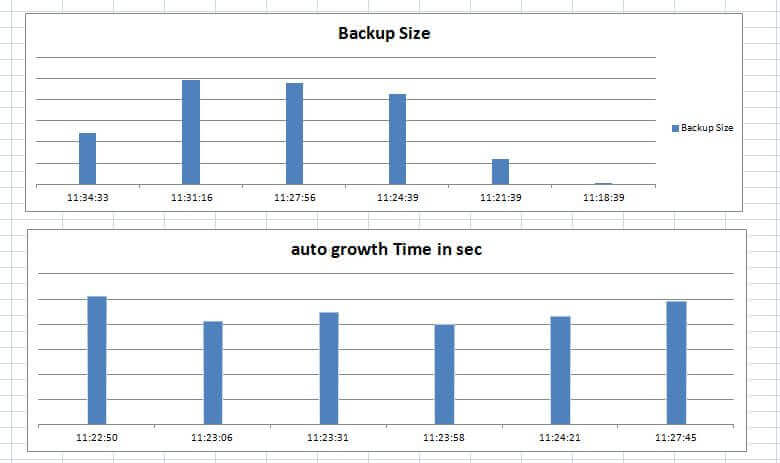

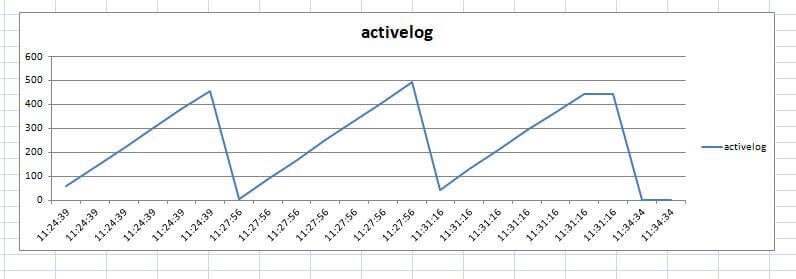

So far we have set the stage to gather the data. Once the data is collected, I can create a graph in Excel to show the details as shown below.

We can see in the graph that the backup size continuously varies. Sometimes it is very small when no activity is happening while sometimes the log backup size is comparably larger. We can see a couple of auto growth events occurring which caused the transaction log file to grow.

In the above figure we can see the active log varies because the backup is happening at scheduled times.

So far we have seen the transaction log behavior up to SQL Server 2016. Now let's what has changed in SQL Server 2017.

Effective log backup - SQL Server 2017

As highlighted above, SQL Server 2017 introduces a new DMF sys.dm_db_log_stats to track transaction log backup status in more granular way. We can use the information to effectively manage the SQL Server transaction log backups.

The DMF, sys.dm_db_log_stats has an output column logsincelastbackup which represents how much log data has been generated since the last transaction log backup. We can use this information to manage the transaction log backups.

Suppose I want log backups to happen only when logsincelastbackup has more than 50 MB for the particular database. So my log backup should execute as:

- logsincelastbackup<>50MB: No log backup should execute even on scheduled time

- logsincelastbackup<=50MB: Log backup should execute

To do so, I have executed the below script to issue transaction log backups. This code will only create a log backup if the threshold of 50 MB has been met.

DECLARE @logsincelastbackup int

DECLARE @logbackupthershold int=50

WHILE (1=1)

BEGIN

SELECT @logsincelastbackup = log_since_last_log_backup_mb from sys.dm_db_log_stats(DB_ID())

IF (@logsincelastbackup = @logbackupthershold )

BACKUP LOG [SmartTrasactionLogBackup] TO DISK = N'C:\Backup\SmartTrasactionLogbackup.trn' WITH FORMAT

END

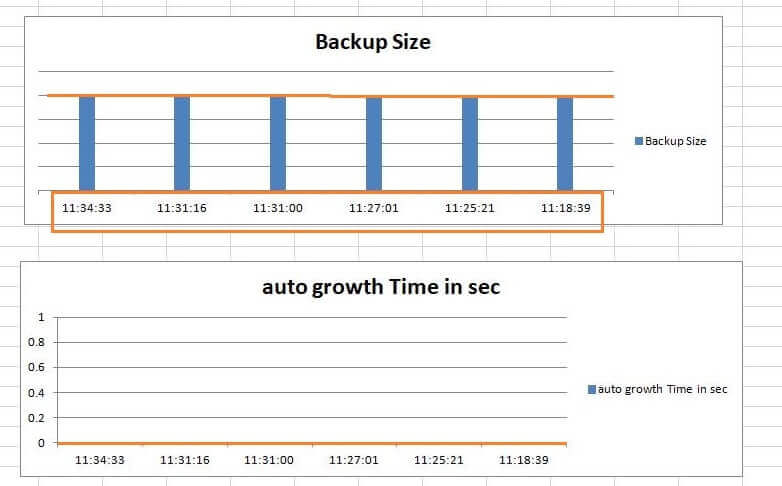

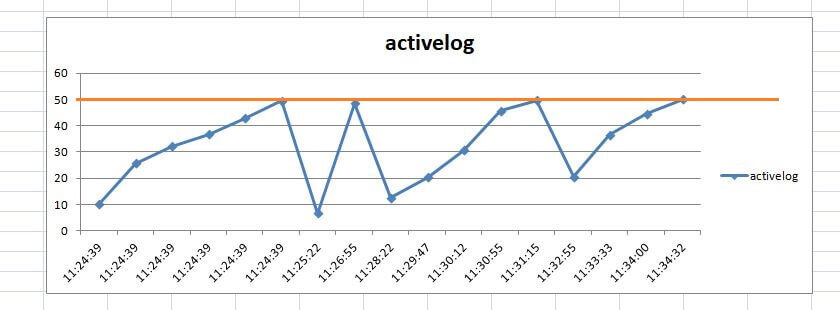

I gathered the same data as we captured above to compare the results. The new log backup mechanism shows the results below.

We can see that the transaction log backup is happening once 50MB has been reached. There is no fixed time interval on which the process will issue the transaction log backup. Also, the transaction log backup size is also continuously the same.

Since we are taking a backup once the threshold is met, an auto growth event is not happening which should improve the database performance as well.

In the above figure we can see the active log is not reaching beyond 50 MB since the log backup executes once the threshold is met. So SQL Server 2017 provides us more control over transaction log backups where we can issue the transaction log backup based on the database activity instead of a predefined schedule. We can also improve the database performance by minimizing the transaction log auto growth frequency.

SQL Server 2017 provides many exciting features, effective and smart transaction log backups is a really impressive new feature. Explore this feature in your test environment to see the benefits.

Next Steps

- We will explore more about SQL Server 2017 in future tips.

- Read more about sys-dm-db-log-stats.

- Get overview of SQL Server backups.

- Explore SQL Server 2017 RC2.

- Read more about SQL Server vNext Linux Tips.

About the author

Rajendra Gupta is a Consultant DBA with 14+ years of extensive experience in database administration including large critical OLAP, OLTP, Reporting and SharePoint databases.

Rajendra Gupta is a Consultant DBA with 14+ years of extensive experience in database administration including large critical OLAP, OLTP, Reporting and SharePoint databases.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips