By: John Miner | Comments (5) | Related: > Azure Functions

Problem

Over the next few years, companies will be moving to the cloud for the speed in which services can be deployed, the ability to scale services when demand changes, and potential cost savings from eliminating data centers. Many current on-premises applications are backed by a SQL Server database with batch jobs running on SQL Server Agent. Microsoft’s Azure SQL database service does not have a native job scheduler.

How can we schedule Transaction SQL (T-SQL) statements to run on a scheduled basis?

Solution

There are several ways to schedule a TSQL job using a programming language such as C# or PowerShell. If we want to stay with the Platform As A Service (PAAS) offerings, this list can be narrowed down. Azure Functions is a server-less event driven experience that can be used to create a modern Web Application. These nano web services can scale on demand and are billed on activity. Thus, you only pay for the resources that you consume.

Consider using Azure functions to schedule TSQL jobs if your application team is writing Web Applications using this technology.

Business Problem

Our boss has asked us to look into scheduling batch processes for Azure SQL database. The chosen technology needs to easily support multiple environments, support modern day languages, and should have excellent monitoring capabilities.

This proof of concept will use the math database which calculate prime numbers from 1 to N. We want to schedule a job every 5 minutes that keeps on calculating increasing numbers in batches of 5000 new candidates.

The rest of the article will show you how to leverage Azure Functions to satisfy these business requirements.

Sample Azure Databases

The first business requirement is to create two similar databases for different environments. This article assumes you know how to setup an Azure SQL database using PowerShell cmdlets or the Azure Portal. See my prior tips if you have questions.

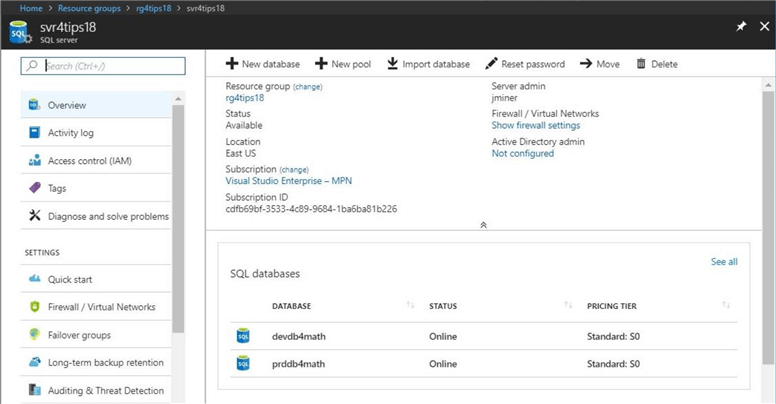

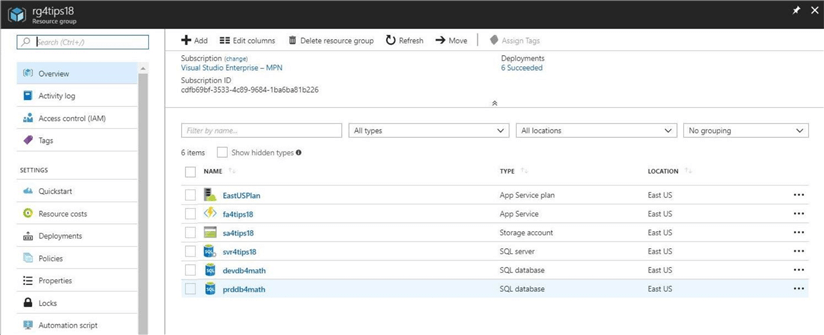

The image below shows a development database named devdb4math and a production database titled prddb4math. Both databases are associated with a server identified as svr4tips18 and a resource group called rg4tips18. All of these services are located in the East US data center.

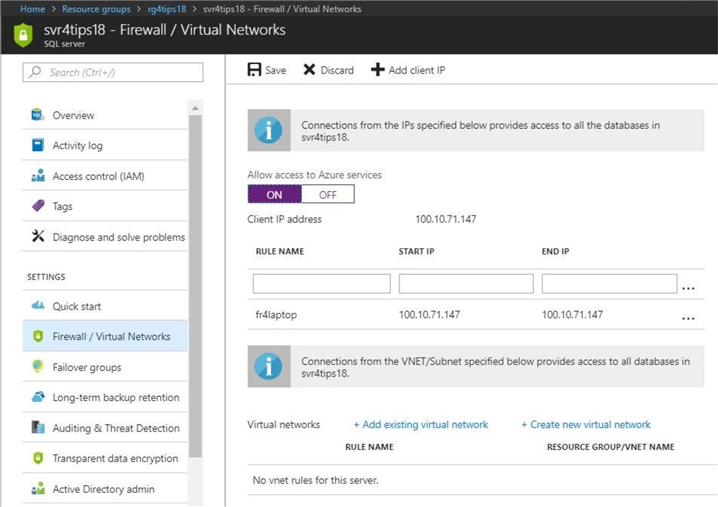

By default, the firewall is set to allow no interactions with the newly defined Azure SQL Server. Use the firewall blade in the Azure portal to allow Azure Services to access these databases.

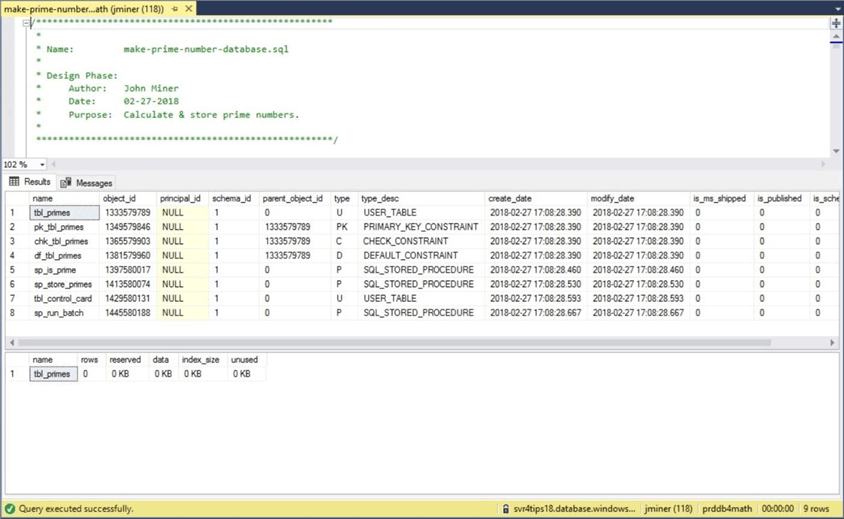

I have used the prime number database in past articles to create proof of concepts. I added one new stored procedure to the Transaction SQL script.

The sp_run_batch stored procedure reads the tbl_control_card table to retrieve the last candidate number checked for being prime. It calls the sp_store_primes stored procedure to check the next 5000 candidate numbers. Last but not least, it updates the control table with last number that was checked.

We need to create a unique contained database user for each environment. It is good practice not to give out access to multiple environments using a single account.

The code below creates a service account user for the development environment with complete rights to the database. Adjust the rights according to your environment. Create another account for the production environment at your leisure.

/*

Development user

*/

-- Service Account

CREATE USER [svcacct4dev] WITH PASSWORD = 'MS#tIpS$2018dev';

-- Give out rights

EXEC sp_addrolemember 'db_owner', 'svcacct4dev'

To recap, we need two different environments to satisfy the business requirements. If this is a one-time deployment, use the Azure Portal to create it. Otherwise, if you are using a continuous development practice, create development operation scripts using PowerShell to automate the process. I am enclosing the complete Transact SQL script for the database schema.

Azure Functions

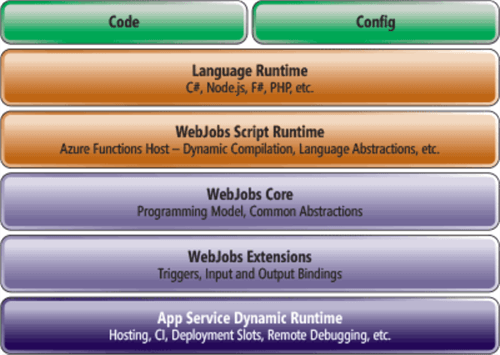

What is Azure Functions? It is a server-less architecture built on top of cloud services. The idea is to abstract away application frameworks and external dependencies so that developers can focus simply on the code to implement business logic. There is a great article on MSDN that covers the architecture of this technology.

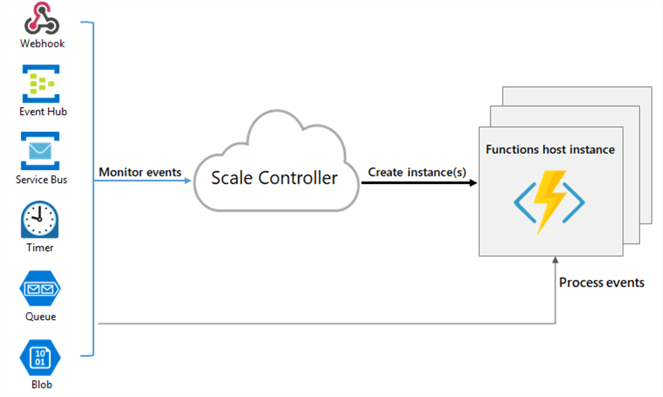

The image below shows the overall system design of Azure Functions. As you can see, there are many different layers.

There are a several important things you should note from this diagram. First, it is built upon Azure App Service, which is a fully managed hosting service for web, mobile and API applications. Second, the developer can use many different languages to code a new function. Right now, both C#, F# and JavaScript are fully supported at this time. Third, it supports configuration settings at the service level. This will allow us to define connection strings for different environments. Fourth, it is an event driven architecture. Therefore, code that defines the function is called when an event happens.

The image below shows some of the different events that can trigger an Azure Function. The awesome part of this architecture is the ability to scale up when demand increases and scale down when demand decreases. This functionality is governed automatically by a scale controller. If your team is creating modern day web applications, you might define a Webhook or HTTP trigger.

We will be interested in the timer trigger, since we want to run the TSQL job on a predetermined schedule. Now that we have some idea about the technology, let us deploy an Azure Function.

Deploying Azure Function App

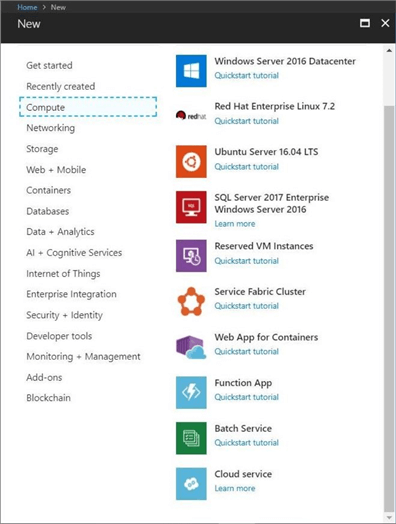

I will be using the Azure Portal to deploy our Function App service. Find the new option in the left menu and select the compute sub option. Double click the Function App icon to start the process.

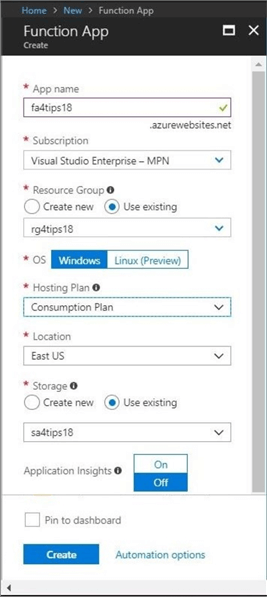

The image below shows the typical dialog box when deploying a new service. I am going to name the service fa4tips18 and create a storage account called sa4tips18to support this service. Both objects will be associated with our resource group identified as rg4tips18.

I suggest using the Windows Operating system. The Linux operating system is in preview. I consider this offering an experimental option at this time. For now, let us leave application insights off. The biggest decision you have is the hosting plan. It can either be consumption only or available all the time. We will take the default plan.

Like most services, you click the create button and go grab a cup of coffee. By the time you are back, you have a service ready to go.

The image below shows all the objects in our resource group. The EastUSPlan is the only object that you cannot rename to our naming conventions.

In summary, deploying the Azure Function App service is very easy. Our next task is to define connection strings for our two different environments.

Configuring Application Settings for Azure Function Apps

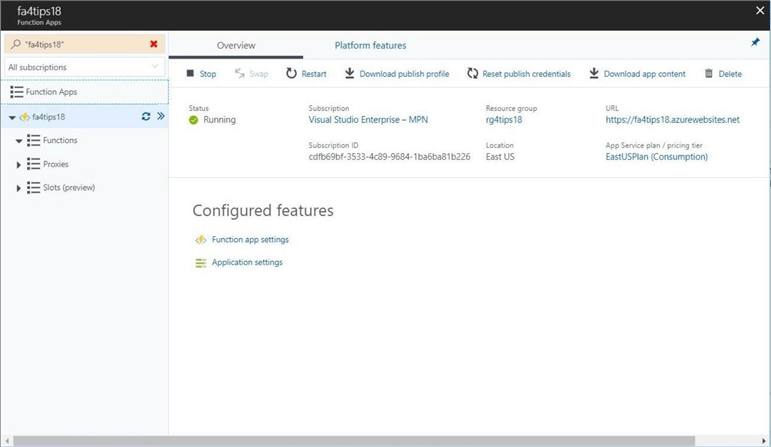

The main menu for the Function Apps has many different options that can be configured. Right now, we want to define connection strings. Click the hyperlink named application settings under the Configured Features section to switch to the right screen.

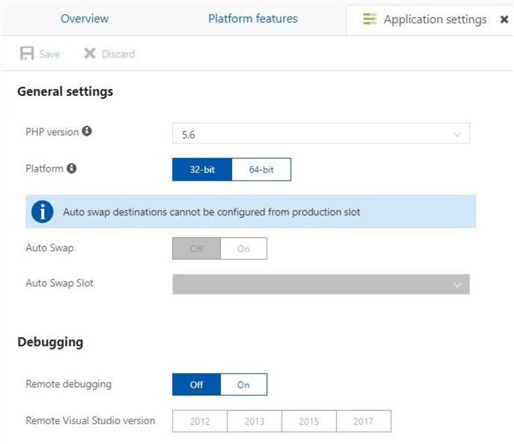

The application settings tab dialog menu shows different options for the cloud services environment. Today, we are going to write a simple function using C# script. However, this platform support continuous deployment using Visual Studio and Team Services. Of course, most people are storing their code base in a GIT version control repository.

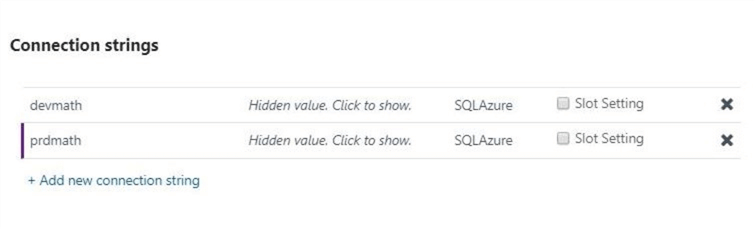

If you scroll down the window, you will come to a section for connection strings. The image below shows two strings defined for the development and production math databases.

The snippet below shows the connection string for the development database. Please setup your connection strings in your environment at this time.

Server=tcp:svr4tips18.database.windows.net;Database=devdb4math;Uid='svcacct4dev';Pwd='MS#tIpS$2018dev';

The overall all look and feel of configuring Azure Functions is that of a hosted cloud service.

Building an Azure Function

Please take a look at the Function App named fa4tips18 as shown above. There are three sections under this object: functions, proxies and slots.

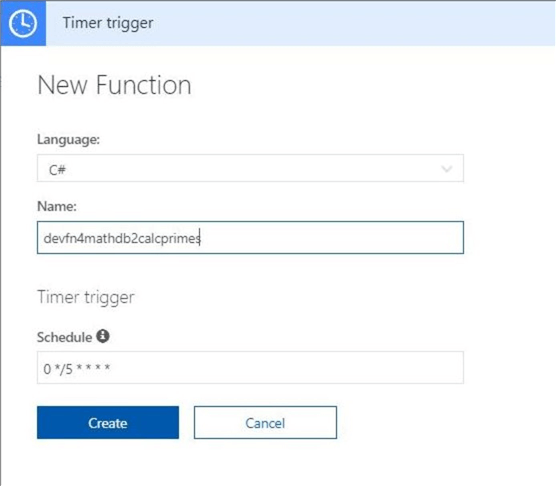

Click the functions down arrow to show currently defined functions. Since there are no functions that are defined, choose the new function button. Select a timer trigger as the type of function we want to define.

The following dialog box creates a C# timer trigger named devfn4mathdb2calcprimes that will execute every five minutes.

So, what is up with that weird string shown in the scheduling section of the trigger?

The Azure Function service uses CRON expressions to define the pattern (schedule) of execution. The snippet below shows the format of the expression. For more details, please see this page on Wikipedia.

{second} {minute} {hour} {day} {month} {day-of-week}

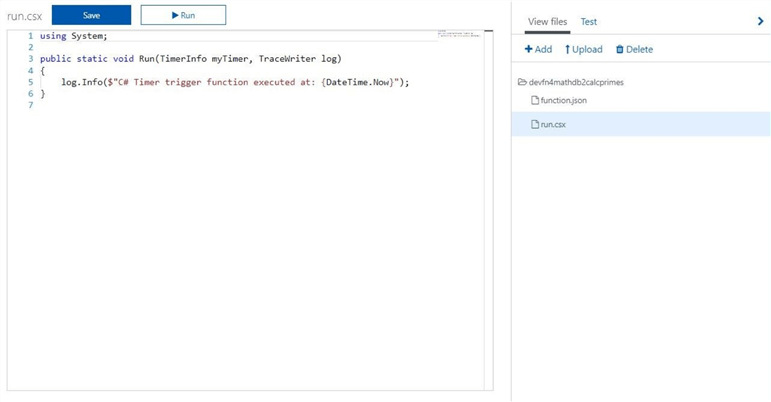

Upon creation of the new function, we can look at the two files that make up its definition.

The function.json file defines bindings. This includes function type, function schedule, and any input/output definitions. See the GIT reference page from Microsoft for more details.

The run.csx file defines the actual code to run. Every function that is defined is given sample code or a template to start off with. The image below shows the sample code that prints the date and time the function was executed at.

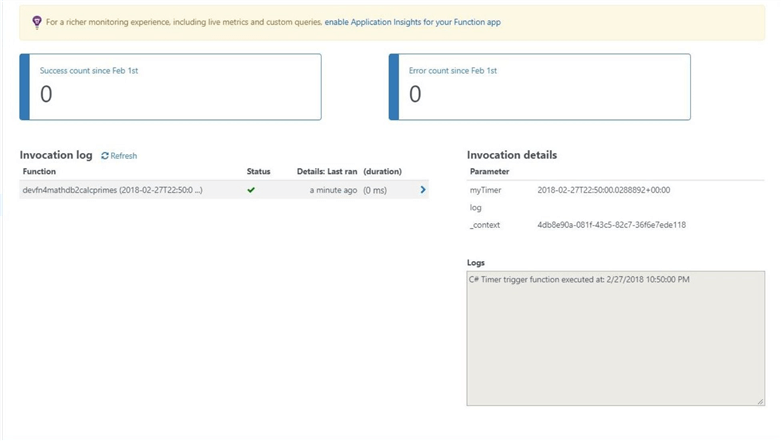

The actual object named devfn4mathdb2calcprimes has three sub-menus: integrate, manage, and monitor. We are interested in the last sub-menu. The image below shows the first successful execution of the trigger. We can see the invocation log and invocation details.

Deploying our first Azure function is extremely easy. However, it does not solve our business problem. The next step is to change C# script to solve our business problem.

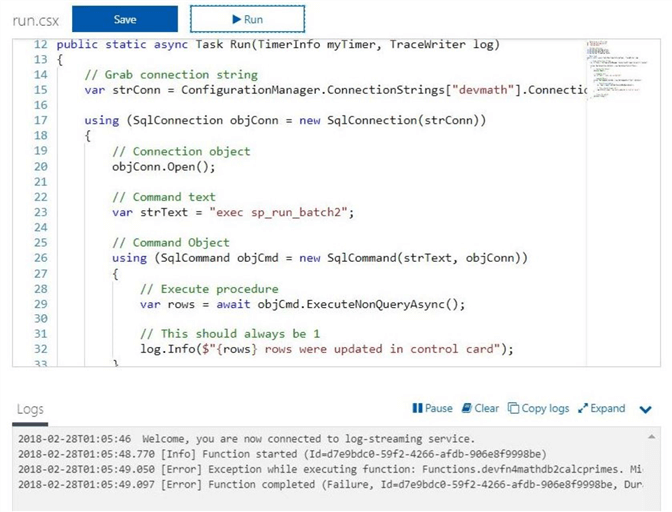

Reworking the Timer Trigger

We need to change the code of the timer trigger to read the connection string, connect to the Azure SQL database and execute the given stored procedure. The C# script listed below executes this algorithm perfectly. Just change the strText variable to any Transaction SQL statement that is not a SELECT statement or set the schedule.

// References 4 C# script

#r "System.Configuration"

#r "System.Data"

// System Libraries

using System.Configuration;

using System.Data.SqlClient;

// Main task

public static async Task Run(TimerInfo myTimer, TraceWriter log)

{

// Grab connection string

var strConn = ConfigurationManager.ConnectionStrings["devmath"].ConnectionString;

using (SqlConnection objConn = new SqlConnection(strConn))

{

// Connection object

objConn.Open();

// Command text

var strText = "exec sp_run_batch";

// Command Object

using (SqlCommand objCmd = new SqlCommand(strText, objConn))

{

// Execute procedure

var rows = await objCmd.ExecuteNonQueryAsync();

// This should always be 1

log.Info($"{rows} rows were updated in control card");

}

// Close the object

objConn.Close();

}

}

There is one interesting piece of syntax that I have not seen before. You can reference other (loose) assemblies from your CSX script by using a #r directive. See this GIT reference page from Microsoft for more details.

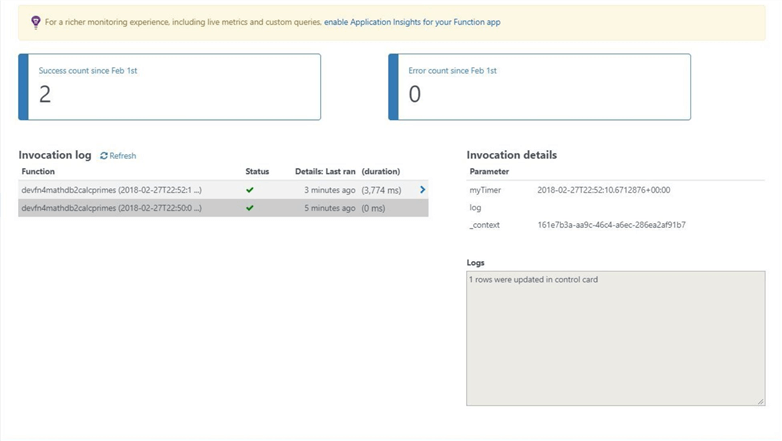

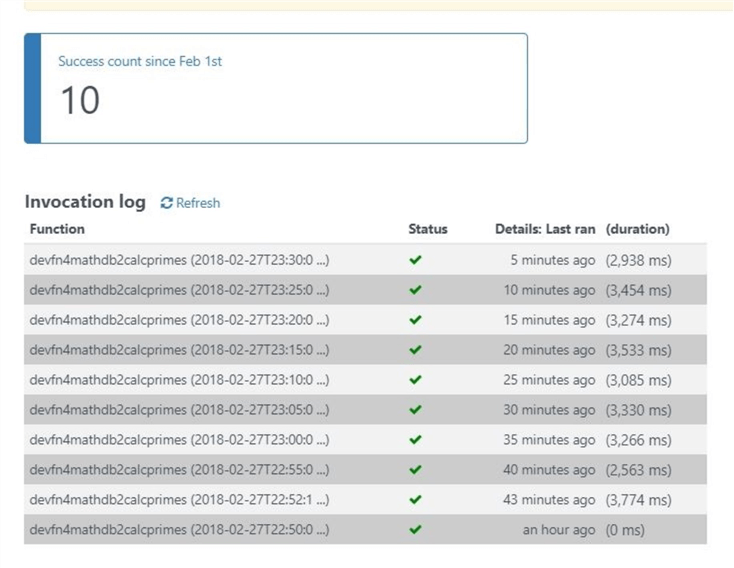

Save and execute the new C# script. The image below shows the output in the monitor section of the Azure Function. We can see that the template function took almost no time to execute. The updated function takes 3.7 seconds to find prime numbers between 1 and 5000.

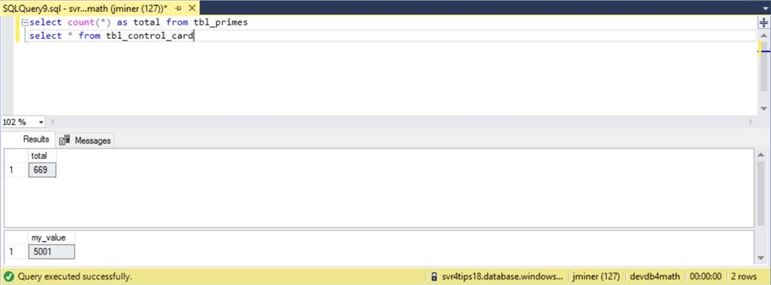

I am going to connect to our Azure SQL database in the development environment with SQL Server Management Studio (SSMS) and run some queries. We can see that 669 prime numbers exist between the numbers 1 and 5000.

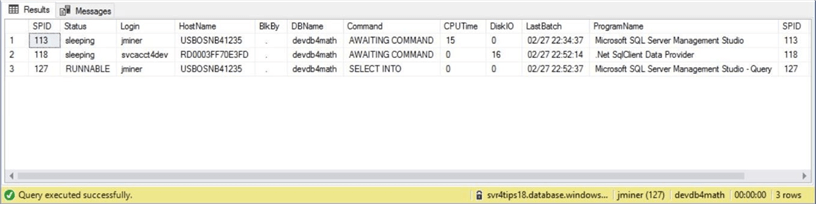

I was able to execute the sp_who2 command during the execution of the Azure Function to capture the output below. We can see that a computer named RD0003FF70E3FD, part of the managed cloud service, is running our Microsoft .Net script.

Last but not least, the invocation log shows the Azure Function executing every 5 minutes as designed.

In conclusion, Azure Functions, or more specifically timer triggers, can be used to schedule Transact SQL jobs. Using the invocation log to monitor these jobs might become unwieldly as the number of jobs increase.

Is there a better way to monitor a bunch of Azure Functions?

Application Insights

Application insights allows the production support engineer to monitor and respond to failed functions. I purposely left off this option when deploying the Azure Function App at the start of the article. Let us deploy and configure the application insights service right now.

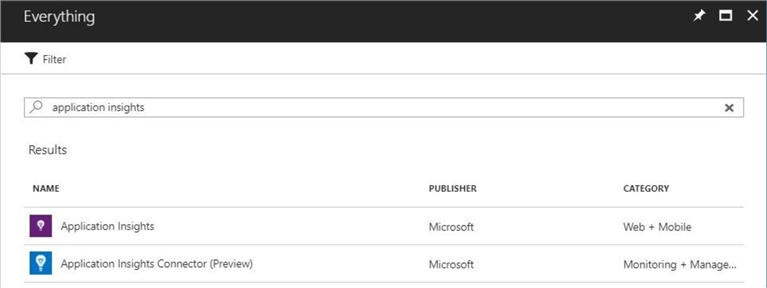

We will be using the Azure Portal to install this service. Search the Azure Market Place for application insights. Double click the icon to start the deployment.

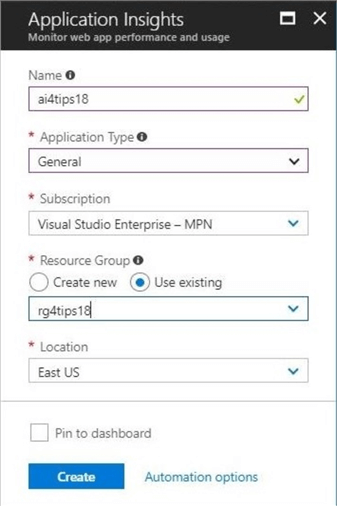

The application insights deployment dialog box is shown below. Make sure you choose a general application type.

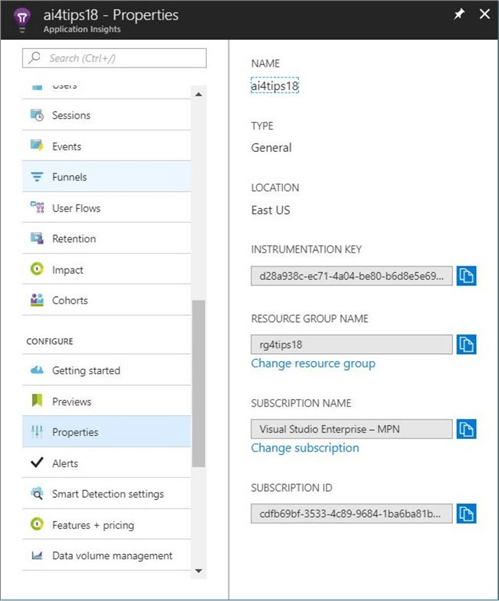

Once the application insights service is deployed, double click the object named ai4tips18 to open the service blade. The default view is the overview panel. Scroll down to the configure section. Click the properties option to open the panel and view the instrument key. Click the copy button to save this key to the windows clip board.

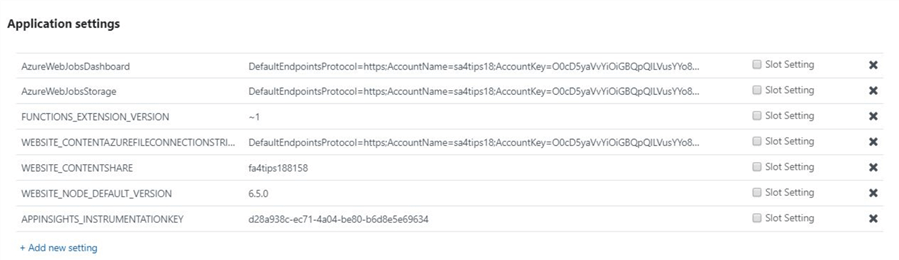

To have an Azure Function connected to Application Insights, an application setting needs to be added to the service.

The above image shows the APPINSIGHTS_INSTRUMENTATIONKEY was added as a new setting to the service. Now that we have advanced monitoring, let us test how it detects and displays errors.

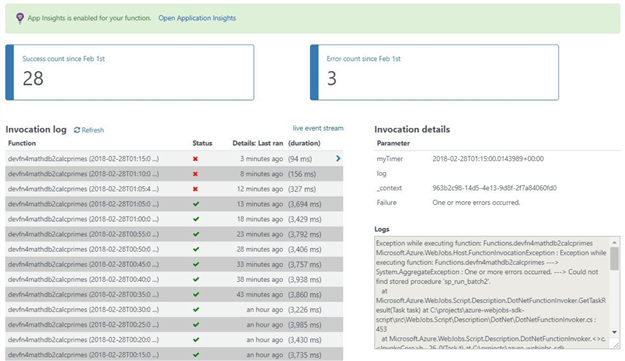

Testing Application Insights

There are many ways to create a negative test case for Azure Functions and Application Insights. One quick way to have the function error out is by calling a stored procedure that does not exist. This could actually happen in real life if a code change was not thoroughly tested.

The image below taken from the Azure Portal shows a manual run of the function with an invalid stored procedure name.

If we take a look at the invocation log shown below, we can see that 3 failed executions happened before the function was reverted back to the original code.

It is interesting to note that the .Net call stack in the log trace is very detailed. It states that it could not find the name of the stored procedure that was being called. Also note, the execution time of the failed execution is a fraction of the normal execution time.

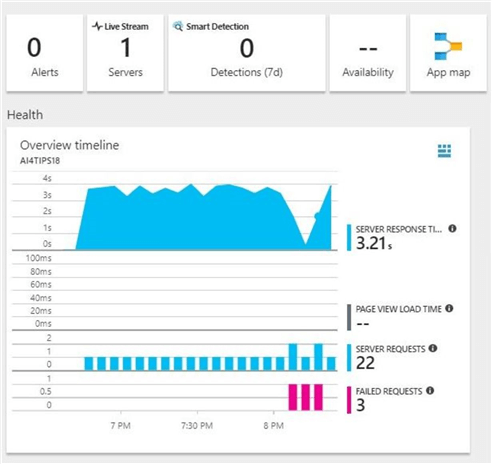

There are two graphs on the overview panel that are important to know about. The overview timeline shows you the total function call response time over a running time line. Both successful and failed requests are shown on the graph. Please see below image for details.

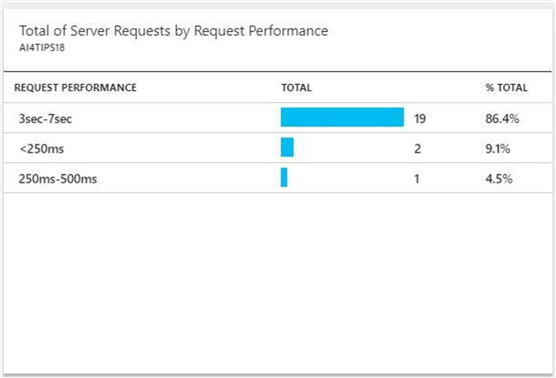

The server request performance graph buckets the calls by execution time ranges. You can see that valid requests take between 3 and 7 seconds. All other requests take a quarter of a second or less. Again, the image below was taken from the Azure Portal.

In a nutshell, application insights provide a dashboard that can be very helpful in finding errors when you are running multiple Azure Functions. The design pattern of Azure Functions, Timer triggers, C# scripting and Application insights was used to solve our business problem.

Summary

Today, we talked about Azure Functions, which are a server-less event driven experience that can be used to create a modern Web Applications.

More companies are moving to the Azure cloud every day. Many of the current on-premise web applications are backed by a SQL Server database with batch jobs running on SQL Agent. If your development team is re-writing an old web application using Azure, you can leverage Azure Functions and timer trigger to schedule a Transact SQL database calls.

If you want to learn more about Azure Functions, please see the online documentation page for this offering. The idea behind functions (nano services) is to break up a unit of work into separate tasks. Therefore, a single function is a single task. What I did not talk about is the fact that Azure Logic Apps can be used to create workflows. Also, the C# scripting can be used to manage and manipulate Azure objects other than Azure SQL database.

Like many things in life, there are multiple ways to solve a problem. Azure Functions might be the right fit if you want to exclusively use a Platform As A Service (PAAS) architecture.

Next Steps

- Leveraging Azure Automation and PowerShell to schedule Transact SQL statements

- Using elastic jobs to schedule and execute Transact SQL statements

- How can Azure Logic Apps be used to create complex workflows?

About the author

John Miner is a Data Architect at Insight Digital Innovation helping corporations solve their business needs with various data platform solutions.

John Miner is a Data Architect at Insight Digital Innovation helping corporations solve their business needs with various data platform solutions.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips