By: Koen Verbeeck | Comments (4) | Related: > Azure Functions

Problem

Azure Data Factory currently doesn't have an integrated connector for the Snowflake cloud data warehouse. A work-around is to use Azure Functions to send SQL statements to Snowflake. In this two-part tip, we explain how you can create and use such an Azure Function.

Solution

In part 1 of the tip we explained the basic concepts and created an Azure Function in Visual Studio. This function accepts a SQL statement through the body of an HTTP request and then sends this SQL statement to the Snowflake database. The connection string is retrieved from a configuration file. It's advised to go through part 1 if you want to follow along with this tip.

For completeness, here's the code for the Azure Function:

using System; using System.IO; using System.Threading.Tasks; using Microsoft.AspNetCore.Mvc; using Microsoft.Azure.WebJobs; using Microsoft.Azure.WebJobs.Extensions.Http; using Microsoft.AspNetCore.Http; using Microsoft.Extensions.Logging; using Newtonsoft.Json; using Microsoft.Extensions.Configuration; using System.Collections.Generic; namespace RunSnowflakeQuery { public static class SnowflakeExecuteQuery { [FunctionName("SnowflakeExecuteQuery")] public static async Task<IActionResult> Run( [HttpTrigger(AuthorizationLevel.Function,"get", "post", Route = null)] HttpRequest req, ILogger log, ExecutionContext context) { var config = new ConfigurationBuilder() .SetBasePath(context.FunctionAppDirectory) .AddJsonFile("local.settings.json", optional: true, reloadOnChange: true) .AddEnvironmentVariables() .Build(); string myConnectionString = config["SQLConnectionString"]; var client = new SnowflakeClient(myConnectionString); string requestBody = await new StreamReader(req.Body).ReadToEndAsync(); dynamic command = JsonConvert.DeserializeObject(requestBody); var statements = new List<string>(); statements.Add(command.Query.ToString()); int affectedRows; try { affectedRows = client.ExecuteNonQuery(statements.ToArray()); } catch (Exception e) { log.LogError(e, "Error processing request"); // return req.CreateResponse(HttpStatusCode.InternalServerError, e.Message); return new BadRequestObjectResult(e.Message); } // return req.CreateResponse(HttpStatusCode.OK); return new OkObjectResult(new { rowcount = affectedRows }); } } }

The code uses an extra class called SnowflakeClient, which can also be found on the Github page of Jess Pani.

Publishing the Azure Function

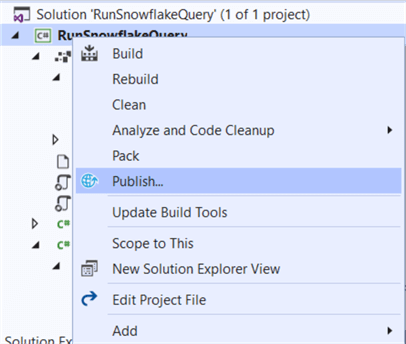

Now our function is complete, we want to push it to the Azure Portal where we can easily test it. Publishing is quite straight forward: right-click the project and select Publish…

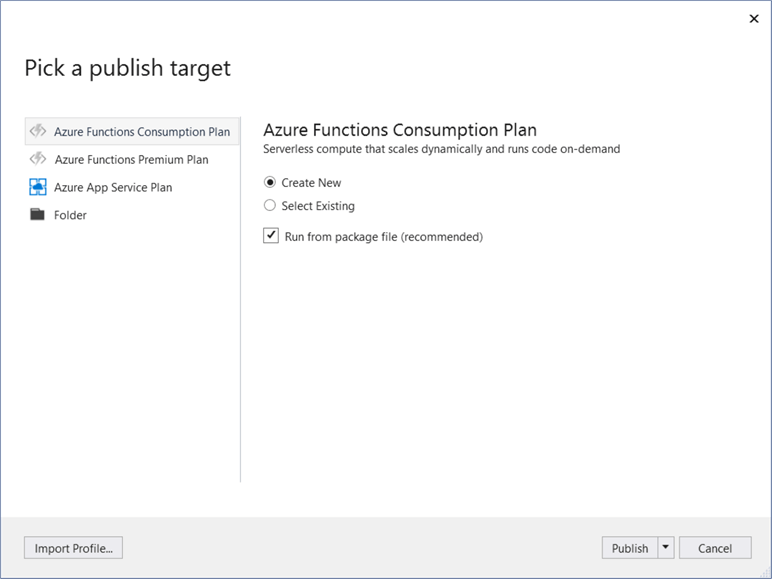

The next screen asks us for the intended target.

- You have Azure Functions Consumption or Premium Plans. This is serverless compute, which scales dynamically and will run the code on-demand. The Premium plan is of course faster and better. You can find more info in the pricing guide.

- There is also Azure App Service Plan, which is dedicated compute for running code on-demand. More info in the pricing guide.

- Or you can deploy to a folder.

In this example, the defaults were chosen as indicated in the screenshot above. Selecting Run from package file will run the Function from a .zip file containing all the source files. More information can be found in the documentation and in this announcement.

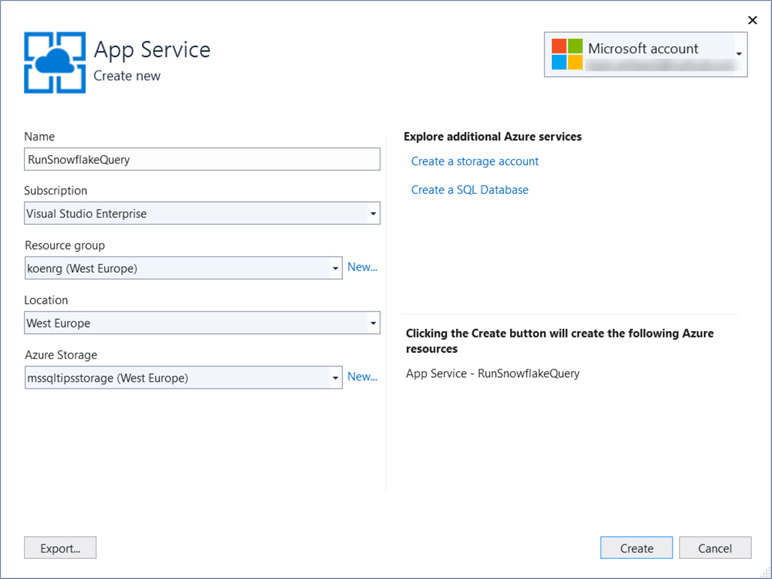

In the next screen, you create your Azure App Service (for the difference between the service and the plan, check this blog post). As mentioned in part 1, the name of the Visual Studio project is the same as the App Service.

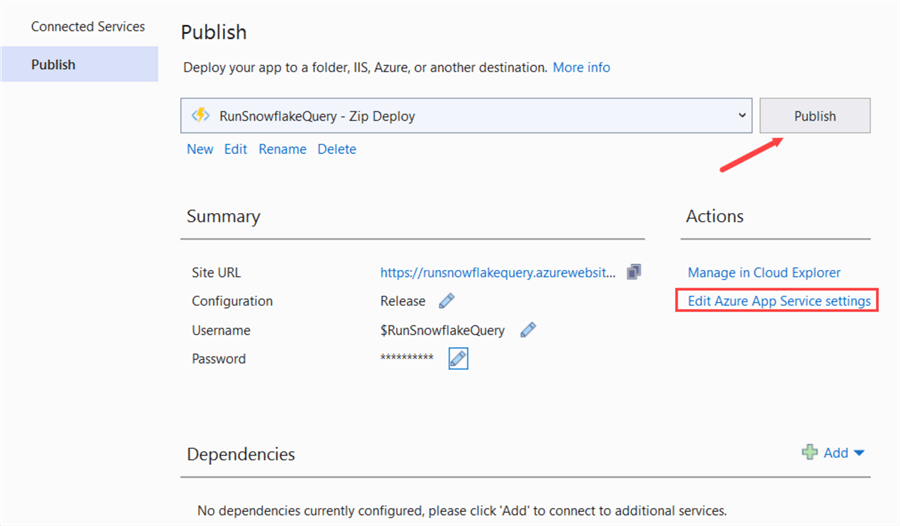

After creating the App Service, we have arrived in the Publishing screen.

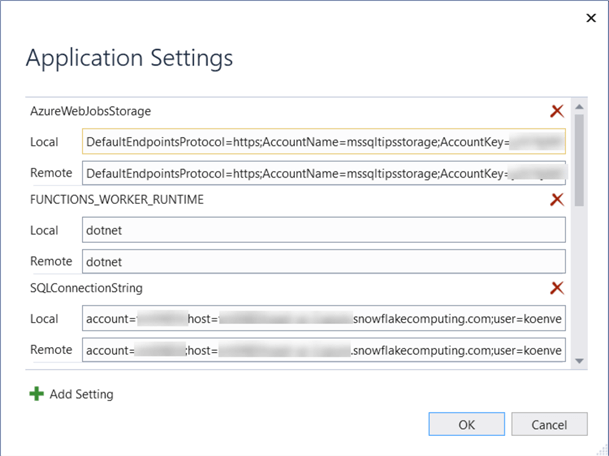

Before you hit Publish, click on Edit Azure App Service settings. This will allow you to create your configuration for the Snowflake connection string.

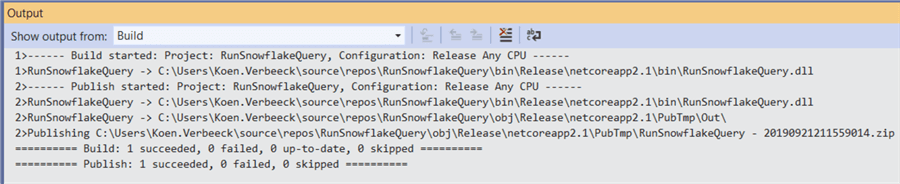

After clicking Publish, we can verify in the output window that publishing went smoothly:

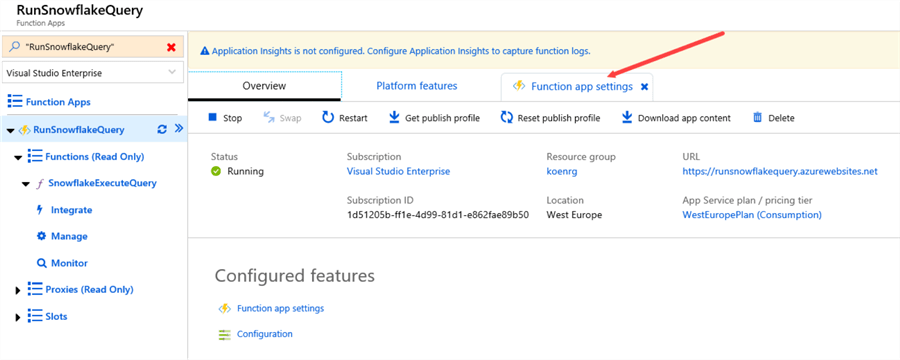

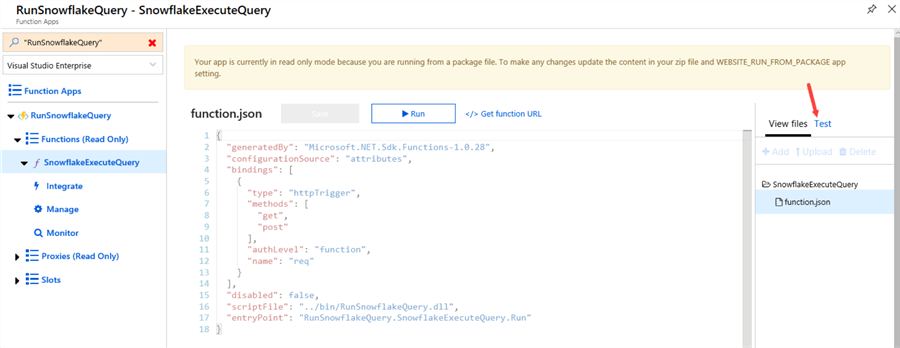

In the Azure Portal, we can now find the newly created App Service (called RunSnowflakeQuery) and it's only function SnowflakeExecuteQuery. In the App Service, you can go to the Function app settings.

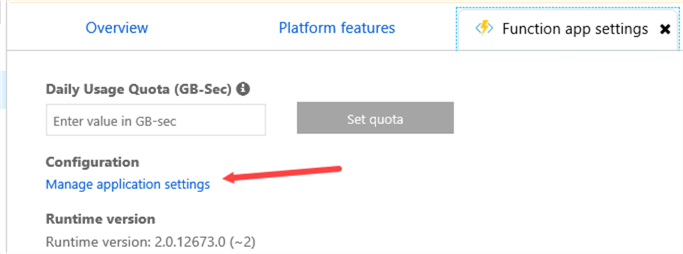

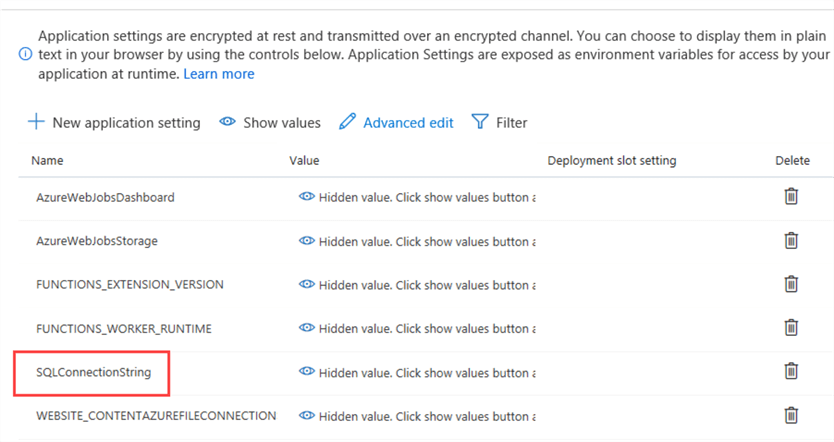

In the settings, we can manage the applications settings, so we can verify our connection string was deployed correctly.

As you can see in the screenshot below, the connection string is present. It's encrypted, but you can verify it in the browser. It will be passed as an environment variable when the function is running.

Testing the Azure Function

After all this work, it would be nice to know if the function actually works. It's possible to debug the function in Visual Studio, but since we're using a POST request, it's a bit easier in the Azure Portal.

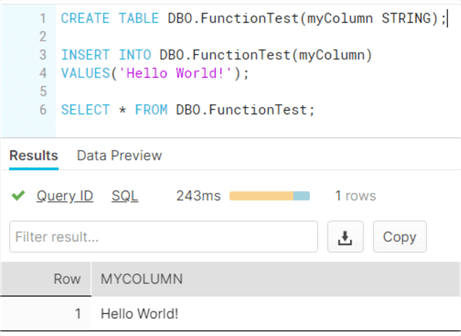

First of all, we're going to create a dummy table in Snowflake and populate it with one single row:

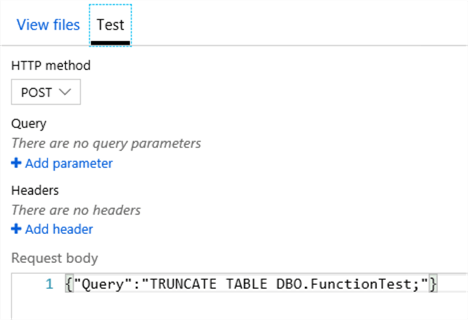

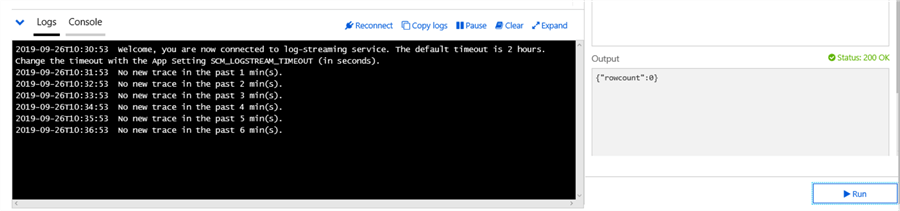

Using our function, we're going to send a TRUNCATE TABLE statement to empty the table. In the Azure Portal, go to the App Service and select the function SnowflakeExecuteQuery. At the right hand side of the screen, you can find the Test functionality.

In the Test pane, we can select the HTTP method which is POST.

In the Request body, we can enter the following JSON:

{

"Query":"TRUNCATE TABLE DBO.FunctionTest;"

}

At the bottom of the pane, click Run to start the test. If everything went successful, you'll see an output body containing the row count, which should be zero since TRUNCATE TABLE doesn't return rows. You'll also see the status message "200 OK".

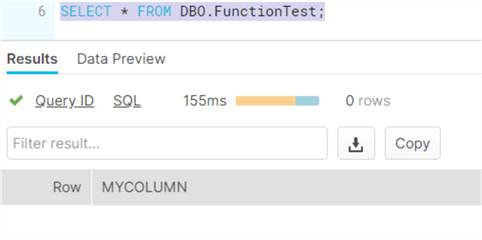

In Snowflake, we can verify the table is now empty.

Let's try with a different statement.

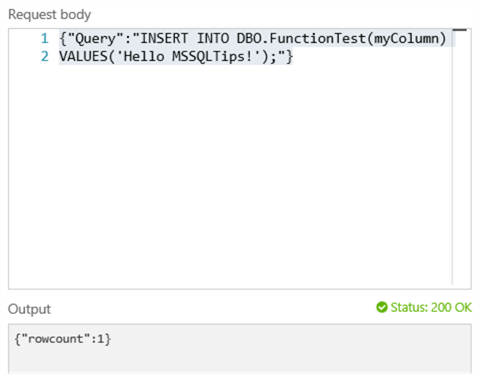

{"Query":"INSERT INTO DBO.FunctionTest(myColumn)

VALUES('Hello MSSQLTips!');"}

And the result:

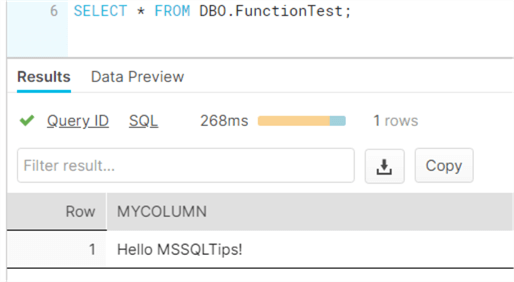

Let's verify in Snowflake:

We've demonstrated how we can successfully send SQL statements over to the Snowflake cloud data warehouse using the HTTP trigger of an Azure Function. In a next tip, we'll integrate this solution into Azure Data Factory so we can use it as an orchestrator for the ETL process loading the data warehouse.

Next Steps

- Try it out yourself! If you haven't already, check out part 1 to get up to speed with the Azure Function.

- Official documentation on Azure Functions:

- The tip How to Schedule T-SQL Statements to Run with Azure Functions demonstrates how you can execute T-SQL statements using a schedule-triggered Azure Function.

- For more Azure tips, check out these articles.

About the author

Koen Verbeeck is a seasoned business intelligence consultant at AE. He has over a decade of experience with the Microsoft Data Platform in numerous industries. He holds several certifications and is a prolific writer contributing content about SSIS, ADF, SSAS, SSRS, MDS, Power BI, Snowflake and Azure services. He has spoken at PASS, SQLBits, dataMinds Connect and delivers webinars on MSSQLTips.com. Koen has been awarded the Microsoft MVP data platform award for many years.

Koen Verbeeck is a seasoned business intelligence consultant at AE. He has over a decade of experience with the Microsoft Data Platform in numerous industries. He holds several certifications and is a prolific writer contributing content about SSIS, ADF, SSAS, SSRS, MDS, Power BI, Snowflake and Azure services. He has spoken at PASS, SQLBits, dataMinds Connect and delivers webinars on MSSQLTips.com. Koen has been awarded the Microsoft MVP data platform award for many years.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips