By: Koen Verbeeck | Updated: 2023-01-30

Problem

I need to read a file stored in an Azure Blob Storage container. However, I cannot use Azure Data Factory (ADF) because I need to do some transformations on the data. Can I achieve my goal with Azure Functions instead?

Solution

There are several different methods for reading files in the Azure cloud. The easiest options don't require code: a Copy Data activity in ADF or an Azure Logic App. However, both copy the data to a destination. What if we want to do more complex transformations? Another option is data flows in ADF (either mapping data flows or Power Query), but these might be too expensive for processing small files.

A solution that involves a bit more code is Azure Functions. Using C# libraries, we can read a file from Azure Blob Storage into memory and then apply the transformations we want. In this tip, we'll cover a solution that retrieves a file from Azure Blob storage into the memory of the Azure Function.

How to Retrieve a File with an Azure Function

Creating a New Project

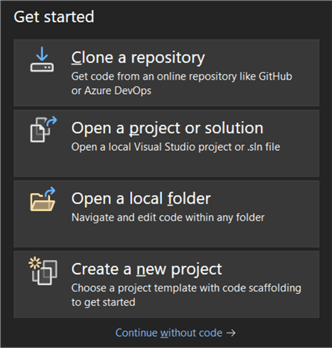

Let's start by creating a new project in Visual Studio. This tip utilizes screenshots taken from VS 2022.

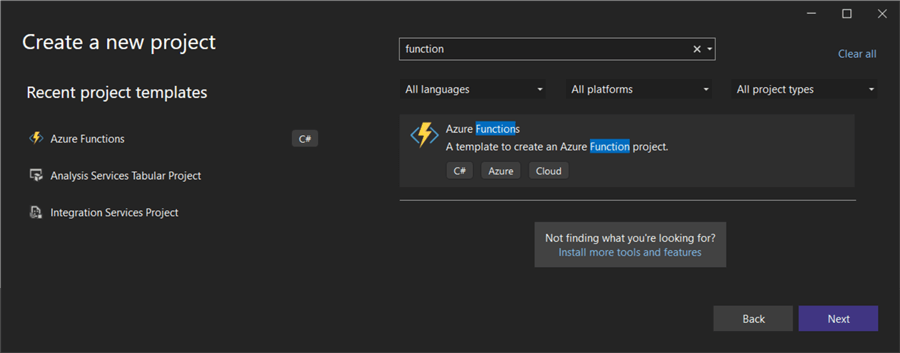

Choose the Azure Function project template. In Visual Studio, you can only use the C# programming language. You can use Visual Studio Code or program directly in the Azure Portal if you want another language, such as PowerShell.

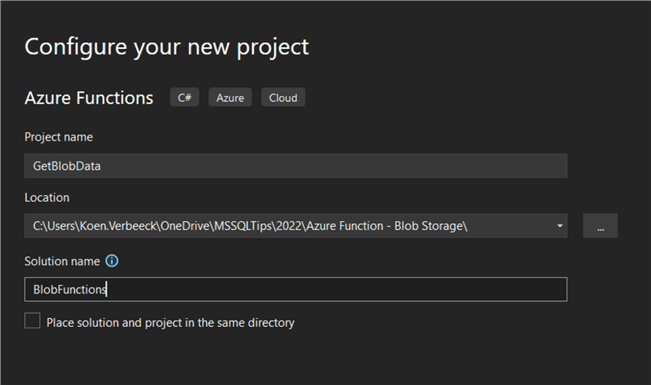

Give the project a name and choose a location to store it. If you want to add more Azure Functions later, you might want to give the solution a different name.

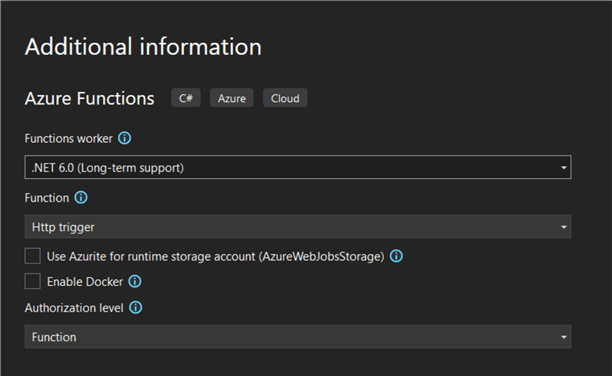

In the next screen, you can leave the defaults. Make sure the trigger is HTTP.

Note: You could also use a blob trigger. In that case, the Azure Function is executed every time a new file is created or changed. The blob itself is passed along to the Function, so there's not much effort required to read it. However, in this solution we want more control over when and how the Function is triggered, hence the choice for the HTTP trigger. If you want more info about blob triggers, check out the tip Process Blob Files Automatically using an Azure Function with Blob Trigger.

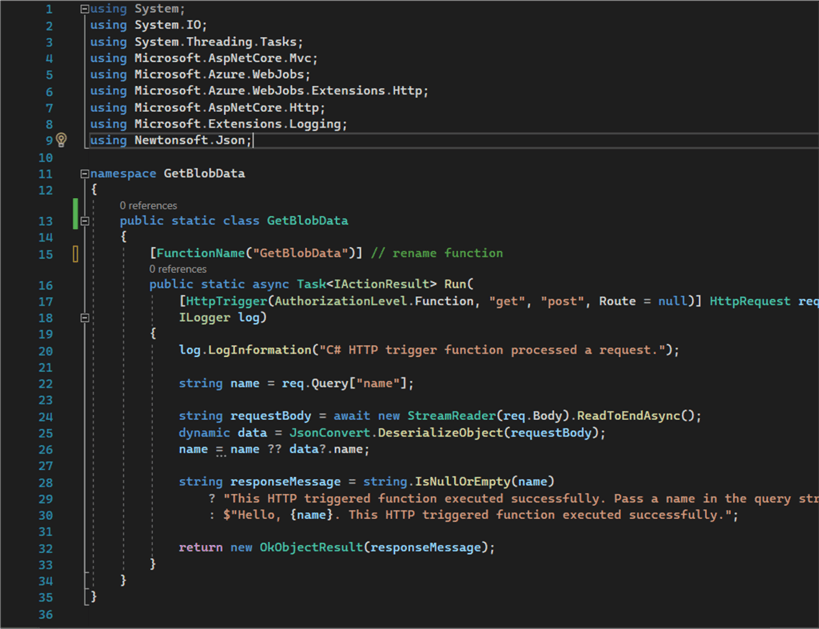

A new Azure Function is created. It contains a bunch of template code:

In short, it does the following:

- The function is triggered by an HTTP trigger. The body and other information are stored in the HttpRequest object named req.

- The function logs that it has started processing the request.

- It expects a parameter called name. It first tries to read name from the query string of the request (line 22), then reads the body (which is in JSON format), parses it and tries to retrieve name from there (lines 24-26).

- Both results from the previous steps are combined in line 26 with the null-coalescing operator (??): the value from the query string is used if there is one, otherwise the value from the body.

- A response is created containing the name that was found in the previous steps. The response is returned to the client in line 32. If no name was found, the response tells the caller to pass a name.
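To see the template's name logic in isolation (read name from the query string, otherwise from the JSON body, and coalesce the two), here is a minimal sketch that runs outside the Functions runtime. It's an approximation, not the template itself: it uses System.Text.Json instead of the Newtonsoft.Json dynamic object the template relies on, and ResolveName is a hypothetical helper name.

```csharp
using System;
using System.Text.Json;

// Hypothetical helper that approximates the template: prefer the query string value,
// fall back to the "name" property of the JSON body.
static string? ResolveName(string? queryName, string requestBody)
{
    string? bodyName = null;

    // An empty body, or a body without a string "name" property, simply yields null.
    if (!string.IsNullOrWhiteSpace(requestBody))
    {
        using JsonDocument doc = JsonDocument.Parse(requestBody);
        if (doc.RootElement.ValueKind == JsonValueKind.Object &&
            doc.RootElement.TryGetProperty("name", out JsonElement nameElement) &&
            nameElement.ValueKind == JsonValueKind.String)
        {
            bodyName = nameElement.GetString();
        }
    }

    // Same coalescing step as "name = name ?? data?.name;" in the template.
    return queryName ?? bodyName;
}

Console.WriteLine(ResolveName(null, "{\"name\": \"Koen\"}"));   // Koen
```

Invalid JSON in the body makes JsonDocument.Parse throw a JsonException, so in a real Function you may want to catch that and return a 400 Bad Request instead.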

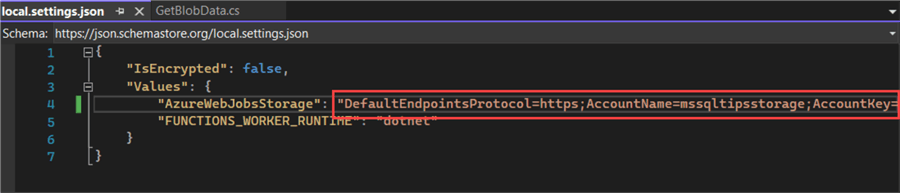

First, we will add a connection string for the Azure Storage account. In the project, there's a file called local.settings.json. In there, we can find a key with the name AzureWebJobsStorage. Set its value to the connection string of the storage account, which contains the account name and the account key (you can copy it from the Access keys page of the storage account in the Azure Portal).

If the storage account you want to use differs from the storage account used by the Azure Function, you can add a new key-value pair to the JSON file.
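For reference, a local.settings.json with a storage connection string could look like the fragment below. The placeholders in angle brackets are yours to fill in, and SourceBlobStorage is a hypothetical name for an extra key-value pair pointing at a second storage account. FUNCTIONS_WORKER_RUNTIME is set to dotnet, which is what an in-process C# project uses.

```json
{
  "IsEncrypted": false,
  "Values": {
    "AzureWebJobsStorage": "DefaultEndpointsProtocol=https;AccountName=<storage-account-name>;AccountKey=<account-key>;EndpointSuffix=core.windows.net",
    "FUNCTIONS_WORKER_RUNTIME": "dotnet",
    "SourceBlobStorage": "DefaultEndpointsProtocol=https;AccountName=<other-account-name>;AccountKey=<other-account-key>;EndpointSuffix=core.windows.net"
  }
}
```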

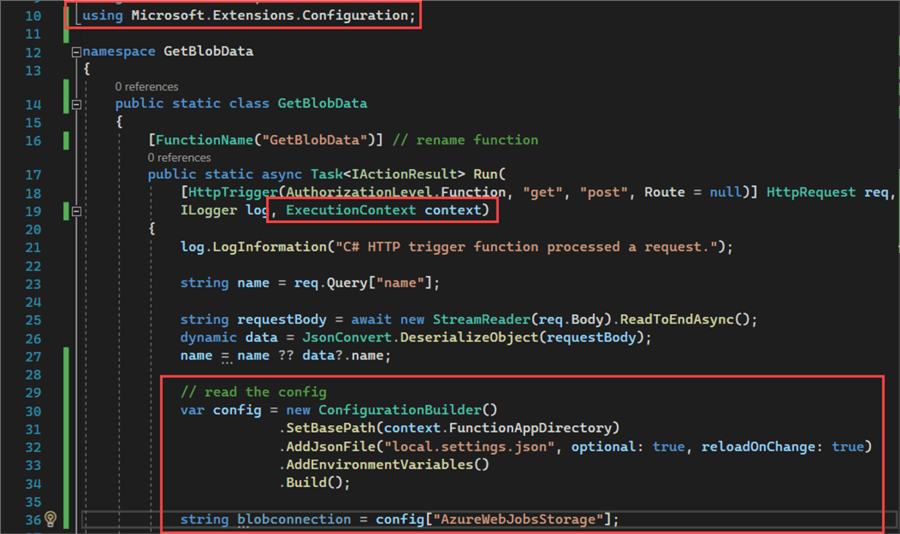

By adding a few lines of code, we can read this config file into our Azure Function:

This ConfigurationBuilder object combines several configuration sources. It starts from the directory of the Azure Function App (which hosts the Azure Function when it's deployed in the cloud), so it knows where to look for settings files. In the next step (line 32), the contents of the local.settings.json file are added. This is needed to debug our Azure Function locally in Visual Studio. This settings file is not deployed to the Azure Function App! In fact, it should not even be in source control since it contains secrets. Lines 33-34 add the environment variables and build the configuration. When the Azure Function runs in its serverless environment in Azure, the application settings of the Function App are exposed as environment variables, which means we can specify configuration parameters there for the cloud. If the same key is found in more than one source, the source that was added last takes precedence.

Finally, in line 36, we read the connection string for the Azure Storage account into a variable from the config.
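Because the Functions host exposes the application settings of the Function App as environment variables (and, when you run locally, the Values section of local.settings.json as well), a lighter-weight alternative to the ConfigurationBuilder is to read the setting directly. This is a sketch under that assumption; GetRequiredSetting is a hypothetical helper, and the SetEnvironmentVariable call only simulates the host so the snippet can run on its own.

```csharp
using System;

// Hypothetical helper: reads an app setting that the Functions host exposes as an
// environment variable and fails fast with a clear message when it is missing.
static string GetRequiredSetting(string key)
{
    string? value = Environment.GetEnvironmentVariable(key);
    if (string.IsNullOrEmpty(value))
    {
        throw new InvalidOperationException($"App setting '{key}' is not configured.");
    }
    return value;
}

// Only needed when trying this outside the Functions host: simulate the setting.
// "UseDevelopmentStorage=true" points the Storage SDK at the local Azurite emulator.
Environment.SetEnvironmentVariable("AzureWebJobsStorage", "UseDevelopmentStorage=true");

string blobconnection = GetRequiredSetting("AzureWebJobsStorage");
Console.WriteLine(blobconnection);   // UseDevelopmentStorage=true
```

Failing fast with the setting name in the message makes a missing app setting in Azure much easier to diagnose than a null reference further down the code.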

Connecting to an Azure Blob Container

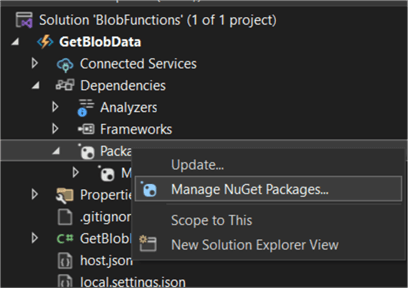

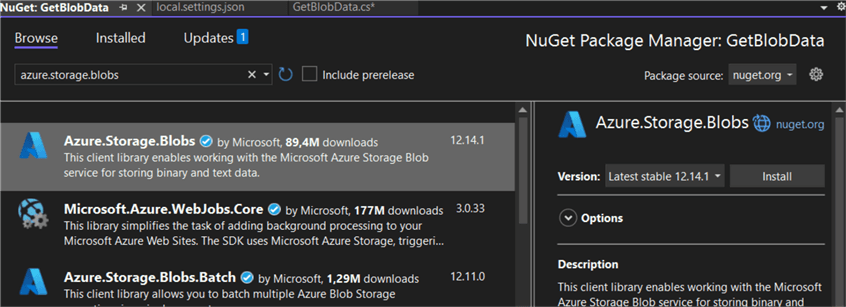

Before we can connect to a container, we need to install some additional packages into our project. This is done through the NuGet Package Manager, which comes with Visual Studio. Right-click Packages under Dependencies and choose Manage NuGet Packages...

In the package manager, go to Browse and search for Azure.Storage.Blobs. Install the package. You might have to accept one or more license agreements.

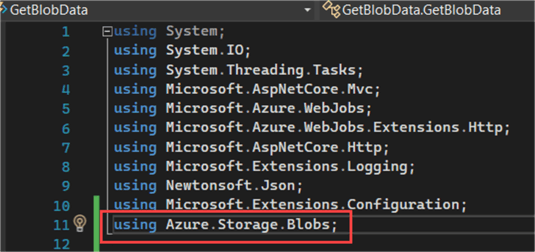

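In the code of the Azure Function, add a using directive for the namespace of the new package (Azure.Storage.Blobs; types such as BlobHierarchyItem live in Azure.Storage.Blobs.Models):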

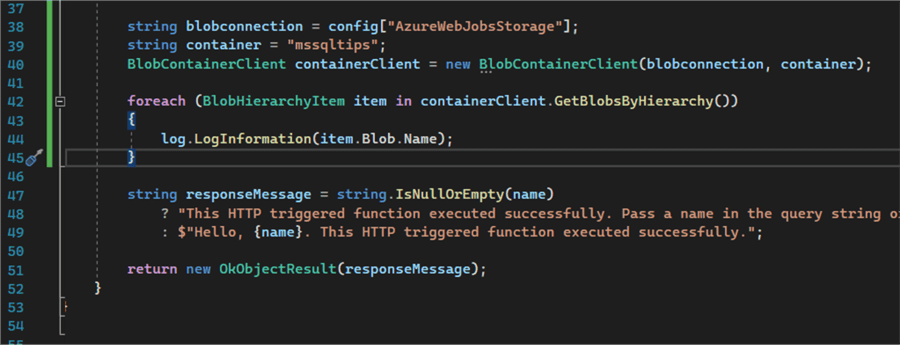

Let's add some code that creates a connection to a specific blob container, loops over all the items in that container, and writes each file name to the log. This is done by creating an instance of the class BlobContainerClient, whose constructor accepts two arguments: the connection string of the storage account (which we retrieved earlier from the config) and the name of the container ("mssqltips" in this use case).

Then we can use the method GetBlobsByHierarchy, which returns a pageable collection of BlobHierarchyItem objects (the SDK fetches the results page by page while we enumerate them). For each item, we get the blob and log its name.

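```csharp
// Requires using directives for Azure.Storage.Blobs and Azure.Storage.Blobs.Models at the top of the file.
// The connection string comes from the configuration built earlier.
string blobconnection = config["AzureWebJobsStorage"];

// Name of the blob container to browse
string container = "mssqltips";

BlobContainerClient containerClient = new BlobContainerClient(blobconnection, container);

// Enumerate the items in the container and log the name of each blob
foreach (BlobHierarchyItem item in containerClient.GetBlobsByHierarchy())
{
    // When a delimiter is used, items can also be virtual folders (prefixes) without a Blob
    if (item.IsBlob)
    {
        log.LogInformation(item.Blob.Name);
    }
}
```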
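Blob Storage has a flat namespace: a "folder" is just the part of a blob name up to a / character. When GetBlobsByHierarchy is called with a delimiter such as "/", the service returns the blobs at the requested level plus one prefix per virtual folder; in the SDK those prefixes come back as BlobHierarchyItem objects with IsPrefix set to true and no Blob, so it's worth checking item.IsBlob before reading item.Blob. The sketch below models that grouping locally on a list of names without calling the SDK; ListLevel and the sample blob names are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical local model of delimiter-based listing (no SDK call): for a prefix, return the
// blob names directly at that level and the virtual "folders" (prefixes) one level below it.
static (List<string> Blobs, List<string> Prefixes) ListLevel(
    IEnumerable<string> blobNames, string prefix, char delimiter = '/')
{
    var blobs = new List<string>();
    var prefixes = new SortedSet<string>(StringComparer.Ordinal);

    foreach (string name in blobNames.Where(n => n.StartsWith(prefix, StringComparison.Ordinal)))
    {
        // Find the next delimiter after the requested prefix.
        int index = name.IndexOf(delimiter, prefix.Length);
        if (index < 0)
        {
            blobs.Add(name);                              // a blob at this level
        }
        else
        {
            prefixes.Add(name.Substring(0, index + 1));   // a virtual folder, e.g. "input/"
        }
    }

    return (blobs, prefixes.ToList());
}

// Hypothetical blob names in a container.
string[] names = { "readme.txt", "input/sales.xlsx", "input/2023/jan.xlsx", "output/result.csv" };

var root = ListLevel(names, "");
Console.WriteLine(string.Join(", ", root.Blobs));       // readme.txt
Console.WriteLine(string.Join(", ", root.Prefixes));    // input/, output/
```

Calling ListLevel(names, "input/") would return input/sales.xlsx as a blob and input/2023/ as a prefix, which is how you can walk a container folder by folder instead of listing everything at once.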

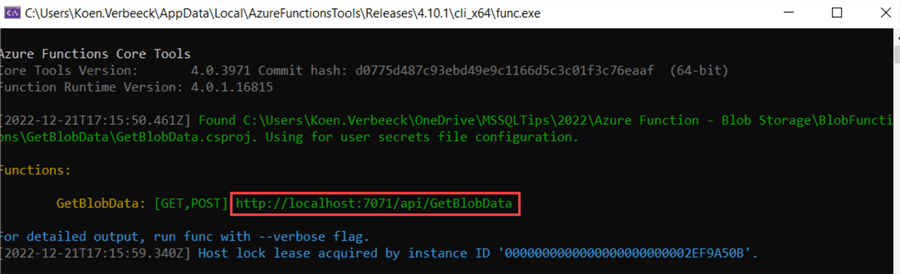

When we run the Azure Function in Visual Studio (hit F5), a console window opens where you can find the local URL of the Azure Function:

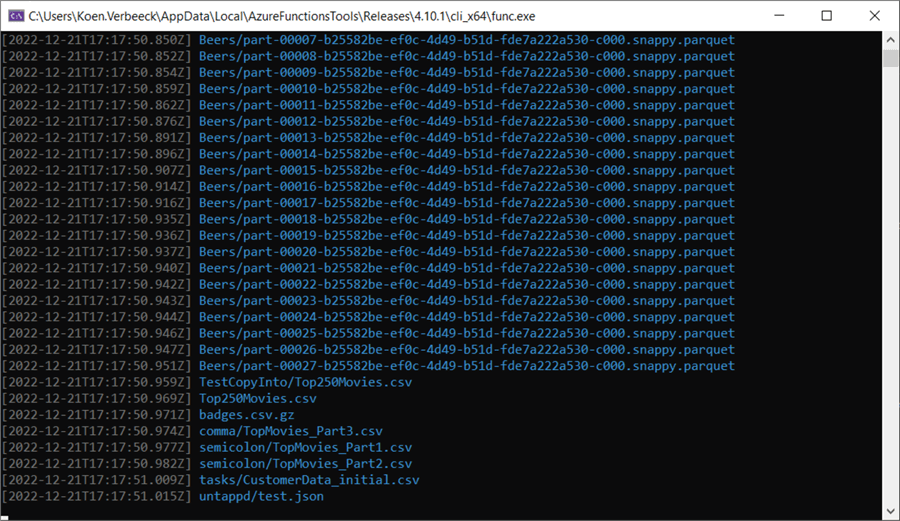

The Azure Function will be triggered if you open a browser and go to this URL. When it's triggered, you can see all of the names of the different blobs in the blob container being logged to the console:

Now that we've verified that we can connect successfully to an Azure Storage account and blob container, we can try to retrieve a single blob file.

Retrieving a Blob from a Blob Container

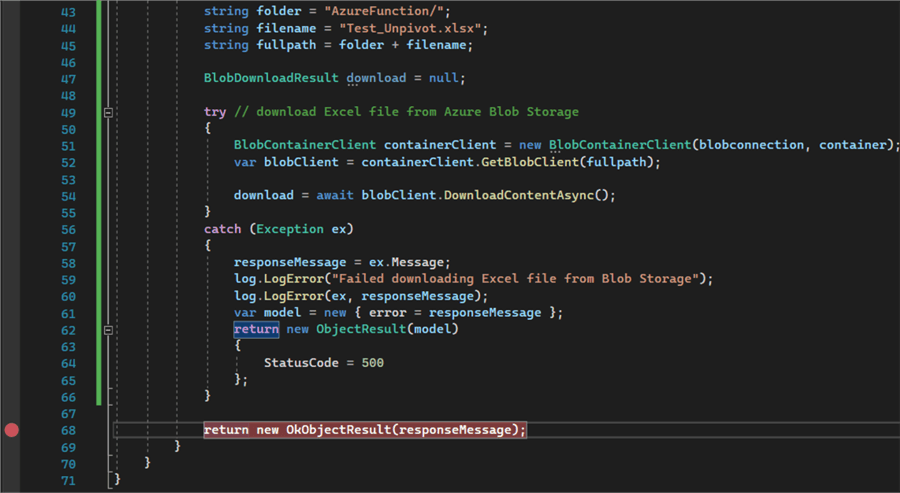

The rest of the code looks like this:

First, we define some variables holding the filename of the blob we want to download and the name of the folder where this blob is located. We concatenate this together into a full file path.
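Since blob names use / as the separator for virtual folders, building the full path is plain string work. A minimal sketch that tolerates stray leading or trailing slashes; BuildBlobPath and the folder and file names are hypothetical placeholders, not the values used in the sample code.

```csharp
using System;

// Hypothetical helper: joins a virtual folder and a file name into a blob name.
// Slashes are trimmed so "input/" + "/sales.xlsx" does not become "input//sales.xlsx",
// which would not match the intended blob.
static string BuildBlobPath(string folder, string fileName)
{
    string trimmedFolder = folder.Trim('/');
    string trimmedFile = fileName.TrimStart('/');

    // A blob in the root of the container has no folder part.
    return trimmedFolder.Length == 0 ? trimmedFile : $"{trimmedFolder}/{trimmedFile}";
}

Console.WriteLine(BuildBlobPath("input/", "sales.xlsx"));   // input/sales.xlsx
Console.WriteLine(BuildBlobPath("", "readme.txt"));         // readme.txt
```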

Then we declare a BlobDownloadResult variable. Inside a try-catch block, we try to download the file. Now we're using the method GetBlobClient, which returns a client for a single blob, and the asynchronous method DownloadContentAsync to retrieve the blob from the Azure service. The result of DownloadContentAsync is stored in the BlobDownloadResult variable.

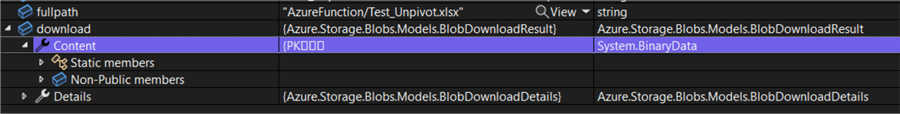

When we debug our Azure Function again (I've put a breakpoint on the last line), we can see that the function has retrieved the file successfully. However, we cannot inspect its contents directly since it's a binary file.
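The downloaded content is just bytes. If the blob is an .xlsx workbook (the file type the next tip works with), those bytes form a ZIP package, so a cheap sanity check before handing them to an Excel library is to look for the ZIP signature and a few well-known entries. This is a heuristic sketch using only System.IO.Compression; LooksLikeXlsx is a hypothetical helper, and inside the Function the byte array could come from something like downloadResult.Content.ToArray(), assuming your BlobDownloadResult variable is called downloadResult.

```csharp
using System;
using System.IO;
using System.IO.Compression;
using System.Linq;

// Hypothetical heuristic: an .xlsx workbook is a ZIP package, so it should start with the
// ZIP local file header signature "PK\x03\x04" and contain [Content_Types].xml plus
// entries under the xl/ folder.
static bool LooksLikeXlsx(byte[] content)
{
    if (content.Length < 4 || content[0] != 0x50 || content[1] != 0x4B ||
        content[2] != 0x03 || content[3] != 0x04)
    {
        return false;
    }

    try
    {
        using var archive = new ZipArchive(new MemoryStream(content), ZipArchiveMode.Read);
        var entryNames = archive.Entries.Select(e => e.FullName).ToList();
        return entryNames.Contains("[Content_Types].xml") &&
               entryNames.Any(n => n.StartsWith("xl/", StringComparison.Ordinal));
    }
    catch (InvalidDataException)
    {
        // The signature matched, but the archive itself is truncated or corrupt.
        return false;
    }
}

// Demo: an in-memory ZIP containing only the entries the check looks for.
byte[] fakeWorkbook;
using (var buffer = new MemoryStream())
{
    using (var zip = new ZipArchive(buffer, ZipArchiveMode.Create, leaveOpen: true))
    {
        zip.CreateEntry("[Content_Types].xml");
        zip.CreateEntry("xl/workbook.xml");
    }
    fakeWorkbook = buffer.ToArray();
}

Console.WriteLine(LooksLikeXlsx(fakeWorkbook));                                    // True
Console.WriteLine(LooksLikeXlsx(System.Text.Encoding.UTF8.GetBytes("id,name")));   // False
```

A check like this only tells you the bytes look like an Excel package; actually reading sheets and cells still needs a library that understands the format.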

In the next tip, we'll look at how we can read the contents of an Excel file inside an Azure Function.

Next Steps

- As an exercise for the reader, try to pass the file name and the folder as parameters through the HTTP request body instead of hardcoding them.

- You can find more Azure tips in this overview. You can filter on "function" to find all the tips related to Azure Functions.

- You can download the sample code here.

About the author

Koen Verbeeck is a seasoned business intelligence consultant at AE. He has over a decade of experience with the Microsoft Data Platform in numerous industries. He holds several certifications and is a prolific writer contributing content about SSIS, ADF, SSAS, SSRS, MDS, Power BI, Snowflake and Azure services. He has spoken at PASS, SQLBits, dataMinds Connect and delivers webinars on MSSQLTips.com. Koen has been awarded the Microsoft MVP data platform award for many years.

This author pledges the content of this article is based on professional experience and not AI generated.
