By: Jeffrey Yao | Comments | Related: > SQL Server 2017

Problem

We usually set up our SQL Server transaction log backup job to run every 30 minutes for our 30+ databases and we have log-shipping setup for two databases to a remote location as per business requirements. Sometimes a SQL Server transaction log backup file size can be very large and makes the log-shipping latency much longer due to network bandwidth limitations. Is there a way we can do our SQL Server transaction log backup based on the data change volume, which usually determines the transaction log backup size?

Solution

Microsoft has never documented how the database log works, such as its format, its logging mechanism/algorithm, etc. But SQL Server 2017 has introduced a few improvements in some DMVs that expose more details about the status of data and log changes, and we can use the new information to design a better backup strategy. In this tip, we will explore these new DMVs and how we can use them to build a smart backup process.

This is an old DMV, but in SQL Server 2017, it adds a new column [modified_extent_page_count], which tells the total number of pages modified in allocated extents of the file since the last full database backup.

A common practice for SQL Server backups is to execute a weekly full backup plus a daily differential backup. With this new [modified_extent_page_count] column, we can set up a threshold (based on our own business requirements), once the threshold is reached, we can trigger a differential backup (or a transaction log backup if necessary). This information can prevent us from executing backups blindly by following schedules, but instead execute backups when needed.

This is a new DMV, and it returns a column [log_since_last_log_backup_mb]. With this information, we can set up a threshold for log size, once the threshold is reached, we will execute a transaction log backup.

Environment Setup

We will first create a sample database with the full recovery model and then create a table and populate it with 500,000 rows of random data.

use master

go

drop database if exists mssqltips;

go

create database MSSQLTips

on primary (name=mssqltips_data, filename='c:\data\mssqltips_data.mdf', size=12mb, filegrowth=4mb)

log on (name=mssqltips_log, filename='c:\data\mssqltips_log.ldf', size=5mb, filegrowth=2mb);

go

use MSSQLTips

if object_id('dbo.Record', 'U') is not null

drop table dbo.Record;

create table dbo.Record (id int primary key, [name] varchar(200), x int, dt datetime default getdate());

create index idx_Record_name on dbo.Record (name, x);

create index idx_record_x on dbo.Record (x, dt)

go

set nocount on;

; with L0 as (select c from (values (1), (1)) as T(c))

, L1 as (select 1 as c from L0 as A cross join L0 as B)

, L2 as (select 1 as c from L1 as A cross join L1 as B)

, L3 as (select 1 as c from L2 as A cross join L2 as B)

, L4 as (select 1 as c from L3 as A cross join L3 as B)

, L5 as (select 1 as c from L4 as A cross join L4 as B)

, num (rn) as (select rn = row_number() over (order by (select 1)) from L5)

insert into dbo.record (id, [name], x)

select top (500000) rn, substring(convert(varchar(300),NEWID()),1, cast(ceiling(rand()*123456789) as int) %200 )

, cast(ceiling(rand(rn)*123456789) as int)

from num;

go

alter database MSSQLTips set recovery full;

go

On-Demand SQL Server Differential Backup

We will first look at how to execute a SQL Server differential backup. Letís say we will execute a differential backup once the data modification reaches a threshold, say 20MB, i.e. 2560 pages (8kb/page * 2560 pages = 20480KB = 20MB). In terms of events, it should be:

- Full SQL Server backup as a baseline

- If data change reaches 20MB or beyond, start a SQL Server differential backup

- If data change reaches 40MB or beyond, start a SQL Server differential backup

- Continue until a full SQL Server backup (letís say, we still stick to weekly full backup)

Note, since a differential backup will not reset the [modified_extent_page_count] column of the sys.dm_db_file_space_usage DMV, it means the next differential backup will occur only when the data change volume reaches 2X the threshold = 2 X 20MB = 40 MB (20MB is the defined threshold in our demo), and next differential will be 3X threshold = 60MB, so on and so forth.

On-Demand SQL Server Transaction Log Backup

For the SQL Server transaction log backup, the idea is similar, we first define a threshold, say 2MB, once the [log_since_last_log_backup_mb] column of sys.dm_db_log_stats reaches 2+MB, we will trigger a transaction log backup. The good thing here is once a transaction log backup is done, the column will be reset to nearly 0, and we just need to monitor this column to compare its value with the threshold value before starting another transaction log backup. We do not need to calculate how many transaction log backups have been done previously as we do with differential backups. So in terms of events, it should be like:

- Full SQL Server backup as a baseline

- If [log_since_last_log_backup_mb] reaches the threshold, start a SQL Server transaction log backup

- Repeat step 2 until a full SQL Server backup and then start again.

Implementation

To do on-demand backups, the ideal way is to have an event-driven architecture, that is whenever there is any change to our target, i.e. the targeted columns [modified_extent_page_count] and [log_since_last_log_backup_mb], we will evaluate the value against our thresholds and take actions when the condition is right.

However, there is no obvious way for this event-driven approach, so we instead choose to scan the two columns via a polling process that runs every [X] minutes.

So here is the polling process:

-- Fig-1 script -- on-demand differential backup implementation use master; declare @debug bit = 1; -- 1= print out, 0=execute -- define threshold declare @diff_bkup_threshold_mb int = 20.0; declare @bkup_device varchar(128)='c:\backup\'; -- define your own backup device path set nocount on; -- first check out the differential backup drop table if exists #t; create table #t (dbname varchar(128), diff_bkup_cnt int, IsDiff bit default 0); -- IsDiff = 1, means the db needs diff backup ; with c as ( -- using cTE to find the latest full backup select db.name, backup_finish_date=max(b.backup_finish_date) from msdb.dbo.backupset b inner join master.sys.databases db on b.database_name=db.name and b.type='D' group by db.name ) -- find the # of differential backups after the latest full backup for each db insert into #t (DBName, diff_bkup_cnt) select [DBName]=c.name, cnt=sum(case when b.database_name is not null then 1 else 0 end) from msdb.dbo.backupset b right join c on b.database_name=c.name and b.type='I' and b.backup_finish_date > c.backup_finish_date group by c.name; -- find dbs that need differential backup declare @sqlcmd varchar(max) = ' use [?] ; with c as ( select [DBName]=db_name(database_id) , TotalPage=sum(total_page_count) , ModifiedPage= sum(modified_extent_page_count) from sys.dm_db_file_space_usage u group by database_id ) update t set IsDiff=1 from #t t inner join c on c.DBName=t.DBName where c.ModifiedPage > (t.diff_bkup_cnt+1)*' + cast( @diff_bkup_threshold_mb*1024/8 as varchar(20)) exec sp_MSforeachdb @sqlcmd; -- loop through each database on a server -- now based on [IsDiff] in #t, we decide which db to do the diff backup set @sqlcmd = ''; select @sqlcmd += 'backup database ' + dbname + ' to disk='''+@bkup_device+ dbname+'_diff_' + replace(replace(replace(convert(varchar, current_timestamp, 120), ':', ''), '-', ''),' ','_') +'.bak'' with init, compression, differential;' + char(0x0d) + char(0x0d) from #t where isDiff=1 if @debug = 1 print case when @sqlcmd='' then 'No Differential backup needed' else @sqlcmd end;; else exec (@sqlcmd);

Ok, we will test the Fig-1 script by going through a few scenarios.

First we will do a full backup and then check the modified pages.

--exec msdb.dbo.sp_delete_backuphistory '2030-12-31'; -- cleanup backup history for testing -- first do a fullbackup as a baseline backup database MSSQLTips to disk='c:\backup\mssqltips_full.bak' with init, compression; -- now we can check the modified page -- now we can check the modified page select [DBName]=db_name(database_id) , TotalPage= sum(total_page_count) , ModifiedPage=sum(modified_extent_page_count) from sys.dm_db_file_space_usage group by database_id;

We get:

Now letís do some data changes and later check modified page again.

-- do some data changes delete top (5000) from dbo.Record where id > 300000;

Since the modified page reaches 3576 pages, i.e. 3576 page * 8kB/page=28608KB, about 28MB > 20MB (our defined threshold value in @diff_bkup_threshold_mb of Fig-1 script), when we run the Fig-1 script, we expect a differential backup statement generated (with @debug=1).

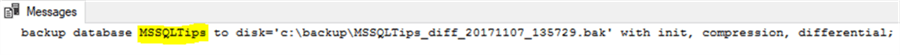

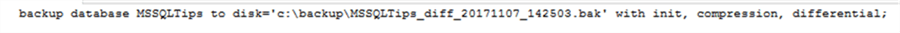

If we set @debug=0 in Fig-1 script, we will automatically run the differential backup as shown below:

Now assuming we are not doing DMLs inside the [MSSQLTips] database, after this differential backup, if we run the Fig-1 script again, we should not expect a differential backup statement to be generated, because to generate the 2nd differential backup, the modified page volume should be 2 times the threshold value (20MB), i.e. 40MB. But our current change is only 3600 pages = 28.125 MB.

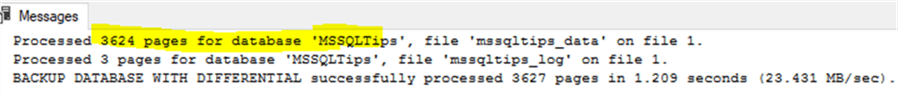

When I run Fig-1 script again, I get:

This is exactly as expected.

Now if we do another big chunk of data changes as follows:

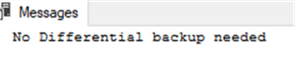

-- another big chunk of data change update top (35000) r set x=x+10, name='hello'+name, dt=dt+1 from dbo.Record r where id > 1000; -- check modified page again select [DBName]=db_name(database_id) , TotalPage= sum(total_page_count) , ModifiedPage=sum(modified_extent_page_count) from sys.dm_db_file_space_usage group by database_id;

Since 5312 pages = 5312 * 8 KB/page = 42496 KB = 41.5 MB > 2 * 20 MB (threshold value), if we run Fig-1 script again, we should see a differential backup statement printed, which is what we expect.

So the test case proves the logic of the Fig-1 script for on-demand differential backup.

Now we will take a look at on-demand log backup implementation. Actually, the logic is simpler than the differential because the column [log_since_last_log_backup_mb] is reset after each log backup.

-- recreate / populate MSSQLTips database

use master

go

drop database if exists mssqltips;

go

create database MSSQLTips

on primary (name=mssqltips_data, filename='c:\data\mssqltips_data.mdf', size=12mb, filegrowth=4mb)

log on (name=mssqltips_log, filename='c:\data\mssqltips_log.ldf', size=5mb, filegrowth=2mb);

go

use MSSQLTips

if object_id('dbo.Record', 'U') is not null

drop table dbo.Record;

create table dbo.Record (id int primary key, [name] varchar(200), x int, dt datetime default getdate());

create index idx_Record_name on dbo.Record (name, x);

create index idx_record_x on dbo.Record (x, dt)

go

set nocount on;

; with L0 as (select c from (values (1), (1)) as T(c))

, L1 as (select 1 as c from L0 as A cross join L0 as B)

, L2 as (select 1 as c from L1 as A cross join L1 as B)

, L3 as (select 1 as c from L2 as A cross join L2 as B)

, L4 as (select 1 as c from L3 as A cross join L3 as B)

, L5 as (select 1 as c from L4 as A cross join L4 as B)

, num (rn) as (select rn = row_number() over (order by (select 1)) from L5)

insert into dbo.record (id, [name], x)

select top (500000) rn, substring(convert(varchar(300),NEWID()),1, cast(ceiling(rand()*123456789) as int) %200 )

, cast(ceiling(rand(rn)*123456789) as int)

from num;

go

alter database MSSQLTips set recovery full;

-- first do a fullbackup as a baseline

backup database MSSQLTips to disk='c:\backup\mssqltips_full.bak' with init, compression;

Now letís check DMV sys.dm_db_log_stats:

select db_name(database_id) as DBName

, log_since_last_log_backup_mb, log_truncation_holdup_reason

from sys.dm_db_log_stats(db_id('mssqltips'))

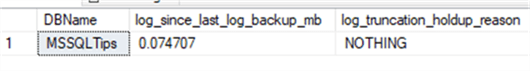

We get the following:

Now letís do some data changes and check again.

-- let's do some data change

use MSSQLTips

update top (5000) r

set x=x+10, [name]=[name]+'123'

from dbo.Record r

where id > 300000;

-- check again

select db_name(database_id) as DBName

, log_since_last_log_backup_mb, log_truncation_holdup_reason

from sys.dm_db_log_stats(db_id('mssqltips'));

We can see [log_since_last_log_backup_mb] is now increased to about 3.5 MB. We can do a log backup and then re-check.

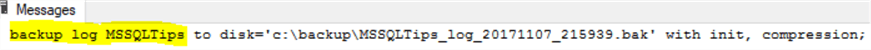

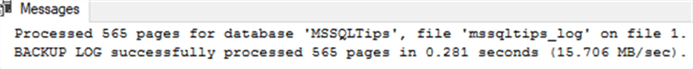

-- do a log backup

backup log MSSQLTips to disk='c:\backup\mssqltips_log.bak' with init, compression;

checkpoint;

-- check again

select db_name(database_id) as DBName

, log_since_last_log_backup_mb, log_truncation_holdup_reason

from sys.dm_db_log_stats(db_id('mssqltips'));

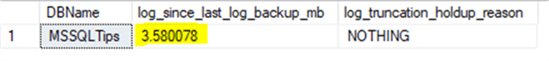

![see [log_since_last_log_backup_mb] is now increased to about 3.5 MB. We can do a log backup and then re-check.](/tipimages2/5216_on-demand-backup-sql-server-2017.010.png)

This shows that after a log backup, the column [log_since_last_log_backup_mb] will be reset.

The following script will do an on-demand log backup based on a pre-defined threshold value.

-- Fig-2 script -- on-demand log backup implementation use master; set nocount on; declare @debug bit = 1; -- 1= print out, 0=execute -- define threshold declare @log_bkup_threshold_mb float = 2.0; -- change to your own requirement declare @bkup_device varchar(128)='c:\backup\'; -- define your own backup device path declare @sqlcmd varchar(max)=''; -- first check out the differential backup select @sqlcmd += 'backup log ' + d.name + ' to disk='''+@bkup_device+ d.name+'_log_' + replace(replace(replace(convert(varchar, current_timestamp, 120), ':', ''), '-', ''),' ','_') +'.bak'' with init, compression;' + char(0x0d) + char(0x0d) from sys.databases d cross apply sys.dm_db_log_stats(d.database_id) s where d.recovery_model in (1,2) -- 1=Full / 2=bulk_ogged and d.database_id > 4 -- only for user dbs and s.log_since_last_log_backup_mb > @log_bkup_threshold_mb; print @sqlcmd if @debug = 1 print case when @sqlcmd='' then 'No log backup needed' else @sqlcmd end; else exec (@sqlcmd); go

Letís do a quick test to prove the logic of this on-demand transaction log backup.

Letís make some data changes:

-- do some data change

use MSSQLTips

update top (5000) r

set x=x+10, [name]=[name]+'123'

from dbo.Record r

where id > 100000;

checkpoint;

-- check log size

select db_name(database_id) as DBName

, log_since_last_log_backup_mb, log_truncation_holdup_reason

from sys.dm_db_log_stats(db_id('mssqltips'));

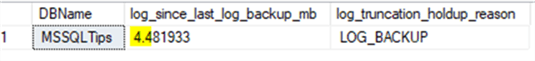

Now run Fig-2 script. Note, the @log_bkup_threshold_mb = 2.0 in the script, and after the data change, the current [log_since_last_log_backup_mb] is already 4.481933 mb. We should expect there is a log backup statement generated with @debug = 1.

The result is exactly as expected.

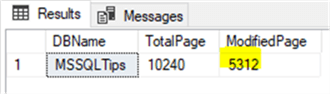

If we set @debug = 0, and run Fig-2 script again, we will see the log backup is indeed carried out.

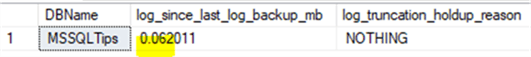

And if we check the log size, we should expect the column [log_since_last_log_backup_mb] be reset.

-- check log size

checkpoint;

select db_name(database_id) as DBName

, log_since_last_log_backup_mb, log_truncation_holdup_reason

from sys.dm_db_log_stats(db_id('mssqltips'));

The result is:

Summary

In this tip, we have explored how to execute on-demand SQL Server differential and transaction log backups with the new information in the SQL Server 2017 DMVs. The method discussed here can be applied to multiple databases in a instance when using only one threshold value.

In real complex environments, like multiple instances with multiple databases, there may be requirements that one database has a threshold value different from another. If so, I would use a more sophisticated approach, like creating a central configuration table where I can configure the threshold value for each server/database. I would also create a central table to keep the [modified_extent_page_count] for each database, so I can use the current DMV value to compare it for each database to ensure more accurate on-demand differential backups, and I do not even need to rely on msdb.dbo.backupset to count the number of previous differential backups.

Note: strictly speaking, for pre-SQL Server 2017, we can use dbcc sqlperf(logspace) to calculate the log size change and do on-demand log backup as well, but there is no (easy) way to determine the data change for a differential backup.

Next Steps

If you happen to need such backup approach instead of a purely time-based backup, you can modify scripts in Fig-1 and Fig-2 to meet your own requirements and put them in a job to run on a schedule.

You may also read the following to better understand the SQL Server transaction log and transaction log backups.

- SQL Server Transaction Log Backups

- SQL Server 2017 Transaction Log Backup Improvements.

- How to determine SQL Server database transaction log usage

- SQL Server Backups and Transaction Log Questions

About the author

Jeffrey Yao is a senior SQL Server consultant, striving to automate DBA work as much as possible to have more time for family, life and more automation.

Jeffrey Yao is a senior SQL Server consultant, striving to automate DBA work as much as possible to have more time for family, life and more automation.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips