By: John Miner

Problem

Microsoft announced the general availability of Azure Data Lake Storage (Generation 2) on 7 February 2019. This service promises to be scalable, cost effective and extremely secure. The cost savings of the service come from the use of Azure Blob Storage. Unlike Azure Data Lake Storage (Generation 1), this version of the service is widely available throughout Microsoft's data centers.

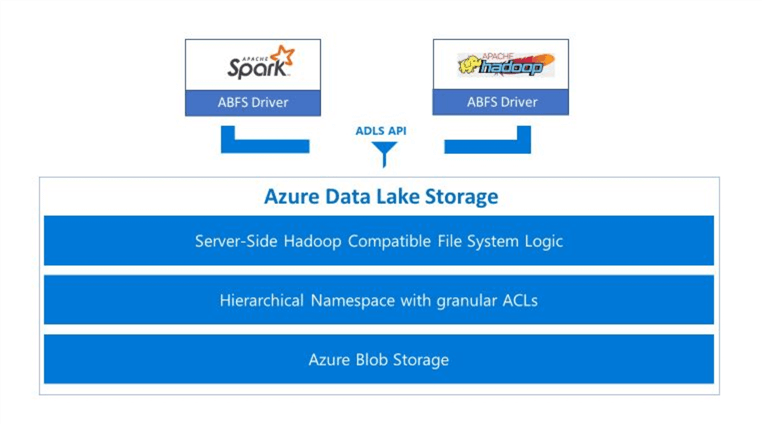

The high-performance Azure blob file system (ABFS) is built for big data analytics and is compatible with the Hadoop Distributed File System. Some of the attractive features of the new service are the following: encryption of data in transit with TLS 1.2, encryption of the data at rest, storage account firewalls, virtual network integration, role-based access security, and hierarchical namespaces with granular ACLs.

The image below highlights the benefits of the new service, which caters to Hadoop-ready products such as Azure Databricks, HDInsight and Azure Data Warehouse.

How can we create an ADLS Gen 2 file system within an Azure subscription?

Solution

While there are many benefits to this new data lake storage service, many Azure components are still not compatible with the service. I am sure this situation will change over time as the development team configures older services to use the new ABFS driver.

In the past, PowerShell was the language of choice to manage an ADLS Gen 1 file system. Today, the Azure portal is the easiest way to deploy an ADLS Gen 2 file system and manage role-based access. Additionally, Azure Storage Explorer is the preferred way to manage the file system and handle access control lists.

Business Problem

Azure Data Lake Storage is a high speed, scalable, secure and cost-effective platform that can be used to collate your company's data in the cloud.

The key objective of a modern data warehouse is to collate various company data sources into one central place in the cloud. The data scientists in the company can set up machine learning experiments using raw data files. Enriched data can be stored in Azure SQL Database using a star schema and typical data warehouse techniques. Comprehensive reporting can be generated by joining the fact and dimension tables using your favorite tool. In short, the data lake offers many possibilities to any modern organization.

Today, we are going to investigate how to deploy and manage Azure Data Lake Storage Gen 2 using the Azure Portal and Azure Storage Explorer. Again, we are going to be working with the S&P 500 stock data files for 2019.

Azure Storage Account

I am assuming you have an Azure subscription and are familiar with the Azure portal. If you are new to the Azure ecosystem, check out the free learning paths from Microsoft. Today, I am using the [email protected] account that has domain administrative rights to all objects in the subscription.

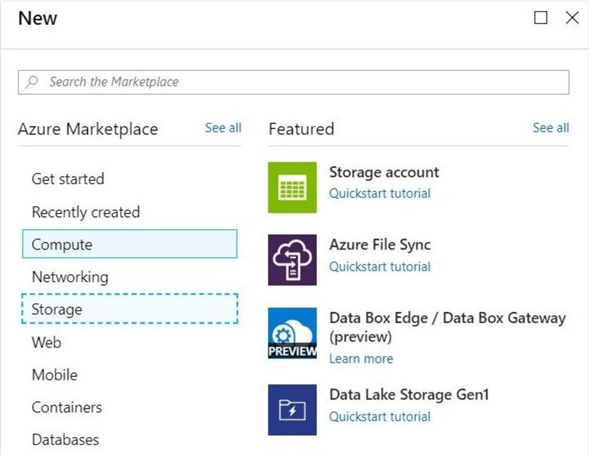

To start the deployment tasks, use the create resource button to bring up the new dialog box. Select the storage category from the Azure Marketplace. Choose a storage account object as the target type.

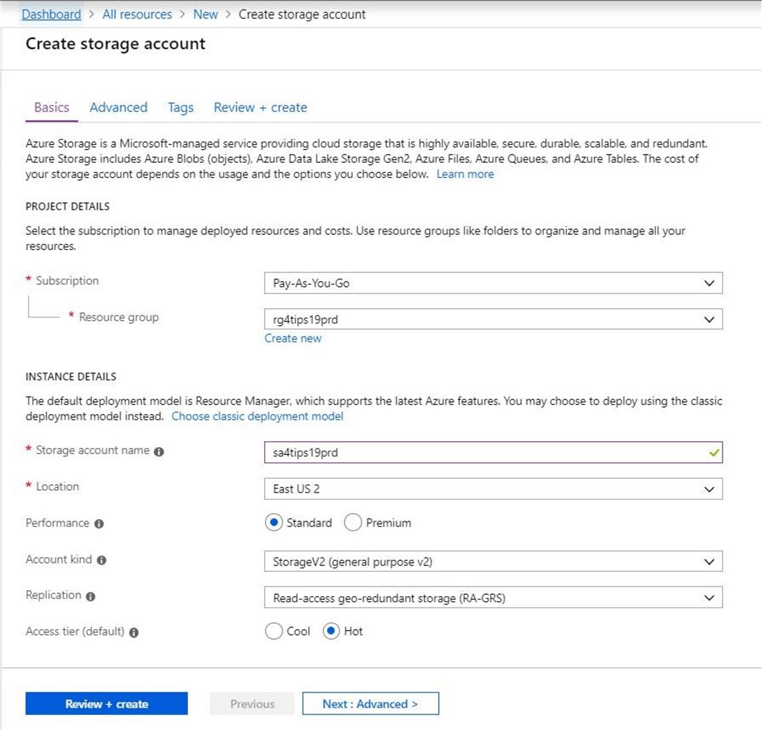

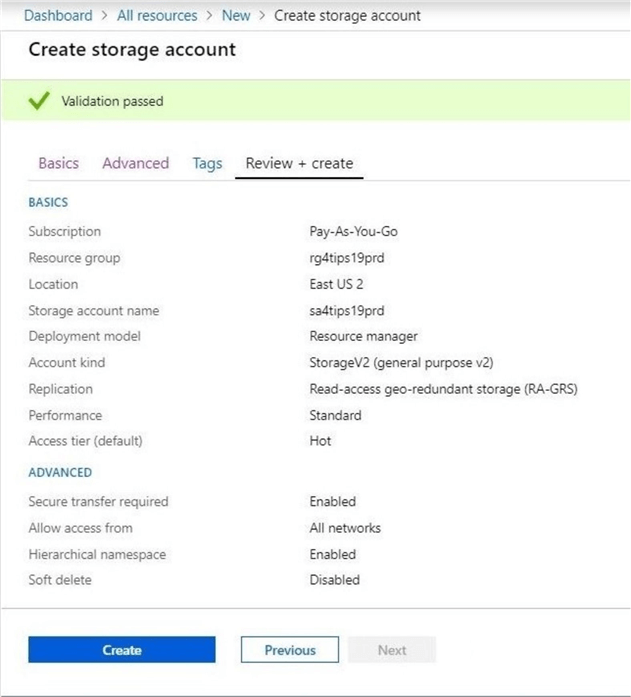

The next screen is the new create storage account form. Make sure you enter an existing resource group, choose a new storage account name and select Storage V2 as the storage kind. In the example below, the storage account named sa4tips19prd will be created in the resource group called rg4tips19prd that exists in the East US 2 data center.
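The portal rejects storage account names that break the naming rules (3 to 24 characters, lowercase letters and digits only). A minimal sketch of that check is shown here; the function name is hypothetical, and the sketch does not test whether the name is already taken, which only Azure can answer.

```python
import re

# Azure storage account names: 3-24 characters, lowercase letters and digits only.
_ACCOUNT_NAME = re.compile(r"[a-z0-9]{3,24}")


def is_valid_storage_account_name(name: str) -> bool:
    """Return True if the name satisfies the length and character rules."""
    return _ACCOUNT_NAME.fullmatch(name) is not None


print(is_valid_storage_account_name("sa4tips19prd"))  # the name used in this tip
print(is_valid_storage_account_name("SA4-Tips-19"))   # uppercase and hyphens are rejected
```

Running a check like this before filling in the form can save a round trip when names are generated by a script or a naming convention.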

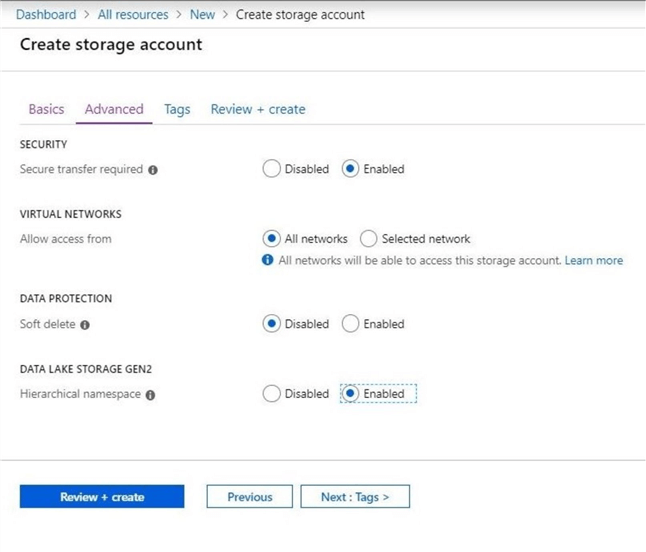

The most important step in the deployment task is to enable Data Lake Storage Gen 2 on the advanced options page.

The last step is to review the options that were picked before creating the new storage account.

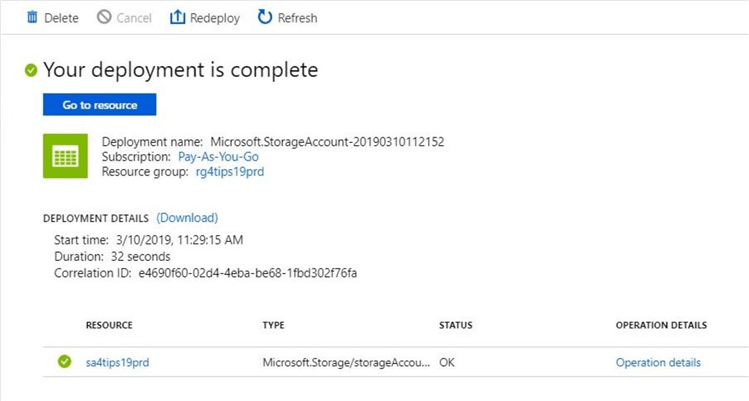

Click the create button to start the deployment of the storage account. If the deployment is successful, you should receive a notification like the one below.

The deployment of an Azure Data Lake Storage Gen 2 file system with a storage account is an extremely easy task.

Azure Portal

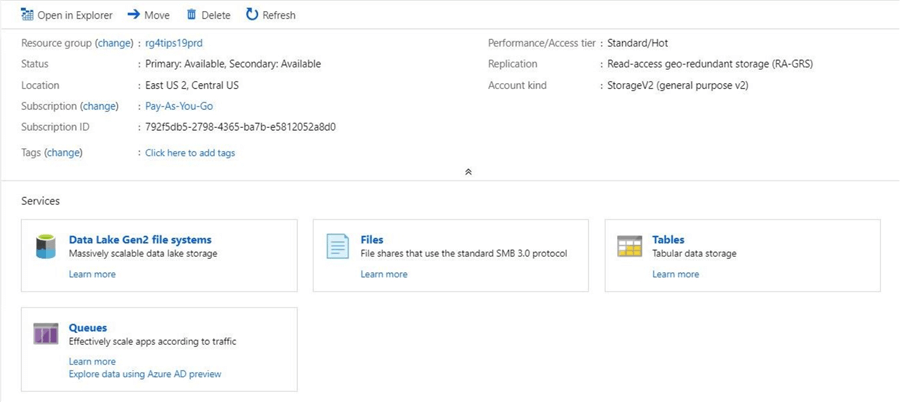

The portal can be used to configure role-based security and add file systems. The image below shows the overview of the new storage account. We can see that the blob service has been replaced with the ADLS Gen 2 file systems service.

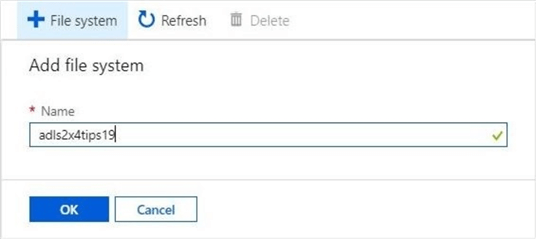

Initially, we do not have any file systems. Use the add file system button to change this condition. The image below shows the creation of a file system called adls2x4tips19.

A refresh of the file system list will reflect the addition of a new object.
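Once the file system exists, Hadoop-compatible tools reach it through the ABFS driver using a URI of the form `abfss://<file system>@<account>.dfs.core.windows.net/<path>`. The sketch below only assembles that string from the names used in this tip; the helper name is an assumption, and the stock-data folder is the one created later with Azure Storage Explorer.

```python
def abfss_uri(account: str, file_system: str, path: str = "") -> str:
    """Build an abfss:// URI for a path inside an ADLS Gen 2 file system."""
    clean_path = path.strip("/")  # avoid doubled or trailing slashes
    return f"abfss://{file_system}@{account}.dfs.core.windows.net/{clean_path}"


print(abfss_uri("sa4tips19prd", "adls2x4tips19", "stock-data"))
# abfss://adls2x4tips19@sa4tips19prd.dfs.core.windows.net/stock-data
```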

Double clicking the file system object will bring up this help screen. With the first generation of the service, there was a data explorer that could be launched from the portal to manage both the hierarchical file system and access control lists.

Currently, the second generation of the service supports Azure Storage Explorer to handle these maintenance tasks.

Azure Storage Explorer

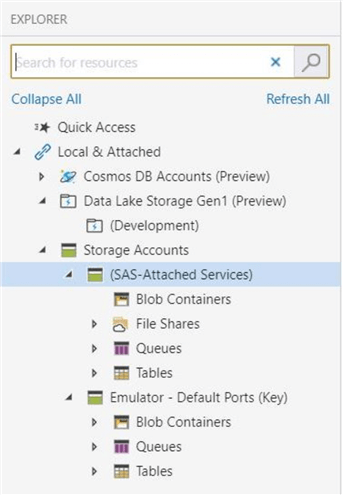

This free application allows you to manage a variety of storage services from a single console. If you do not have this software currently installed, browse this link to download the bits and check out the documentation.

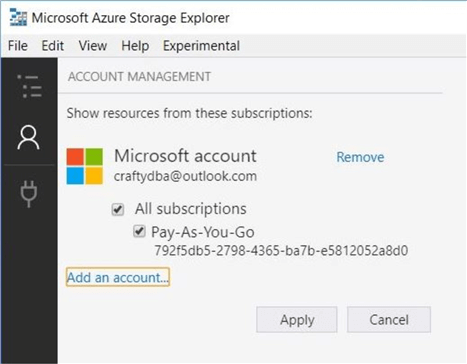

Before you can start using Azure Storage Explorer, you must add one or more user accounts. This involves logging into the Azure Portal with the credentials that you want to use. Please get familiar with this task. We will be using different accounts to test role-based security as well as access control lists.

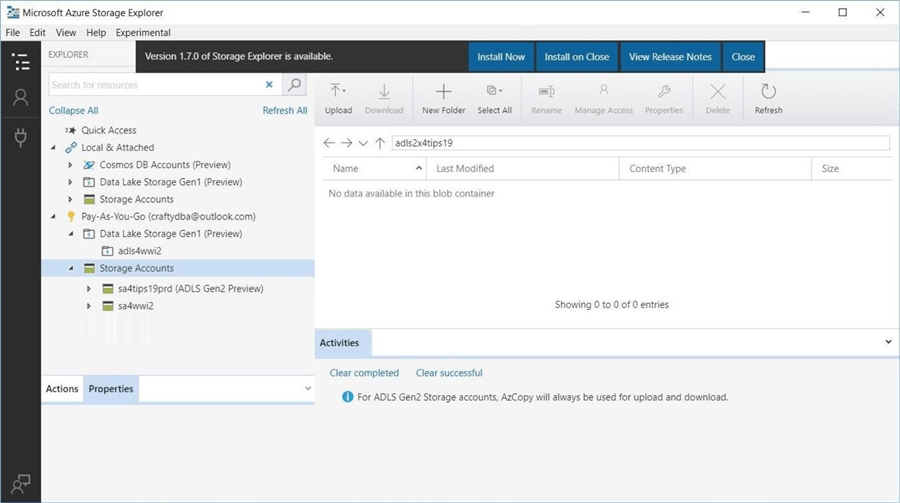

The image above shows the [email protected] account being added to the explorer. The image below shows the new Data Lake Storage Gen2 file system. It is important to note that this tool uses AzCopy.exe to perform the upload and download tasks.

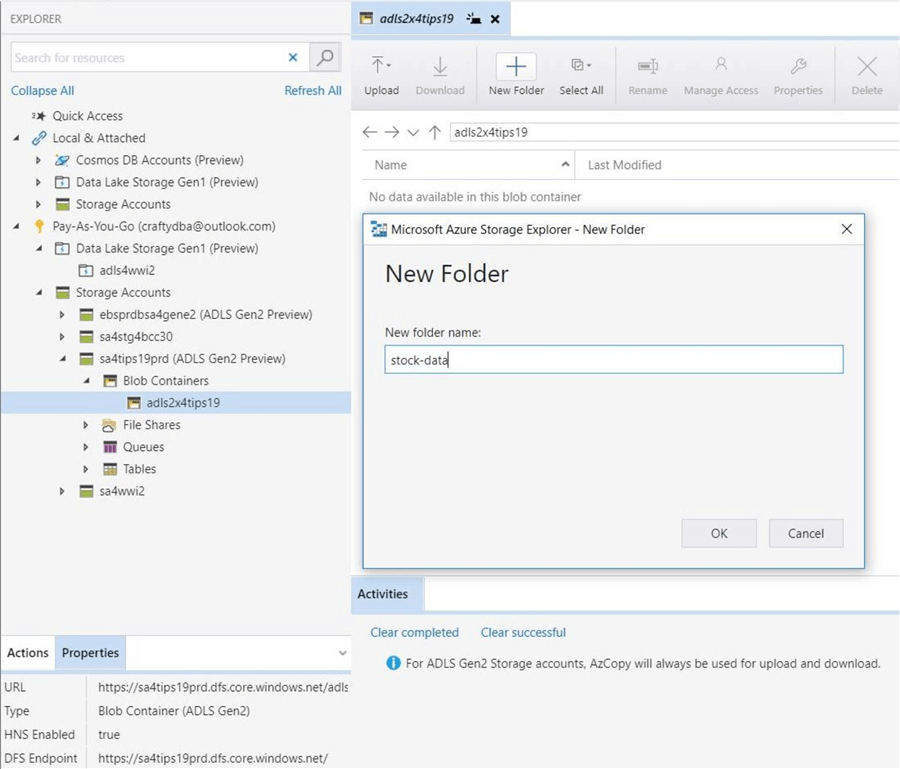

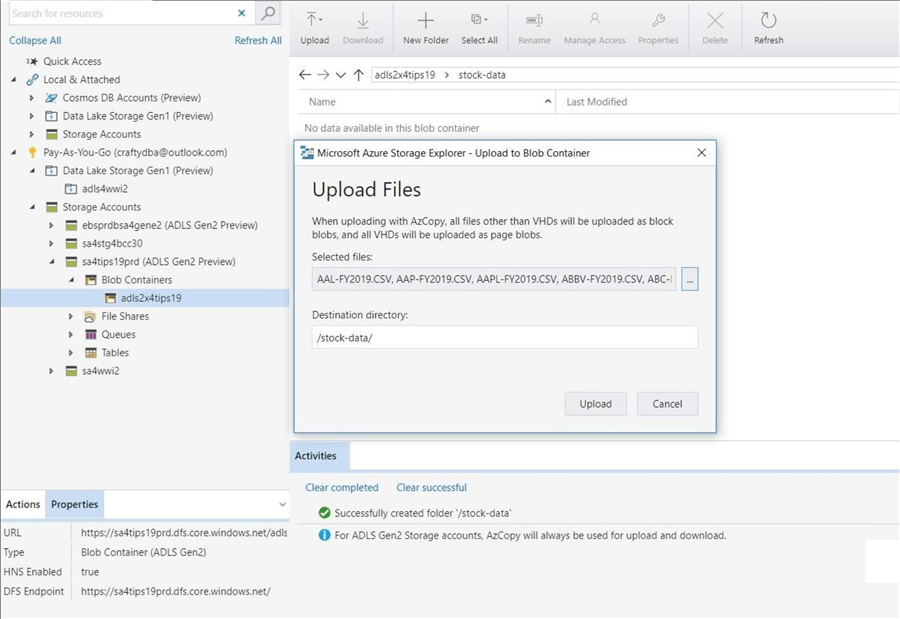

There are several folder and file tasks that we are interested in trying. Creating a new folder or deleting an existing one is a very common task. Our original business problem was to store S&P stock data for 2019 in the data lake. The image below shows the creation of a folder named stock-data.

The Azure Storage Explorer supports both upload folder and upload files. These actions are two drop down items under the upload button. The first item (technique) will create a new sub-directory which matches the source location. Since this side effect is not what we want, choose the upload files action. Use the [Control + A] key sequence to select all files in the c:\stocks\outbound directory to upload into the data lake. See the image below for details.

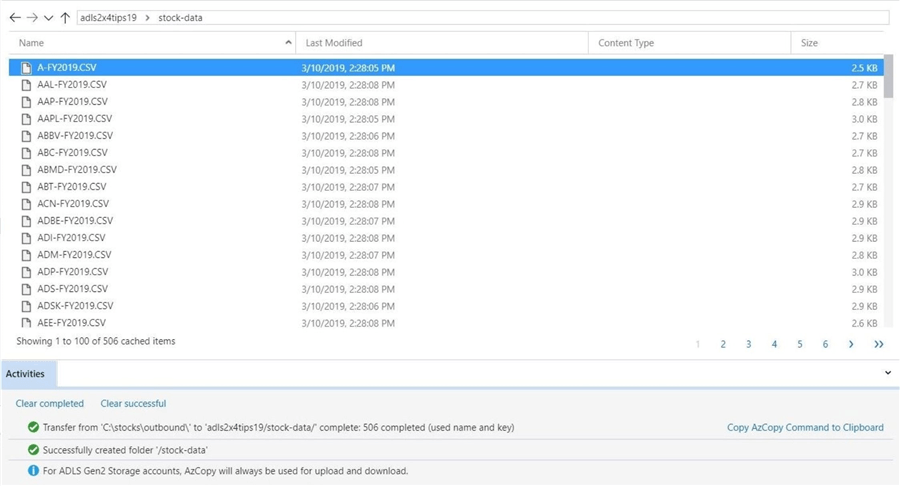

The storage explorer will prompt you to refresh the file and folder list. The activities section keeps track of any actions that you may perform. We can see that 506 files were uploaded into the stock-data directory.

There are many other file and folder activities that I will let you investigate. These tasks may include deleting files, deleting folders and downloading files. In a nutshell, the Azure Storage Explorer application can be used to accomplish any type of file system management.

Storage Account Firewall

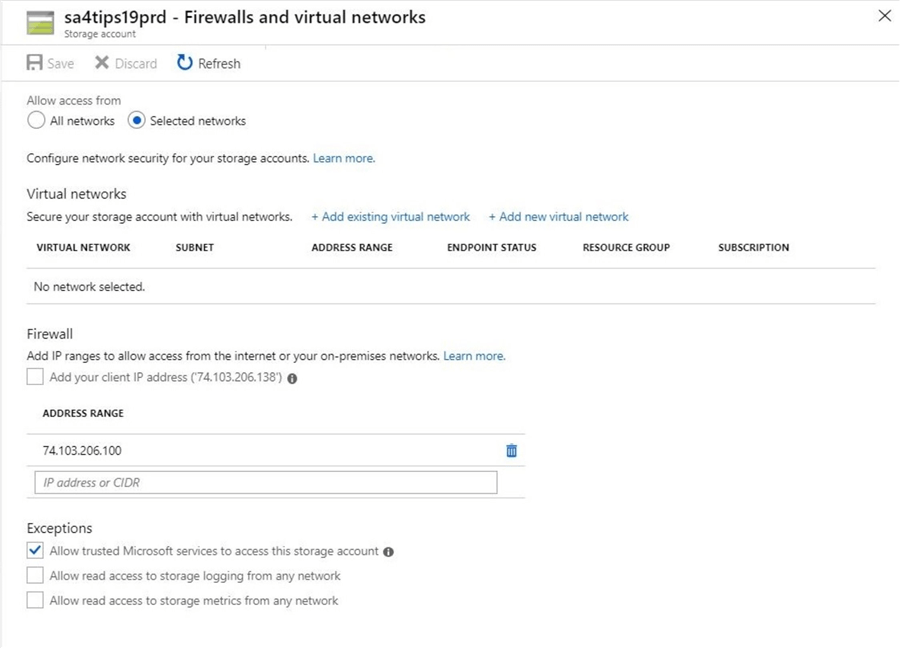

The addition of a firewall at the storage account level has greatly increased the security of Azure Blob Storage. In the past, storage could be used with an Access Key or Shared Access Signature. Any nefarious individual could use this simple string of text to access the service. Now, not only does the individual have to have access to the service but they have to have the right IP address.

Use the firewalls and virtual networks blade to enable the firewall. Change the allow access from radio button to point to selected networks. Add a custom IP address with the wrong information. The image below shows the class C section of the IP address having a value of 100 instead of 138. This fat fingering of the IP address will prevent the client from accessing the system.

If we refresh the Azure Storage Explorer window, the following error message occurs. That is great since our firewall is working. Unfortunately, the current computer can't access the storage service.

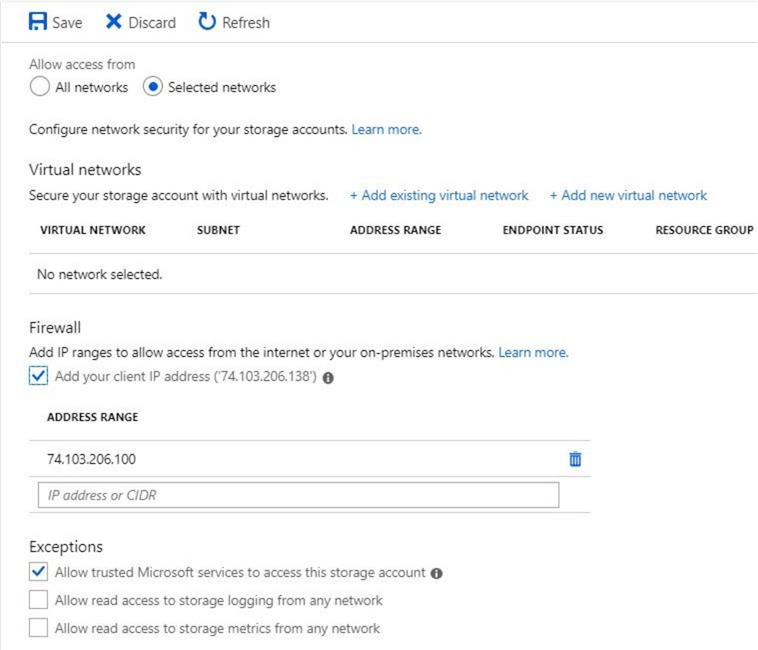

Another way to add the correct IP address is to use the check box next to add your client IP address.

A quick refresh of the Azure Storage Explorer file list window should show data instead of an access issue.
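The effect of the mistyped rule can be illustrated with a small sketch that tests a client address against a list of allowed addresses or CIDR ranges. This is only a model of the idea, not how the service evaluates rules internally. The addresses are hypothetical; only the third octet (138 versus 100) mirrors the example above.

```python
import ipaddress


def is_client_allowed(client_ip: str, allowed_rules: list[str]) -> bool:
    """Return True if client_ip falls inside any allowed address or CIDR range."""
    client = ipaddress.ip_address(client_ip)
    return any(
        client in ipaddress.ip_network(rule, strict=False)
        for rule in allowed_rules
    )


# Hypothetical addresses, chosen only to mirror the 138 vs 100 typo.
client_ip = "203.0.138.25"
mistyped_rules = ["203.0.100.25"]
correct_rules = ["203.0.138.25"]

print(is_client_allowed(client_ip, mistyped_rules))  # False
print(is_client_allowed(client_ip, correct_rules))   # True
```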

Role Based Security

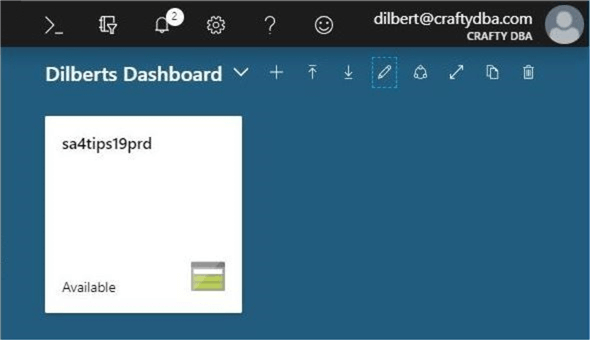

The Azure Data Lake Service is tightly integrated with Azure Active Directory. I have another account named [email protected]. Of course, this account is dedicated to the Scott Adams comic strip. This account has been given reader rights to the subscription. The image below shows the storage account added to the dashboard with this account logged in.

What access do you think the account has to any of the storage services? I changed the Azure account associated with the storage explorer. As you can see below, we do not have access to any of the storage services.

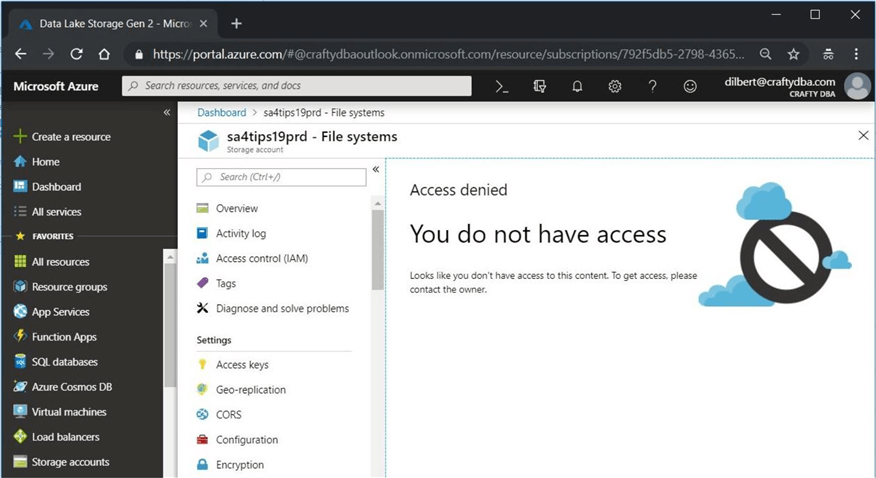

We can see that the sa4tips19prd storage account has the ability to create ADLS Gen 2 file systems. If we double click the services icon, we can see that we do not have access to this section of the portal.

The principle of least privilege is an important concept when assigning security to users. It states that a user should be given only the rights needed to do the task at hand. The reader role for the subscription is the lowest level of security for a service account. However, we can't access the Data Lake Storage using this access role.

New Built-In Roles

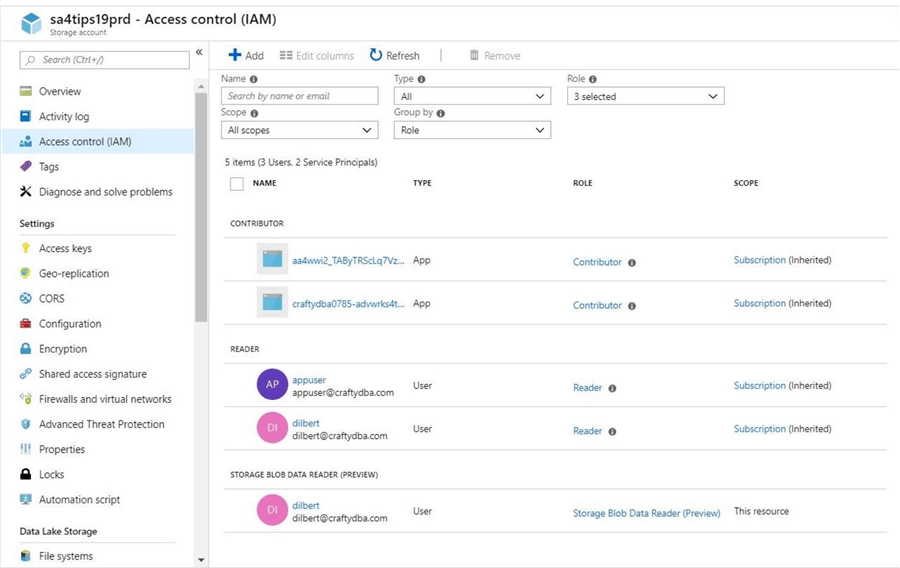

There are three new security roles for Azure Data Lake Storage Gen 2. The following roles in the table below can be assigned at the subscription or storage level. I suggest assignment at the storage level to keep in line with the principle of least privilege.

| Role | Description |
|---|---|
| Storage Blob Data Reader | Read access to containers and data. |
| Storage Blob Data Contributor | Read, write and delete access to containers and data. |
| Storage Blob Data Owner | Full access to containers and data, including assigning POSIX access control. |
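The role descriptions in the table can be written down as a simple lookup. This is a deliberately simplified model: the action names (`read`, `write`, `delete`, `set_acl`) are made up for illustration, and the sketch ignores the access control list layer covered later in this tip.

```python
# Simplified model of the table above; action names are hypothetical.
ROLE_ACTIONS = {
    "Storage Blob Data Reader": {"read"},
    "Storage Blob Data Contributor": {"read", "write", "delete"},
    "Storage Blob Data Owner": {"read", "write", "delete", "set_acl"},
}


def role_allows(role: str, action: str) -> bool:
    """Return True if the role's description in the table covers the action."""
    return action in ROLE_ACTIONS.get(role, set())


print(role_allows("Storage Blob Data Reader", "write"))         # False
print(role_allows("Storage Blob Data Contributor", "delete"))   # True
print(role_allows("Storage Blob Data Contributor", "set_acl"))  # False
```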

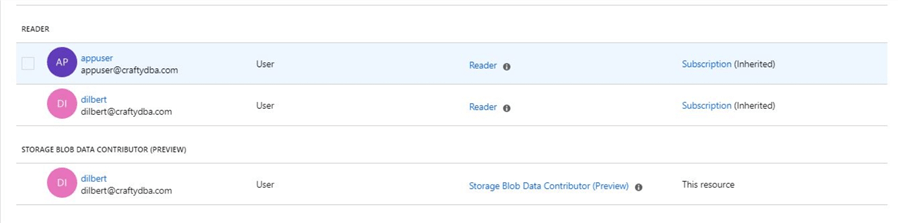

The access control blade in the storage account allows for the assignment of the Reader role. The image below shows the Dilbert account belonging to the built-in role.

A refresh of the Azure Storage Explorer file list window will now show the contents of the adls2x4tips19 file system. If we truly have read access, both the new folder and upload file actions should fail.

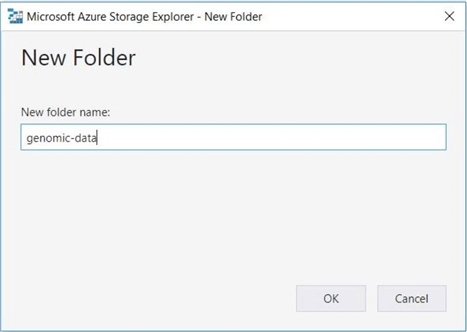

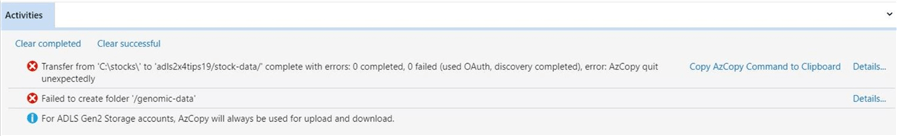

The above image shows the addition of a new folder named genomic-data to the root directory of the file system.

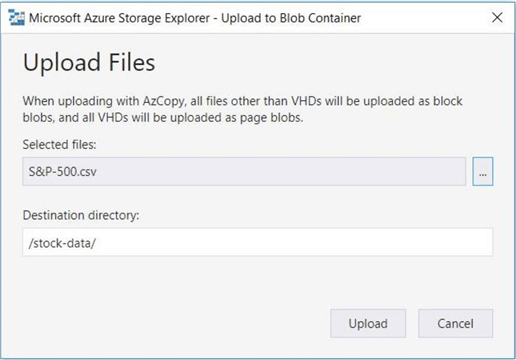

The above image shows the addition of a new text file named S&P-500.csv to the stock-data directory.

Of course, both of these actions fail due to security. This is a good test of the Reader role.

Common RBAC Assignment

Unless we are working with static files in the data lake, the most common role that will be assigned is the Contributor role. The Owner role should be given out sparingly since any user with that membership can change the access control lists of the file system.

The above image shows the user named Dilbert has been added to the Contributor role. If we try to create a folder or upload a new file to the data lake, these actions will fail.

Why are these actions failing?

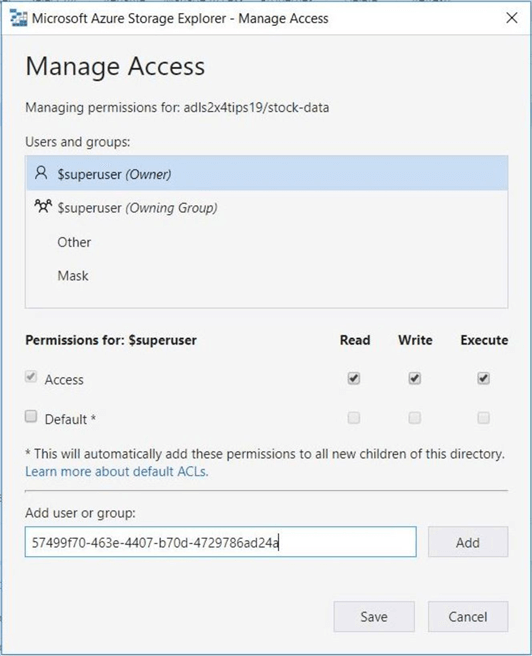

Unless you are part of the owner group, access rights have to be given out manually. To add a user, you have to supply the UPN of the account or the object id from Azure Active Directory. The hyperlinks in the previous image bring up the profiles of the Active Directory accounts. Grab the object id for the user named Dilbert.

The above image shows the entry of an object id which will be granted read (R), write (W) and execute (X) rights. I totally understand the need for the first two rights for uploading and downloading files to the data lake.

Can anyone tell me how an execute right relates to a file system action?

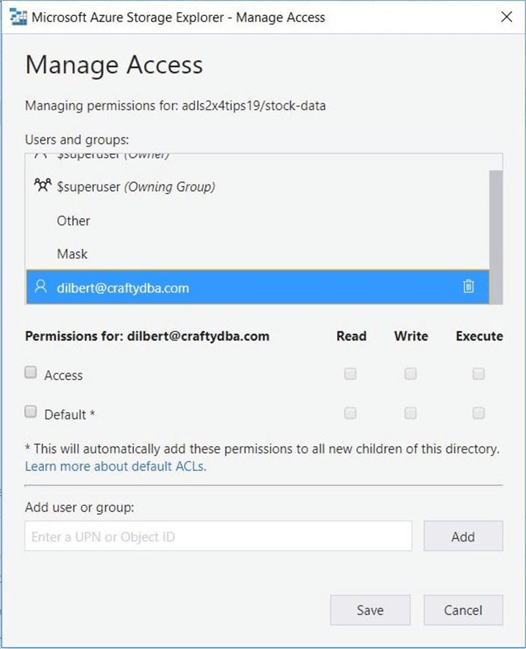

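I believe this right was kept for compliance with the POSIX standard. On a folder, execute is the right that allows a user to traverse into its child items; on a file, it has no meaning for storage. The image below shows the lookup of the object id into a UPN by the storage explorer.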
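POSIX-style ACL entries are commonly written in a short text form, `user:<object id>:rwx`, with a dash standing in for any right that is not granted. The sketch below builds such an entry; the helper name is an assumption and the all-zero GUID is a placeholder, not Dilbert's real object id.

```python
import uuid


def acl_entry(object_id: str, read: bool, write: bool, execute: bool) -> str:
    """Build a short-form ACL entry such as 'user:<object id>:rwx'."""
    uuid.UUID(object_id)  # raises ValueError if the value cannot be parsed as a GUID
    perms = (
        ("r" if read else "-")
        + ("w" if write else "-")
        + ("x" if execute else "-")
    )
    return f"user:{object_id}:{perms}"


# Placeholder object id; substitute the value copied from Azure Active Directory.
placeholder_id = "00000000-0000-0000-0000-000000000000"
print(acl_entry(placeholder_id, read=True, write=True, execute=True))
# user:00000000-0000-0000-0000-000000000000:rwx
```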

Now that we have assigned rights to the Dilbert user, we can test this access using the storage explorer.

Testing ACL assignment

When designing file system security, it is important to test out the access using a sample account from each type of user. The Reader role gives only read access and the Owner role gives full access. These are the two ends of the spectrum. However, most accounts will be given the Contributor role. This role allows for the management of folders and files given the right access.

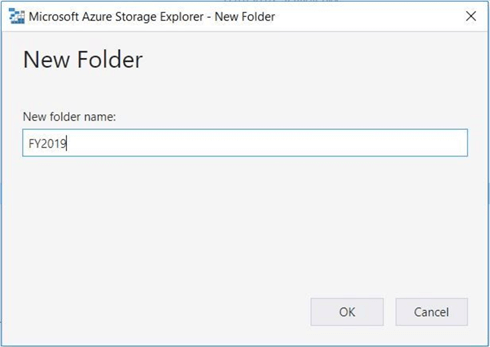

Continuing with the Dilbert account that has 111 or RWX access, we will investigate what actions are possible when adding the WRITE access.
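The 111 notation is simply the RWX string written as one bit per right. As a small illustration under that reading, the hypothetical helpers below convert a three-character permission string into its bit pattern and the matching octal digit.

```python
def to_bits(perms: str) -> str:
    """Convert a permission string such as 'rwx' or 'r-x' to bits ('111', '101')."""
    if len(perms) != 3 or any(
        char not in (flag, "-") for char, flag in zip(perms, "rwx")
    ):
        raise ValueError(f"not a valid permission string: {perms!r}")
    return "".join("0" if char == "-" else "1" for char in perms)


def to_octal(perms: str) -> int:
    """Convert a permission string to its octal digit (0-7)."""
    return int(to_bits(perms), 2)


print(to_bits("rwx"), to_octal("rwx"))  # 111 7
print(to_bits("r-x"), to_octal("r-x"))  # 101 5
print(to_bits("r--"), to_octal("r--"))  # 100 4
```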

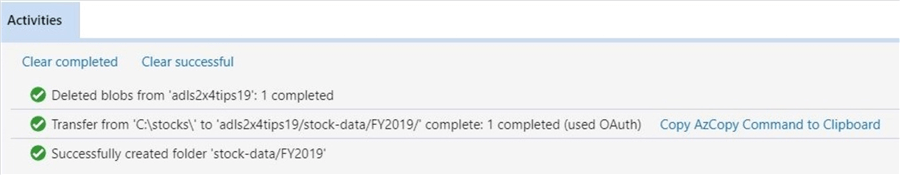

The WRITE access allows for the creation and deletion of folders within the file system. The image below shows the creation of a sub-directory named FY2019 under the stock-data directory.

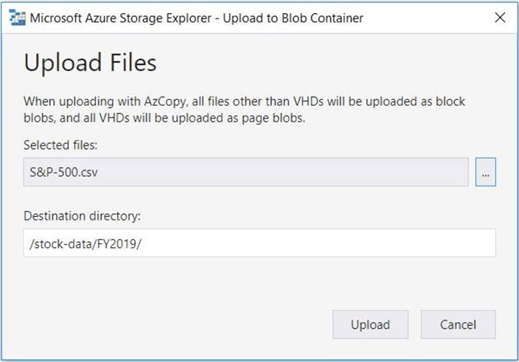

Adding files to the data lake can be performed by accounts that have WRITE access.

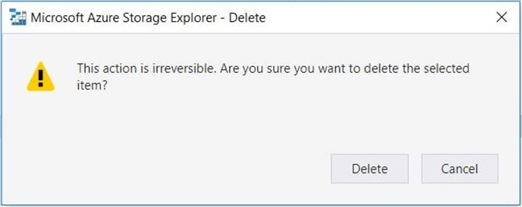

For some reason, I did not buy any Apple stock in 2019. Therefore, I want to remove the stock quotes file named AAPL-FY2019.CSV from the file system.

The activities window shows the successful execution of all these actions. If you are curious about how the Azure Storage Explorer converts upload actions into AzCopy.exe commands, click the link to copy the actions to the clipboard. Paste the information into Notepad++ to review.
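For readers who want a feel for the general shape of such a command before pasting the real one, here is a rough sketch that assembles an `azcopy copy` call as an argument list. It is not the exact text Azure Storage Explorer generates: the helper name is hypothetical, authentication (for example a prior `azcopy login` or a SAS token) is left out, and the command is only printed, never executed.

```python
def azcopy_upload_command(
    source: str, account: str, file_system: str, folder: str
) -> list[str]:
    """Assemble (but do not run) an 'azcopy copy' command for an upload."""
    destination = f"https://{account}.dfs.core.windows.net/{file_system}/{folder}"
    # --recursive copies the contents of sub-directories as well.
    return ["azcopy", "copy", source, destination, "--recursive"]


command = azcopy_upload_command(
    source=r"c:\stocks\outbound\*",  # local folder used earlier in this tip
    account="sa4tips19prd",
    file_system="adls2x4tips19",
    folder="stock-data",
)
print(" ".join(command))
```

Comparing this shape with the command copied from the activities window is a quick way to see which extra options the explorer adds on its own.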

To recap, the Contributor built-in role is the go-to access level for most service accounts. Use the correct ACL rights to create a file system that is secured the way you want.

Summary

Today, companies are starting to build modern data warehouses using Azure Data Lake Storage (ADLS) as the key component. The second generation of the ADLS service is a major design change which leverages cost effective blob storage as the foundation. This service can scale to petabytes of data without worrying about the capacity of a given storage container.

Four levels of security enable the designer to store confidential data in the cloud without worry. First, a firewall can be added at the storage account level to block unwanted visitors. Second, data is encrypted during transit and at rest. Third, role-based access control (RBAC) allows for the assignment of either Reader, Contributor, or Owner rights to a given UPN or Azure Active Directory account. Last but not least, POSIX style access control lists (ACLs) can be used to set fine-grained access at the folder and file level.

The only problem I foresee with the service is the lack of support for existing applications. If you spent time and money creating big data solutions using Azure Data Lake Analytics (ADLA) Gen 1, there is no support for this new storage. In fact, there is no road map for the product at this time. Other nice-to-have items are PowerShell cmdlets using the cross-platform library or an SSIS Azure Feature Pack that supports this new driver.

If you have current Hadoop compatible applications such as Azure Databricks, HDInsight, Data Factory or Azure Data Warehouse, these applications will work with the new storage service. I see the Gen 2 service being a winner since it is 50% less in base storage cost than the older Gen 1 service. If more products are added to the compatibility list in the near future, more adoption will follow.

Please see the current documentation for details and announcements page for recent changes to the Azure services.

Next Steps

- Copying Windows and Linux files to ADLS Gen 2 using AzCopy.exe

- Reading and writing ADLS Gen 2 files from Azure Data Warehouse

About the author

John Miner is a Data Architect at Insight Digital Innovation helping corporations solve their business needs with various data platform solutions.

This author pledges the content of this article is based on professional experience and not AI generated.
