By: John Miner | Comments | Related: > Azure

Problem

The reserved capacity databases allow systems designers to choose the number of virtual cores and memory allocated to a given database instance in Azure. In a previous article, the budget oriented General Purpose tier was investigated. A good percentage of the typical business problems can be addressed with this service tier in Azure.

However, business problems that process a large number of transactions that require fast response times might have issues with this service tier. Today, we are going to investigate the fastest reserved capacity database available in Azure at this time.

How can we deploy a reserved capacity database in Azure for a customer that has a low latency workload?

Solution

Microsoft announced the general availability of reserved capacity databases in August of 2018. The Business Critical tier for Azure SQL database is meant for heavier workloads. This offering is ideal for mission critical applications that have high transaction rates and require low latency response times.

Please note that Azure Database managed instance is a different deployment model that provides native virtual networking integration (V-NET) and higher Transact SQL compatibility. This model is ideal for older applications that require a lot of rework for Azure SQL database. Also, this model supports the Business Critical tier and will be discussed in a future tip.

Business Problem

Our boss has asked us to look into the new virtual core offerings of Azure SQL database. We have several on premises databases with heavy workloads. The code within the databases complies with the ANSI standard of the structure query language (SQL). Therefore, migrating the databases to the cloud does not require managed instance.

It is best to choose a simple problem that can be solved on our local laptop and in the cloud. This will to create a performance comparison between the deployments. This proof of concept will use the math database schema which calculates prime numbers from 1 to N. In our case, we want to know how many primes numbers exist between 1 and 5 million. We want to execute 20 asynchronous jobs to accomplish this task as fast as possible.

The rest of the article will show you how to deploy an Azure SQL database (Business Critical Tier) using the math database schema. Comparing cloud to on premises execution times allows us to gauge how fast the Azure virtualized environment is.

Architectural Overview

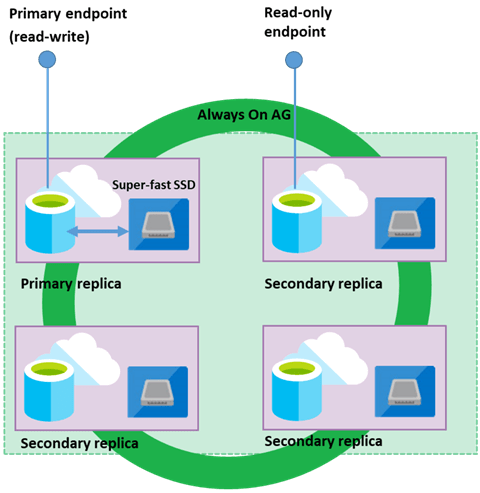

The Premium (DTU) and Business Critical (vCore) service tiers use the same architectural design. The image below was copied from MSDN documentation. This high availability and super fast architecture is designed with the following key components: super-fast local computing resources and always on availability group.

The local computing runs the database (sqlservr.exe) process and contains all files that makeup the database. Data resides either in memory or on super fast SSD disks. I am guessing that Non-Volatile Memory Express (NVMe) solid state drives are used in the hardware topology to produce the incredible IOPS that are seen with this architecture. Please see this article that compares various drive types for performance.

The always on availability groups guarantee the high available of the server. Four separate computing nodes that contain virtual cores, local memory and super fast SSD disk are part of the quorum. One primary node in the group can service both read/write actions and one secondary node can support read-only actions. The other two nodes in the group are to maintain replication and high availability.

One major characteristic of this tier is the ability to support memory optimized tables. These tables are incredibly fast and may reduce normal business processing times by a huge factor. I like using these tables for extract, transform and load (ETL) processing as well as consolidated reporting in the cloud. We will use in memory tables during our performance testing.

In a nutshell, this high speed architecture design has served very well for many years. The only difference between the Premium (DTU) and Business Critical (v-Core) tiers is the dedication of computing resources. Just remember that any shared cloud computing resource may encounter the noisy neighbor issue. Most cloud vendors have eliminated this issue by using intelligence resource management algorithms.

Hardware Generations

There are predominately two hardware generations in use for deploying Azure SQL database with dedicated cores. The information below was obtained from Microsoft online documentation and summarized into tables.

The fourth generation Intel processors are based on the Broadwell architecture. Each database deployed with a GEN4 v-core is not hyper-threaded, has a larger memory to core ratio, uses a non-volatile memory express controller for solid state drives, and does not have network acceleration. However, the total number of cores is capped at 24. Microsoft considers this offering as deprecated since this is an older chip set.

The table below show the characteristics of the GEN4 offering.

| Generation | Feature | Limit |

|---|---|---|

| 4 | CPU | 2-24 |

| 4 | RAM | 7.0 – 159.5 GB |

| 4 | In Memory Tables | 1.0 – 36.0 GB |

| 4 | IOPS | 4,000 – 76,800 |

| 4 | MAX DB SIZE | 4 TB |

The fifth generation Intel processors are based on the Haswell architecture. Each database deployed with a GEN5 v-core is hyper-threaded, has a lower memory to core ratio, uses a non-volatile memory express controller for solid state drives, and has network acceleration. The total number of cores is capped at 80. This is the default v-Core offering in the Azure Portal.

The table below show the characteristics of the GEN5 offering.

| Generation | Feature | Limit |

|---|---|---|

| 5 | CPU | 2-80 |

| 5 | RAM | 10.4 – 415.2 GB |

| 5 | In Memory Tables | 1.57 - 131.64 GB |

| 5 | IOPS | 8,000 – 204,800 |

| 5 | MAX DB SIZE | 4 TB |

Azure Portal Deployment

I am going to assume you are familiar with deploying database from the Azure Portal. Please deploy a new logical server named svr4tips2019 in the East US region. Please store the admin user name and admin password in a safe place. I like using an Azure Key Vault to keep track of such information.

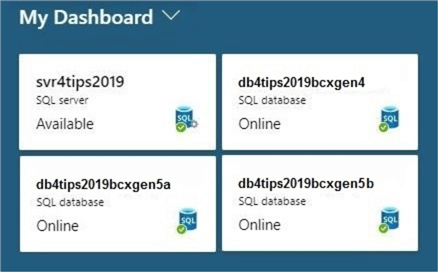

The Azure Portal allows the data platform designer to pin objects to a custom dashboard. I like using this technique since objects such as a virtual machine have many components. Usually, I am only interested in the topmost object. The image below shows the logical database server being added to the default dashboard.

Once a logical server is defined, there are many ways to deploy databases. For now, we will complete the deployment of three databases using the portal.

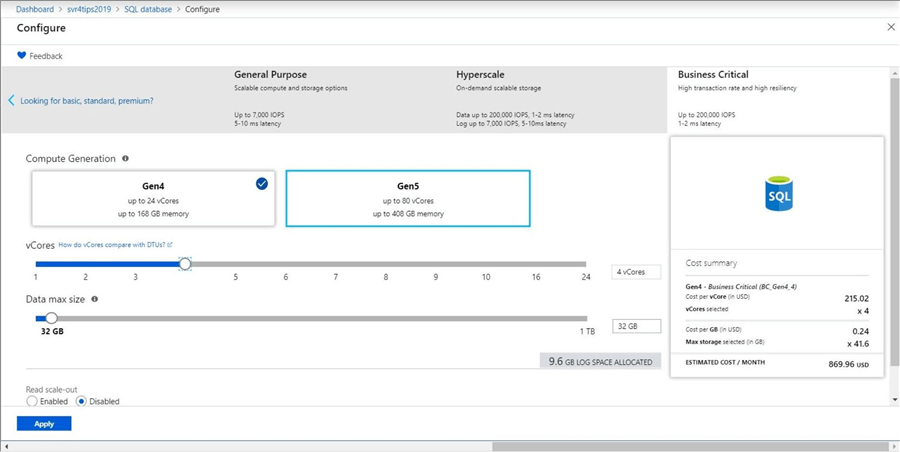

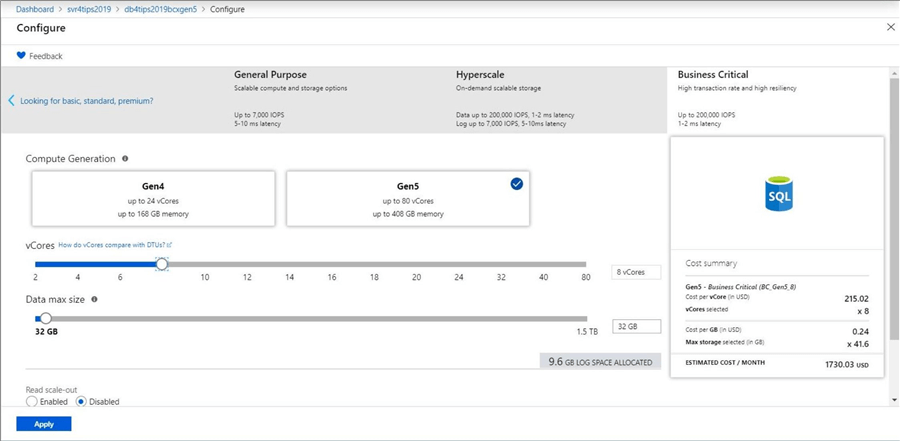

The image below shows the business critical database configuration for an Azure SQL database using a provisioned GEN4 computing tier and four virtual codes. Please complete the deployment for a database with the name of db4tips2019bcxgen4.

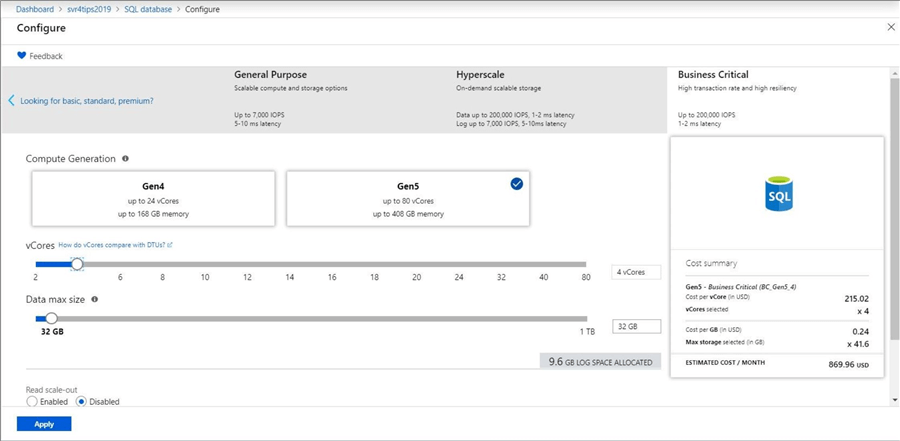

The image below shows the business critical database configuration for an Azure SQL database using a provisioned GEN5 computing tier and four virtual codes. Please complete the deployment for a database with the name of db4tips2019bcxgen5a.

The GEN 4 cores are physical in nature. The GEN5 computing cores are hyper threaded and half as powerful. Therefore, we should deploy an 8 core configuration so that we are comparing like processing power. Please complete the deployment for a database with the name of db4tips2019bcxgen5b.

The image below shows the three databases pinned to the dashboard.

At this point one can create the test MATH database schema and execute the stored procedure. However, I want to explore different ways Azure databases and servers can be deployed. Please right click each database on the dashboard and choose the delete option. In the next section we will review how Transaction SQL can be used to deploy databases.

T-SQL Deployment

I am still using SQL Server Management Studio (SSMS) for DBA tasks. Please make sure you have the latest version of the software installed on your local laptop. The image below shows a typical login using the account I created during deployment of the logical server.

At this point, the object explorer shows a logical server with no databases.

The CREATE DATABASE syntax has been modified with new edition and service objectives. The code below deploys a provisioned generation 4 computing database with 4 cores. Execute this code from the master database. Please modify and execute the code to create two generation 5 databases with 4 and 8 virtual cores respectively.

-- Drop GEN4 dbms DROP DATABASE IF EXISTS [db4tips2019bcxgen4] GO -- Create GEN4 dbms - 4 core CREATE DATABASE [db4tips2019bcxgen4] ( EDITION = 'BusinessCritical', SERVICE_OBJECTIVE = 'BC_GEN4_4' ); GO

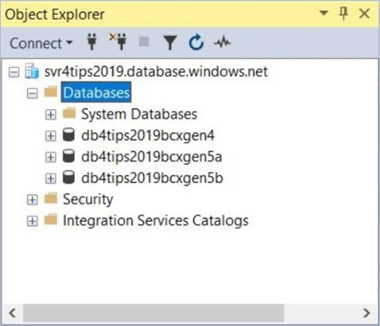

Make sure your refresh the database node in the object explorer. The image below shows all three database types being successfully deployed.

Again, right click each database and choose the drop database action. This same action can be performed by executing a DROP DATABASE T-SQL statement from the master database.

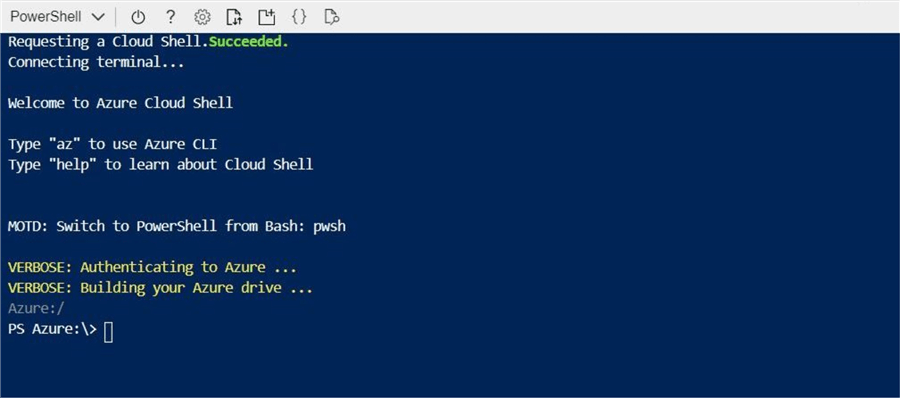

Azure Cloud Shell

The Azure Cloud Shell is a browser-accessible shell for managing Azure resources. I choose to use the PowerShell syntax when executing commands. Please refer to the MSDN documentation to setup the environment now.

The image below shows the new Cloud Shell. By default, the shell is setup to accept bash commands. Use the pwsh command to switch the shell to accept PowerShell commands.

I have decided not to show the output of the commands since it is very long (verbose) in nature. Enclosed is a link to the documentation for the various az sql db commands. The code below creates the three business critical databases that we are interested in.

# List logical database servers az sql server list # Create Business Critical Gen4_4 az sql db create -g rg4tips2019 -s svr4tips2019 -n db4tips2019bcxgen4 --compute-model Provisioned --service-objective BC_Gen4_4 # Create Business Critical Gen5_4 az sql db create -g rg4tips2019 -s svr4tips2019 -n db4tips2019bcxgen5a --compute-model Provisioned --service-objective BC_Gen5_4 # Create Business Critical Gen5_8 az sql db create -g rg4tips2019 -s svr4tips2019 -n db4tips2019bcxgen5b --compute-model Provisioned --service-objective BC_Gen5_8

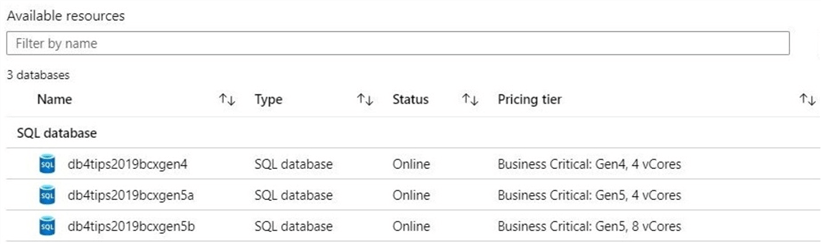

The Azure Portal can be used to validate the databases deployed via code. Please see image below taken from the overview page of the logical SQL Server.

Database Performance

I will not go over the details on how to calculate the prime numbers from 1 to 5 million using 20 different batch command shells running T-SQL in parallel. Please see my prior article that has the details. This test (stored procedure) is a computationally heavy operation. We want to create a database schema and execute the tests for the various database configurations shown in the images below. All tests are using four virtual cores for computing power.

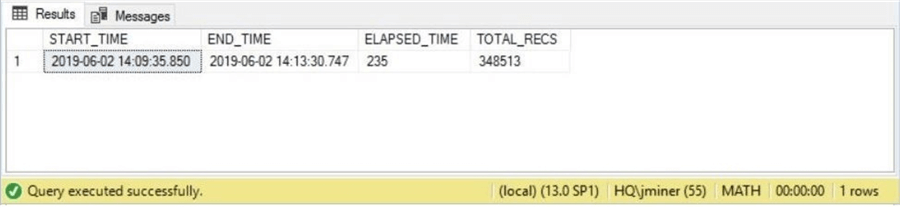

The first image shows the local execution of the test on a laptop running Windows 10 as an operating system and SQL Server 2016 as the database engine.

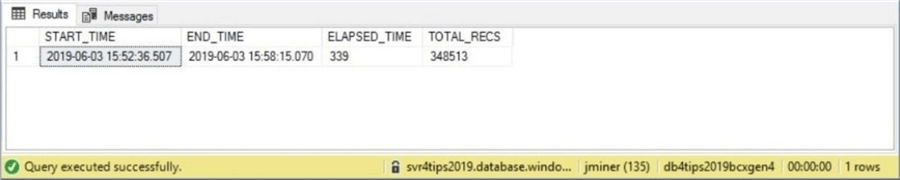

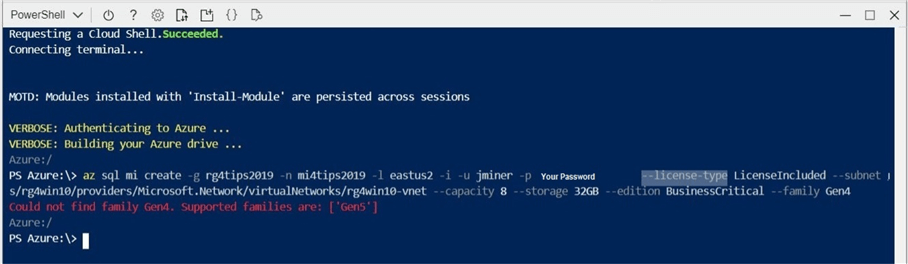

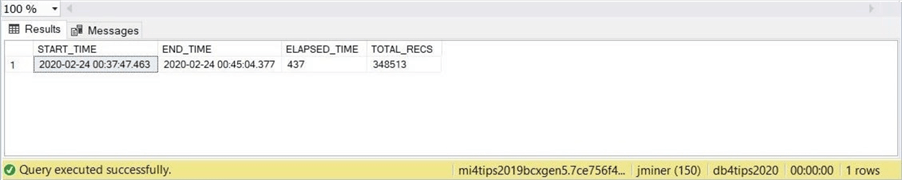

The second image shows the results of the test using a provisioned Azure SQL database using the GEN4 computing.

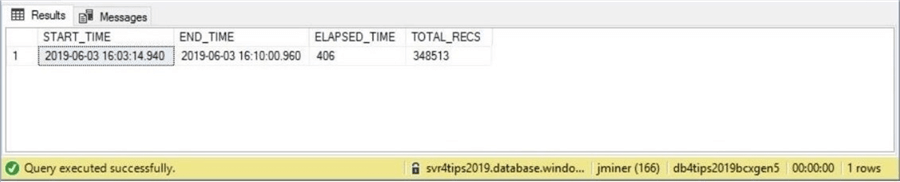

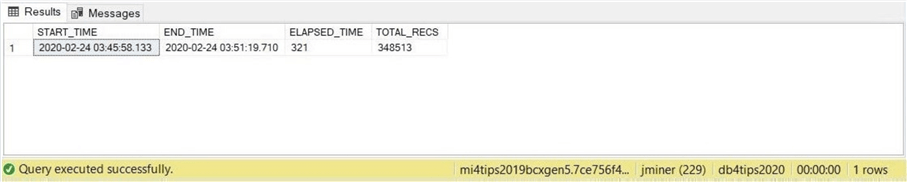

The third image shows the results of the test using a provisioned Azure SQL database using the GEN5 computing.

The fourth image shows the results of the test using a serverless Azure SQL database using the GEN5 computing with 8 cores.

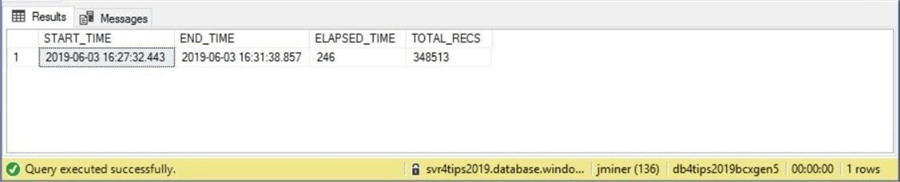

The fifth test should depict the execution times of an Azure SQL Managed Instance using the GEN4 computing. However, the option for selecting GEN4 computing was recently removed from the Azure Portal. As another alternative deployment option, I tried creating the service using Azure Command Line Interface (CLI). See the image below. This deployment also failed. After a quick search of the internet, I found this announcement on MSDN which pin points the problem. The GEN4 hardware will be officially retired in January 2023. Existing deployments remain unaffected until then. However, new deployments must use GEN5 hardware.

The sixth image shows the results of the test using a provisioned Azure SQL managed instance using the GEN5 computing.

The seventh image shows the results of the test using a provisioned Azure SQL managed instance using the GEN5 computing with 8 virtual cores.

The table below shows a summary of all the testing that was performed. I ended up tossing out any results for GEN 4 hardware since it is no longer available.

| Test No | Description | Configuration | Compute | Cores | Time |

|---|---|---|---|---|---|

| 1 | Local Laptop | WIN 10 / SQL DB 16 | GEN6 | 4 | 235 |

| 2 | Provisioned Business Critical | AZURE SQL DB | GEN5 | 4 | 406 |

| 3 | Provisioned Business Critical | AZURE SQL DB | GEN5 | 8 | 246 |

| 4 | Provisioned Business Critical | AZURE SQL MI | GEN5 | 4 | 437 |

| 5 | Provisioned Business Critical | AZURE SQL MI | GEN5 | 8 | 321 |

A few insights can be interpreted from the above table. First, the older generation of hardware can no longer be used for new deployments. Second, the prime numbers problem is computationally heavy. Thus, the same database deployment with more virtual cores performs better. As usual, the database deployment to physical hardware has the best execution times.

One might wonder if there is a way to have Azure SQL Database obtain execution timings that are as good as physical hardware?

Memory Optimized Tables

Storage devices can be ranked in order of speed. At the top of the list is the main memory that is accessible by the central processing unit (CPU). Therefore, memory optimized tables usually perform better than normal tables. These tables are only available in Azure SQL databases that are either Premium or Business Critical tier. The technology is blinding fast when natively compiled stored procedures are created. I do not have time to fully explain this topic in this article. However, I will enclose the scripts that were used in testing and you can research MSDN for more information on memory optimized tables.

The first set of tests were performed on in memory tables without native compiled stored procedures. Durable in memory tables use filestream technology to write to disk. The table below shows the execution times being worst for databases in the cloud. See previous table for timings. There is a slight improvement on physical hardware. Please use this script to run your own tests.

| Test No | Description | Configuration | Compute | Cores | Time |

|---|---|---|---|---|---|

| 6 | Local Laptop | WIN 10 / SQL DB 16 | GEN6 | 4 | 206 |

| 7 | Provisioned Business Critical | AZURE SQL DB | GEN5 | 4 | 462 |

| 8 | Provisioned Business Critical | AZURE SQL DB | GEN5 | 8 | 209 |

| 9 | Provisioned Business Critical | AZURE SQL MI | GEN5 | 4 | 449 |

| 10 | Provisioned Business Critical | AZURE SQL MI | GEN5 | 8 | 331 |

The second set of tests were performed on in memory tables without native compiled stored procedures. The table below shows all execution times being less than 10 seconds. This is why the in memory technology boasts some amazing speeds for certain work loads. Please use this script to run your own tests.

| Test No | Description | Configuration | Compute | Cores | Time |

|---|---|---|---|---|---|

| 11 | Local Laptop | WIN 10 / SQL DB 16 | GEN6 | 4 | 9 |

| 12 | Provisioned Business Critical | AZURE SQL DB | GEN5 | 4 | 8 |

| 13 | Provisioned Business Critical | AZURE SQL DB | GEN5 | 8 | 5 |

| 14 | Provisioned Business Critical | AZURE SQL MI | GEN5 | 4 | 8 |

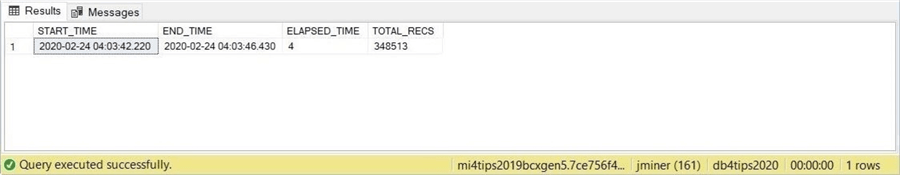

| 15 | Provisioned Business Critical | AZURE SQL MI | GEN5 | 8 | 4 |

The main objective was to create an environment in Azure that has the same horsepower as on premise and have better executions times. Tests eleven, twelve and fourteen all have the same number of cores. The Azure environment slightly outperformed the on-premise environment by 1 second. The image below shows the best execution time which was achieved with twice the number of cores on a managed instance platform. It took only 4 seconds to calculate the prime numbers between 1 and 5 million.

To recap, the Premium and Business Critical offerings allow developers to take advantage of in memory tables. Usually, twice the table size is required in memory for this technology to succeed. The amount of RAM available for memory optimized tables ranges between 1.5 and 131.6 GB and depends on the number of virtual cores you deploy. Of course, adding more cores to the database equates to a larger monthly spend.

Price vs Performance

Many projects sponsored by the business line are on a tight budget. Therefore, presenting different options to the business line allows for an informed decision. We will be curious about how price correlates to performance.

It has been decided that a 20 to 24 core machine is needed for our business work load. We want to have the ability to store up to 4 TB of data. The DS15 virtual machine has twenty core and 160 GB of memory. The Azure SQL database and Azure SQL Managed Instance offerings come with 24 cores, 124 GB of memory and 25 GB for memory optimized tables.

The chart below shows some sample prices pulled from the Azure pricing calculator.

| No | Description | Configuration | Compute | Cost |

|---|---|---|---|---|

| 1 | Virtual Machine | DS15 - SQL DB 19 | GEN6 | $7,413 |

| 2 | Provisioned Business Critical | AZURE SQL DB | GEN5 | $11,912 |

| 3 | Provisioned Business Critical | AZURE SQL MI | GEN5 | $11,904 |

There is a handful of dollars difference between the two Platform as A Service (PaaS) offerings. The cost quoted above is for pay-as-you-go with out any discounts. There are pricing discounts for Azure Hybrid Benefit (AHB) and Reserved Instance (RI). Consult your local account representative for details.

We did not performance any testing with Infrastructure as a Service (IaaS) represented by the virtual machine. Also, the price of the offering is almost $4500 less than the rest. We should look at this offering in the future. However, it does not come with high availability and automated backups. That is something that we will have to manually setup.

It would be wise to point out the fact that Azure SQL Database performed better than the rest with our current workload using regular tables. The Azure services had similar timings when memory optimized tables with native code was used. I would repeat the workload test a couple more times to make sure that consistent results are achieved. If the numbers do not change, then the client should choose Azure SQL database as the target platform.

Summary

The Azure Cloud is a complex ecosystem that is continuously changing. One service such as Azure SQL database might have only three tiers – (basic, standard and premium) – in the recent past. Today, there are a variety of offerings that a data architect can choose. The use of researching the characteristics of the offerings and comparing performance baselines is key in selecting the correct object to deploy.

Just recently, Microsoft stopped the creation of new databases using the GEN 4 hardware. All current services using GEN 4 hardware will be retired in 2023. Please plan accordingly.

The Business Critical tier is ideal for heavy workloads that process a large number of transactions that require fast response times. This offering is more expensive and can scale up to 80 cores. Even though I have not written yet about Managed Instance, this offering was included in the performance testing.

Both the Premium and Business Critical offerings allow for the creation of memory optimized tables. We learned that regular stored procedures with this table type achieve normal processing times. On the other hand, natively compiled stored procedures that use memory optimized tables were almost 100x faster. I suggest considering this combination, if possible, when designing new systems.

In review, there are only a few choices that one can make when choosing dedicated computing capacity. This includes the General Purpose, Business Critical, and Hyper Scale tiers. Today, it was surprising to see that Azure SQL Database achieved better execution times for normal tables than Managed Instance. I would re-check my testing before coming to any conclusions. However, both platforms were able to compete with physical hardware when memory optimized tables and natively compiled code were used. In short, a single virtual machine cost less money than a service but sacrifices high availability and automatic backups. If you decide to manually create 4 node cluster using virtual machines, you can see that the Platform as a Service offerings are truly least expensive.

Next time, I will be talking about scaling our database size over 8 TB with the Hyper Scale tier. This new service tier allows databases to grow up to 100 TB in size.

Next Steps

- Going hyperscale with Azure SQL Database

- Why choose Managed Instance over other SQL offerings?

- Deploying Azure SQL Databases using the new Az Module

- Executing SSIS packages with Azure SQL Database

About the author

John Miner is a Data Architect at Insight Digital Innovation helping corporations solve their business needs with various data platform solutions.

John Miner is a Data Architect at Insight Digital Innovation helping corporations solve their business needs with various data platform solutions.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips