By: Ron L'Esteve

Problem

There are a variety of considerations to account for while designing and architecting an Azure Data Lake Storage Gen2 account. Some of these considerations include security, zones, folder/file structures, data lake layers and more. What are some of the best practices for getting started with designing an Azure Data Lake Storage Gen2 Account?

Solution

This article will explore the various considerations to account for while designing an Azure Data Lake Storage Gen2 account. Topics that will be covered include 1) the various data lake layers along with some of their properties, 2) design considerations for zones, directories/files, and 3) security options and considerations at the various levels.

Data Lake Layers

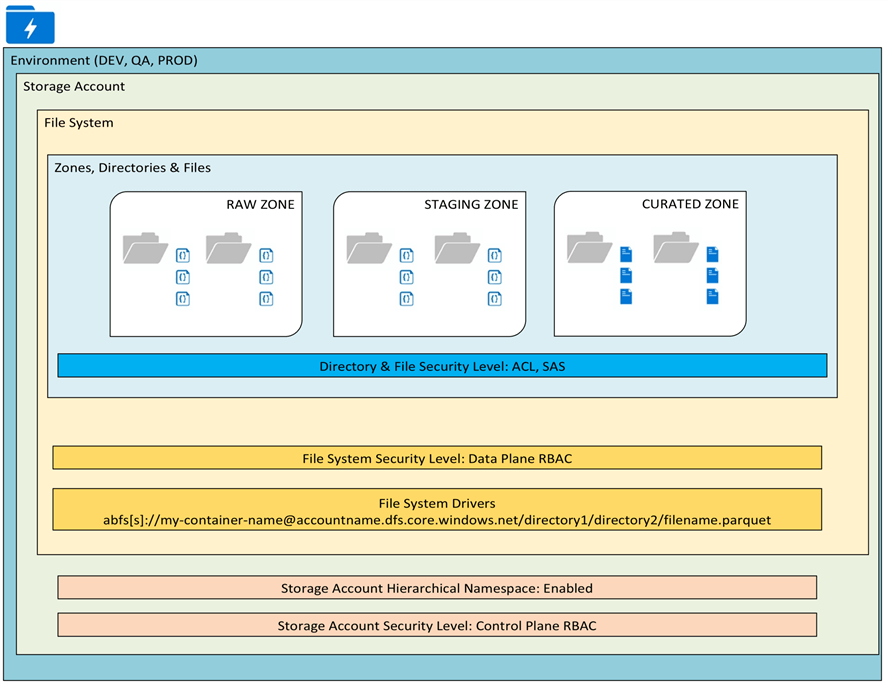

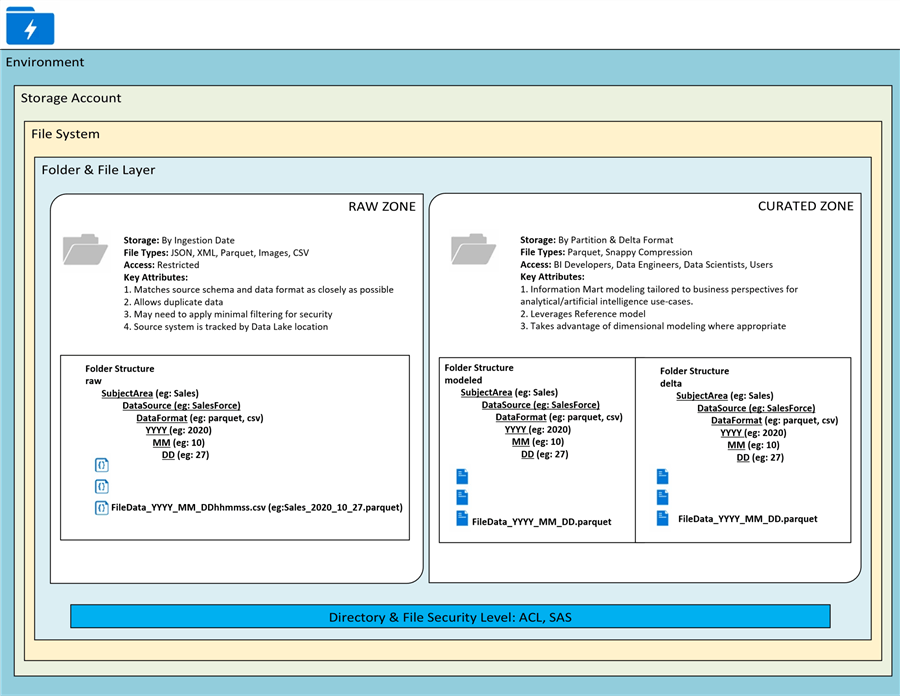

The following illustration outlines the various layers in a data lake which include the environments, storage accounts, file systems, zones, directories, and files. The subsequent sections will discuss these layers in greater detail.

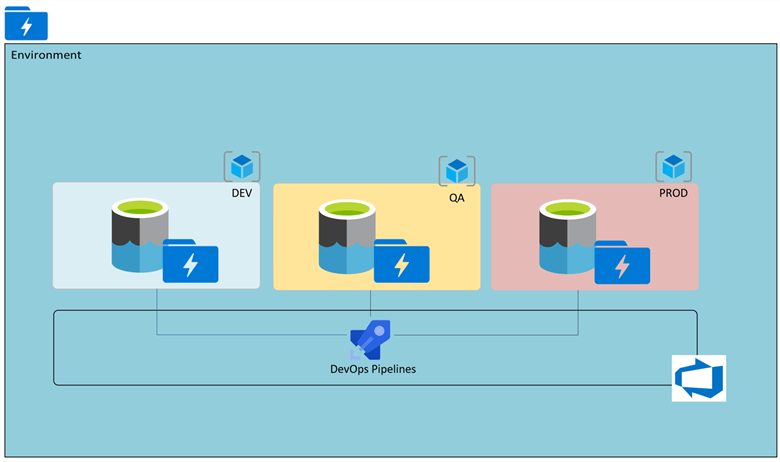

Environment

The environments define the top-level layer that needs to be accounted for when designing a data lake. For example, if DEV, QA, and PROD environments are needed, then each environment must include one or more ADLS Gen2 storage accounts. Deploying these accounts to each environment could be orchestrated by Azure DevOps pipelines.

Storage Account

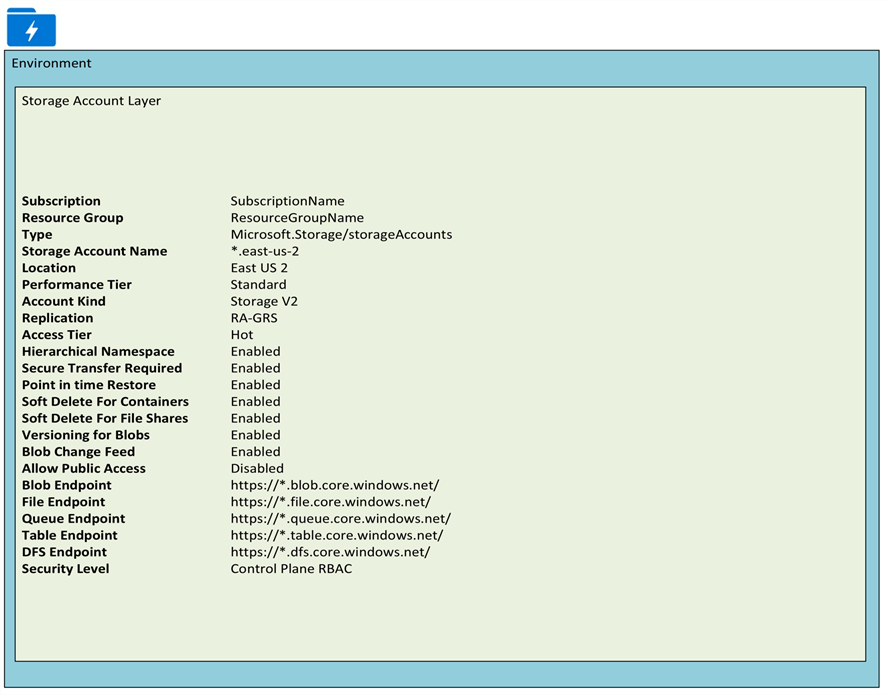

There are several properties that need to be configured when creating an Azure Data Lake Storage account. The following sections cover these account level properties in detail. Additionally, when designing a storage account, it is critical to consider the limits and capacity of the storage account to determine whether multiple storage accounts are needed. More details on Storage limits can be found here. Security at the Storage account level is defined by Control Plane RBAC, which is covered in more detail in the Security section.

Account Level Properties

Below are the various account level properties that are configurable at the Storage account level.

Performance Tier: Standard storage accounts are backed by magnetic drives and provide the lowest cost per GB. They're best for applications that require bulk storage or where data is accessed infrequently. Premium storage accounts are backed by solid state drives and offer consistent, low-latency performance. They can only be used with Azure virtual machine disks and are best for I/O-intensive applications, like databases. Additionally, virtual machines that use Premium storage for all disks qualify for a 99.9% SLA, even when running outside of an availability set. This setting can't be changed after the storage account is created.

Account Kind: General purpose storage accounts provide storage for blobs, files, tables, and queues in a unified account. Blob storage accounts are specialized for storing blob data and support choosing an access tier, which allows you to specify how frequently data in the account is accessed. Choose an access tier that matches your storage needs and optimizes costs.

Replication: The data in your Azure storage account is always replicated to ensure durability and high availability. Choose a replication strategy that matches your durability requirements. Some settings can't be changed after the storage account is created.

Point in time Restore: Use point-in-time restore to restore one or more containers to an earlier state. If point-in-time restore is enabled, then versioning, change feed, and blob soft delete must also be enabled.

Soft Delete for Containers: Soft delete enables you to recover containers that were previously marked for deletion.

Soft Delete for File Shares: Soft delete enables you to recover file shares that were previously marked for deletion.

Versioning for Blobs: Use versioning to automatically maintain previous versions of your blobs for recovery and restoration.

Blob Change Feed: Keep track of create, modification, and delete changes to blobs in your account.

Connectivity Method: You can connect to your storage account either publicly, via public IP addresses or service endpoints, or privately, using a private endpoint.

Routing Preferences: Microsoft network routing will direct your traffic to enter the Microsoft cloud as quickly as possible from its source. Internet routing will direct your traffic to enter the Microsoft cloud closer to the Azure endpoint.

Secure transfer Required: The secure transfer option enhances the security of your storage account by only allowing requests to the storage account over a secure connection. For example, when calling REST APIs to access your storage accounts, you must connect using HTTPS. Any requests using HTTP will be rejected when 'secure transfer required' is enabled. When you are using the Azure file service, connections without encryption will fail, including scenarios using SMB 2.1, SMB 3.0 without encryption, and some flavors of the Linux SMB client. Because Azure storage doesn’t support HTTPS for custom domain names, this option is not applied when using a custom domain name.

Allow Blob Public Access: When blob public access is allowed, container ACLs can be configured to permit anonymous access to blobs within the storage account. When it is disallowed, no anonymous access to blobs within the storage account is permitted, regardless of the underlying container ACL configurations.

Hierarchical Namespace: The ADLS Gen2 hierarchical namespace accelerates big data analytics workloads and enables file-level access control lists (ACLs).
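The account level properties above correspond to settings in an Azure Resource Manager (ARM) template. An ARM template is one way to automate the per-environment deployment mentioned earlier. The following Python sketch builds such resource definitions as plain dictionaries. The account names, region, SKU and `apiVersion` are assumptions. Settings such as point-in-time restore, soft delete, versioning and change feed are omitted, because they are configured on the account's blob service rather than on the account resource itself.

```python
import json


def adls_account_resource(name: str, location: str, sku: str = "Standard_ZRS") -> dict:
    """Return a hypothetical ARM resource definition for one ADLS Gen2 account."""
    return {
        "type": "Microsoft.Storage/storageAccounts",
        "apiVersion": "2023-01-01",  # assumed API version; check for the current one
        "name": name,
        "location": location,
        "kind": "StorageV2",  # general purpose v2 account kind
        "sku": {"name": sku},  # performance tier and replication, e.g. Standard_LRS
        "properties": {
            "isHnsEnabled": True,  # hierarchical namespace, which makes it ADLS Gen2
            "supportsHttpsTrafficOnly": True,  # secure transfer required
            "minimumTlsVersion": "TLS1_2",
            "allowBlobPublicAccess": False,  # no anonymous blob access
            "accessTier": "Hot",
            "routingPreference": {"routingChoice": "MicrosoftRouting"},
            # Deny by default; pair with IP/VNet rules or a private endpoint.
            "networkAcls": {"defaultAction": "Deny", "bypass": "AzureServices"},
        },
    }


# One account per environment. Names are hypothetical: 3-24 lowercase
# letters and digits, and globally unique in Azure.
resources = [
    adls_account_resource(f"contosolake{env}", "eastus2") for env in ("dev", "qa", "prod")
]
print(json.dumps(resources[0], indent=2))
```

The resulting JSON could be placed in the `resources` array of an ARM template and deployed from an Azure DevOps pipeline. Verify each property name against the current Microsoft.Storage template reference before relying on it.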

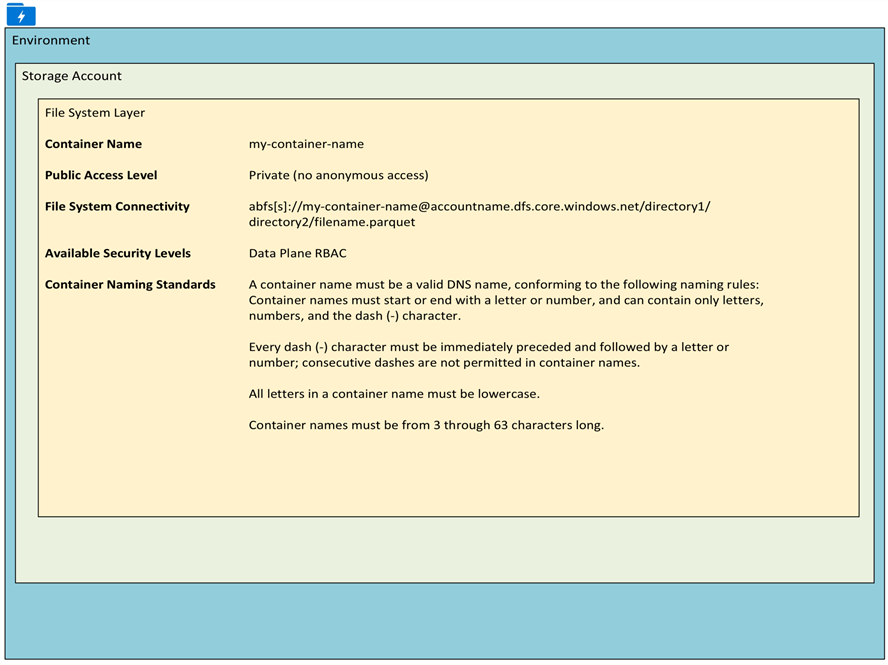

File System

The file system, also known as a container, holds the hierarchical directories and files for logs and data. The following section lists the container level properties that can be configured. The Data Plane RBAC security level will be discussed in detail in the Security section.

Container Properties

The following container properties can be configured at the container level.

Public Access Level: Specifies whether data in the container may be accessed publicly. By default, container data is private to the account owner. Use 'Blob' to allow public read access for blobs. Use 'Container' to allow public read and list access to the entire container.

Immutable Policies: Immutable storage provides the capability to store data in a write once, read many (WORM) state. Once data is written, the data becomes non-erasable and non-modifiable, and you can set a retention period so that files can't be deleted until after that period has elapsed. Additionally, legal holds can be placed on data to make that data non-erasable and non-modifiable until the hold is removed.

Stored Access Policies: Establishing a stored access policy serves to group shared access signatures and to provide additional restrictions for signatures that are bound by the policy. You can use a stored access policy to change the start time, expiry time, or permissions for a signature, or to revoke it after it has been issued.

Zones, Directories & Files

At the folder and file layer, storage account containers define zones, directories, and files, as shown in the illustration below. The security levels at the directory and file layer are ACLs and SAS, which will be covered in the Security section.

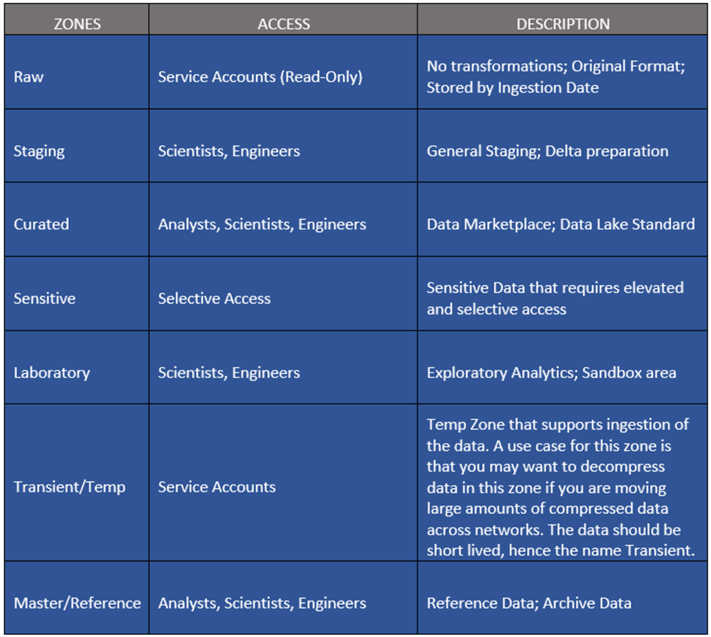

Zones

Zones define the root level folder hierarchies in a data lake container. Zones can be defined by multiple containers in a storage account or multiple folders in a container. The following sample zones describe their purpose and typical user base.

Zones do not always need to reside in the same physical data lake. They could also reside in separate file systems or different storage accounts, even in different subscriptions. If a single zone is expected to exceed a request rate of 20,000 requests per second, then multiple storage accounts, possibly in different subscriptions, may be a good idea.
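The Python sketch below gives a rough illustration of that sizing decision by estimating how many storage accounts a single zone might need for an expected request rate. The 20 percent headroom is an assumed safety margin, not a documented figure. The per-account limit itself should be checked against the current scalability targets.

```python
import math

# Per-account request rate cited in this article; confirm against the current
# Azure Storage scalability targets for your region and account type.
MAX_REQUESTS_PER_SECOND = 20_000


def accounts_needed(expected_rps: int, headroom_pct: int = 20) -> int:
    """Estimate storage accounts for one zone, reserving headroom_pct of each account's limit."""
    usable_rps = MAX_REQUESTS_PER_SECOND * (100 - headroom_pct) // 100
    return max(1, math.ceil(expected_rps / usable_rps))


print(accounts_needed(50_000))  # 50,000 / 16,000 usable -> 4
```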

Directories (Folders)

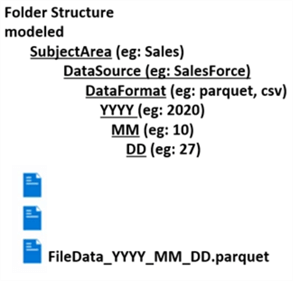

When designing a data lake folder structure, the following hierarchy is recommended for optimized analytical querying.

Each source system will be granted write permissions at the DataSource folder level with default ACL specified. This will ensure permissions are inherited as new daily folders and files are created.

This hierarchy can be dynamically parameterized and coded into an ETL solution within either Databricks or Data Factory to automatically create the folders and files based on the defined structure.

\Raw\DataSource\Entity\YYYY\MM\DD\File.extension
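The hypothetical Python helper below is a minimal sketch of that parameterization. It builds the folder path for one load from its zone, source, entity and load date. It joins with forward slashes because ADLS Gen2 paths use `/` as the separator; the backslashes above are only notation. The source and entity names in the example are made up.

```python
from datetime import date


def lake_path(zone: str, source: str, entity: str, load_date: date, file_name: str) -> str:
    """Return Zone/DataSource/Entity/YYYY/MM/DD/File.extension for one load."""
    parts = [
        zone,
        source,
        entity,
        f"{load_date:%Y}",  # four-digit year folder
        f"{load_date:%m}",  # zero-padded month folder
        f"{load_date:%d}",  # zero-padded day folder
        file_name,
    ]
    return "/".join(parts)


print(lake_path("Raw", "SalesDB", "Customer", date(2021, 3, 7), "Customer.parquet"))
# Raw/SalesDB/Customer/2021/03/07/Customer.parquet
```

Zero-padding the month and day keeps the folders sorted lexically. It also keeps partition paths predictable for query engines that prune by folder.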

Sensitive sub-zones in the raw layer can be separated by top level folder. This will allow one to define a separate lifecycle management policy using rules based on prefix matching.

\Raw\General\DataSource\Entity\YYYY\MM\DD\File.extension

\Raw\Sensitive\DataSource\Entity\YYYY\MM\DD\File.extension
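The following Python sketch shows how a lifecycle management policy might target these two sub-zones, with one rule per prefix. It assumes the zones are folders in a hypothetical container named `datalake`, since `prefixMatch` values begin with the container name. The tiering and deletion day counts are illustrative, not recommendations.

```python
import json

CONTAINER = "datalake"  # hypothetical container that holds the Raw zone


def tiering_rule(name: str, prefix: str, cool_after: int, delete_after: int) -> dict:
    """Build one lifecycle rule that tiers, then deletes, block blobs under a prefix."""
    return {
        "enabled": True,
        "name": name,
        "type": "Lifecycle",
        "definition": {
            "filters": {
                "blobTypes": ["blockBlob"],
                "prefixMatch": [f"{CONTAINER}/{prefix}"],  # container name + folder prefix
            },
            "actions": {
                "baseBlob": {
                    "tierToCool": {"daysAfterModificationGreaterThan": cool_after},
                    "delete": {"daysAfterModificationGreaterThan": delete_after},
                }
            },
        },
    }


policy = {
    "rules": [
        tiering_rule("rawGeneral", "Raw/General/", cool_after=30, delete_after=365),
        tiering_rule("rawSensitive", "Raw/Sensitive/", cool_after=7, delete_after=90),
    ]
}
print(json.dumps(policy, indent=2))
```

Keeping General and Sensitive as sibling top-level folders is what makes this possible. Each rule can match a single prefix without touching the other sub-zone.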

Files

Azure Data Lake Storage Gen2 performs better with larger files, of around 65 MB to 1 GB per file, for Spark-based processing. Azure Data Factory compaction jobs can help achieve this. Additionally, the OPTIMIZE or AUTO OPTIMIZE features of the Databricks Delta format can help with this compaction. With Event Hubs, the Capture feature can be used to persist the data based on size or time triggers.
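Compaction can be sketched as a simple grouping problem: combine many small files into batches near a target size. The Python below uses a next-fit greedy pass and an assumed 256 MB target inside the range above. It only plans the batches. The actual rewrite would be done by a tool such as Data Factory or Delta `OPTIMIZE`.

```python
MB = 1024 * 1024
TARGET = 256 * MB  # assumed target file size within the 65 MB - 1 GB range


def plan_compaction(file_sizes: list[int], target: int = TARGET) -> list[list[int]]:
    """Group file indexes into batches whose combined size stays at or under target.

    Next-fit greedy: walk the files in order and start a new batch when the next
    file would push the current batch past the target. A file larger than the
    target ends up alone in its own batch.
    """
    batches: list[list[int]] = []
    current: list[int] = []
    current_size = 0
    for index, size in enumerate(file_sizes):
        if current and current_size + size > target:
            batches.append(current)
            current, current_size = [], 0
        current.append(index)
        current_size += size
    if current:
        batches.append(current)
    return batches


sizes = [100 * MB, 120 * MB, 50 * MB, 200 * MB, 10 * MB]
print(plan_compaction(sizes))  # [[0, 1], [2, 3], [4]]
```

Next-fit is chosen for readability. Bin-packing heuristics such as first-fit decreasing would usually produce fewer, fuller batches.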

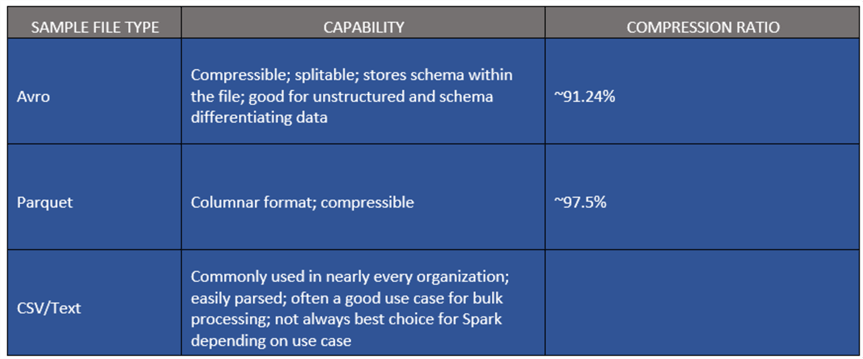

For Curated or Modeled zones that require highly performant, read-optimized analytics, columnar formats such as Parquet and Databricks Delta are ideal choices. They take advantage of predicate pushdown and column pruning to save time and cost.

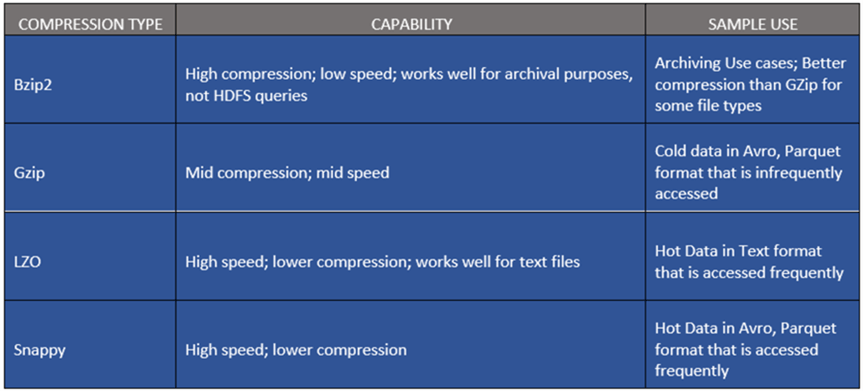

Here are some sample file types along with their capabilities and approximate compression ratio.

Security

The following security features must be considered when designing a data lake.

RBAC (Role-Based Access Control) – Control Plane Permissions

Control Plane based RBAC permissions are intended to give a security principal rights only at the Azure resource level and do not include any Data Actions. Granting a user a ‘Reader’ role will not grant access to the Storage Account data since additional ACLs or RBAC Data Plane permissions will be required. Best practice is to use Control Plane RBAC in combination with Folder/File level ACLs.

RBAC (Role-Based Access Control) – Data Plane Permissions

When a security principal is assigned an RBAC Data Plane role that grants the requested operation, the request is authorized by RBAC alone and ACLs are not evaluated. As a result, ACLs cannot be used to restrict that principal's access at the file and folder level.

RBAC data plane permissions can be applied as low as the container level.
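The simplified Python model below illustrates this evaluation order. RBAC data plane roles are checked first, and ACLs are consulted only when RBAC does not grant the action. The `Principal` class and permission strings are hypothetical. Real ACL checks also involve execute permission on ancestor folders, the mask and group membership.

```python
from dataclasses import dataclass, field


@dataclass
class Principal:
    name: str
    # Data actions granted by RBAC data plane roles (e.g. "read" for Storage Blob Data Reader).
    rbac_actions: set[str] = field(default_factory=set)
    # Simplified ACLs: path -> permission string such as "r-x".
    acls: dict[str, str] = field(default_factory=dict)


ACL_LETTER = {"read": "r", "write": "w", "execute": "x"}


def is_authorized(principal: Principal, path: str, action: str) -> tuple[bool, str]:
    """Return (allowed, deciding_layer), evaluating RBAC before ACLs."""
    if action in principal.rbac_actions:
        return True, "rbac"  # RBAC grants the action, so ACLs are never consulted
    permissions = principal.acls.get(path, "---")  # no entry means no ACL permissions
    return ACL_LETTER[action] in permissions, "acl"


analyst = Principal("analyst", rbac_actions={"read"}, acls={"Raw/Sensitive": "---"})
etl = Principal("etl", acls={"Raw/SalesDB": "-wx"})
print(is_authorized(analyst, "Raw/Sensitive", "read"))  # (True, 'rbac'): the empty ACL cannot restrict
print(is_authorized(etl, "Raw/SalesDB", "write"))  # (True, 'acl')
```

The `analyst` case shows why broad data plane roles and fine-grained ACLs should not be mixed for the same principal. The role silently overrides the restrictive ACL.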

The built-in RBAC Data Plane roles that can be assigned include the following. Additional detail can be found here:

- Storage Blob Data Owner: Use to set ownership and manage POSIX access control for Azure Data Lake Storage Gen2.

- Storage Blob Data Contributor: Use to grant read/write/delete permissions to Blob storage resources.

- Storage Blob Data Reader: Use to grant read-only permissions to Blob storage resources.

- Storage Queue Data Contributor: Use to grant read/write/delete permissions to Azure queues.

- Storage Queue Data Reader: Use to grant read-only permissions to Azure queues.

- Storage Queue Data Message Processor: Use to grant peek, retrieve, and delete permissions to messages in Azure Storage queues.

- Storage Queue Data Message Sender: Use to grant add permissions to messages in Azure Storage queues.

POSIX-like Access Control Lists

File and folder level access within ADLS Gen2 is granted by ACLs. Regardless of ACL permissions, RBAC Control Plane permissions will be needed in combination with ACLs. As a best practice, it is advised to assign security principals an RBAC Reader role at the Storage Account/Container level and then apply restrictive, selective ACLs at the file and folder level.

There are two types of ACLs. Access ACLs control access to a file or folder. Default ACLs can be set only on folders and act as a template for the Access ACLs of new child files and folders created beneath them. More detail on ACLs can be found here.
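Access ACLs also interact with folder traversal. To create files in a folder, a principal needs Write and Execute on that folder plus Execute on every ancestor folder. The Directories section grants a source system write access at the DataSource folder with a default ACL. The following Python sketch computes the ACL entries that source system's identity would need to write under `Raw/SalesDB`. The object ID and folder names are hypothetical. The base `user::`, `group::`, `other::` and `mask::` entries are left out for brevity.

```python
def write_acl_plan(path: str, object_id: str) -> dict[str, str]:
    """Map each folder on the path to the ACL entries a writer needs (simplified)."""
    folders = path.strip("/").split("/")
    plan: dict[str, str] = {"/": f"user:{object_id}:--x"}  # container root: traverse only
    for depth in range(1, len(folders)):
        ancestor = "/".join(folders[:depth])
        plan[ancestor] = f"user:{object_id}:--x"  # execute lets the principal traverse ancestors
    target = "/".join(folders)
    # -wx lets the principal create children in the target folder; the default
    # entry is copied onto new child folders and files, so daily folders inherit access.
    plan[target] = f"user:{object_id}:-wx,default:user:{object_id}:rwx"
    return plan


for folder, entries in write_acl_plan("Raw/SalesDB", "11111111-2222-3333-4444-555555555555").items():
    print(folder, entries)
```

The entries use the short ACL form, `[default:]user:<object-id>:rwx`, used by Azure tooling. Before applying them, check whether your CLI or SDK call merges these entries into the existing ACL or replaces it.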

Shared Access Signature

A Shared Access Signature (SAS) grants users limited access to containers and blobs, with permissions such as read, write, or delete. Additionally, a SAS can be time-boxed with a start and expiry time that define when the signature is valid. This allows for temporary access to your storage account and makes it easy to manage different levels of access for users within or outside of your organization.

Additional detail related to granting limited access to Azure Storage using SAS, along with the various types of SAS, can be found here.
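A SAS carries its permissions and time box in the URL query string: `sp` for permissions, `st` for the optional start time and `se` for the expiry time. The stdlib-only Python sketch below reads them back to show what a given token allows and whether it is currently valid. It does not verify the signature, which Azure does server-side. The permission map covers only a subset of SAS letters, and the example URL is hypothetical.

```python
from datetime import datetime, timezone
from typing import Optional
from urllib.parse import parse_qs, urlparse

# A subset of service SAS permission letters; unknown letters are passed through.
PERMISSIONS = {"r": "read", "a": "add", "c": "create", "w": "write", "d": "delete", "l": "list"}


def parse_sas_time(value: str) -> datetime:
    """Parse the full UTC form of a SAS time (e.g. 2021-06-01T08:00:00Z)."""
    return datetime.strptime(value, "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)


def describe_sas(url: str, now: Optional[datetime] = None) -> dict:
    """Summarize a SAS URL's permissions (sp) and time box (st/se); the signature is not checked."""
    params = {key: values[0] for key, values in parse_qs(urlparse(url).query).items()}
    now = now or datetime.now(timezone.utc)
    start = parse_sas_time(params["st"]) if "st" in params else None  # st is optional
    # se is required unless a stored access policy supplies it (not handled here).
    expiry = parse_sas_time(params["se"])
    return {
        "permissions": [PERMISSIONS.get(letter, letter) for letter in params.get("sp", "")],
        "valid_now": (start is None or start <= now) and now < expiry,
        "expires": expiry.isoformat(),
    }


# Hypothetical container SAS: read + list for an eight-hour window.
example_url = (
    "https://contosolake.blob.core.windows.net/datalake"
    "?sp=rl&st=2021-06-01T08:00:00Z&se=2021-06-01T16:00:00Z&sv=2020-08-04&sr=c&sig=REDACTED"
)
print(describe_sas(example_url, now=datetime(2021, 6, 1, 12, tzinfo=timezone.utc)))
```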

Data Encryption

Data is secured both in motion and at rest, and ADLS manages data encryption, decryption, and placement of the data automatically. ADLS also offers functionality that allows a data lake administrator to manage encryption.

By default, Azure Data Lake Storage Gen2 encrypts data with Microsoft-managed keys. Customer-managed keys, stored in Azure Key Vault, provide additional control and flexibility. However, unless there is a compelling reason, it is recommended to let the Data Lake service manage encryption.

Data stored in ADLS is encrypted using either a system supplied or customer managed key. Additionally, data is encrypted using TLS 1.2 whilst in transit.

Network Transport

When network rules are configured, only applications requesting data over the specified set of networks can access a storage account. Access can be limited to your storage account to requests originating from specified IP addresses, IP ranges or from a list of subnets in an Azure Virtual Network (VNet). More details can be found here.
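The standard `ipaddress` module can approximate the effect of IP network rules, as in the Python sketch below. A request is allowed when its source address falls inside an allowed address or range; otherwise the default action applies. The sketch models only IP rules, not VNet subnet rules, trusted service exceptions or private endpoints. The addresses come from documentation ranges.

```python
import ipaddress


def is_allowed(client_ip: str, allowed: list[str], default_action: str = "Deny") -> bool:
    """Mimic a storage firewall: allow if the IP matches a rule, else apply the default action."""
    address = ipaddress.ip_address(client_ip)
    if any(address in ipaddress.ip_network(rule) for rule in allowed):
        return True
    return default_action == "Allow"


rules = ["203.0.113.0/24", "198.51.100.7"]  # hypothetical allowed range and single address
print(is_allowed("203.0.113.42", rules))  # True
print(is_allowed("192.0.2.10", rules))  # False
```

Setting the default action to Deny and listing only known ranges reflects the least-privilege intent of network rules. Anything not explicitly allowed is rejected.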

Private Endpoints for your storage account can be created, which assigns a private IP address from your VNet to the storage account and secures all traffic between your VNet and the storage account over a private link. More details can be found here.

Next Steps

- Read more about Architecting Azure Data Lakes.

- Read more about Data Lake Storage Known Issues.

- Read more about Data Lake Storage Supported Blob Features.

- Read more about Data Lake Storage Supported Azure Services.

- Read more about Scalability and performance targets for Storage Accounts.

- Read more about Getting Started with Delta Lake.

- Read more about Best Practices for using Azure Data Lake Storage Gen2.

- For more detail on permissions in Azure Data Lake Storage Gen2, read Granting Permissions in Azure Data Lake.

About the author

Ron L'Esteve is a trusted information technology thought leader and professional Author residing in Illinois. He brings over 20 years of IT experience and is well-known for his impactful books and article publications on Data & AI Architecture, Engineering, and Cloud Leadership. Ron completed his Master's in Business Administration and Finance from Loyola University in Chicago. Ron brings deep tec

This author pledges the content of this article is based on professional experience and not AI generated.
