By: Koen Verbeeck | Updated: 2022-04-28 | Comments (3) | Related: More > Import and Export

Problem

I'm new to data warehousing and working with data in general. In a couple of articles and job postings I have seen the acronym ETL. For example, they're searching for an ETL developer with experience in SSIS or Azure Data Factory (ADF). What exactly does ETL mean?

Solution

What is ETL?

ETL is an acronym standing for "Extract, Transform and Load". It's a term that originated in the 1970s and is often used in the context of data warehousing for decision making, data analysis, data visualization and more. However, it can be used in a broader context of data integration. Any system where data is extracted from one or more source systems and is copied into a destination system where the data is represented differently than in the source(s), might be called an ETL process.

We can summarize the concept of ETL in the following diagram:

Extract

In the Extract data phase of ETL, data is ingested from one or more sources (line of business, ERP, CRM, raw data, IOT, legacy systems, large data sets in the AWS, Azure or Google cloud, etc.). These sources can be anything, as long as it contains some sort of data of course. We can divide source data generally in three categories:

- Structured data. These are data sources which are "strongly typed". This means columns are defined and a data type is assigned. Typical examples are relational databases, REST APIs, OData Sources, SharePoint Lists etc. These data sources are typically the easiest to work with, as data is usually well defined (a datetime column in a database should only contain actual dates) and there are connectors available to access the data (such as ODBC, JDBC, OLE DB …). But be careful, it's not because the data comes from a relational database for example, that it cannot have bad quality. If a string column is used, any type of data can be stored in it. Excel is a good example of such a source that can have very bad data quality.

- Semi-structured data. The data is not in a relational format, but it's also not completely raw or unstructured. Typically, there's some sort of metadata present to give some structure or hierarchy to the data, such as tags or a schema file. Good examples of semi-structured data are XML, JSON and YAML. CSV files can be put into this category as well, but there's no actual check if the format is followed.

- Unstructured data. The data is not structured. This is sometimes also referred to as "raw files". These can be log files, clickstream data, photos, videos and so on.

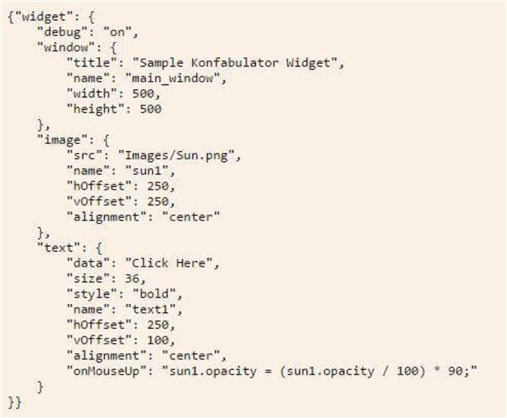

An example of a JSON file with a hierarchy (taken from the tip Introducing JSON for SQL Server):

There are also different methods on how much of the data is extracted:

- Full load. Every time the ETL process is started, the whole data set is extracted. This is fine for smaller data sets (and the definition of small depends on your environment), or for data sets where you cannot determine what is new or changed since the last time you loaded data into the staging area or production database.

- Incremental Load. If there's a metadata present in the source, you can use this to determine the set of data that is now or changed since the last load. This set is often referred to as "the delta". For example, if a table has "modified datetime" and "created datetime" columns, you can use these to find the rows that were inserted or updated since the last time the ETL ran. The point in time where you start taking data is sometimes called the "low water mark". It's important these metadata columns can be trusted; they need to be set by the application doing the data changes or by triggers. If someone runs a manual UPDATE statement and doesn't update the modified column, you will miss changes. Another option is an auto-number column, which is a column where a number is stored that automatically increases with every inserted row. In SQL Server, this can be a column with an IDENTITY constraint, or a SEQUENCE object. If a table has only inserts, you can retrieve the maximum number of the last ETL load and fetch all rows with a higher number. An ETL process working with incremental loads always must do a full load first (the initial load).

- Change Data Capture. Some source systems have a feature that allow you to retrieve the set of inserted, changed and deleted records since a certain transaction or timestamp. In SQL Server, we have change data capture and change tracking. The advantage of such systems over incremental loading is for example they can give you intermediate changes. Let's suppose a value is updated from A to B to C. In the initial load, the value A was extracted. In the next incremental load, the value C is found. It will never know there was a point in time where the value was equal to B, simply because the ETL didn't run frequently enough. CDC systems can give you the gross changes so that the value B is extracted as well. Some REST APIs have a sync endpoint that also allows you to retrieve the changes since a previous timestamp.

CDC and incremental loads give you the best performance since you only need to extract a small subset of the data. Full loads on the other hand are very easy to implement, but put more burden on the source system.

Transform

Once the data is extracted, certain data transformations can be applied. These can be business rules, data quality checks or standardizing of the data. Transformations can be done in various ways: by using standard components of an ETL tool, by writing expressions in SQL or by using a programming language such as Python, C#, Java and so on. The location where the data is transformed can also vary wildly: inside the memory of a machine running the ETL tool, inside the database engine, in a data lake (most likely some sort of blob storage), in a serverless environment such as Azure Functions, Azure Logic Apps, etc.

There are many possible transformations and it's impossible to list them all. Just to give you an idea of what is possible, here's a list with some examples:

- Removing invalid records from the set. For example, rows where a certain column has a NULL value.

- Removing duplicate rows.

- Replacing NULL values by a placeholder, like "N/A".

- Translating code values. The source system might have 'C' and 'D' as possible values, but in the reports we would like to show "Credit" and "Debit". The other way around is also an option.

- Mapping values to a standard value. For example, when we get country data from various sources, we can get different values for "Belgium": "BE", "BEL", "Belgique" or "België".

- Correcting spelling mistakes. "Belgum" can be corrected to "Belgium". This can be done using fuzzy logic algorithms, like the fuzzy transformations in SSIS.

- Sorting data

- Aggregating data. For example, the source data is on the minute level, but it is aggregated to the daily level.

- Transposing or unpivoting data.

- Joining data from multiple sources together.

- Deriving new values from data, e.g. revenue = quantity sold * unit price.

There's a bit of a discussion whether a data warehouse should do many corrections on a data set. Some opinions say that the data should reflect the reality and thus no changes should be made to the data. In the data vault modelling technique, a "raw vault" will capture exactly the data as it was found in the source systems. In a "business vault", modifications and business rules can be applied to the data. It's up to you to decide what is the right action for your environment.

Load

The final stage of the ETL process is loading the (optionally) transformed data into a destination. In some tools, the destination is also referred to as "sink". In many cases, the destination is a relational database (especially in data warehouse projects), but as mentioned, any process moving data around can be labelled as "ETL". Other destinations can include a data lake (e.g. Azure Blob Storage or Azure Data Lake storage) or a file system. Both are just containers to store data, the actual data format can also vary wildly: CSV, JSON, XML, Parquet… Data can also be exported to be picked up by external systems. Data can be dropped in an Excel file for further analyses or exports can be dropped on an (S)FTP server.

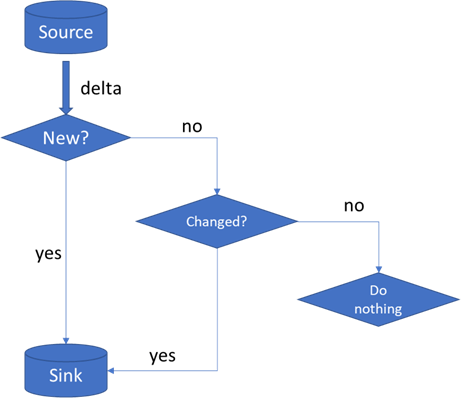

Often, the incoming data of an incremental load must be checked against the destination. Is a row new, or does it already exist in the destination? If yes, are there any fields that have changed? If yes again, we need to update the existing row. We can summarize this in the following flow chart:

This process is sometimes called an "upsert", the combination of updates and inserts. In the SQL language, both can be combined with the MERGE statement. With an incremental load, you cannot check immediately for deleted records, since you only loaded a subset of the data. If you need to find out if there are deletes, you'll need to do a full load of the business keys and check those against the destination. Let's illustrate this concept with an example. We have a source table with 3 rows:

| Code | Name | City | Modified Date |

|---|---|---|---|

| A | Bruce | Gotham | 2022-01-01 |

| B | Dick | Bludhaven | 2022-01-01 |

| C | Clark | Metropolis | 2022-01-01 |

With the initial load, we load all 3 rows to our destination. Let's say we update 1 row and delete 1 row.

| Code | Name | City | Modified Date |

|---|---|---|---|

| A | Bruce | Gotham | 2022-01-01 |

| B | Richard | Bludhaven | 2022-01-10 |

If there's no CDC system in place telling us a row is deleted, we will only find that the row with code B has changed. This will be our delta and we will update the destination accordingly. However, the row with code C still exists in the destination. To find the deletes, we need to load all codes (A, B and C) and check them against the destination. Then we'll discover code C is missing. There are two possible actions:

- Delete all rows with code C from the destination (there can be more rows if we're tracking history). This is a hard delete.

- Add a column to the destination table that flags if a row is deleted. The row will still exist, but we can figure out that it doesn't exist anymore in the source. This is a soft delete.

Because we're only loading one single column, the impact is much less than when we're doing a full load of the entire table.

Implementing a full load is simpler: empty the destination and load the data.

The possibilities for destinations are endless, but most of the time they all have one thing in common: the data is structured and formatted according to some business requirements. Frequently, the data is better structured than it was in the source.

Next Steps

- Check out the Integration Services tutorial. SSIS is the on-premises ETL tool from Microsoft to automate ETL processes.

- Another interesting tutorial is the My First SQL Server Business Intelligence Project Tutorial that provides examples on data management and data processing for the Microsoft Data Platform.

- We also have a tutorial on Azure Data Factory which is a cloud based ETL solution used by data engineers to access different sources, build ETL pipelines, workflows and destinations in real-time.

- Stay tuned for an upcoming tip on What is ELT?

About the author

Koen Verbeeck is a seasoned business intelligence consultant at AE. He has over a decade of experience with the Microsoft Data Platform in numerous industries. He holds several certifications and is a prolific writer contributing content about SSIS, ADF, SSAS, SSRS, MDS, Power BI, Snowflake and Azure services. He has spoken at PASS, SQLBits, dataMinds Connect and delivers webinars on MSSQLTips.com. Koen has been awarded the Microsoft MVP data platform award for many years.

Koen Verbeeck is a seasoned business intelligence consultant at AE. He has over a decade of experience with the Microsoft Data Platform in numerous industries. He holds several certifications and is a prolific writer contributing content about SSIS, ADF, SSAS, SSRS, MDS, Power BI, Snowflake and Azure services. He has spoken at PASS, SQLBits, dataMinds Connect and delivers webinars on MSSQLTips.com. Koen has been awarded the Microsoft MVP data platform award for many years.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2022-04-28