By: Hadi Fadlallah | Updated: 2022-05-04 | Comments | Related: > Integration Services Best Practices

Problem

Several SSIS developers report bad package performance when using different SSIS OLE DB components (OLE DB Source, OLE DB destination, OLE DB command). In this tutorial, we will take a look at why this occurs and what can be done.

Solution

This tutorial will explain some of the mistakes that SSIS developers usually make when configuring the OLE DB component while designing SSIS packages and how they should avoid them.

Filtering columns in the SSIS OLE DB Source

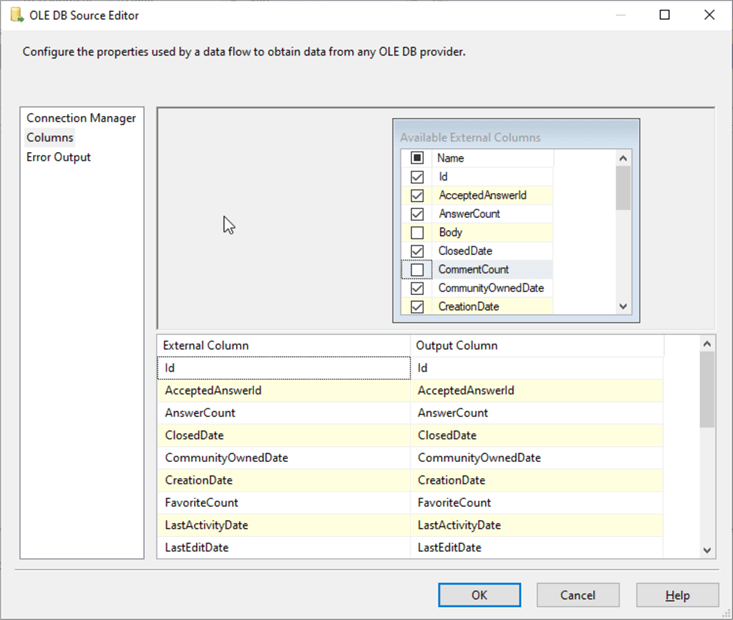

One of the most common mistakes is how developers filter columns using the OLE DB Source component. They think that unchecking columns in the SSIS OLE DB source component will minimize the amount of data loaded into memory.

Behind the scenes, unchecking will still retrieve them from the database engine and only remove them from the SSIS data pipeline; SSIS will execute a "SELECT *" command even if only one column is selected in the OLE DB Source editor.

How to only retrieve a few columns from the source table?

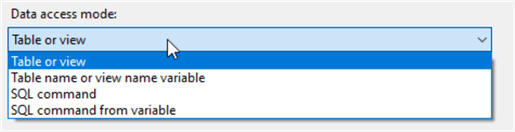

There are two efficient alternatives for filtering columns from the SSIS OLE DB Source editor. We can change the OLE DB Source data access mode and use a SQL command where we specify the needed columns in the SELECT statement. The other way is to create a view that only contains the required columns.

Note: Using a view or SQL command as a source can highly increase data load performance if they can retrieve the data from a covering index.

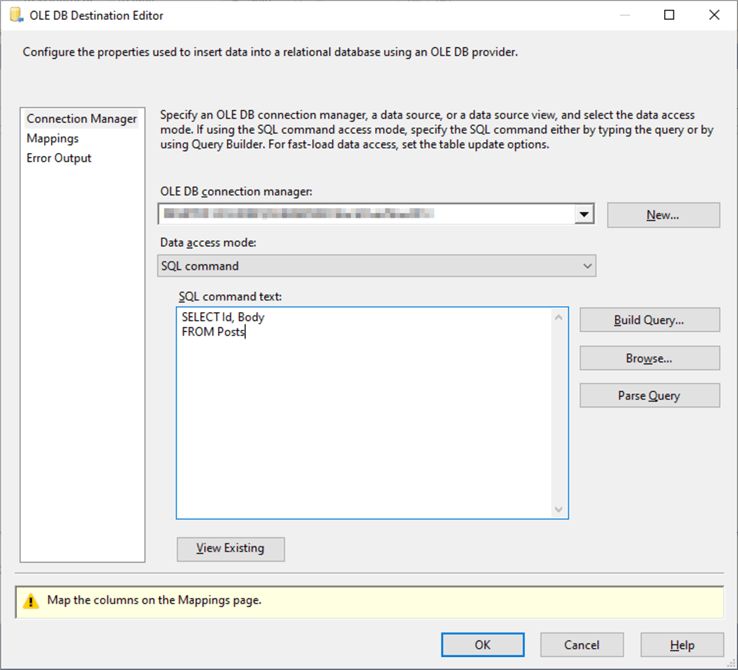

Using SQL Command as a destination

In contrast, one of the bad practices that can highly decrease the SSIS package performance is using a SQL command as a data access mode in the SSIS OLE DB Destination. Several times, I found some SSIS developers that use SQL Command as a destination. This approach is mainly used when the destination table contains many columns, and the SSIS developers want to only show the needed columns in the SSIS OLE DB Destination editor.

The main problem with this approach is that using a SQL Command as a destination will force a row-by-row insertion instead of inserting rows in batches. Besides, the developers will not benefit from the fast load option provided in the OLE DB Destination component.

Is there a good alternative to hide the useless columns?

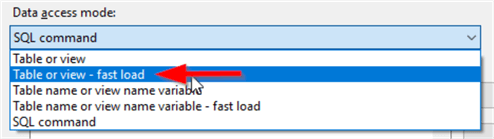

The most straightforward alternative is to create a view using the same SQL Command and select the "Table or view – fast load" data access mode to benefit from the "fast load" performance improvement.

Wrong OLE DB destination fast load configuration

When using the OLE DB Destination component to insert data into SQL Server, using the "Fast load" is highly recommended from a performance perspective. It guarantees a higher data insertion performance close to the BULK INSERT command performance.

What is the "fast load" option?

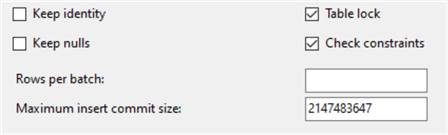

The "Fast load" option is used to bulk insert the data into the SQL Server database. When selecting a "Fast load" data access mode, some additional configurations appear in the OLE DB Destination editor:

- Keep identity: Keep identity values from the imported data file or use unique values assigned by SQL Server.

- Keep nulls: Retain a null value during the bulk load operation.

- Check constraints: Check constraints on the target table or view during the bulk import operation.

- Table lock: Acquire a table-level lock for the duration of the bulk load operation.

- Rows per batch: Specify the number of rows inserted per batch.

- Maximum commit size: Specify the maximum number of rows allowed per transaction.

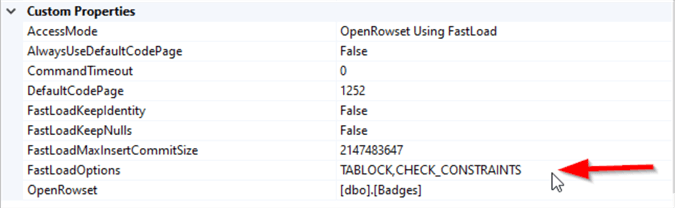

Three more "Fast load" options can be configured from the Advanced Editor by adding a keyword into the "FastLoadOptions" property:

- Kilobytes per batch (KILOBYTES_PER_BATCH): Specifies the size limit in kilobytes of a batch (instead of specifying the number of rows). For example, KILOBYTES_PER_BATCH=1024.

- Fire triggers (FIRE_TRIGGERS): Specifies whether triggers fire on the insert table.

- Ordered insertion (ORDER): Specifies how the input data is sorted. For example, ORDER ID DESC.

Fast load configuration tips

The following are some tips for using the fast load option:

- Having a better data insertion performance is not always a good thing; many developers uncheck the "Check constraints" option to increase the insertion speed (since the data validation time is decreased). This behavior usually causes messy data to be inserted into the destination table, which costs additional time for data cleaning and quality assurance tasks.

- Using the "ORDER" option can highly increase the data load performance if the data is sorted using the clustered index column(s).

- Mainly, it would be best if you did not modify the "Rows per batch", "Kilobytes per batch", and "Max commit size" properties unless you are dealing with a particular scenario. For example, you need to insert a huge volume of data on a machine having limited resources preventing it from handling the whole data set at once.

- Unchecking the "Table lock" will decrease the insertion performance since the OLE DB destination will try to get granular locks on each row/page and follow lock escalation to block the table.

- Some developers always check the "Keep Nulls" option to increase the data insertion performance. This behavior could be critical, especially when a default value is preferable for the columns that contain null. In addition, keeping nulls will decrease the data completeness. On the other hand, unchecking this option will replace all Nulls with the default value specified for the destination column and reduce the package performance.

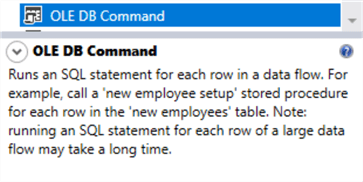

Using the OLE DB command to update large data

The last bad habit we are mentioning in this article is the usage of the OLE DB Command to update a large amount of data. Many developers use this component to update data stored within a data warehouse or a slowly changing dimension table which may take hours since the OLE DB command executes an SQL update query for each row. It is worth mentioning that this remark is mentioned in the OLE DB Command description in the SSIS toolbox.

Usually, this component should be used when handling small tables or when inserting a small chunk of data.

Alternative

An efficient alternative is to stage the data into a temp table then use an Execute SQL Task to update the destination table using the values stored in the staging table.

Next Steps

- It would be helpful if you read more about SSIS package performance optimization methods: SQL Server Integration Services SSIS Performance Tuning Techniques (mssqltips.com)

About the author

Hadi Fadlallah is an SQL Server professional with more than 10 years of experience. His main expertise is in data integration. He's one of the top ETL and SQL Server Integration Services contributors at Stackoverflow.com. He holds a Ph.D. in data science focusing on context-aware big data quality and two master's degrees in computer science and business computing.

Hadi Fadlallah is an SQL Server professional with more than 10 years of experience. His main expertise is in data integration. He's one of the top ETL and SQL Server Integration Services contributors at Stackoverflow.com. He holds a Ph.D. in data science focusing on context-aware big data quality and two master's degrees in computer science and business computing. This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2022-05-04