By: Hadi Fadlallah | Updated: 2023-09-29 | Comments (4) | Related: > Database Design

Problem

Over the last decade, we have experienced an unprecedented surge in data, primarily driven by the exponential expansion of social media platforms and the Internet of Things. This data explosion offered tremendous potential for organizations and introduced substantial complexities. Given the immense volume, speed, and diversity of data being generated, creating profiles for each data source has become imperative to enable organizations to broadly comprehend the attributes, quality, and structure of data originating from these diverse sources.

Solution

Data profiling means examining and understanding data. Profiling reveals data characteristics, structure, content, and quality. It involves assessing data values, identifying patterns, and gaining insights into distribution, relationships, and statistical properties.

This step-by-step technical article will explore how to perform data profiling on a database using SQL code. We will use a combination of SQL queries to gather various metrics and statistics about the data, such as column data types, null values, data length, and more. Ultimately, all SQL code portions are combined within a comprehensive script I used for years to examine SQL Server databases.

Each set of code needs to be run and the very last set of code returns the overall results for the data collected. Also, the DECLARE sections were repeated below to make it easier to copy the code and run as is. There is also a link at the very end of the article that has the entire code.

For this example, we will be collecting data from the AdventureWorks database, but this can be applied against any database.

Setup and Data Profiling Table Creation

The first part of our SQL code sets up the environment by creating a temporary table named ##Statistics. This table will store the results of our data profiling analysis for each column in the database tables. The columns in ##Statistics represent the tables and columns metadata besides various metrics we will calculate, such as column data type, null values count, maximum length, etc.

--MSSQLTips.com

IF OBJECT_ID('tempdb..##Statistics') IS NOT NULL DROP TABLE ##Statistics

IF OBJECT_ID('tempdb..#tblTemp') IS NOT NULL DROP TABLE #tblTemp

-- Create data profiling table

CREATE TABLE ##Statistics(

[table_schema] varchar(50),

[table_name] varchar(50),

[column_name] varchar(50),

[is_nullable] bit,

[data_type] varchar(20),

[default_value] varchar(50),

[max_length] int,

[max_length_reality] int,

[null_values] bigint,

[empty_strings_count] bigint,

[values_count] bigint,

[min_value] numeric(18,6),

[max_value] numeric(18,6),

[avg_value] numeric(18,6),

[stdev_value] numeric(18,6),

[median_value] numeric(18,6),

[date_before_1902] bigint,

[date_after_current] bigint,

[date_type_warning] bit,

[time_type_warning] bit,

[length_warning] bit,

[column_is_primary] bit,

[column_has_constraints] bit,

[column_is_foreign] bit,

[column_is_indexed] bit,

[column_is_identity] bit,

[column_is_partitioned] bit,

[table_row_count] bigint,

[table_total_space_KB] bigint,

[table_index_space_KB] bigint,

[table_used_space_KB] bigint,

[table_unused_space_KB] bigint,

[table_space_warning] bit,

[table_indexes_count] int,

[table_has_primary] bit,

[table_has_clustered_index] bit,

[table_partitions_count] int

)

Inserting Columns Information

Next, we use an INSERT INTO statement to populate the ##Statistics table with column information from the INFORMATION_SCHEMA.COLUMNS view. This view contains metadata about all columns in the database tables, such as column names, data types, and maximum lengths.

--MSSQLTips.com

Insert into ##Statistics(

[table_schema],[table_name],[column_name],[is_nullable],[data_type],[default_value],[max_length])

Select c.[table_schema],c.[table_name],c.[column_name], case c.[is_nullable] when 'NO' then 0 else 1 end,

c.data_type, c.column_default,

case when c.character_maximum_length is not null then c.character_maximum_length

when c.numeric_precision is not null then c.numeric_precision

else null end

from information_schema.columns c WHERE

not exists (select * from information_schema.views v

where v.table_name = c.table_name and v.table_schema = c.table_schema)

Calculating Columns Metrics

We use a cursor to loop through all tables in the database and calculate specific metrics for each table's columns. We create a temp table that stores the columns' metrics before updating them within the final result table we created at the beginning of our code.

Declare @strTablename nvarchar(100) Declare @strSchemaname nvarchar(100) Declare @strQuery nvarchar(max) Declare @strSecondaryQuery nvarchar(max) Declare @strUnpivot nvarchar(max) Create table #tblTemp([Schema] varchar(50), [Table] varchar(50), [Column] varchar(50), [FuncValue] numeric(18,6)) Declare csr Cursor for select table_schema, table_name from INFORMATION_SCHEMA.tables Open csr Fetch next from csr into @strSchemaname, @strTablename While @@fetch_status = 0 Begin -- The code is mentioned in the following subsections Fetch next from csr into @strSchemaname, @strTablename End close csr deallocate csr

Calculate Non-null Values for Nullable Columns

The metric calculated is the total number of values in the column. We created a dynamic SQL query that calculates those values and applies pivoting to store them within the temp table created.

--MSSQLTips.com

Declare @strTablename nvarchar(100)

Declare @strSchemaname nvarchar(100)

Declare @strQuery nvarchar(max)

Declare @strSecondaryQuery nvarchar(max)

Declare @strUnpivot nvarchar(max)

Set @strQuery = ''

Set @strSecondaryQuery = 'Select '

Set @strUnpivot = ' Unpivot ([FuncValue] for [Column] IN ('

Truncate table #tblTemp

If exists(select * from INFORMATION_SCHEMA.columns where table_name = @strTablename and table_schema = @strSchemaname and IS_NULLABLE = 'Yes' )

Begin

Select @strQuery = ISNULL(@strQuery,'') + 'Count([' + Column_name + ']) as [' + column_name + '],' from INFORMATION_SCHEMA.columns where table_name = @strTablename and table_schema = @strSchemaname and IS_NULLABLE = 'Yes'

Select @strUnpivot = ISNULL(@strUnpivot,'') + '[' + column_name + '] ,' from INFORMATION_SCHEMA.columns where table_name = @strTablename and table_schema = @strSchemaname and IS_NULLABLE = 'Yes'

Set @strQuery = 'Select ''' + @strSchemaname + ''' AS [Schema],''' + @strTablename + ''' as [Table], [Column], [FuncValue] From (Select ' + Substring(@strQuery,1,LEN(@strQuery) -1) + ' From [' + @strSchemaname + '].[' + @strTablename + ']) As p ' + SUBSTRING(@strUnpivot,1,Len(@strUnpivot) - 1) + ')) As unpvt '

Insert Into #tblTemp Exec (@strQuery)

Update T1

Set T1.[Values_Count] = T2.FuncValue

FROM ##Statistics T1 inner join #tblTemp T2 ON T1.table_schema = T2.[Schema] and T1.table_name = T2.[Table] and T1.column_name = T2.[Column]

end

Calculate the Maximum Length of Values

Similar to the previous step, we calculate each column's maximum length of values. We consider the actual data length in the column instead of the maximum length specified in the schema.

--MSSQLTips.com

Declare @strTablename nvarchar(100)

Declare @strSchemaname nvarchar(100)

Declare @strQuery nvarchar(max)

Declare @strSecondaryQuery nvarchar(max)

Declare @strUnpivot nvarchar(max)

Set @strQuery = ''

Set @strSecondaryQuery = 'Select '

Set @strUnpivot = ' Unpivot ([FuncValue] for [Column] IN ('

Truncate table #tblTemp

If exists(select * from INFORMATION_SCHEMA.columns where table_name = @strTablename and table_schema = @strSchemaname )

Begin

Select @strQuery = ISNULL(@strQuery,'') + 'MAX(LEN([' + Column_name + '])) as [' + column_name + '],' from INFORMATION_SCHEMA.columns where table_name = @strTablename and table_schema = @strSchemaname

Select @strUnpivot = ISNULL(@strUnpivot,'') + '[' + column_name + '] ,' from INFORMATION_SCHEMA.columns where table_name = @strTablename and table_schema = @strSchemaname

Set @strQuery = 'Select ''' + @strSchemaname + ''' AS [Schema],''' + @strTablename + ''' as [Table], [Column], [FuncValue] From (Select ' + Substring(@strQuery,1,LEN(@strQuery) -1) + ' From [' + @strSchemaname + '].[' + @strTablename + ']) As p ' + SUBSTRING(@strUnpivot,1,Len(@strUnpivot) - 1) + ')) As unpvt '

Insert Into #tblTemp Exec (@strQuery)

Update T1

Set T1.[max_length_reality] = T2.FuncValue

FROM ##Statistics T1 inner join #tblTemp T2 ON T1.table_schema = T2.[Schema] and T1.table_name = T2.[Table] and T1.column_name = T2.[Column]

end

Calculate the Occurrence of Empty Strings

In this step, we calculate the count of empty strings in columns with character data types. We differentiate between null values and empty strings.

--MSSQLTips.com

Declare @strTablename nvarchar(100)

Declare @strSchemaname nvarchar(100)

Declare @strQuery nvarchar(max)

Declare @strSecondaryQuery nvarchar(max)

Declare @strUnpivot nvarchar(max)

Set @strQuery = ''

Set @strSecondaryQuery = 'Select '

Set @strUnpivot = ' Unpivot ([FuncValue] for [Column] IN ('

Truncate Table #tblTemp

If exists(select * from INFORMATION_SCHEMA.columns where table_name = @strTablename and table_schema = @strSchemaname and DATA_TYPE like '%char%' )

Begin

Select @strQuery = ISNULL(@strQuery,'') + 'sum(case when [' + column_name + '] is null then 0 when ltrim(rtrim([' + column_name + '])) = '''' then 1 else 0 end) as [' + column_name + '],' from INFORMATION_SCHEMA.columns where table_name = @strTablename and table_schema = @strSchemaname and DATA_TYPE like '%char%'

Select @strUnpivot = ISNULL(@strUnpivot,'') + '[' + column_name + '] ,' from INFORMATION_SCHEMA.columns where table_name = @strTablename and table_schema = @strSchemaname and DATA_TYPE like '%char%'

Set @strQuery = 'Select ''' + @strSchemaname + ''' AS [Schema],''' + @strTablename + ''' as [Table], [Column], [FuncValue] From (Select ' + Substring(@strQuery,1,LEN(@strQuery) -1) + ' From [' + @strSchemaname + '].[' + @strTablename + ']) As p ' + SUBSTRING(@strUnpivot,1,Len(@strUnpivot) - 1) + ')) As unpvt '

Insert Into #tblTemp Exec (@strQuery)

Update T1

Set T1.[empty_strings_count] = T2.FuncValue

FROM ##Statistics T1 inner join #tblTemp T2 ON T1.table_schema = T2.[Schema] and T1.table_name = T2.[Table] and T1.column_name = T2.[Column]

end

Calculate the Occurrence of Date Values Before 1902

This part of the code calculates the count of date values that fall before January 1, 1902, in columns with date data types.

--MSSQLTips.com

Declare @strTablename nvarchar(100)

Declare @strSchemaname nvarchar(100)

Declare @strQuery nvarchar(max)

Declare @strSecondaryQuery nvarchar(max)

Declare @strUnpivot nvarchar(max)

Set @strQuery = ''

Set @strSecondaryQuery = 'Select '

Set @strUnpivot = ' Unpivot ([FuncValue] for [Column] IN ('

Truncate Table #tblTemp

If exists(select * from INFORMATION_SCHEMA.columns where table_name = @strTablename and table_schema = @strSchemaname and DATA_TYPE like '%date%' )

Begin

Select @strQuery = ISNULL(@strQuery,'') + 'sum(case when [' + column_name + '] is null then 0 when [' + column_name + '] <= ''19020101'' then 1 else 0 end) as [' + column_name + '],' from INFORMATION_SCHEMA.columns where table_name = @strTablename and table_schema = @strSchemaname and DATA_TYPE like '%date%'

Select @strUnpivot = ISNULL(@strUnpivot,'') + '[' + column_name + '] ,' from INFORMATION_SCHEMA.columns where table_name = @strTablename and table_schema = @strSchemaname and DATA_TYPE like '%date%'

Set @strQuery = 'Select ''' + @strSchemaname + ''' AS [Schema],''' + @strTablename + ''' as [Table], [Column], [FuncValue] From (Select ' + Substring(@strQuery,1,LEN(@strQuery) -1) + ' From [' + @strSchemaname + '].[' + @strTablename + ']) As p ' + SUBSTRING(@strUnpivot,1,Len(@strUnpivot) - 1) + ')) As unpvt '

Insert Into #tblTemp Exec (@strQuery)

Update T1

Set T1.[date_before_1902] = T2.FuncValue

FROM ##Statistics T1 inner join #tblTemp T2 ON T1.table_schema = T2.[Schema] and T1.table_name = T2.[Table] and T1.column_name = T2.[Column]

end

Calculate the Occurrence of Date Values after the Current Date

Similarly, we calculate the count of date values greater than the current date in columns with date data types.

--MSSQLTips.com

Declare @strTablename nvarchar(100)

Declare @strSchemaname nvarchar(100)

Declare @strQuery nvarchar(max)

Declare @strSecondaryQuery nvarchar(max)

Declare @strUnpivot nvarchar(max)

Set @strQuery = ''

Set @strSecondaryQuery = 'Select '

Set @strUnpivot = ' Unpivot ([FuncValue] for [Column] IN ('

Truncate Table #tblTemp

If exists(select * from INFORMATION_SCHEMA.columns where table_name = @strTablename and table_schema = @strSchemaname and DATA_TYPE like '%date%' )

Begin

Select @strQuery = ISNULL(@strQuery,'') + 'sum(case when [' + column_name + '] is null then 0 when [' + column_name + '] > GETDATE() then 1 else 0 end) as [' + column_name + '],' from INFORMATION_SCHEMA.columns where table_name = @strTablename and table_schema = @strSchemaname and DATA_TYPE like '%date%'

Select @strUnpivot = ISNULL(@strUnpivot,'') + '[' + column_name + '] ,' from INFORMATION_SCHEMA.columns where table_name = @strTablename and table_schema = @strSchemaname and DATA_TYPE like '%date%'

Set @strQuery = 'Select ''' + @strSchemaname + ''' AS [Schema],''' + @strTablename + ''' as [Table], [Column], [FuncValue] From (Select ' + Substring(@strQuery,1,LEN(@strQuery) -1) + ' From [' + @strSchemaname + '].[' + @strTablename + ']) As p ' + SUBSTRING(@strUnpivot,1,Len(@strUnpivot) - 1) + ')) As unpvt '

Insert Into #tblTemp Exec (@strQuery)

Update T1

Set T1.[date_after_current] = T2.FuncValue

FROM ##Statistics T1 inner join #tblTemp T2 ON T1.table_schema = T2.[Schema] and T1.table_name = T2.[Table] and T1.column_name = T2.[Column]

end

Calculate Max, Median, Min, Average, and Standard Deviation

We use dynamic SQL and unpivot techniques to calculate metrics like maximum, median, minimum, average, and standard deviation for numeric data types in each column.

--MSSQLTips.com

Declare @strTablename nvarchar(100)

Declare @strSchemaname nvarchar(100)

Declare @strQuery nvarchar(max)

Declare @strSecondaryQuery nvarchar(max)

Declare @strUnpivot nvarchar(max)

Truncate Table #tblTemp

-- Get Max values

declare @datatype nvarchar(50)

declare csrdatatypes cursor for select distinct DATA_TYPE from INFORMATION_SCHEMA.columns where table_name = @strTablename and table_schema = @strSchemaname and (DATA_TYPE like '%int%' or DATA_TYPE like '%decimal%' or DATA_TYPE like '%numeric%' or DATA_TYPE like '%float%')

open csrdatatypes

fetch next from csrdatatypes into @datatype

while @@FETCH_STATUS = 0

begin

Set @strQuery = ''

Set @strSecondaryQuery = 'Select '

Set @strUnpivot = ' Unpivot ([FuncValue] for [Column] IN ('

Select @strQuery = ISNULL(@strQuery,'') + 'max([' + column_name + ']) as [' + column_name + '],' from INFORMATION_SCHEMA.columns where table_name = @strTablename and table_schema = @strSchemaname and DATA_TYPE = @datatype

Select @strUnpivot = ISNULL(@strUnpivot,'') + '[' + column_name + '] ,' from INFORMATION_SCHEMA.columns where table_name = @strTablename and table_schema = @strSchemaname and DATA_TYPE = @datatype

Set @strQuery = 'Select ''' + @strSchemaname + ''' AS [Schema],''' + @strTablename + ''' as [Table], [Column], [FuncValue] From (Select ' + Substring(@strQuery,1,LEN(@strQuery) -1) + ' From [' + @strSchemaname + '].[' + @strTablename + ']) As p ' + SUBSTRING(@strUnpivot,1,Len(@strUnpivot) - 1) + ')) As unpvt '

Insert Into #tblTemp Exec (@strQuery)

Update T1

Set T1.[max_value] = T2.FuncValue

FROM ##Statistics T1 inner join #tblTemp T2 ON T1.table_schema = T2.[Schema] and T1.table_name = T2.[Table] and T1.column_name = T2.[Column]

Set @strQuery = ''

Set @strSecondaryQuery = 'Select '

Set @strUnpivot = ' Unpivot ([FuncValue] for [Column] IN ('

Truncate Table #tblTemp

-- Get Median values

Set @strQuery = ''

Set @strSecondaryQuery = 'Select '

Set @strUnpivot = ' Unpivot ([FuncValue] for [Column] IN ('

Select @strQuery = ISNULL(@strQuery,'') + 'PERCENTILE_CONT(0.5) WITHIN GROUP (ORDER BY [' + column_name + ']) OVER () as [' + column_name + '],' from INFORMATION_SCHEMA.columns where table_name = @strTablename and table_schema = @strSchemaname and DATA_TYPE = @datatype

Select @strUnpivot = ISNULL(@strUnpivot,'') + '[' + column_name + '] ,' from INFORMATION_SCHEMA.columns where table_name = @strTablename and table_schema = @strSchemaname and DATA_TYPE = @datatype

Set @strQuery = 'Select ''' + @strSchemaname + ''' AS [Schema],''' + @strTablename + ''' as [Table], [Column], [FuncValue] From (Select TOP 1 ' + Substring(@strQuery,1,LEN(@strQuery) -1) + ' From [' + @strSchemaname + '].[' + @strTablename + ']) As p ' + SUBSTRING(@strUnpivot,1,Len(@strUnpivot) - 1) + ')) As unpvt '

Insert Into #tblTemp Exec (@strQuery)

Update T1

Set T1.[median_value] = T2.FuncValue

FROM ##Statistics T1 inner join #tblTemp T2 ON T1.table_schema = T2.[Schema] and T1.table_name = T2.[Table] and T1.column_name = T2.[Column]

Set @strQuery = ''

Set @strSecondaryQuery = 'Select '

Set @strUnpivot = ' Unpivot ([FuncValue] for [Column] IN ('

Truncate Table #tblTemp

-- Get Min values

Select @strQuery = ISNULL(@strQuery,'') + 'min([' + column_name + ']) as [' + column_name + '],' from INFORMATION_SCHEMA.columns where table_name = @strTablename and table_schema = @strSchemaname and DATA_TYPE = @datatype

Select @strUnpivot = ISNULL(@strUnpivot,'') + '[' + column_name + '] ,' from INFORMATION_SCHEMA.columns where table_name = @strTablename and table_schema = @strSchemaname and DATA_TYPE = @datatype

Set @strQuery = 'Select ''' + @strSchemaname + ''' AS [Schema],''' + @strTablename + ''' as [Table], [Column], [FuncValue] From (Select ' + Substring(@strQuery,1,LEN(@strQuery) -1) + ' From [' + @strSchemaname + '].[' + @strTablename + ']) As p ' + SUBSTRING(@strUnpivot,1,Len(@strUnpivot) - 1) + ')) As unpvt '

Insert Into #tblTemp Exec (@strQuery)

Update T1

Set T1.min_value = T2.FuncValue

FROM ##Statistics T1 inner join #tblTemp T2 ON T1.table_schema = T2.[Schema] and T1.table_name = T2.[Table] and T1.column_name = T2.[Column]

Set @strQuery = ''

Set @strSecondaryQuery = 'Select '

Set @strUnpivot = ' Unpivot ([FuncValue] for [Column] IN ('

Truncate Table #tblTemp

-- Get Average

Select @strQuery = ISNULL(@strQuery,'') + 'avg([' + column_name + ']) as [' + column_name + '],' from INFORMATION_SCHEMA.columns where table_name = @strTablename and table_schema = @strSchemaname and DATA_TYPE = @datatype

Select @strUnpivot = ISNULL(@strUnpivot,'') + '[' + column_name + '] ,' from INFORMATION_SCHEMA.columns where table_name = @strTablename and table_schema = @strSchemaname and DATA_TYPE = @datatype

Set @strQuery = 'Select ''' + @strSchemaname + ''' AS [Schema],''' + @strTablename + ''' as [Table], [Column], [FuncValue] From (Select ' + Substring(@strQuery,1,LEN(@strQuery) -1) + ' From [' + @strSchemaname + '].[' + @strTablename + ']) As p ' + SUBSTRING(@strUnpivot,1,Len(@strUnpivot) - 1) + ')) As unpvt '

Insert Into #tblTemp Exec (@strQuery)

Update T1

Set T1.avg_value = T2.FuncValue

FROM ##Statistics T1 inner join #tblTemp T2 ON T1.table_schema = T2.[Schema] and T1.table_name = T2.[Table] and T1.column_name = T2.[Column]

Set @strQuery = ''

Set @strSecondaryQuery = 'Select '

Set @strUnpivot = ' Unpivot ([FuncValue] for [Column] IN ('

Truncate Table #tblTemp

-- Get STD

Select @strQuery = ISNULL(@strQuery,'') + 'Stdev([' + column_name + ']) as [' + column_name + '],' from INFORMATION_SCHEMA.columns where table_name = @strTablename and table_schema = @strSchemaname and DATA_TYPE = @datatype

Select @strUnpivot = ISNULL(@strUnpivot,'') + '[' + column_name + '] ,' from INFORMATION_SCHEMA.columns where table_name = @strTablename and table_schema = @strSchemaname and DATA_TYPE = @datatype

Set @strQuery = 'Select ''' + @strSchemaname + ''' AS [Schema],''' + @strTablename + ''' as [Table], [Column], [FuncValue] From (Select ' + Substring(@strQuery,1,LEN(@strQuery) -1) + ' From [' + @strSchemaname + '].[' + @strTablename + ']) As p ' + SUBSTRING(@strUnpivot,1,Len(@strUnpivot) - 1) + ')) As unpvt '

Insert Into #tblTemp Exec (@strQuery)

Update T1

Set T1.[stdev_value] = T2.FuncValue

FROM ##Statistics T1 inner join #tblTemp T2 ON T1.table_schema = T2.[Schema] and T1.table_name = T2.[Table] and T1.column_name = T2.[Column]

fetch next from csrdatatypes into @datatype

end

close csrdatatypes

deallocate csrdatatypes

Set @strQuery = ''

Set @strSecondaryQuery = 'Select '

Set @strUnpivot = ' Unpivot ([FuncValue] for [Column] IN ('

Truncate Table #tblTemp

Update Additional Metrics

In this section, we update specific metrics like whether a column is a primary key, foreign key, has constraints, is indexed, etc.

Check for Columns with Names Revealing Wrong Data Types

We use the following code to check if there is some column name that indicates a different data type than the actual one:

--MSSQLTips.com update ##Statistics set [date_type_warning] = case when data_type not like '%date%' and column_name like '%date%' then 1 else 0 end ,[time_type_warning] = case when data_type not like '%time%' and data_type not like '%int%' and column_name like '%time%' then 1 else 0 end

Check If Maximum Length is Over Estimated

The code below is to check if there is a significant difference between the actual maximum length and the column length specified in the metadata:

--MSSQLTips.com update ##Statistics set length_warning = case when (data_type like '%char%' and max_length is not null and max_length_reality is not null) and (max_length - max_length_reality >= (isnull(max_length,0)/4)) then 1 else 0 end

Retrieve Data Storage Information

After summarizing table structures and data, it is useful to check the storage information. Besides, we calculate the number of nulls in each column by subtracting the non-null values count (calculated previously) from the total number of rows extracted from the table metadata.

--MSSQLTips.com

Update t1

SET table_row_count = t2.row_count,

[Null_Values] = (t2.row_count - [Values_Count]),

[table_indexes_count] = IndexCount,

[table_total_space_KB] = TableSpaceKB,

[table_index_space_KB] = IndexSpaceKB,

[table_used_space_KB] = UsedSpaceKB,

[table_unused_space_KB] = UnusedSpaceKB,

[table_space_warning] = case when UnusedSpaceKB >= 0.25*TableSpaceKB then 1 else 0 end,

[table_partitions_count] = PartitionCount

FROM ##Statistics t1 inner join (

SELECT

t.NAME AS Table_Name,

s.NAME AS [Schema_Name],

COUNT(DISTINCT p.partition_number) AS PartitionCount,

SUM(p.rows) AS [Row_Count],

COUNT(DISTINCT i.index_id) AS IndexCount,

SUM(CASE WHEN i.index_id < 2 THEN a.total_pages ELSE 0 END) * 8 AS TableSpaceKB,

SUM(CASE WHEN i.index_id >= 2 THEN a.total_pages ELSE 0 END) * 8 AS IndexSpaceKB,

SUM(a.used_pages) * 8 AS UsedSpaceKB,

(SUM(a.total_pages) - SUM(a.used_pages)) * 8 AS UnusedSpaceKB

FROM

sys.tables t

INNER JOIN

sys.indexes i ON t.OBJECT_ID = i.object_id

INNER JOIN

sys.partitions p ON i.object_id = p.OBJECT_ID AND i.index_id = p.index_id

INNER JOIN

sys.allocation_units a ON p.partition_id = a.container_id

LEFT OUTER JOIN

sys.schemas s ON t.schema_id = s.schema_id

WHERE p.index_id < 2

GROUP BY

t.NAME, s.NAME

) t2 on t1.table_name = t2.table_name and t1.table_schema = t2.[schema_name]

Retrieve Table Structure Information

Primary Keys

First of all, we start by retrieving primary key information:

--MSSQLTips.com -- Check if column is a primary key update t1 set t1.[column_is_primary] = 1 from ##Statistics t1 inner join INFORMATION_SCHEMA.KEY_COLUMN_USAGE t2 on t1.table_name = t2.table_name and t1.column_name = t2.column_name and t1.table_schema = T2.TABLE_SCHEMA inner join INFORMATION_SCHEMA.TABLE_CONSTRAINTS t3 on t2.CONSTRAINT_NAME = t3.CONSTRAINT_NAME and t2.CONSTRAINT_SCHEMA = t3.CONSTRAINT_SCHEMA where t3.CONSTRAINT_TYPE = 'PRIMARY KEY' -- Table having primary keys update t1 set t1.[table_has_primary] = 1 from ##Statistics t1 inner join INFORMATION_SCHEMA.KEY_COLUMN_USAGE t2 on t1.table_name = t2.table_name and t1.table_schema = T2.TABLE_SCHEMA inner join INFORMATION_SCHEMA.TABLE_CONSTRAINTS t3 on t2.CONSTRAINT_NAME = t3.CONSTRAINT_NAME and t2.CONSTRAINT_SCHEMA = t3.CONSTRAINT_SCHEMA where t3.CONSTRAINT_TYPE = 'PRIMARY KEY'

Foreign Keys

Then, we retrieve the foreign key information:

--MSSQLTips.com -- column is a foreign key update t1 set t1.[column_is_foreign] = 1 from ##Statistics t1 inner join INFORMATION_SCHEMA.KEY_COLUMN_USAGE t2 on t1.table_name = t2.table_name and t1.column_name = t2.column_name and t1.table_schema = T2.TABLE_SCHEMA inner join INFORMATION_SCHEMA.TABLE_CONSTRAINTS t3 on t2.CONSTRAINT_NAME = t3.CONSTRAINT_NAME and t2.CONSTRAINT_SCHEMA = t3.CONSTRAINT_SCHEMA where t3.CONSTRAINT_TYPE = 'FOREIGN KEY'

Constraints

Next, we identify columns that have constraints

--MSSQLTips.com -- column has constraints update t1 set t1.[column_has_constraints] = 1 from ##Statistics t1 inner join INFORMATION_SCHEMA.KEY_COLUMN_USAGE t2 on t1.table_name = t2.table_name and t1.column_name = t2.column_name and t1.table_schema = T2.TABLE_SCHEMA inner join INFORMATION_SCHEMA.TABLE_CONSTRAINTS t3 on t2.CONSTRAINT_NAME = t3.CONSTRAINT_NAME and t2.CONSTRAINT_SCHEMA = t3.CONSTRAINT_SCHEMA where t3.CONSTRAINT_TYPE NOT IN ( 'FOREIGN KEY','PRIMARY KEY')

Indexed Columns

Identity indexed columns:

--MSSQLTips.com

-- column is indexed

update t4

set column_is_indexed = 1

From sys.all_columns t1 inner join sys.index_columns t2 on t1.column_id = t2.column_id and t1.object_id = t2.object_id

inner join sys.indexes t3 on t3.index_id = t2.index_id and t3.object_id = t2.object_id

inner join ##Statistics t4 on t1.object_id = object_id('[' +t4.table_schema + '].[' + t4.table_name + ']') and t1.name = t4.column_name

Identity Columns

Identify identity columns:

--MSSQLTips.com

-- column is identity

update t2

set t2.column_is_identity = 1

from sys.all_columns t1 inner join ##Statistics t2 on t1.object_id = object_id('[' + t2.table_schema + '].[' + t2.table_name + ']') and t1.name = t2.column_name

where t1.is_identity = 1

Table Having a Clustered Index

Retrieving tables having a clustered index:

--MSSQLTips.com

-- table has clustered index

update t2

set t2.table_has_clustered_index = 1

from sys.indexes t1 inner join ##Statistics t2 on t1.object_id = object_id('[' + t2.table_schema + '].[' + t2.table_name + ']')

where t1.type_desc like 'clustered%'

Replace Nulls with Zero

Finally, we replace null values in specific metrics columns with zero for better representation and clarity.

--MSSQLTips.com update ##Statistics set column_has_constraints = case when column_has_constraints = 1 then 1 else 0 end, table_has_clustered_index = case when table_has_clustered_index = 1 then 1 else 0 end, column_is_identity = case when column_is_identity = 1 then 1 else 0 end, column_is_indexed = case when column_is_indexed = 1 then 1 else 0 end, [table_has_primary] = case when [table_has_primary] = 1 then 1 else 0 end, [column_is_foreign] = case when [column_is_foreign] = 1 then 1 else 0 end, [column_is_primary] = case when [column_is_primary] = 1 then 1 else 0 end, [column_is_partitioned] = case when [column_is_partitioned] = 1 then 1 else 0 end, values_count = case is_nullable when 0 then table_row_count else values_count end, null_values = case is_nullable when 0 then 0 else null_values end

Running the Script

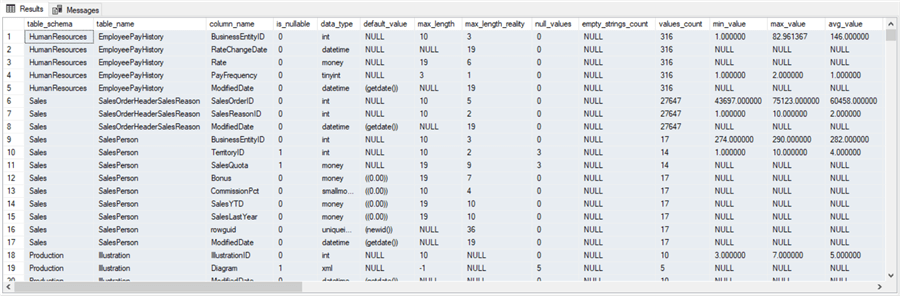

Finally, we should retrieve the data from the temporary statistics table we create using the following SELECT command:

--MSSQLTips.com select * from ##Statistics

Next Steps

- Here is the entire profiling script.

- In a later article, we will use the output of this script in a Power BI report to emphasize the potential warnings in our data.

- It is recommended to check the SSIS Data Profiling Task to learn more about the existing data profiling methods.

About the author

Hadi Fadlallah is an SQL Server professional with more than 10 years of experience. His main expertise is in data integration. He's one of the top ETL and SQL Server Integration Services contributors at Stackoverflow.com. He holds a Ph.D. in data science focusing on context-aware big data quality and two master's degrees in computer science and business computing.

Hadi Fadlallah is an SQL Server professional with more than 10 years of experience. His main expertise is in data integration. He's one of the top ETL and SQL Server Integration Services contributors at Stackoverflow.com. He holds a Ph.D. in data science focusing on context-aware big data quality and two master's degrees in computer science and business computing. This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2023-09-29