By: Sergiu Onet | Updated: 2024-02-14

Problem

We built and host our services in Google Cloud Platform (GCP) and want to be sure they can handle increased traffic, scale as needed, and stay available if an instance goes down. This tip walks through the GCP options that help meet availability and performance targets.

Solution

GCP has load balancing and autoscaling options that can be used to protect against unexpected instance failures, handle heavy traffic, scale our apps, and serve traffic closer to our users.

Managed Instance Groups

Let's talk about autoscaling in the context of managed instance groups. A managed instance group is a group of identical VM instances provisioned from a template and controlled as a single unit. You can create it in a single zone or across a region and resize it as needed: add instances when you need more compute power and remove them when the load decreases. With autoscaling enabled, the group resizes automatically based on CPU usage, monitoring metrics, load balancing capacity, or a queue-based workload.
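As a rough illustration of CPU-based autoscaling, the sketch below computes how many instances would bring the average CPU usage back to a target level. It is a simplified model and not Google's actual autoscaler algorithm; the function name `recommended_size` and all of its parameters are hypothetical.

```python
def recommended_size(current_size, observed_cpu_pct, target_cpu_pct,
                     min_size, max_size):
    """Return a group size that would bring average CPU near the target.

    All names here are hypothetical; CPU values are whole percentages.
    """
    if current_size <= 0 or target_cpu_pct <= 0:
        raise ValueError("current_size and target_cpu_pct must be positive")
    # Total load is current_size * observed_cpu_pct. Divide it by the target
    # per-instance load, rounding up with integer arithmetic.
    total_load = current_size * observed_cpu_pct
    wanted = (total_load + target_cpu_pct - 1) // target_cpu_pct
    # Never go below the minimum or above the maximum group size.
    return max(min_size, min(max_size, wanted))


if __name__ == "__main__":
    # 4 instances at 90% CPU with a 60% target.
    print(recommended_size(4, 90, 60, min_size=2, max_size=10))
```

The minimum and maximum bounds matter in practice: the minimum keeps spare capacity for sudden spikes, and the maximum caps cost.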

A load balancer can be added to a managed instance group to distribute traffic to all the instances inside the group. If an instance goes down, it's recreated with the same configuration from the template.

Load Balancer

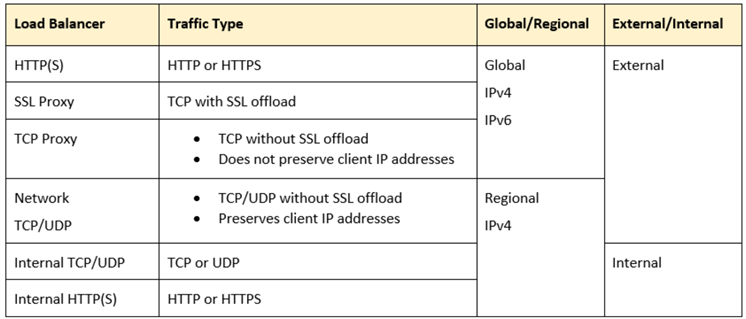

We can choose from several load balancer options based on traffic type, global/regional, and internal/external.

HTTP(S) Load Balancer (Application Load Balancer)

It's a Layer 7 load balancer that lets you route traffic to backend services based on request content, such as the URL path or the HTTP(S) headers. It's a global resource, has a single anycast IP address, routes traffic to the instance group closest to the user, and can also route traffic to specific instances only. It uses a global forwarding rule that sends incoming requests to a target HTTP proxy, which consults a URL map to decide which backend service should handle each request. This means you can send specific requests to backends dedicated to a purpose. For example, requests that look like www.yoursite.com/video can go to a backend service configured for video. The backend service then directs the request to a specific backend based on backend health, capacity, and zone.
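The URL map idea can be sketched as a longest-prefix lookup from request path to backend service. This is only a toy model of the concept; the backend names, the `PATH_RULES` table, and `pick_backend` are hypothetical and do not reflect the real URL map resource format.

```python
# Hypothetical backend service names, used only for illustration.
DEFAULT_BACKEND = "web-backend"
PATH_RULES = {
    "/video": "video-backend",
    "/static": "static-backend",
}


def pick_backend(path):
    """Return the backend for the longest matching path prefix."""
    best = ""
    for prefix in PATH_RULES:
        # Match "/video" and "/video/...", but not "/videos".
        matches = path == prefix or path.startswith(prefix + "/")
        if matches and len(prefix) > len(best):
            best = prefix
    # No rule matched: fall back to the default backend.
    return PATH_RULES.get(best, DEFAULT_BACKEND)


if __name__ == "__main__":
    for p in ("/video/intro.mp4", "/static/site.css", "/about"):
        print(p, "->", pick_backend(p))
```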

Health checks ensure that requests are sent only to healthy instances, where they are distributed in a round-robin fashion.
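A minimal sketch of round-robin distribution that skips unhealthy instances might look like the following. The `RoundRobinPool` class and the instance names are hypothetical, and a real load balancer tracks health through periodic probes rather than manual calls to `mark`.

```python
class RoundRobinPool:
    """Rotate through instances, skipping any marked unhealthy."""

    def __init__(self, instances):
        self._instances = list(instances)
        self._healthy = set(self._instances)
        self._next = 0  # index where the next search starts

    def mark(self, instance, healthy):
        """Record the result of a (simulated) health check."""
        if healthy:
            self._healthy.add(instance)
        else:
            self._healthy.discard(instance)

    def pick(self):
        """Return the next healthy instance in rotation order."""
        count = len(self._instances)
        for offset in range(count):
            candidate = self._instances[(self._next + offset) % count]
            if candidate in self._healthy:
                self._next = (self._next + offset + 1) % count
                return candidate
        raise RuntimeError("no healthy instances available")


if __name__ == "__main__":
    pool = RoundRobinPool(["vm-a", "vm-b", "vm-c"])
    pool.mark("vm-b", False)  # simulate a failed health check
    print([pool.pick() for _ in range(4)])
```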

Session affinity can be configured to send requests from the same client to the same VM instance. The backend service also has a timeout setting, 30 seconds by default, that controls how long it waits for a backend to respond before marking the request as failed.
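One common way to implement session affinity is to hash a stable client attribute, such as the client IP, and use the hash to choose a backend. The sketch below shows that general idea only; it is an assumption for illustration, not a description of how GCP implements affinity, and `affinity_backend` is a hypothetical name.

```python
import hashlib


def affinity_backend(client_ip, backends):
    """Map a client IP to one backend, the same one on every call."""
    if not backends:
        raise ValueError("backends must not be empty")
    # Use a stable hash; Python's built-in hash() for strings is
    # randomized per process, so it would not be stable across restarts.
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(backends)
    return backends[index]


if __name__ == "__main__":
    vms = ["vm-a", "vm-b", "vm-c"]
    print(affinity_backend("203.0.113.7", vms))
    print(affinity_backend("203.0.113.7", vms))  # same VM as the line above
```

Note that with this simple modulo scheme, adding or removing a backend changes the mapping for many clients, which is one reason affinity is usually treated as best effort.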

The backends have a balancing mode setting based on CPU usage or requests per second. This tells the balancer whether a backend is at full load. If so, the balancer directs the request to the closest region that can still handle requests.

The capacity setting lets you set a limit on your backend instances for CPU usage, number of connections, or a maximum rate.
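The interaction between balancing mode, capacity, and regional spillover can be sketched as follows, using requests per second as the balancing mode. The `RegionBackend` type, the region names, and the fallback to the closest region when every backend is full are assumptions made for this illustration.

```python
from dataclasses import dataclass


@dataclass
class RegionBackend:
    region: str
    max_rps: int       # capacity limit set on the backend
    current_rps: int   # load currently being served

    def has_headroom(self):
        return self.current_rps < self.max_rps


def route(backends_by_proximity):
    """Pick the closest region whose backend is below its capacity limit.

    The list must be ordered from closest to farthest from the client.
    """
    if not backends_by_proximity:
        raise ValueError("at least one backend is required")
    for backend in backends_by_proximity:
        if backend.has_headroom():
            return backend.region
    # Assumption for this sketch: if every backend is full, use the closest.
    return backends_by_proximity[0].region


if __name__ == "__main__":
    backends = [
        RegionBackend("europe-west1", max_rps=100, current_rps=100),  # full
        RegionBackend("europe-west4", max_rps=100, current_rps=40),
    ]
    print(route(backends))
```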

For HTTPS, you need to create SSL certificates for the load balancer's target proxy. Each certificate must have an SSL certificate resource containing the certificate information.

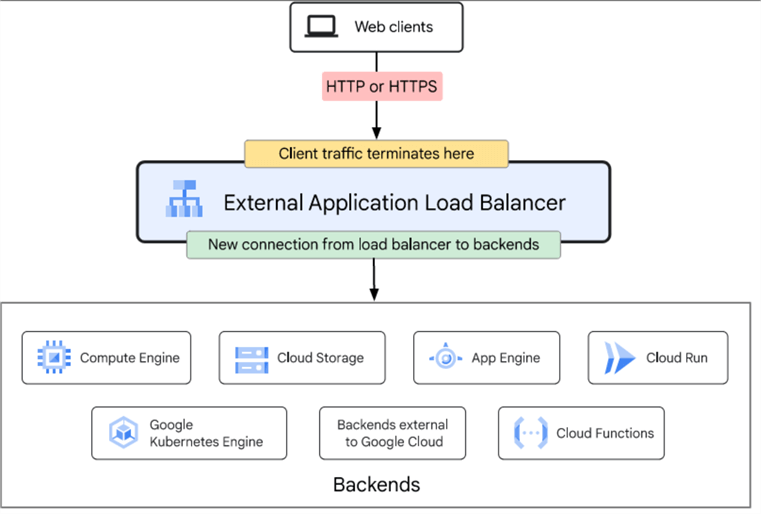

This load balancer can be deployed as External or Internal:

- External Load Balancer distributes the traffic originating from the internet to our VPC. It's globally distributed, has advanced traffic management features like traffic splitting or mirroring, protects from DDoS attacks with the help of Cloud Armor, and can use Cloud CDN for cached responses.

source - https://cloud.google.com/static/load-balancing/images/external-application-load-balancer.svg

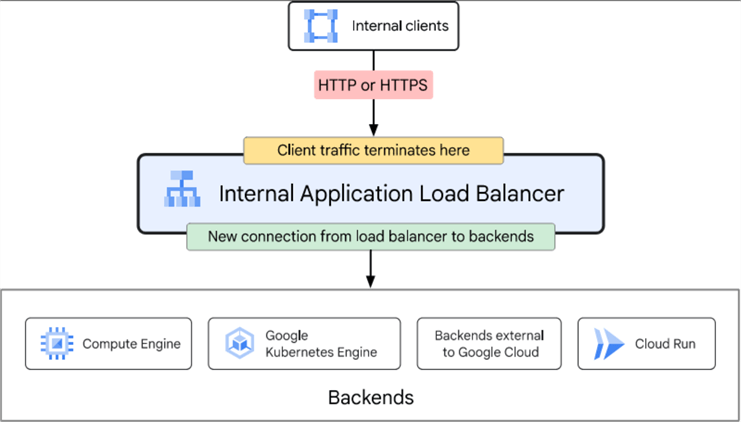

- Internal Load Balancer handles traffic originating from clients that use an internal IP address inside a VPC. It can be a regional or cross-region resource. In a regional configuration, the load balancer supports regional backend resources. In a cross-region setup, you can use global backend resources, and clients from any GCP region can send traffic to the balancer.

source - https://cloud.google.com/static/load-balancing/images/internal-application-load-balancer.svg

Proxy Network Load Balancer (TCP/SSL Proxy)

This is a Layer 4 load balancer used for TCP traffic. It can use SSL and distributes the traffic to the closest backend resource located in a VPC or another cloud. It supports port remapping where the port specified at the load balancer's forwarding rule can be different than the one used to connect to backends, and it can relay the client's source IP and port to the load balancer backend.

This load balancer can be deployed as External or Internal:

- External Load Balancer can be configured as global, regional, or classic. The global configuration is in Preview, implemented on Google's front-end infrastructure (GFE), and available for the Premium tier. The regional configuration is based on Envoy open-source proxy and is available in the Standard tier. Classic configuration is similar to the global configuration but can be configured as a regional resource in the Standard tier.

This load balancer forwards traffic from the internet to backend resources in a VPC, on-premises, or another cloud. It supports IPv4 and IPv6, integrates with Cloud Armor for additional protection, and can offload TLS at the load balancer layer using SSL proxy. You can also set SSL policies to better control the negotiation with clients.

- Internal Load Balancer is based on the open-source Envoy proxy, and it forwards traffic to backends on GCP, on-premises, or other clouds. The balancer is available in the Premium tier, accessible using the internal IP in the region where your VPC is, and only clients from that VPC have access. If global access is enabled, the balancer is available to clients from any region. The balancer can also be reached from other networks if you use Cloud VPN, Interconnect, or Network Peering to connect the networks. There is also a cross-region setup where you can distribute traffic to backends located in different regions. This configuration protects against a region failure and gives higher availability, and it lets you forward traffic to the closest backend.

Find out more about the architecture of an internal proxy balancer: Internal proxy Network Load Balancer overview.

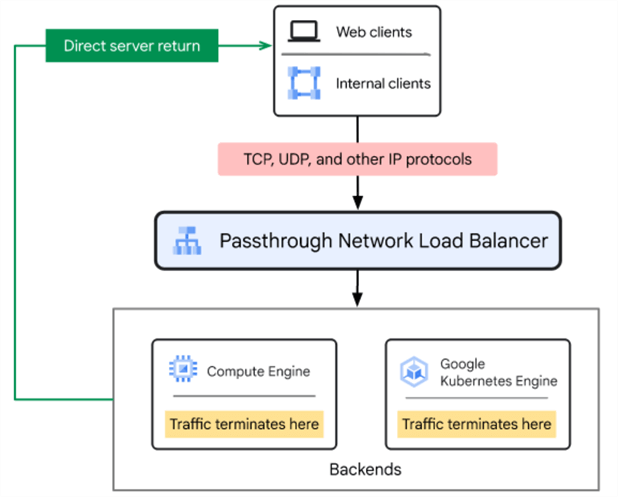

Passthrough Network Load Balancers

This is also a Layer 4 balancer. It's a regional resource, so traffic is distributed to backends in the same region as the balancer. It does not act as a proxy. Instead, the backends receive the original packets, which still carry the source and destination IP addresses, the protocol, and, where the protocol uses them, the ports. Another difference is that responses from the backends go straight to the clients rather than back through the balancer. If you need to preserve the client source IP or balance TCP, UDP, and/or SSL traffic on ports not supported by other balancers, this could be a good choice.
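To make the proxy versus passthrough difference concrete, the toy model below shows what a backend would see in each case. The `Packet` type, both functions, and the example addresses are hypothetical; the sketch only models addressing and ignores everything else a real balancer does.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Packet:
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    protocol: str


def through_proxy(packet, proxy_ip, proxy_port, backend_ip):
    """A proxy opens its own connection, so the backend sees the proxy."""
    return replace(packet, src_ip=proxy_ip, src_port=proxy_port,
                   dst_ip=backend_ip)


def through_passthrough(packet):
    """A passthrough balancer delivers the packet with addresses intact."""
    return packet


if __name__ == "__main__":
    client = Packet("203.0.113.7", 51000, "198.51.100.10", 443, "TCP")
    print(through_proxy(client, "10.0.0.2", 40000, "10.0.0.5").src_ip)
    print(through_passthrough(client).src_ip)
```

Because the backend in the passthrough case sees the real client address, it can reply to the client directly, which matches the behavior described above.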

This balancer can be configured as External or Internal:

- Using an External setup, clients from the internet or GCP VMs with an external IP can connect to the balancer, and Cloud Armor can be used for extra protection. This external balancer can be configured as backend service or target pool based.

Target pool is the legacy configuration and can be used with forwarding rules for TCP and UDP traffic only. This setup creates a group of instances that need to be in the same region. You can have only one health check for each pool, and each project can have a maximum of 50 target pools.

In a backend service setup, traffic is distributed to the backend service instances, health checks are performed for SSL and HTTPS, auto-scaling managed instance groups are supported, traffic can be sent to certain backends, and you can load balance to GKE.

- Internal passthrough balancer distributes traffic to VM instances in the same VPC region. Regional backends are supported, so you can scale inside a region if needed. You can also enable global access to accept connections from any region, load balance to GKE, and set the balancer as the next hop (gateway) so you can forward the traffic to the final route.

source - https://cloud.google.com/static/load-balancing/images/passthrough-network-load-balancer.svg

Next Steps

- There are many choices for load balancing. They share some similarities and features but also have some limitations. Carefully choose the right option for your needs based on traffic type, external/internal, global/regional, etc.

- Google has a good resource that provides an overview of all these aspects to help you make the right choice: Choose a load balancer

About the author

Sergiu Onet has been a SQL Server Database Administrator for the past 10 years and counting, focusing on automation and making SQL Server run faster.

This author pledges the content of this article is based on professional experience and not AI generated.

Article Last Updated: 2024-02-14