By: Ron L'Esteve | Updated: 2022-02-08 | Comments (2) | Related: > Azure

Problem

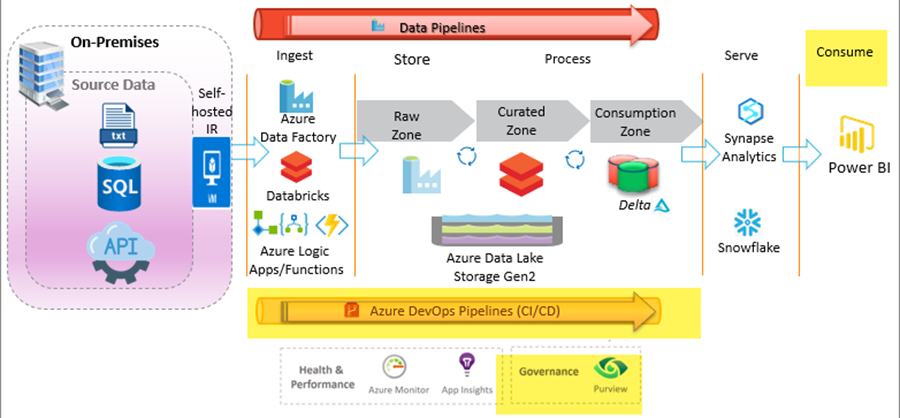

After data is ingested, stored, processed, and served within the Lakehouse, there are a variety of methods for consuming the served data through both Azure native and non-native tools. These Azure native consumption tools include Power BI, Analysis Services, Power Apps and more. Additionally, Cognitive Services and Azure Machine Learning empower Data Scientists, Advanced Analysts, Business Stakeholders and more to apply advanced analytics on their data to gain more insights, make better decisions, and contribute to the overall success of organizations. Continuous integration, deployment, and data governance are also key capabilities that customers are seeking in the Lakehouse. They are interested in learning more about the capabilities of Azure native consumption layer technologies, CI / CD, and data governance.

Solution

The Lakehouse architecture supports deep advanced analytics and AI use cases with cognitive services, Azure ML, Databricks ML, and Power BI AI/ML capabilities. Various versions of Power BI support deep reporting, data modeling, and dashboarding capabilities. It is critical to build DevOps best practices within any data platform and the Lakehouse supports multi-environment automated continuous and integration (CI / CD) best practices using Azure DevOps. All these modern Lakehouse components can be automated with CI/ CD, scheduled, monitored for health and performance with Azure Monitor and App Insights, secured with Key Vault, and governed with Purview. This article will focus on the consumption layer of the Lakehouse by describing the capabilities of reporting, data modeling and advanced analytics. You will also learn about continuous integration and deployment capabilities within the Lakehouse using Azure DevOps pipelines. From a data governance perspective, you will learn more about Purview and its roles in the Modern Data Lakehouse.

Consumption

Storing and serving data is a pivotal step in the end-to-end lifecycle from sourcing data to consuming it. It is within the storage and serving layer where cleansed, transformed, and curated data is made available for consumption by the end users. Consumption can come in many different variations such as through Azure native BI tools including Power BI, Analysis Services, Power Platform, etc. Data within the Lakehouse can also be consumed by a variety of non-Azure BI tools such as Tableau, Informatica and more. A good Lakehouse serving layer offer flexibility for consuming the data from a variety of BI tools. In this section, our efforts will focus on understanding the capabilities of some of these Lakehouse consumption options within Azure.

Analysis Services

Azure Analysis Services is a fully managed platform as a service that provides enterprise-grade data models in the cloud. With Analysis Services, users can create secured KPIs, data models, metrics and more from multiple data sources with a tabular semantic data model. Analysis Services integrates well with a variety of Azure services and can connect to Synapse Analytics Dedicated pools. When comparing Analysis Services with Power BI Premium (PBI), Analysis Services (AAS) may offer better scale out capabilities, metadata translations, object level security, and perspective feature set, however in the modern Lakehouse organizations and users are strongly considering and choosing Power BI Premium over AAS due to its vast features including paginated reports, data flows, AI workloads, pay per user models, and much more. The table below lists many of these comparisons between PBI and AAS.

| Power BI Premium | Azure Analysis Services | |

|---|---|---|

| Unlimited Power BI content viewing | Yes | No |

| Paginated Reports | Yes | No |

| Dataflows | Yes | No |

| AI Workload | Yes | No |

| Multi-model memory management | Yes | No |

| Pre-Aggregated tables | Yes | No |

| Composite models | Yes | No |

| Automated incremental refresh | Yes | No |

| Large datasets | Yes | Yes |

| Third party application support | Yes | Yes |

| Bring Your Own Key | Yes | No |

| Scale out | Not yet | Yes |

| Metadata Translations | Not yet | Yes |

| Object Level Security | Not yet | Yes |

| Perspectives | Not yet | Yes |

Power BI

Power BI (PBI) is an Azure native reporting technology which provides interactive visualizations, business intelligence capabilities which empower users to create their own visually appealing, accurate, and highly performant reports and dashboards. With Power BI, users can directly query the Lakehouse parquet files using the OPENROWSET function within a SQL query after specifying a SQL on-demand endpoint in the connection credentials. Within Synapse Analytics workspace, you can create a PBI linked service and used that within the various services of the Synapse Analytics unified analytics platform. Power BI also supports connectivity to a variety of other Lakehouse tools including Databricks, Snowflake, REST APIs and many more. From a cost optimization perspective, parquet will perform optimally due to its columnar storage format benefits, therefore the view on parquet files in Synapse Serverless SQL pools will result in low-cost compute resources. For a high number of queries across extremely large datasets, Dedicated SQL Pools may be the better storage choice to prevent from high costs incurring per query. Materialized views with External tables on Dedicated SQL pools may help with these high query volumes against the large datasets. When selecting a PBI service option, there are a few options to choose from which includes Premium versus Pro. Each option comes with its own capabilities and price points. The table below shows a side-by-side comparison between Power BI Pro and Premium to help with choosing the right feature for your reporting needs.

| Feature | Power BI Pro | Power BI Premium | Power BI Premium |

|---|---|---|---|

| Per user | Per capacity | ||

| Collaboration and analytics | |||

| Mobile app access | Yes | Yes | Yes |

| Publish reports to share and collaborate | Yes | Yes | |

| Paginated (RDL) reports | Yes | Yes | |

| Consume content without a per-user license | Yes | ||

| On-premises reporting with Power BI Report Server | Yes | ||

| Data prep, modeling, and visualization | |||

| Model size limit | 1 GB | 100 GB | 400 GB |

| Refresh rate | 8/day | 48/day | 48/day |

| Connect to 100+ data sources | Yes | Yes | Yes |

| Create reports and visualizations with Power BI Desktop 4 | Yes | Yes | Yes |

| Embed APIs and controls | Yes | Yes | Yes |

| AI visuals | Yes | Yes | Yes |

| Advanced AI (text analytics, image detection, automated machine learning) | Yes | Yes | |

| XMLA endpoint read/write connectivity | Yes | Yes | |

| Dataflows (direct query, linked and computed entities, enhanced compute engine) | Yes | Yes | |

| Analyze data stored in Azure Data Lake Storage | Yes | Yes | |

| Governance and administration | |||

| Data security and encryption | Yes | Yes | Yes |

| Metrics for content creation, consumption, and publishing | Yes | Yes | Yes |

| Application lifecycle management | Yes | Yes | |

| Multi-geo deployment management | Yes | ||

| Bring your own key (BYOK) | Yes | ||

| Auto scale add-on availability (preview) | Yes | ||

| Maximum storage | 10 GB/user | 100 TB | 100 TB |

| Continuous Integration and Deployment | |||

| Deployment pipelines (including paginated reports management) | Yes | Yes | |

Power Apps

Power Apps is a suite of apps, services, connectors and data platform that provides a rapid application development environment to build custom apps for your business needs. There are two styles of these apps: canvas apps and model driven apps. Canvas apps provide you with a blank canvas onto which you can drag and drop components in any formation to design a user interface. Model driven apps are based on underlying data stored in Common Data Service (CDS), which is a secure, cloud-based storage space that organizations can use to store business application data. Canvas apps are ideal for building task-based or role-based applications. Model-driven apps, on the other hand, are better for creating end-to-end solutions. Within the Lakehouse, Power Apps fits within the consumption layer since users are able build custom apps to interact with data in the storage and serving layer using GUI based BI tools to interact with the data through read and write back operations.

Advanced Analytics

As data makes its way into the storage, serving, and consumption layers of the Lakehouse, a subset of your data consumers including from Business Stakeholders, Data Scientists, and others may be interested in the data from the perspective of performing advanced analytics on the Lakehouse datasets. These various stakeholders and data consumers would be interested in understanding how the capabilities that the Lakehouse provides as it relates to Cognitive Services and Machine Learning. With the benefit of having data stored in the Lakehouse which supports a variety of file formats such as images, parquet, JSON, Avro, xml, REST APIs, and more Azure cognitive and machine learning services can seamlessly connect to these various Lakehouse datasets through their Azure native tools and technologies.

Cognitive Services

Cognitive Services brings AI to developers through APIs and offers a variety of services to provide the ability for AI to see, hear, speak, search, understand and accelerate decision-making into apps. Developers of all skill levels and those that do not have an expertise in Machine Learning can easily add AI capabilities to their apps. The current available Azure Cognitive services include the following. Each of these services has the capability of connecting to Lakehouse datasets store and served in Azure Storage (ADLSg2 and BLOB):

- Anomaly Detector: Identify potential problems early on.

- Content Moderator: Detect potentially offensive or unwanted content.

- Metrics Advisor: Monitor metrics and diagnose issues.

- Personaliser: Create rich, personalized experiences for every user.

- Immersive Reader: Help comprehend text using audio & visual cues.

- Language Understanding: Build natural language understanding in apps.

- QnA Maker: Create a conversational question & answer layer over data.

- Text Analytics: Detect sentiment, key phrases and named entities.

- Translator: Detect and translate more than 90 supported languages.

- Speech to Text: Transcribe audio speech into readable text.

- Text to Speech: Convert text to lifelike speech for natural interfaces.

- Speech Translation: Integrate real-time speech translation into apps.

- Speaker Recognition: Identify & verify speaking based on audio.

- Computer Vision: Analyze content in images and video.

- Custom Vision: Customize image recognition to fit your business needs.

- Face: Detect and identify people and emotions in images.

- Form Recognizer: Extract text, key-value pairs & tables from documents.

- Video Indexer: Analyze visual & audio of video & index content

- Search: Azure Cognitive Search is a cloud search service that gives developers APIs and tools for building a rich search experience over private, heterogeneous content in web, mobile, and enterprise applications.

Machine Learning

Azure Machine Learning is a service that delivers a complete data science platform. It supports both code-first and low-code experiences. Azure Machine Learning studio is a web portal in Azure Machine Learning that contains low-code and no-code options for project authoring and asset management and can connect to a variety of Lakehouse services including ADLS gen2, Azure SQL Database and more. Azure Machine Learning also integrates well with other Databricks, Data Factory, and Kubernetes services for model deployment. The three main machine learning techniques include the following:

- Supervised learning: Algorithms make predictions based on a set of labeled examples that you provide. This technique is useful when you know what the outcome should look like.

- Unsupervised learning: Algorithms label the datasets for you by organizing the data or describing its structure. This technique is useful when you don’t know what the outcome should look like.

- Reinforcement learning: Algorithms learn from outcomes and decide which action to take next. After each action, the algorithm receives feedback that helps it determine whether the choice it made was correct, neutral, or incorrect. It’s a good technique to use for automated systems that have to make a lot of small decisions without human guidance.

Continuous Integration, Deployment, and Governance

Once you’ve fully built out your ingestion, processing, serving, storage, and consumption layers of your Lakehouse Platform, there are various options for continuously integrating and deploying automated code to multiple environments along with applying robust data governance policies and practices on data. This section will cover data CI / CD and governance (Purview) within the Azure Data Lakehouse Platform.

DevOps

A CI/CD pipeline is used to automate the process of continuous integration and continuous deployment. The pipeline facilitates the software delivery process via stages like Build, Test, Merge, and Deploy. Azure DevOps Server is a Microsoft product that provides version control, reporting, requirements management, project management, automated builds, testing and release management capabilities. It covers the entire application lifecycle, and enables DevOps capabilities such as CI/CD within the Lakehouse. This section will cover the various CI /CD pipelines and process including SQL Database, Data Factory and MLOps deployments that can be built within the Lakehouse.

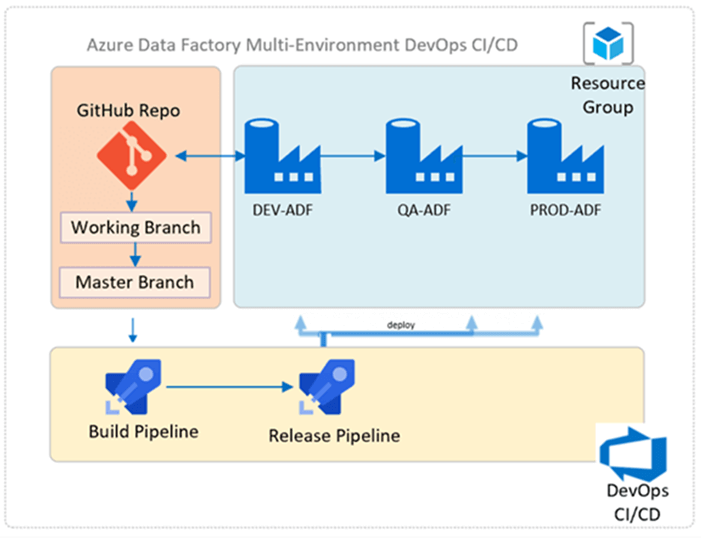

There are a few methods of deploying Azure Data Factory environments with Azure DevOps CI/CD. Source control repository options can range from GitHub to DevOps Git and implementation architectures can range from utilizing adf_publish branches to using working and master branches instead. Azure DevOps Build and Release pipelines can used for CI/CD, and custom PowerShell scripts can be used for deploying the CI/CD Data Factory resources automatically within upper environments. The Figure below illustrates the CI / CD process for ADF.

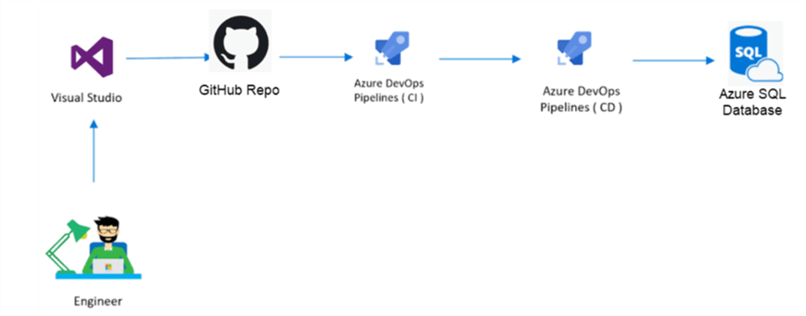

CI / CD within Azure SQL Databases and Synapse Dedicated SQL Pools is also possible with AzureDevOps. The best way to work with change control to prepare for CI and CD for databases is to perform all development using Visual Studio. There are a few source-control options within Visual Studio. GitHub is one of these source control options and offers a number of benefits including advanced security options. Integrating multiple applications such as Visual Studio, GitHub, Azure DevOps and Azure SQL Databases for a seamless CICD process is a growing need for many enterprises that are on a journey to modernize their data and infrastructure platform. The architectural flow diagram shown in the Figure below, illustrates how to deploy local SQL DDL and DML scripts from Visual Studio to an Azure SQL Database through a GitHub repository which is then picked up with an Azure DevOps Build (CI) and Release (CD) pipeline that tests and deploys the changes to the upper environments.

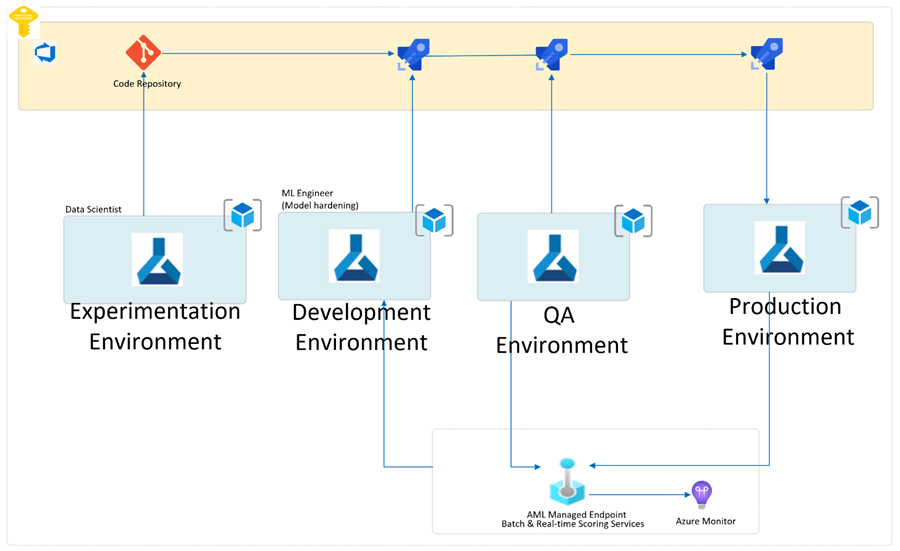

The DevOps CI / CD paradigm is prevalent across a variety of Azure PaaS services. DevOps for Machine Learning, also known as MLOps, enables Data Science, IT and Business teams to increase the pace of model development and deployment through automated continuous integration and deployment best practices. Well-designed MLOps paradigms support monitoring, validation, and governance of machine learning models. Large globally distributed organizations are seeking methods of success for deploying MLOps Frameworks across their organizations for multiple Data Science teams. They are seeking to clearly understand Azure MLOps Frameworks and patterns of success as they champion MLOps across their organizations. The Figure below, containing a generic Azure MLOps framework architecture diagram, illustrates just how Azure Machine Learning, coupled with Azure DevOps makes the concept of the Azure MLOps Framework a reality.

Purview

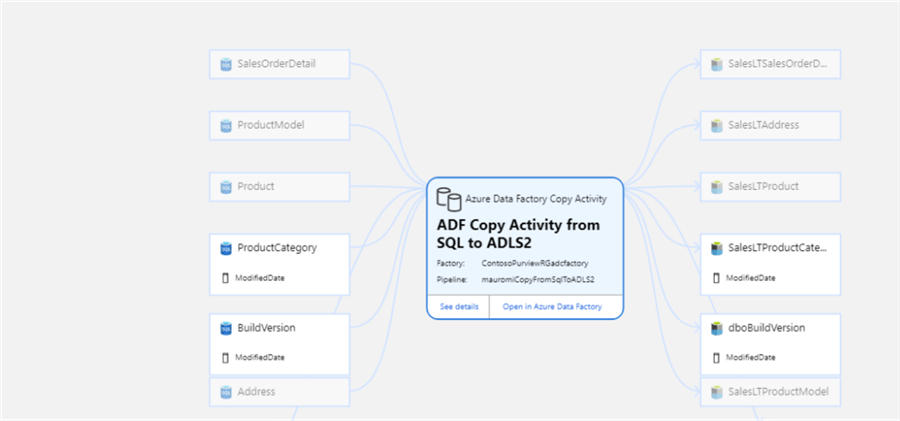

Purview is an Azure native cloud-based SaaS data governance technology which brings with it an easy-to-use UI and catalog for easily discovering and understanding data sources easily by the users who register and manage the data assets. Users can maintain a copy of the indexed metadata as well as a reference to the source location. Additionally, this metadata can be further enriched in Purview through tags, descriptions and more. The Figure below shows a sample of how lineage can be tracked within Purview for an Azure Data Factory copy activity. Lineage can also be registered with the Purview REST API and can be integrated with Azure Functions for a more customized and code driven experience that can be used with other Azure Data Lakehouse services such as Databricks.

Summary

The Modern Azure Data Lakehouse is quickly becoming a pivotal architectural paradigm in the cloud services and data warehousing domain. It offers a seamless native integration with ingestion, processing, storing, serving, and consumption layer technologies in Azure. In this article, you learned about the Reporting, Advanced Analytics, CI / CD, and Data Governance layers of the Data Lakehouse. You learned about the capabilities of Analysis Services, Power BI, and Power Apps as consumption layer. You also learned about Advanced Analytics Cognitive and Machine Learning services. Finally, you learned about Continuous Integration and Deployment using Azure DevOps and Data Governance using Purview. Organizations are seeking to move away from low performing, high cost, infrastructure bound architectures and technologies and into the Lakehouse where they will be able to reap the benefits of automated testing, continuous integrations and deployment, and advanced analytics capabilities. The Lakehouse paradigm is time and again proving to be this modern cloud solution as some of the largest organizations in the world adopt the Lakehouse.

Next Steps

- Read more about Maximizing Power BI with Snowflake

- Read more about Getting Started with Azure Purview for Data Governance

- Read more about Azure DevOps CI CD using GitHub Repo and Visual Studio Azure SQL Database Project

- Read the following article: Deploy CI/CD Changes for Azure Data Factory with Azure DevOps

- Read the following article: Deploy SQL Database from Azure DevOps with ARM and DACPAC File

About the author

Ron L'Esteve is a trusted information technology thought leader and professional Author residing in Illinois. He brings over 20 years of IT experience and is well-known for his impactful books and article publications on Data & AI Architecture, Engineering, and Cloud Leadership. Ron completed his Masterís in Business Administration and Finance from Loyola University in Chicago. Ron brings deep tec

Ron L'Esteve is a trusted information technology thought leader and professional Author residing in Illinois. He brings over 20 years of IT experience and is well-known for his impactful books and article publications on Data & AI Architecture, Engineering, and Cloud Leadership. Ron completed his Masterís in Business Administration and Finance from Loyola University in Chicago. Ron brings deep tecThis author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2022-02-08