By: Koen Verbeeck | Updated: 2022-03-02 | Comments | Related: > Azure Data Factory

Problem

When you need to move data around in the Microsoft Azure cloud, there are several options available to meet operational and business intelligence needs. Azure Data Factory is one of those options and in this tutorial, we'll explain what this service can do and how you can get started to learn more about it.

Solution

The Microsoft documentation introduces Azure Data Factory (ADF) as:

"A fully managed, serverless data integration service. Visually integrate data sources with more than 90 built-in, maintenance-free connectors at no added cost. Easily construct ETL and ELT processes code-free in an intuitive environment or write your own code."

Let's break this down:

- ETL and ELT processes. The E stands for "Extract", the T for "Transform" and the L for "Load". In other words, getting data from one location, do one or more transformations on it (for example, converting data types or moving from one file format to another) and then store the resulting data in another location. The main difference between ETL and ELT is the order in which the different operations are performed.

- Fully managed and serverless. You don't have to do a thing regarding set-up, administration and maintenance. You don't have to install a server or any software, you don't have to download and apply patches. It's all done for you. You only have to care about creating your data movement pipelines.

- Visually and code-free. Development is done in the browser. You don't need an expensive work station to code, you only need a browser and an Internet connection. Development is also done using a visual editor. There's very little coding required, aside from the occasional expression. The visual editor translates everything to Json for you behind the scenes. Sure, you can code the Json yourself (or generate it), but you don't need to.

Some common use cases \ workflows for ADF:

- Ingest data from SharePoint Lists in real-time and store the data in an Azure SQL Database

- Orchestration for a data warehouse load in Azure SQL Database or Azure Synapse Analytics

- Fetch data from a REST API and store the results as JSON files in Azure Blob Storage

- Each time a blog file arrives, ADF starts automatically and loads the data into a SQL Server table

- etc.

Getting Started with Microsoft Azure Data Factory

Before you can actually do anything, you need to install ADF in your Azure subscription. You can find a short introduction on how to do this in the tip Getting Started with Azure Data Factory - Part 1.

Terminology

If you read any tip or educational content on ADF, some terminology will always be used. Here's a quick overview:

- Linked Service: This is the definition of connection to a source. This can be a SQL Server connection string, or the URL of a SharePoint site, for example.

- Dataset: While a linked service defines where we can find data, the dataset will define how this data looks like. In the example of SQL Server, a dataset will define a table with its columns. In the case of a CSV file in Azure Blob Storage, the dataset will define the columns of the CSV file, the encoding, if a header is used, the delimiter and so on.

- Integration Runtime: This is the compute environment. ADF is a cloud service and when it is transferring your data, it needs some compute power. This is provided by the integration runtime (IR). There a couple of different runtimes. You have the Azure-IR for running your compute in a scalable and elastic manner, but there's also a self-hosted IR for when you want to run the compute on one of your own servers. Finally there's the Azure-SSIS IR, which is used to run Integration Services packages in ADF.

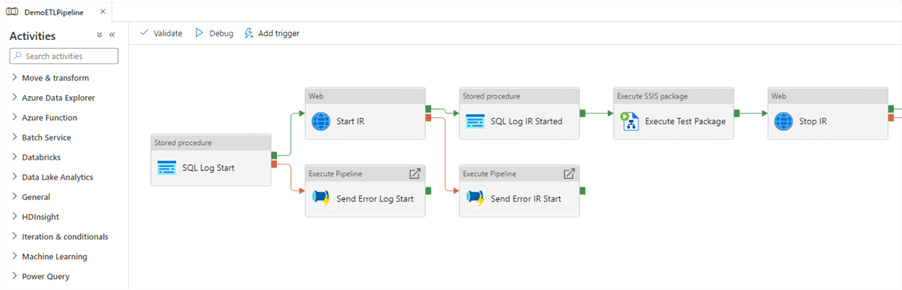

- Pipeline: This is the core of ADF. A pipeline holds on or more activities.. An activity will perform a specific task, such as copy activity, execute a stored procedure, execute PowerShell code, execute Python code, copy data from a sink to a target or run a big data job. Activities can be linked together with dependencies such as success, failure or completion. Pipelines can then be scheduled for execution, or they can be triggered by certain events such as the creation of a blob in a blob container.

- Data Flows: A special pipeline activity that has its own editors. A data flow can read in data, transform it in memory and then write it to a destination. There are two types of data flows: a (mapping) data flow and a Power Query data flow. Data flows use Azure Databricks behind the scenes and are thus more suited for big data scenarios.

Learn More About Microsoft ADF

If you want to get a quick feel about what ADF is, you can follow along with these tips Getting Started with Azure Data Factory - Part 1 and Azure Data Factory Pipeline Scheduling, Error Handling and Monitoring - Part 2 to create your first pipeline and how to schedule it. For a quick overview, you can also check out Azure Data Factory Overview. The tip What are Data Flows in Azure Data Factory? teaches you more about data flows and has easy examples you can follow.

If you're interested in comparisons with other tools, check out Choosing Between SQL Server Integration Services and Azure Data Factory or Azure Data Factory vs SSIS vs Azure Databricks.

SQL Server Integrations Services (SSIS) has been Microsoft's ETL tool since SQL Server 2005. It is mainly used for on-premises data transformation and integration scenarios, but you can also run SSIS solutions in ADF using the Azure-SSIS IR. The following tips explain how you can get started with migrating SSIS projects to the Azure cloud:

- Configure an Azure SQL Server Integration Services Integration Runtime

- Executing Integration Services Packages in the Azure-SSIS Integration Runtime

- SSIS Catalog Maintenance in the Azure Cloud

There's also a free webinar about "lift-and-shift" of SSIS projects to ADF: you can watch it here on demand. That's not all, there are many tips on the subject of ADF, too many to list them all. You can find them in this Azure Data Factory overview.

Next Steps

- There are more tips about SSIS Packages and Azure to transform data.

- For a video introduction to ADF, check out Building Data Pipelines with Azure Data Factory to Move Data.

- You can find more tips about Azure here.

About the author

Koen Verbeeck is a seasoned business intelligence consultant at AE. He has over a decade of experience with the Microsoft Data Platform in numerous industries. He holds several certifications and is a prolific writer contributing content about SSIS, ADF, SSAS, SSRS, MDS, Power BI, Snowflake and Azure services. He has spoken at PASS, SQLBits, dataMinds Connect and delivers webinars on MSSQLTips.com. Koen has been awarded the Microsoft MVP data platform award for many years.

Koen Verbeeck is a seasoned business intelligence consultant at AE. He has over a decade of experience with the Microsoft Data Platform in numerous industries. He holds several certifications and is a prolific writer contributing content about SSIS, ADF, SSAS, SSRS, MDS, Power BI, Snowflake and Azure services. He has spoken at PASS, SQLBits, dataMinds Connect and delivers webinars on MSSQLTips.com. Koen has been awarded the Microsoft MVP data platform award for many years.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2022-03-02