By: Ryan Kennedy

Problem

As a data leader, analyst, scientist, or engineer in today's tech world, there are more options than ever in selecting a stack of tooling that will best enable you and your team. In the cloud space, it can be especially difficult to distinguish capabilities between tools, and what their strengths and weaknesses are. The goal of this article is to illuminate exactly what Azure Databricks is so that you can determine what parts of the platform might make sense to add to your organization's data stack.

Solution

What is Azure Databricks?

Before we dive into the core components of Databricks, it is important to understand what Databricks is at the highest level. In one sentence, Databricks is a unified data and analytics platform built to enable all data personas: data engineers, data scientists, and data analysts. In practice, it is a managed platform that gives data developers all the tools and infrastructure they need to focus on data analytics, without worrying about managing clusters, libraries, dependencies, upgrades, and other tasks that are not related to driving insights from data.

Databricks is currently available on Microsoft Azure and AWS, and its launch on GCP was recently announced. All of the Databricks capabilities and components described in this article have nearly 100% parity across the three cloud service providers, with the caveat that GCP is in preview. In Microsoft Azure, Databricks is a first-party service that can be created through the Azure portal like other Azure services, and all billing / management is through Azure. This also means it integrates natively with Azure, including out-of-the-box Azure Active Directory integration and integration with most of Azure's data tools.

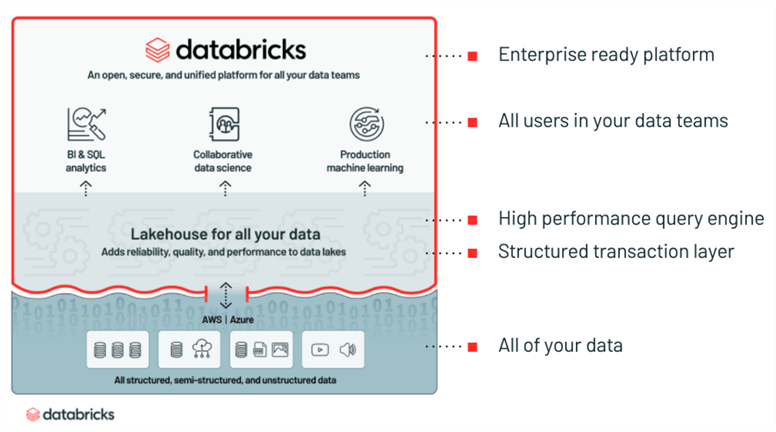

The vision of Databricks is the Lakehouse, which is a centrally managed data lake that acts as a single source of truth for all of your data teams. Data warehouses are traditionally on-premises solutions, for example built on SQL Server, used for high-concurrency, low-latency queries and LOB reporting, but they have a major drawback in being unable to handle unstructured data and ML/Data Science workloads. Legacy Data Lakes, such as Hadoop, were created to solve these problems, but they had their own drawbacks in performance and reliability. The purpose of the Lakehouse is to bridge this gap and marry the Data Lake and the SQL Data Warehouse into a single, centralized asset. This model democratizes your data by keeping it in the cloud object storage of your choice, in an open, non-proprietary format that can be read by any downstream technology. Here, object storage means your cloud provider's data lake storage (Azure = ADLS Gen2, AWS = S3, GCP = GCS).
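To make the storage mapping above concrete, here is a minimal sketch of how a data lake path is typically addressed on each cloud. The URI schemes (`abfss://`, `s3://`, `gs://`) are the standard ones for ADLS Gen2, S3, and GCS; the function name `lake_uri` and the container, account, and path values are hypothetical and exist only for illustration.

```python
def lake_uri(cloud: str, container: str, path: str, account: str = "") -> str:
    """Build an object-storage URI for a data lake path on a given cloud.

    `container` is the ADLS container or the S3/GCS bucket name.
    """
    path = path.lstrip("/")
    if cloud == "azure":
        if not account:
            raise ValueError("ADLS Gen2 URIs need a storage account name")
        # ADLS Gen2: abfss://<container>@<account>.dfs.core.windows.net/<path>
        return f"abfss://{container}@{account}.dfs.core.windows.net/{path}"
    if cloud == "aws":
        # S3: s3://<bucket>/<path>
        return f"s3://{container}/{path}"
    if cloud == "gcp":
        # GCS: gs://<bucket>/<path>
        return f"gs://{container}/{path}"
    raise ValueError(f"unknown cloud: {cloud!r}")


# Hypothetical names, used only to show the shape of each URI.
azure_path = lake_uri("azure", "raw", "/sales/2021", account="mylake")
aws_path = lake_uri("aws", "my-lake-bucket", "sales/2021")
gcp_path = lake_uri("gcp", "my-lake-bucket", "sales/2021")
```

Because the data sits at a plain storage path in an open format, any engine that understands the scheme can read it, which is the point of the Lakehouse model.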

One of the core principles of Databricks is that all of its underlying technologies are open source (Apache Spark, Delta, MLflow, etc.). Databricks brings these open-source technologies onto a single unified platform, improves them, and hardens them so they are enterprise ready out of the box. At no point are you locked in: your data stays where it is, and Spark code is Spark code, so it can be run on any Spark environment.

The above diagram shows an architecture where Databricks is the only data platform, but in large enterprises, there is no single tool or platform that can 'rule them all' – there will be existing processes, platforms, and tools already in place. For this reason, Databricks was made to be open and capable of integrating with many other technologies. Thus, it can fill specific gaps as needed.

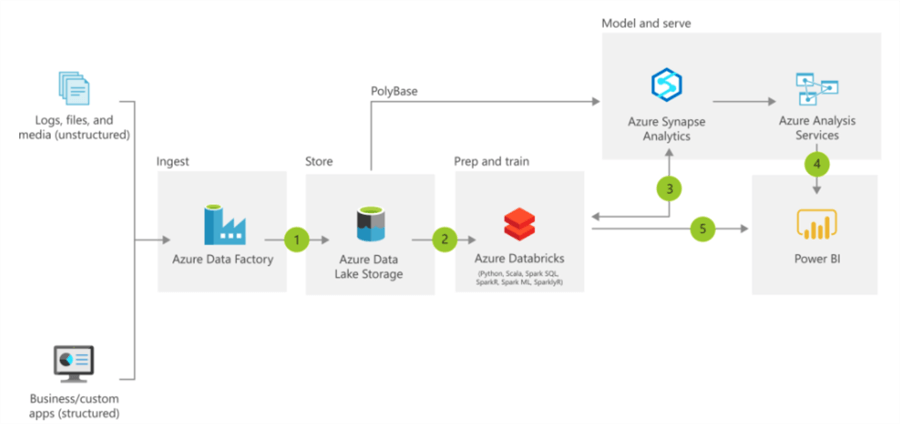

For example, in the Microsoft reference architecture shown here, Databricks is used for ETL and Machine Learning, and Synapse / Azure Analysis Services serve the Line of Business / Ad Hoc BI workloads. That said, you can still use Databricks through Power BI to perform ad hoc queries on your data lake.

This example is still consistent with the vision – making your Data Lake a centralized and democratized asset that can serve all down-stream data processes.

Now let's get down to brass tacks.

Databricks Core Components

Collaborative Workspace

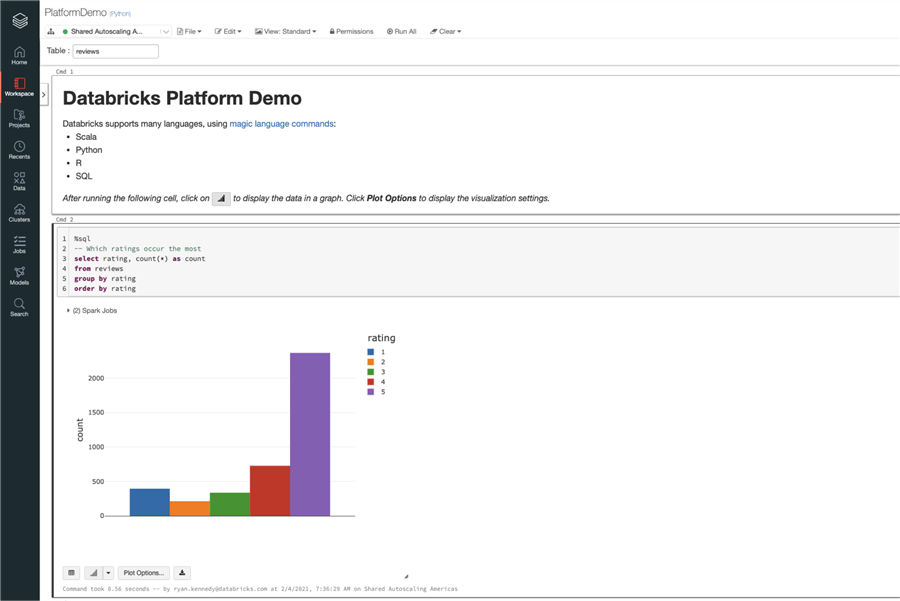

Developers will mainly interact with Databricks through its collaborative and interactive workspace. This is a notebook-based environment that has some of the following key features:

- Code collaboratively, in real time, in notebooks that support SQL, Python, Scala, and R

- Built-in version control and integration with Git / GitHub and other source control

- Enterprise-level security

- Visualize queries, build algorithms and create dashboards

- Create and schedule ETL / Data Science workloads from various data sources to be run as jobs

- Track and manage the machine learning lifecycle from development to production

Here is a screenshot of a Databricks Notebook and the Databricks Workspace.

For a deep dive into the Databricks workspace, read the article Getting Started With Azure Databricks.

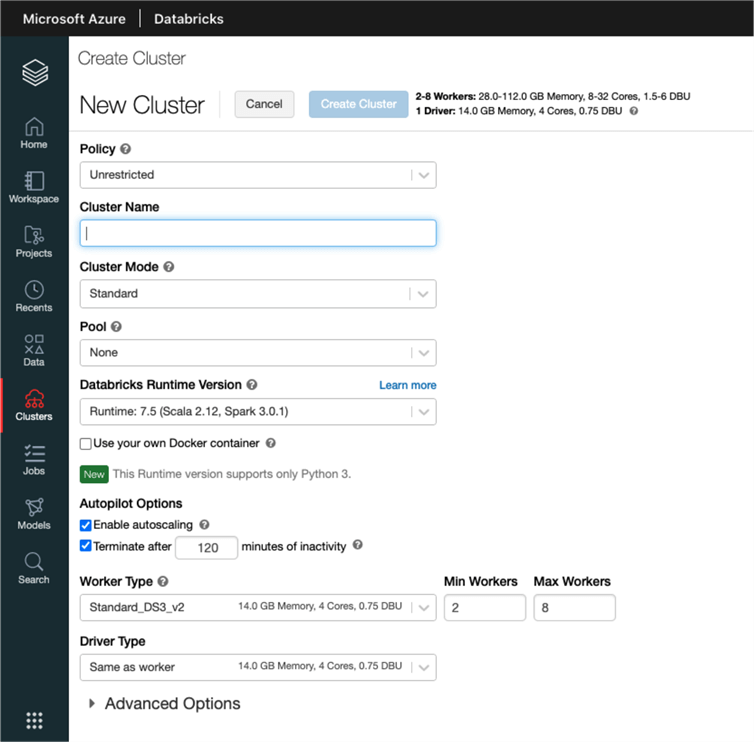

Managed Infrastructure

One of the key original value propositions of Databricks is its managed infrastructure, which takes the form of managed clusters. A cluster is a group of virtual machines that divide up the work of a query in order to return the results faster. By filling out 5-10 fields and clicking a button, you can spin up a Spark cluster that is optimized well beyond open-source Spark, includes many common data science and data analytics libraries, and can autoscale to meet the needs of a given workload. You pay for Databricks only for the time that a cluster is live, and there is a good deal of built-in functionality to reduce this cost. For example, a jobs cluster spins up to complete a specific job or task and then immediately shuts down.
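The handful of fields mentioned above can also be expressed as a JSON cluster definition. The sketch below builds one in Python; the field names follow the Databricks Clusters API as I understand it (`autoscale`, `autotermination_minutes`, and so on), while the helper name `cluster_spec`, the cluster name, the runtime version, and the VM size are placeholder assumptions you would swap for values valid in your own workspace.

```python
import json


def cluster_spec(name, min_workers, max_workers, idle_minutes=30):
    """Return a cluster definition that autoscales and shuts down when idle."""
    if not 1 <= min_workers <= max_workers:
        raise ValueError("need 1 <= min_workers <= max_workers")
    return {
        "cluster_name": name,
        "spark_version": "13.3.x-scala2.12",  # placeholder runtime version
        "node_type_id": "Standard_DS3_v2",    # placeholder Azure VM size
        # Databricks adds or removes workers within this range as load changes.
        "autoscale": {"min_workers": min_workers, "max_workers": max_workers},
        # Terminate after this many idle minutes so you stop paying for it.
        "autotermination_minutes": idle_minutes,
    }


spec = cluster_spec("etl-dev", min_workers=2, max_workers=8)
payload = json.dumps(spec, indent=2)  # body you could send to the Clusters API
print(payload)
```

The autoscale range and the idle timeout are the two settings that most directly control cost, since billing stops when the cluster terminates.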

For a deep dive on cluster creation in Databricks, read here.

Spark

Spark is an open-source distributed processing engine that processes data in memory – making it extremely popular for big data processing and machine learning. Spark is the core engine that executes workloads and queries on the Databricks platform. Databricks was founded by the original creators of Spark and continues to be the largest contributor to open-source Spark today.
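To give a feel for what "distributed processing" means, here is a toy sketch in plain Python. It is not Spark's API: threads stand in for worker machines, and the function names are made up for this example. It only illustrates the general pattern of splitting data into partitions, processing each partition independently in memory, and combining the partial results.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def split_into_partitions(rows, n):
    """Deal rows round-robin into n partitions."""
    return [rows[i::n] for i in range(n)]


def count_words(partition):
    """Work done independently on one partition (the 'map' side)."""
    counts = Counter()
    for line in partition:
        counts.update(line.lower().split())
    return counts


def distributed_word_count(rows, n_partitions=4):
    partitions = split_into_partitions(rows, n_partitions)
    # Each thread plays the role of a worker node in a cluster.
    with ThreadPoolExecutor(max_workers=n_partitions) as pool:
        partials = list(pool.map(count_words, partitions))
    # Combine the per-partition results (the 'reduce' side).
    total = Counter()
    for partial in partials:
        total.update(partial)
    return total


lines = ["spark runs in memory", "spark splits work", "work runs in parallel"]
result = distributed_word_count(lines, n_partitions=2)
```

Spark applies the same idea across many machines and adds scheduling, fault tolerance, and query optimization on top.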

Read more about Spark here: https://spark.apache.org/

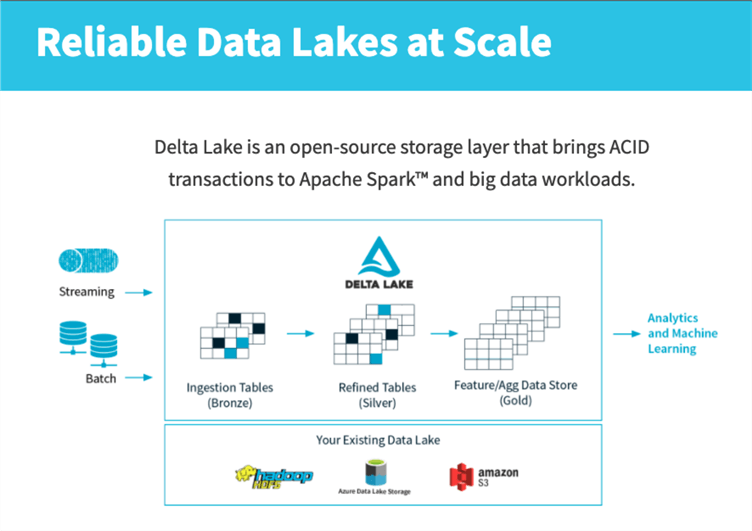

Delta

Delta is an open-source file format that was built specifically to address the limitations of traditional data lake file formats. Under the hood, Delta is composed of Parquet, a columnar format optimized for big data workloads, with added metadata and transaction logs. Delta offers the following key features, which address limitations of file formats such as Parquet and ORC:

- ACID Transactions

- Ability to perform upserts

- Indexing for faster queries

- Unifies streaming and batch workloads without a complex Lambda architecture

- Schema validation and expectations
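The transaction log is what makes features such as ACID transactions and upserts possible on top of immutable Parquet files. The sketch below is a deliberately simplified model, not Delta's actual implementation: each commit is a block of newline-delimited JSON actions, and replaying the commits in order yields the set of data files that make up the table at a given version. The `add` and `remove` action names mirror the Delta log; the file names and the `replay_log` helper are hypothetical.

```python
import json


def replay_log(commits):
    """Return the data files visible after replaying commits in version order."""
    live = set()
    for commit in commits:
        for line in commit.splitlines():
            action = json.loads(line)
            if "add" in action:
                live.add(action["add"]["path"])
            elif "remove" in action:
                live.discard(action["remove"]["path"])
    return live


# Version 0: the initial write adds two Parquet files.
commit_0 = "\n".join([
    json.dumps({"add": {"path": "part-0000.parquet"}}),
    json.dumps({"add": {"path": "part-0001.parquet"}}),
])

# Version 1: an upsert rewrites one file. The old file is removed and its
# replacement added in a single commit, so readers see either all or none of it.
commit_1 = "\n".join([
    json.dumps({"remove": {"path": "part-0001.parquet"}}),
    json.dumps({"add": {"path": "part-0002.parquet"}}),
])

snapshot_v0 = replay_log([commit_0])
snapshot_v1 = replay_log([commit_0, commit_1])
```

Because earlier commits are never modified, replaying only a prefix of the log reconstructs an older version of the table.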

A common misconception is that if you choose to build a 'Delta Lake', all of your data needs to be in the Delta format. This is not true: your raw data can stay in its original format, and if you have other specific file format requirements, you can store whatever file type you would like in the data lake. Delta is a tool to be used in the data lake where it makes sense.

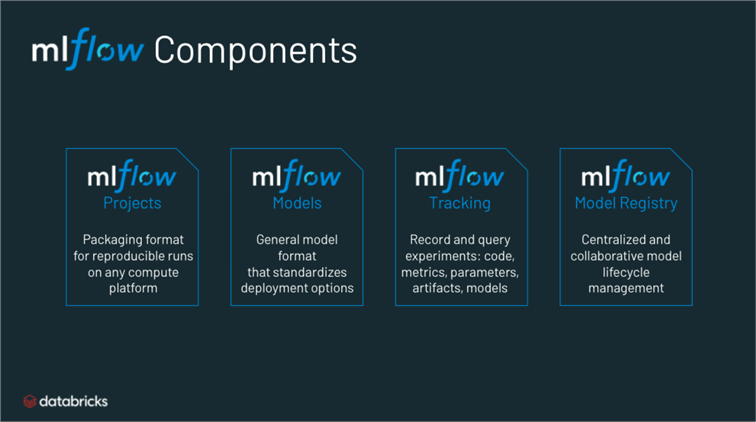

MLflow

MLflow is an open-source machine learning framework that was built to manage the ML lifecycle. A common challenge within data science is that it is hard to get machine learning into production. MLflow addresses this challenge with the following features:

All of the above components are part of the open-source MLflow. On the Databricks platform, you get the following additional benefits:

- Workspaces – Collaboratively track and organize experiments from the Databricks Workspace

- Jobs – Execute runs as a Databricks job remotely or directly from Databricks notebooks

- Big Data Snapshots – Track large-scale data sets that feed models with Databricks Delta snapshots

- Security – Take advantage of one common security model for the entire ML lifecycle

- Serving – Quickly deploy an ML model to a REST endpoint for testing during the development process

Essentially, on Databricks, there is no management of MLflow as a separate tool. Everything is built right into the UI to create a seamless experience.

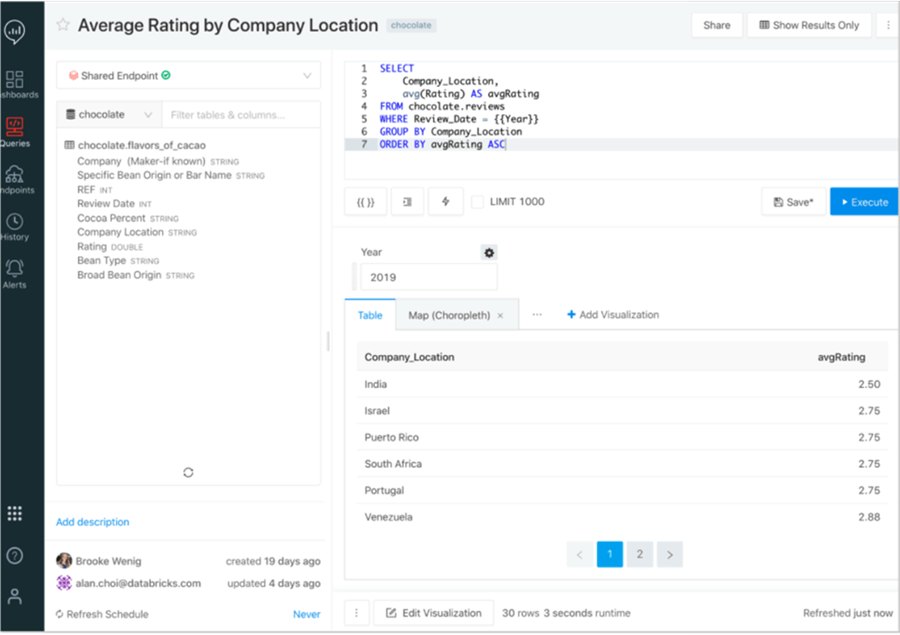

SQL Analytics

SQL Analytics is a new offering that gives the SQL analyst a home within Databricks. By switching views in the traditional Databricks workspace, users reach the SQL Analytics workspace, which offers an experience like that of a traditional SQL workbench. Users can:

- Write SQL queries against the data lake

- Visualize queries in line

- Build dashboards and share them with the business

- Create alerts based on SQL queries

The backend of SQL Analytics is powered by SQL Endpoints, which are Spark clusters optimized for SQL workloads. These endpoints are not limited to the SQL Analytics UI within Databricks: you can also connect to them from BI tools such as Tableau and Power BI and query all of the data in your lake from there.
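The idea of an alert based on a SQL query, listed among the capabilities above, boils down to running a query on a schedule and comparing its result to a threshold. The sketch below shows that logic using Python's built-in `sqlite3` as a stand-in database. It does not use any Databricks API; the table, the query, and the `check_alert` helper are hypothetical.

```python
import sqlite3


def check_alert(conn, query, threshold):
    """Run a query that returns one numeric value; True if it exceeds threshold."""
    (value,) = conn.execute(query).fetchone()
    return value > threshold


# In-memory stand-in for a table in the data lake.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, status TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [(1, "ok"), (2, "failed"), (3, "failed"), (4, "ok")],
)

failed_query = "SELECT COUNT(*) FROM orders WHERE status = 'failed'"
alert_fired = check_alert(conn, failed_query, threshold=1)
```

In SQL Analytics the same pattern is configured through the UI rather than written by hand, with the query running against a SQL Endpoint.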

When to use Databricks

- Modernize your Data Lake – if you are facing challenges around performance and reliability in your data lake, or your data lake has become a data swamp, consider Delta as an option to modernize your Data Lake.

- Production Machine Learning – if your organization is doing data science work but is having trouble getting that work into the hands of business users, the Databricks platform was built to help data scientists get their work from development to production.

- Big Data ETL – from a cost/performance perspective, Databricks is best in its class.

- Opening your Data Lake to BI users – if your analyst / BI group is consistently slowed down because the engineering team has to build a pipeline every time they want to access new data, it might make sense to open the Data Lake to these users through a tool like SQL Analytics within Databricks.

When not to use Databricks

There are a few scenarios when using Databricks is probably not the best fit for your use case:

- Sub-second queries – Spark, being a distributed engine, has processing overhead that makes sub-second queries nearly impossible. Your data can still live in the data lake, but for sub-second queries you will likely want to use a highly tuned speed layer.

- Small data – Similar to the first point, you won't get the majority of the benefits of Databricks if you are dealing with very small data (think GBs).

- Pure BI without a supporting data engineering team – Databricks and SQL Analytics do not erase the need for a data engineering team. In fact, that team is more critical than ever in unlocking the potential of the Data Lake. That said, Databricks offers tools to enable the data engineering team itself.

- Teams requiring drag-and-drop ETL – Databricks has many UI components, but drag-and-drop ETL is not currently one of them.

Next Steps

- Read further about Databricks and its capabilities on other MSSQLTips articles:

About the author

Ryan Kennedy is a Solutions Architect for Databricks, specializing in helping clients build modern data platforms in the cloud that drive business results.

This author pledges the content of this article is based on professional experience and not AI generated.
