By: Koen Verbeeck | Comments | Related: > Azure Data Factory

Problem

Azure Data Factory is a managed serverless data integration service for the Microsoft Azure Data Platform used by data engineers during business intelligence and cloud data related projects. In part 1 of this tutorial series, we introduced you to Azure Data Factory (ADF) by creating a pipeline. We continue by showing you other use cases for which you can use ADF, as well as how you can handle errors and how to use the built-in monitoring.

Solution

It's recommended to read part 1 before you continue with this tip. It shows you how to install ADF and how to create a pipeline that will copy data from Azure Blob Storage to an Azure SQL database as a sample ETL \ ELT process.

Azure Data Factory as an Orchestration Service

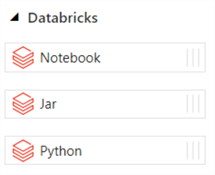

Like SQL Server Integration Services, ADF is responsible for data movement (copy data or datasets) from a source to a destination as a workflow. But it can do so much more. There are a variety of activities that don't do anything in ADF itself, but rather perform some tasks on an external system. For example, there are activities specific for handling Azure Databricks scenarios:

You can for example trigger Azure Databricks Notebooks from ADF. The following tips can get you started on this topic:

- Orchestrating Azure Databricks Notebooks with Azure Data Factory

- Create Azure Data Factory inventory using Databricks

- Getting Started with Delta Lake Using Azure Data Factory

- Snowflake Data Warehouse Loading with Azure Data Factory and Databricks

- Azure Data Factory Mapping Data Flows for Big Data Lake Aggregations and Transformations

ADF has its own form of Azure Databricks integration: Data Flows (previously called Mapping Data Flows) and Power Query flows (shortly called Wrangling Flows), which are both out of scope of this tip, but will be explained in a subsequent tip.

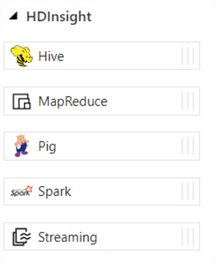

ADF also supports other technologies, such as HDInsight:

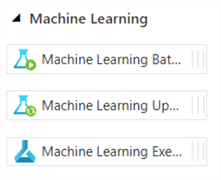

But also Azure Machine Learning:

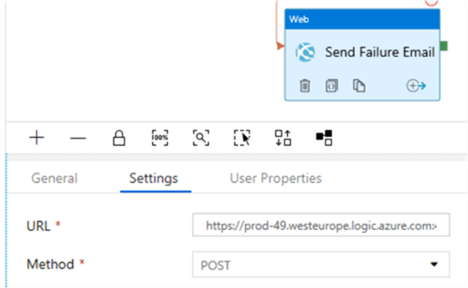

You can call Logic Apps and Azure Functions from Azure Data Factory, which is often necessary because there's still some functionality missing from ADF. For example, you cannot send an email from ADF or ADF cannot easily download a file from SharePoint Online (or OneDrive for Business).

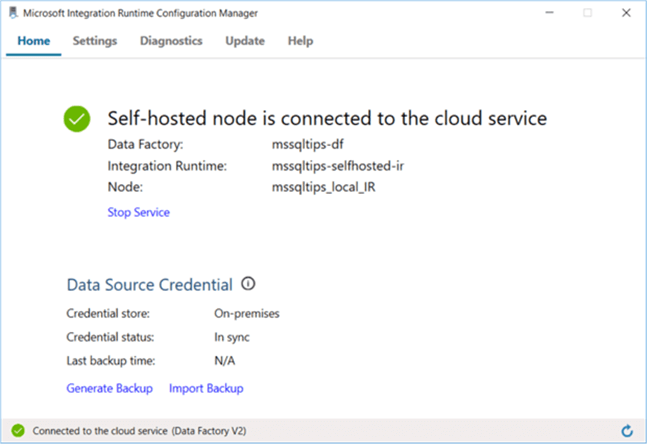

With ADF pipelines, you can create complex data pipelines where you integrate multiple data services with each other. But it's not all cloud. You can also access on-premises data sources when you install the self-hosted integration runtime. This runtime also allows you to shift workloads to on-premises machines should the need arise.

Lastly, you can also integrate existing SSIS solutions into ADF. You can create an Azure-SSIS Integration Runtime, which is basically a cluster of virtual machines that will execute your SSIS packages. The SSIS catalog itself is created in either an Azure SQL DB or an Azure SQL Managed Instance. You can find more info in the following tips:

- Configure an Azure SQL Server Integration Services Integration Runtime

- Executing Integration Services Packages in the Azure-SSIS Integration Runtime

- Customized Setup for the Azure-SSIS Integration Runtime

- SSIS Catalog Maintenance in the Azure Cloud

Scheduling ADF Pipelines

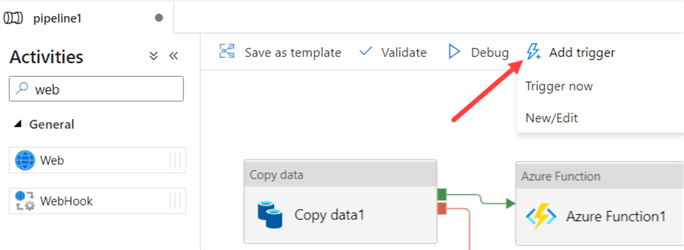

To schedule an ADF pipeline, you add a trigger from within the pipeline itself:

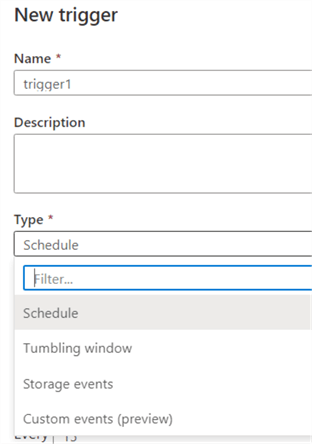

You can either trigger a one-off execution, or you can create/edit a permanent trigger. Currently, there are 4 types:

- Schedule is very similar to what is used in SQL Server Agent jobs. You define a frequency (for example every 10 minutes or once every day at 3AM), a start date and an optional end date.

- Tumbling window is a more specialized form of schedule. With tumbling windows, you have a parameterized data flow. When one window is executed, the start and the end time of the window is passed to the pipeline. The advantage of a tumbling window is that you can execute past periods as well. Suppose you have a tumbling window on the daily level, and the start date is at the start of this month. This will trigger an execution for every day of the month right until the current day. This makes tumbling windows great for doing an initial load where you want each period executed separately. You can find more info about this trigger in the tip Create Tumbling Window Trigger in Azure Data Factory ADF.

- Storage events will trigger a pipeline whenever a blob is created or deleted from a specific blob container.

- Custom events are a new trigger type which are in preview at the time of writing. These allow you to trigger a pipeline based on custom events from Event Grid. You can find more info in the documentation.

Pipelines can also be triggered from an external tool, such as from an Azure Logic App or an Azure Function. ADF has even a REST API available which you can use, but you could also use PowerShell, the Azure CLI, .NET or even Python.

Error Handling and Monitoring

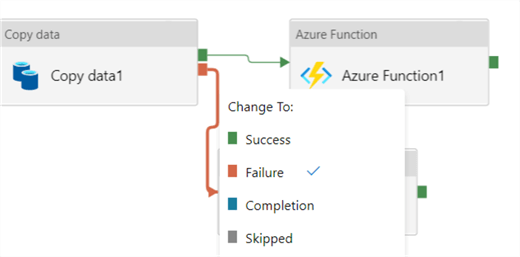

Like in SSIS, you can configure constraints on the execution paths between two activities:

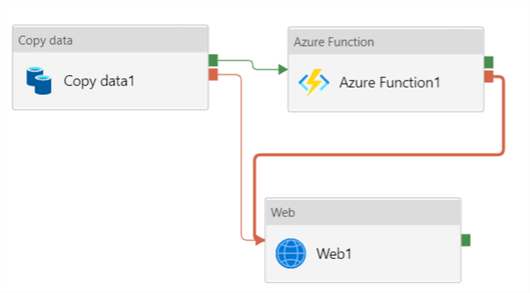

This allows you to create a more robust pipeline that can handle multiple scenarios. Keep in mind though ADF doesn't have an "OR constraint" like in SSIS. Let's illustrate why that matters. In the following scenario, the Web Activity will never be executed:

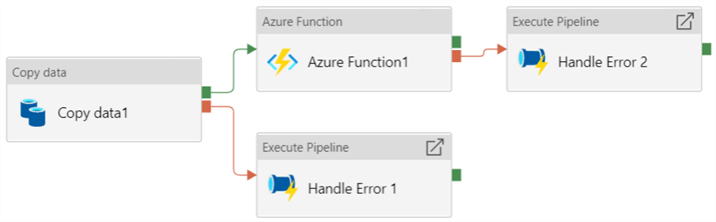

For the Web Activity to be executed, the Copy Activity must fail AND the Azure Function must fail. However, the Azure Function will only start if the Copy Data activity has finished successfully. If you want to re-use some error handling functionality, you can create a separate pipeline and call this pipeline from every activity in the main pipeline:

To capture and log any errors, you can create a stored procedure to log them into a table, as demonstrated in the tip Azure Data Factory Pipeline Logging Error Details.

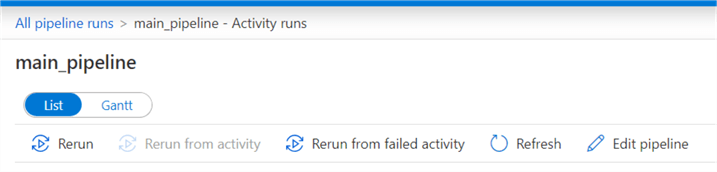

In the ADF environment, you can monitor ongoing and past pipeline runs.

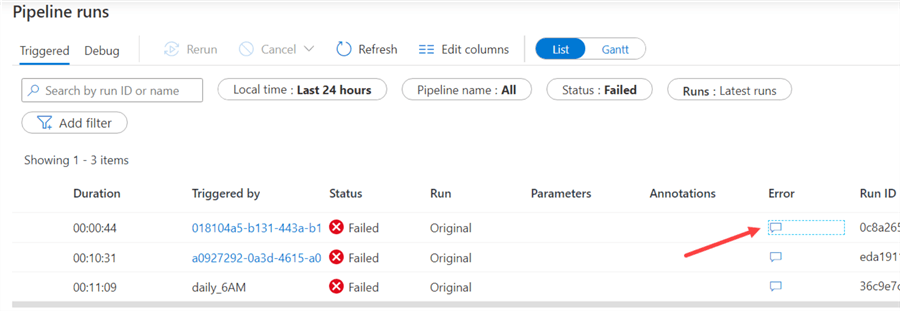

There, you can view all pipeline runs. There are pre-defined filters you can use, such as date, pipeline names and status.

You can view the error if a pipeline has failed, but you can also go into the specific run and restart an activity if needed.

For more advanced alerting and monitoring, you can use Azure Monitor.

Next Steps

-

Check out these additional Azure resources:

- Azure Data Factory Overview

- Azure Data Factory vs SSIS vs Azure Databricks

- Create Azure Data Lake Linked Service Using Azure Data Factory

- Fast Way to Load Data into Azure Data Lake using Azure Data Factory

- Deploy Azure Data Factory CI/CD Changes with Azure DevOps

- Load Data Lake files into Azure Synapse Analytics Using Azure Data Factory

- For more tips about ADF, check out this overview.

About the author

Koen Verbeeck is a seasoned business intelligence consultant at AE. He has over a decade of experience with the Microsoft Data Platform in numerous industries. He holds several certifications and is a prolific writer contributing content about SSIS, ADF, SSAS, SSRS, MDS, Power BI, Snowflake and Azure services. He has spoken at PASS, SQLBits, dataMinds Connect and delivers webinars on MSSQLTips.com. Koen has been awarded the Microsoft MVP data platform award for many years.

Koen Verbeeck is a seasoned business intelligence consultant at AE. He has over a decade of experience with the Microsoft Data Platform in numerous industries. He holds several certifications and is a prolific writer contributing content about SSIS, ADF, SSAS, SSRS, MDS, Power BI, Snowflake and Azure services. He has spoken at PASS, SQLBits, dataMinds Connect and delivers webinars on MSSQLTips.com. Koen has been awarded the Microsoft MVP data platform award for many years.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips