By: Koen Verbeeck | Comments (13) | Related: 1 | 2 | > Azure Data Factory

Problem

When you run a pipeline in Azure Data Factory, you typically want to notify someone if the load was successful or not. Especially if there are errors, you want people to take action. However, there is no send email activity in Azure Data Factory. In part 1 of this tip, we created a Logic App in Azure that sends an email using parameterized input. In this part 2, we will integrate this Logic App into an Azure Data Factory (ADF) pipeline.

Solution

If you haven't already, please read part 1 of this tip to learn more on how to set up an Azure Logic App that sends an email every time an HTTP request is received. To summarize, the Logic App expects the following JSON input:

{

"properties": {

"DataFactoryName": {

"type": "string"

},

"EmailTo": {

"type": "string"

},

"ErrorMessage": {

"type": "string"

},

"PipelineName": {

"type": "string"

},

"Subject": {

"type": "string"

}

},

"type": "object"

}

Using this JSON body, we can customize the message – and thus the email - we want to send from ADF to the Logic App.

Triggering the Logic App from ADF

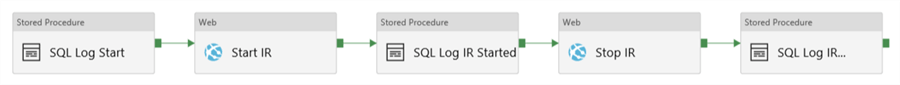

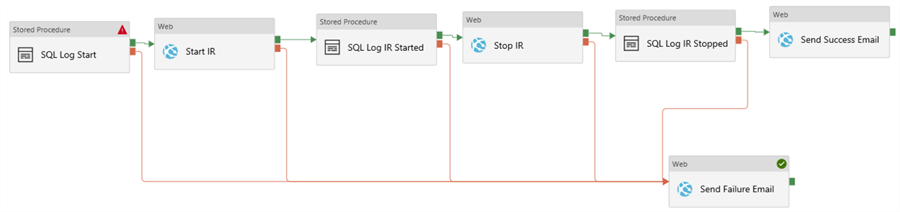

Suppose you have already created a pipeline with some activities in ADF.

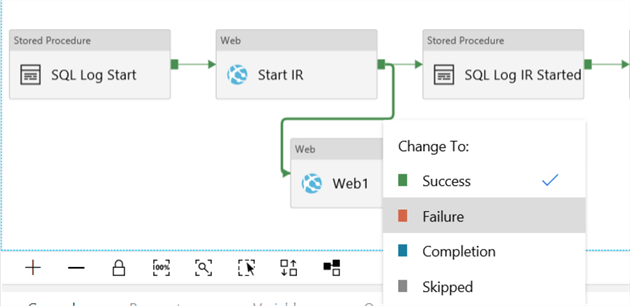

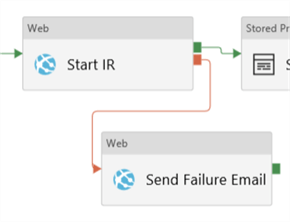

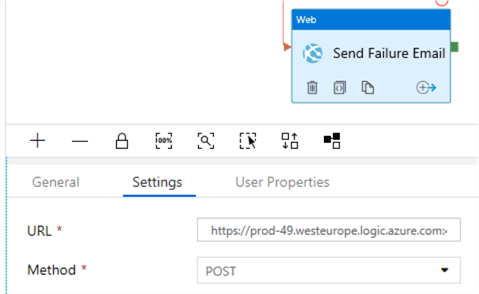

For a short introduction to ADF, please refer to the tip Azure Data Factory Overview. Now we want to send an email if an error occurs within an activity. Add a web activity to the canvas and connect another activity to this new activity using the arrow. When the connection has been made, right-click on the connection to change it to a Failure precedence constraint.

This will change the color of the connector to red.

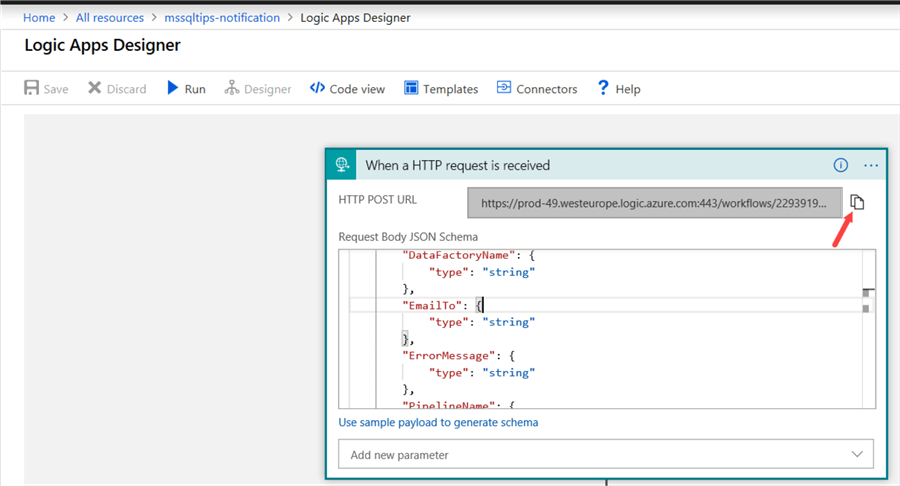

Now we need to go the Azure Logic App and copy the HTTP POST URL:

Paste this URL into the URL field in the settings pane of the Web activity. The method needs to be set to POST.

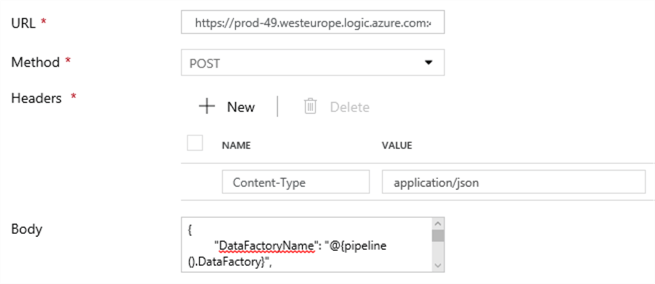

We also need to add a header, where we will set the Content-Type to application/json. In the body, we enter the following JSON (following the structure mentioned before):

{

"DataFactoryName": "@{pipeline().DataFactory}",

"PipelineName": "@{pipeline().Pipeline}",

"Subject": "An error has occurred!",

"ErrorMessage": "The ADF pipeline has crashed! Please check the logs.",

"EmailTo": "[email protected]"

}

We're using system parameters to retrieve the name of the data factory and the name of the pipeline. All the other fields in the settings pane can be left as-is.

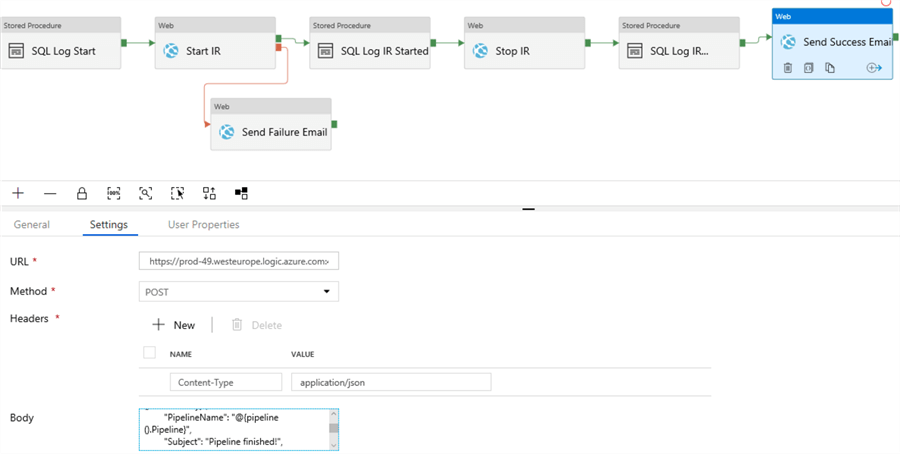

At the end of the pipeline, we can add a similar Web activity to send an email notifying users that the pipeline has finished successfully. This time we use the following body:

{

"DataFactoryName": "@{pipeline().DataFactory}",

"PipelineName": "@{pipeline().Pipeline}",

"Subject": "Pipeline finished!",

"ErrorMessage": "Everything is okey-dokey!",

"EmailTo": "[email protected]"

}

Now we can run the pipeline and wait to see if any emails come in:

Configuring Multiple Notifications

Suppose we have more than one single activity for which we want to send a notification if something goes wrong. The following won't work in ADF:

The reason it doesn't work is because all those failure constraints (the red arrows) are linked together using an AND constraint. Which means the "Send Failure Email" activity will only be executed if (and only if) ALL activities fail. This can't happen, since the second activity will only start if the first one has completed successfully. In ADF, we cannot configure those constraints to be linked by an OR constraint (like we can in an Integration Services package), which would mean the email will be sent once one of the activities fails. You can find an example of the desired behavior in the SSIS tutorial: Execute SQL Task in SQL Server Integration Services SSIS.

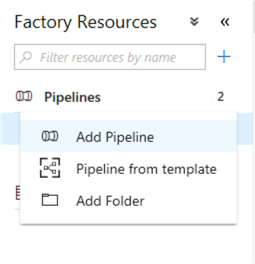

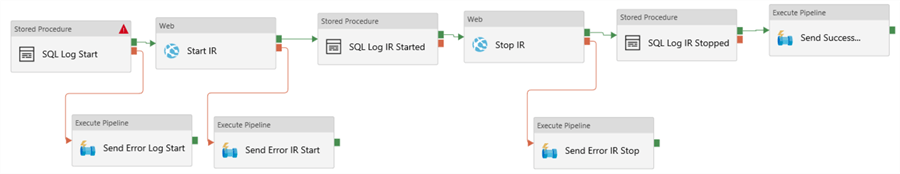

So how can we solve this issue in ADF? It's considered a best practice that if you have re-usable code, you put it in its own pipeline. This means we'll create one single pipeline with the Web Activity. Then we'll call this pipeline from the main pipeline every time we want to send a notification and we pass along the desired parameters to construct the JSON body. Let's start by creating the new pipeline:

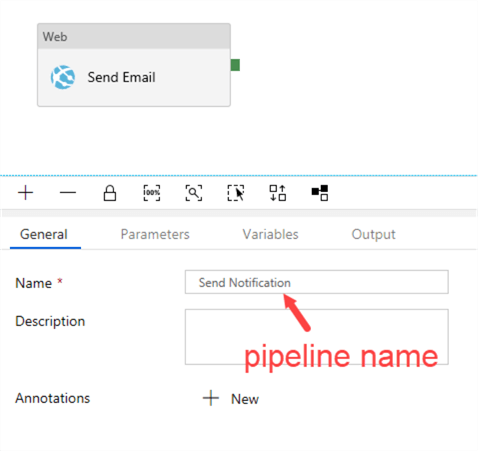

Let's copy paste the Web Activity from the main pipeline into this new pipeline.

Notice that the activity is now "neutral": it doesn't know if it's going to send a failure or success email. We have to adapt the body so the activity can use parameters, which we'll define later:

{

"DataFactoryName": "@{pipeline().DataFactory}",

"PipelineName": "@{pipeline().Pipeline}",

"Subject": "@{pipeline().parameters.Subject}",

"ErrorMessage": "@{pipeline().parameters.ErrorMessage}",

"EmailTo": "@pipeline().parameters.EmailTo"

}

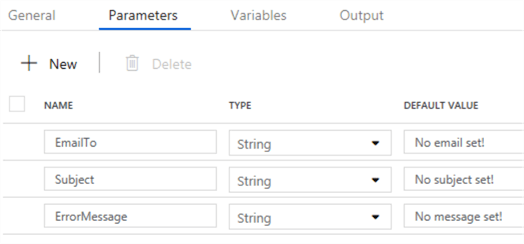

We're going to use three parameters: Subject, ErrorMessage and EmailTo. We can reference this variables in the body by using the following format: @pipeline().parameters.parametername.

Click on the pipeline canvas and then go to the Parameters pane of the pipeline. Here we can add our three parameters:

As default values, I'm specifying strings that are obvious not useful values. This way, if something goes wrong with the parameter mapping, it's easy to detect if a parameter value was used or the default value. The notification pipeline is now finished. The next step is to modify the main pipeline. All the web activities will be replaced with an Execute Pipeline Activity.

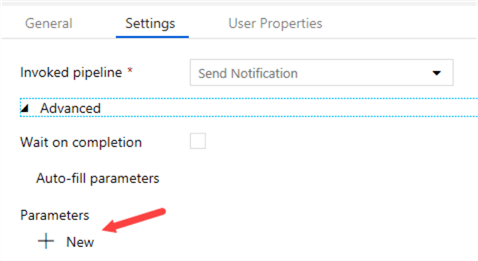

In each Execute Pipeline Activity, we define our three parameter values which we're going to pass to the notification pipeline. In the Settings pane, choose the correct pipeline and click on New to add new parameters (you have to click open the Advanced section to see this):

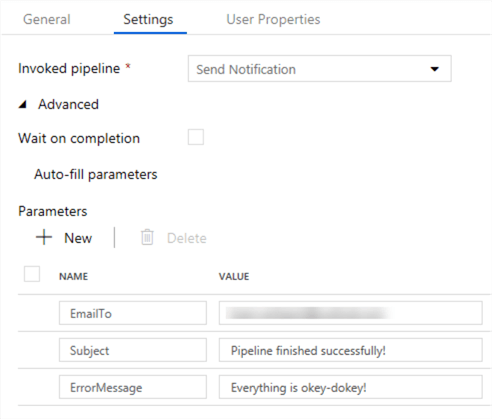

Here you can add the three parameters for the notification pipeline:

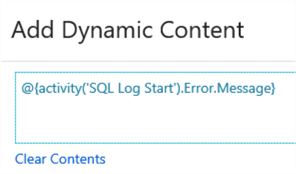

Notice it is possible to have a more useful ErrorMessage if you use dynamic contents. For example, for the notification of the first stored procedure activity, we can do the following:

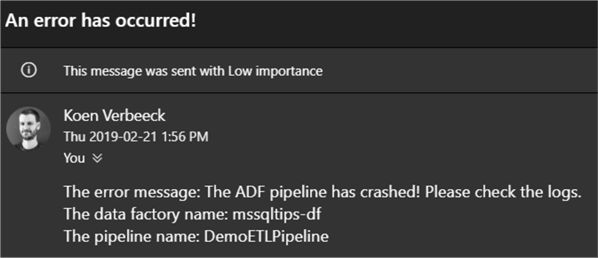

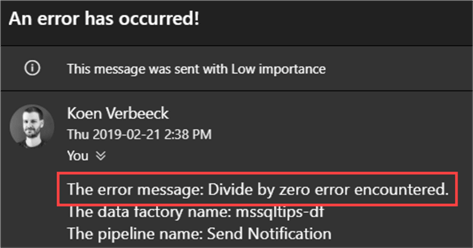

This will retrieve the actual error message from the activity, instead of using a hardcoded message. According to the documentation, this shouldn't be possible, but it still works. If we run the pipeline and the stored procedure activity fails, we get an email with the error message:

The format of the dynamic content can differ per activity (and some activities don't let you access the error details), so please refer to the documentation for more info.

Conclusion

In this two-part tip, we saw how we can leverage Azure Logic Apps to send email notifications in Azure Data Factory. Using parameters and dynamic content, it's possible to pass along actual error messages for certain activities.

Next Steps

- If you haven't already, read part 1 to learn how to create the logic app to send an email.

- More tips about Logic Apps:

- You can find more Azure tips in this overview.

About the author

Koen Verbeeck is a seasoned business intelligence consultant at AE. He has over a decade of experience with the Microsoft Data Platform in numerous industries. He holds several certifications and is a prolific writer contributing content about SSIS, ADF, SSAS, SSRS, MDS, Power BI, Snowflake and Azure services. He has spoken at PASS, SQLBits, dataMinds Connect and delivers webinars on MSSQLTips.com. Koen has been awarded the Microsoft MVP data platform award for many years.

Koen Verbeeck is a seasoned business intelligence consultant at AE. He has over a decade of experience with the Microsoft Data Platform in numerous industries. He holds several certifications and is a prolific writer contributing content about SSIS, ADF, SSAS, SSRS, MDS, Power BI, Snowflake and Azure services. He has spoken at PASS, SQLBits, dataMinds Connect and delivers webinars on MSSQLTips.com. Koen has been awarded the Microsoft MVP data platform award for many years.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips