By: Hristo Hristov | Updated: 2023-12-12 | Comments | Related: > Python

Problem

In the life of the data professional, there comes a day when you may need to open, edit, or analyze an excessively large dataset. In a previous tip, we examined a similar case when the scenario exported lots of data. What if you were handed a huge data file and needed to check it out quickly and efficiently?

Solution

One solution to consider is DuckDB. DuckDB is a free, open-source database management system intended for analytical query workloads. It supports SQL and is designed to run in-process and in-memory. This fact makes it very fast compared to traditional DBMS. Additionally, DuckDB does not require external dependencies during compile time or runtime. Installing, running, or supporting a DMBS server is unnecessary. In short, DuckDB can be your embedded best friend if you need to examine a massive dataset.

Installing DuckDB

There are many options to install and run DuckDB. For example, there is a Windows or Linux package. R, Java, Rust, and Go are also supported. For this tip, we will get started with DuckDB under Python.

Environment

As usual in the series, I have a working folder open in VS Code. If you are tuning in now, check out this tip for setting up your environment. Activate your environment and install the package.

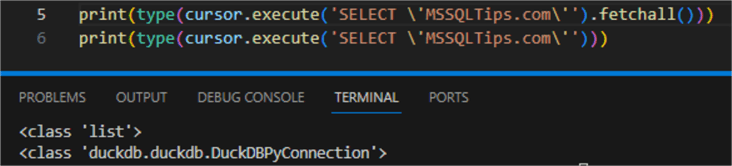

If we have the DuckDB package running, we can perform a quick validation by creating and running the following Python script file in our working directory:

import duckdb as d

cursor = d.connect()

print(cursor.execute('SELECT \'MSSQLTips.com\'').fetchall())

Calling the connect()

method without arguments returns a connection using an in-memory database. The result

itself is a list, while the "cursor" is a DuckDB connection type:

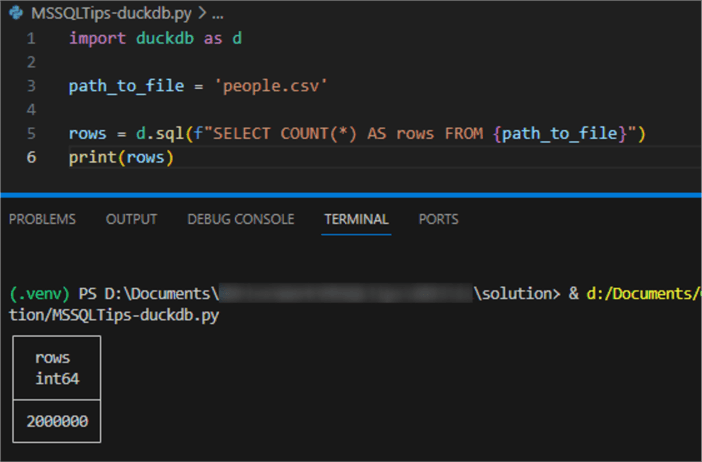

Data Ingestion

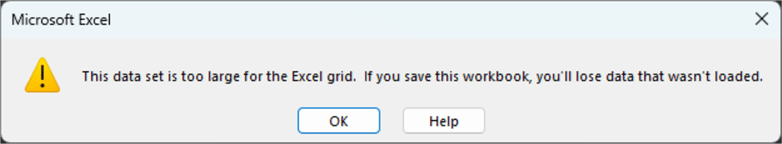

DuckDB supports reading many data sources, such as CSV, parquet, JSON, Excel, SQLite, and PostgreSQL. Let's look closer at how to handle a massive CSV file, for example. The file is more than 200 MB and contains 2 million rows of data. Larger CSV files cannot be efficiently examined or analyzed with Excel. Such files also cannot be edited without incurring a data loss.

Sample Data

The sample data file is courtesy of Datablist. Trying to open the file directly produces this warning:

Proceeding against the warning by clicking OK will open the file. However, we will see no further than the 1,048,576th row, which is the hard limit for CSV files:

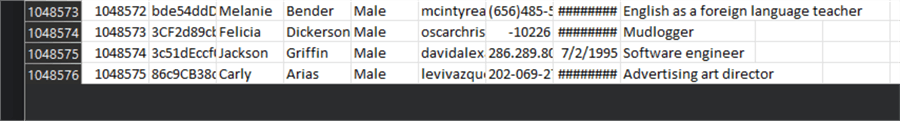

Read CSV

Let's proceed with reading and determining exactly how many rows are in our CSV file. DuckDB enables this process with the Python API. We can query the file directly:

path_to_file = 'people.csv'

rows = d.sql(f"SELECT COUNT(*) AS rows FROM {path_to_file}")

print(rows)

The result is 2 million rows:

Analyzing the Data

From this point on, we can use familiar SQL statements under the PostgreSQL dialect to query the CSV file directly through the Python API as if we had it on an SQL Server database:

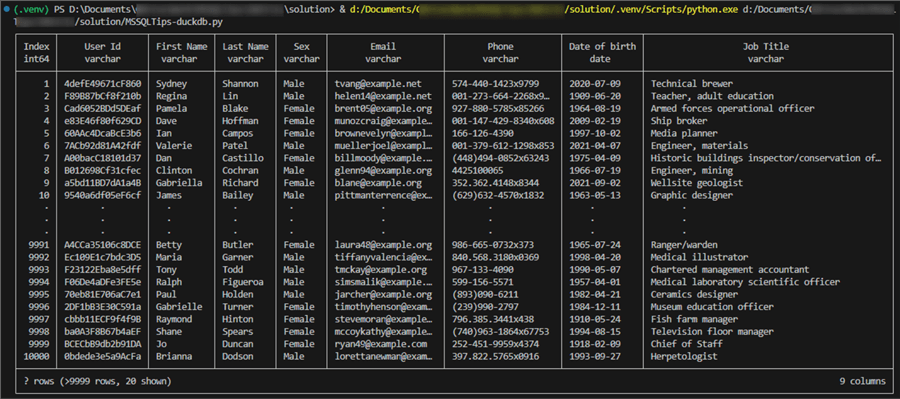

rows = d.sql(f"SELECT * FROM {path_to_file}")

print(rows)

The result appears on the Python terminal as standard output:

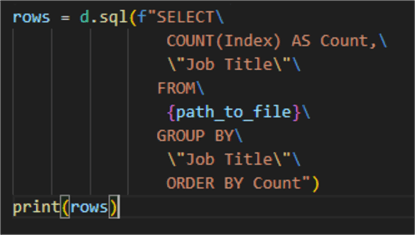

Before moving on, let's also quickly see how many professions we have and their count:

rows = d.sql(f"SELECT COUNT(Index) AS Count, \"Job Title\" FROM {path_to_file} GROUP BY \"Job Title\" ORDER BY Count")

print(rows)

As a result, we see how many professions we have and how many people there are from each profession:

Note: The column "Job Title" contains a space. Therefore, its name must be encompassed with double quotes, which need to be escaped in the Python query string by using a backslash ( \ ).

So far, we're able to get here by using only a few lines of code and without setting up a database server. What is another scenario that DuckDB enables for our CSV file?

Persisting Data

We know that CSV files can be tricky to handle. While handy for quick data exchange or browsing, they come in many different dialects, with many potential encodings and varying delimiters. As a result, the schema of the CSV file may be forced to change from system to system when trying to read the file out.

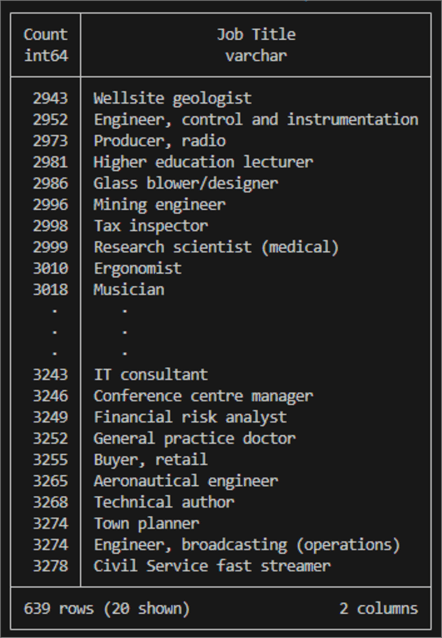

Let's import our CSV data to a DuckDB database to alleviate this situation. We could then store it on disk and send it to others. As a database, running one and the same query will always produce consistent results. For example:

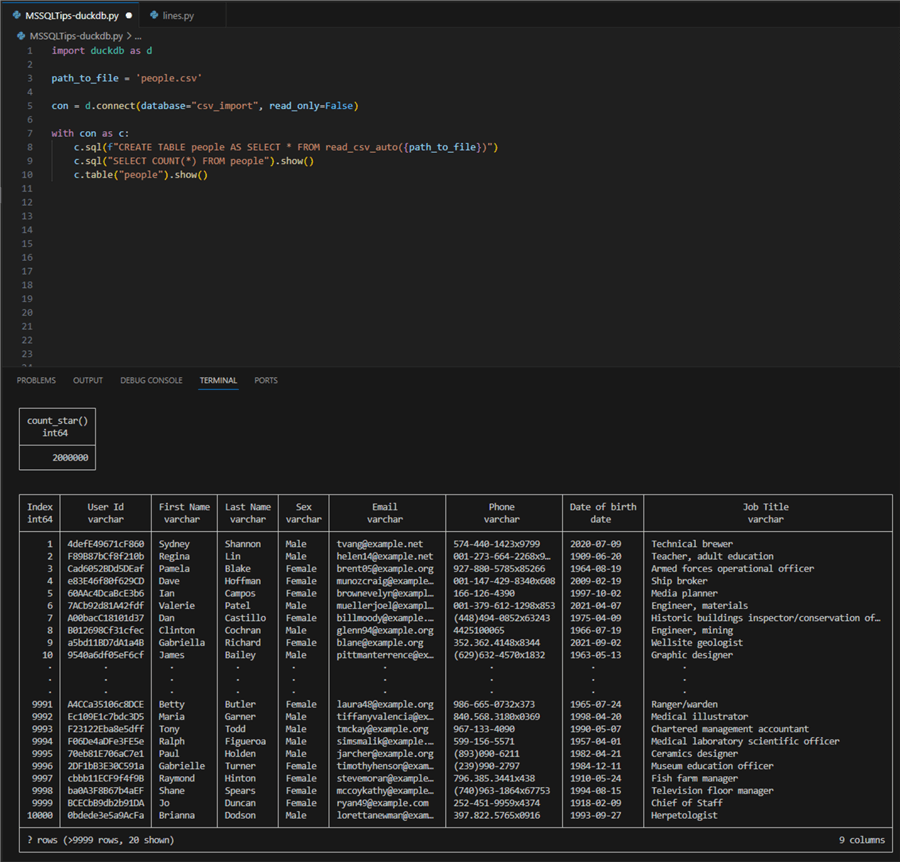

01: import duckdb as d

02:

03: path_to_file = 'people.csv'

04:

05: con = d.connect(database="csv_import", read_only=False)

06:

07: with con as c:

08: c.sql(f"CREATE TABLE people AS SELECT * FROM read_csv_auto({path_to_file})")

09: c.sql("SELECT COUNT(*) FROM people").show()

10: c.table("people").show()

Let's break it down:

- 01: import the DuckDB module we installed at the start.

- 03: declare the path to the CSV file.

- 05: instantiate a DuckDB connection.

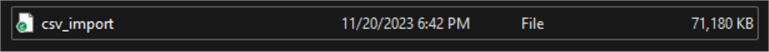

This statement will create a database file with the name "csv-import".

The connection will not be shared between processes; therefore,

read_onlyis set toFalse. This is what the database file looks like. This file is completely portable and has no dependencies:

- 07: by the force of the with-statement, we have a context manager that lets us use our database connection neatly.

- 08: using the connection to the newly created database, we can call the

sqlfunction and pass the SQL query we need. The key here is theread_csv_autofunction. When used with no optional parameters, it will try to determine the delimiter and data types based on the first 20480 rows from the file. If needed, these options can be overridden:- For custom delimiter, use

delim

- For custom type detection, use

types, which takes a list of data types.

- For custom delimiter, use

For example:

read_csv_auto('file.csv', delim='|'), types=[INT, VARCHAR, DATE])

- 09: to validate the import, we count the rows. By default, the SQL function

returns a DuckDB relation object. We can use the

show()function to see the results immediately. Alternatively, as shown earlier in this article, we can usefetchall()to convert the result to a Python list. - 10: additionally, we can query the table directly.

Finally, the context manager will close the connection automatically. The result is:

This is how we created a DuckDB database, imported the CSV data to a table, and queried that table.

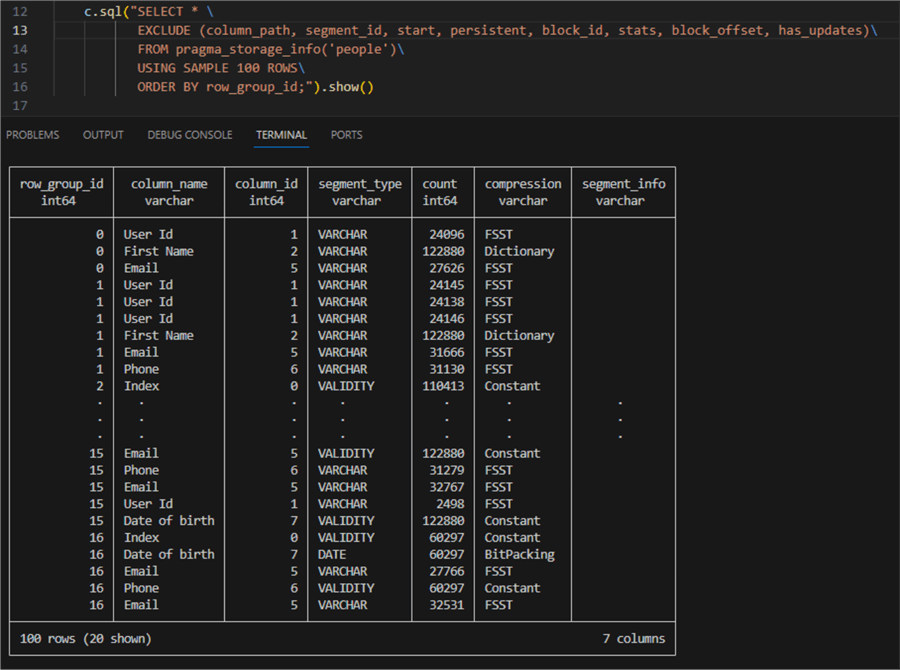

A Word on Compression

Note the size on the disk of the database file csv_import - about 71 MB or almost three times less than the original CSV source file. This is a tremendous savings of disk space. DuckDB uses specialized compression algorithms that enable this process. The rows of data are divided into chunks of 120,000 rows stored in "column segments." The data in these segments is analyzed, then the DuckDB engine determines the most suitable compression algorithm. Finally, the compression is applied, and the data is written to disk. We can use the following SQL command to find out which compression algorithm was applied to the source columns:

c.sql("SELECT * EXCLUDE (column_path, segment_id, start, persistent, block_id, stats, block_offset, has_updates) FROM pragma_storage_info('people') USING SAMPLE 10 ROWS ORDER BY row_group_id;").show()

The result is:

For the various VARCHAR columns, the DuckDB engine chooses Fast Static Symbol Table (FSST), Dictionary, or Constant compression. On the other hand, the date column "Date of birth" uses either Constant compression (for when there are a lot of overlapping dates) or BitPacking (for different values that have a finite lower and upper bound).

Conclusion

In this article, we examined the DuckDB Python API. We managed to examine a CSV file, count its rows, and perform a group by operation that otherwise would be more difficult. Additionally, we could import the data from the CSV file to a persisted disk storage, the DuckDB database. Finally, we examined how the data was compressed.

Next Steps

About the author

Hristo Hristov is a Data Scientist and Power Platform engineer with more than 12 years of experience. Between 2009 and 2016 he was a web engineering consultant working on projects for local and international clients. Since 2017, he has been working for Atlas Copco Airpower in Flanders, Belgium where he has tackled successfully multiple end-to-end digital transformation challenges. His focus is delivering advanced solutions in the analytics domain with predominantly Azure cloud technologies and Python. Hristo's real passion is predictive analytics and statistical analysis. He holds a masters degree in Data Science and multiple Microsoft certifications covering SQL Server, Power BI, Azure Data Factory and related technologies.

Hristo Hristov is a Data Scientist and Power Platform engineer with more than 12 years of experience. Between 2009 and 2016 he was a web engineering consultant working on projects for local and international clients. Since 2017, he has been working for Atlas Copco Airpower in Flanders, Belgium where he has tackled successfully multiple end-to-end digital transformation challenges. His focus is delivering advanced solutions in the analytics domain with predominantly Azure cloud technologies and Python. Hristo's real passion is predictive analytics and statistical analysis. He holds a masters degree in Data Science and multiple Microsoft certifications covering SQL Server, Power BI, Azure Data Factory and related technologies.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2023-12-12