By: Aaron Bertrand | Updated: 2022-06-21 | Comments (1) | Related: > Partitioning

Problem

Not too long ago, I came across a table that had 15,000 partitions—all but 4 of them empty. I bet when you have implemented partitioning you, too, have wondered: "Why shouldn't I create all future partitions now?"

The question is valid: wouldn't maintenance be easier if you only had to phase out old partitions, without ever worrying about adding partitions to accommodate new data?

Here's the thing. Microsoft will never tell you this, but empty partitions are not free. I don't mean you will get an invoice for creating too many, but you will pay for them in other ways.

Solution

Before we start, let's assume you're implementing partitioning for the right reasons, and not just because you've heard partitions make queries faster. See this video from Kendra Little if you're unsure, as this is a common misconception. Now, let's assume you have volumes like SAP or something like it, and you want one partition per day. The whole reason Microsoft increased the upper bound of partitions to 15,000 - at least as far as I can tell - was to handle 40+ years of daily partitions. Roughly speaking, this requires about 14,610 partitions (40*365.25), without accounting for the leap day that's lost during specific turns-of-the-century.

Example

With those figures in mind, it's certainly plausible that one could want to add 15K partitions, allowing them to retire without having to add a single partition to this table. Let's create four tables with gradually increasing numbers of partitions so we can compare the range from bare minimum, to reasonable, to well prepared, to borderline criminal:

- table with just the partitions you need (year to date + next month)

- table with ~365 extra partitions (year to date + one year out)

- table with ~3,660 extra partitions (year to date + 10 years out)

- table with ~14,610 extra partitions (year to date + 40 years out)

First, let's create a database with the following syntax:

USE master;

GO DROP DATABASE IF EXISTS PartitionTesting;

GO CREATE DATABASE PartitionTesting;

GO ALTER DATABASE PartitionTesting SET RECOVERY SIMPLE;

GO ALTER DATABASE PartitionTesting SET TARGET_RECOVERY_TIME = 60 SECONDS;

GO ALTER DATABASE PartitionTesting SET QUERY_STORE

(

OPERATION_MODE = READ_WRITE,

MAX_STORAGE_SIZE_MB = 2048,

QUERY_CAPTURE_MODE = ALL /* NOTE: in production use AUTO */

);

GO USE PartitionTesting;

GO

Now, we'll need to generate some dynamic SQL in order to generate the schemas, tables, partition functions, and partition schemes we're going to test with.

It's a fantastic reference in any case, but I always have to check

Cathrine Wilhelmsen's partitioning series to remind myself about

RANGE LEFT / RANGE RIGHT. In this case (and for virtually

all scenarios where you're partitioning by date/time), we want

RANGE RIGHT, which means the boundary value belongs

with the values immediately higher. For example, if I want to partition

by month, I want boundaries of 20220101,

20220201, and so on. Any values that fall exactly

at midnight on the 1st of the month belong to the partition "to that value's

right."

We'll insert the exact same data into each table (three rows per day from the beginning of the year until today, and no rows in the future). We want to end up with something like this syntax:

CREATE PARTITION FUNCTION pfBareMinimum(datetime) AS RANGE RIGHT FOR VALUES(<a few dates>); CREATE PARTITION FUNCTION pfReasonable(datetime) AS RANGE RIGHT FOR VALUES(<more dates>); CREATE PARTITION FUNCTION pfVeryPrepared(datetime) AS RANGE RIGHT FOR VALUES(<even more dates>); CREATE PARTITION FUNCTION pfLudicrous(datetime) AS RANGE RIGHT FOR VALUES(<all the dates>); CREATE PARTITION SCHEME psBareMinimum AS PARTITION pfBareMinimum ALL TO ([PRIMARY]); CREATE PARTITION SCHEME psReasonable AS PARTITION pfReasonable ALL TO ([PRIMARY]); CREATE PARTITION SCHEME psVeryPrepared AS PARTITION pfVeryPrepared ALL TO ([PRIMARY]); CREATE PARTITION SCHEME psLudicrous AS PARTITION pfLudicrous ALL TO ([PRIMARY]); EXEC sys.sp_executesql N'CREATE SCHEMA BareMinimum AUTHORIZATION dbo;'; EXEC sys.sp_executesql N'CREATE SCHEMA Reasonable AUTHORIZATION dbo;'; EXEC sys.sp_executesql N'CREATE SCHEMA VeryPrepared AUTHORIZATION dbo;'; EXEC sys.sp_executesql N'CREATE SCHEMA Ludicrous AUTHORIZATION dbo;'; CREATE TABLE BareMinimum.BareMinimum (LogDate datetime) ON psBareMinimum (LogDate); CREATE TABLE Reasonable.Reasonable (LogDate datetime) ON psReasonable (LogDate); CREATE TABLE VeryPrepared.VeryPrepared(LogDate datetime) ON psVeryPrepared(LogDate); CREATE TABLE Ludicrous.Ludicrous (LogDate datetime) ON psLudicrous (LogDate); INSERT BareMinimum.BareMinimum (LogDate) VALUES(<an equal number of dates>); INSERT Reasonable.Reasonable (LogDate) VALUES(<an equal number of dates>); INSERT VeryPrepared.VeryPrepared(LogDate) VALUES(<an equal number of dates>); INSERT Ludicrous.Ludicrous (LogDate) VALUES(<an equal number of dates>);

I certainly didn't type that SQL query all out by hand; I prefer a different sort of pain. It seems like a coin flip now, but if you needed to test 100 different partition layouts, or different data types, or different amounts of data, generating dynamic SQL will be the less painful approach.

DECLARE

@today date = GETDATE(),

@start date = DATEFROMPARTS(YEAR(GETDATE()),1,1),

@end_next_month date = EOMONTH(GETDATE(), 1),

@q char(1) = char(39),

@crlf char(2) = char(13) + char(10),

@pf nvarchar(255) = N'CREATE PARTITION FUNCTION pf',

@rr nvarchar(255) = N'(datetime) AS RANGE RIGHT FOR VALUES(',

@partSchemes nvarchar(max) = N'',

@partFunctions nvarchar(max) = N'',

@vschemas nvarchar(max) = N'',

@vtables nvarchar(max) = N'',

@dates nvarchar(max) = N'',

@inserts nvarchar(max) = N'',

@sql nvarchar(max); /* table names + date ranges for the partition functions */ DECLARE @p table(p nvarchar(128), s datetime, e datetime); INSERT @p(p,s,e) VALUES

(N'BareMinimum', @start, @end_next_month),

(N'Reasonable', @start, DATEADD(YEAR, 1, @end_next_month)),

(N'VeryPrepared', @start, DATEADD(YEAR, 10, @end_next_month)),

(N'Ludicrous', @start, DATEADD(YEAR, 40, @end_next_month)); /* generate schema, partition scheme, and table commands */ SELECT @vschemas = STRING_AGG(CONCAT(N'EXEC sys.sp_executesql

N''CREATE SCHEMA ',p,' AUTHORIZATION dbo;'';'),

@crlf), @partSchemes = STRING_AGG(CONCAT(N'CREATE PARTITION SCHEME ps',

p,N' AS PARTITION pf',

p,N' ALL TO ([PRIMARY]);'),

@crlf), @vtables = STRING_AGG(CONCAT(N'CREATE TABLE ',p,'.',

p,'(LogDate datetime, INDEX cx CLUSTERED(LogDate)) ON ps',

p,'(LogDate);'),

@crlf)

FROM @p; /* generate partition function commands with explicit dates */ ;WITH x AS

(

SELECT p, s, e FROM @p UNION ALL

SELECT p, DATEADD(DAY, 1, s), e FROM x WHERE s < e

)

SELECT

@partFunctions += CONCAT(@crlf, @pf, p, @rr,

STRING_AGG(CONVERT(nvarchar(max),

CONCAT(@q, CONVERT(char(8), s, 112), @q)),

',') WITHIN GROUP (ORDER BY s), ');')

FROM x GROUP BY p ORDER BY p OPTION (MAXRECURSION 0); /* generate 4 insert commands with identical values */ ;WITH d(d) AS

(

SELECT CONVERT(datetime, @today) UNION ALL

SELECT DATEADD(DAY, -1, d) FROM d

WHERE d > @start

),

t(s,p) AS

(

SELECT DATEADD(HOUR, h, d), p FROM d

CROSS JOIN (VALUES(6),(12),(18)) AS h(h)

CROSS JOIN @p AS p

)

SELECT @inserts += CONCAT(N'INSERT ',p,N'.',p,N'(LogDate) VALUES(',

STRING_AGG(CONVERT(nvarchar(max),

QUOTENAME(CONVERT(varchar(23), s, 126), @q)), '),(')

WITHIN GROUP (ORDER BY s), ');') FROM t

GROUP BY p OPTION (MAXRECURSION 366); SET @sql = CONCAT_WS(@crlf,

@partFunctions, @partSchemes,

@vschemas, @vtables, @inserts

); EXEC sys.sp_executesql @sql;

You can see most of the SQL this script generates in this db<>fiddle and, if you execute it on your own system, you'll see that it does what it advertises. On my system it takes all of about four seconds; your mileage may vary.

Now what?

Creating all those partitions and putting a little bit of data into the tables is hardly the point. What I wanted to illustrate here is that other things slow down just as a side effect of more partitions being present, even if most partitions are empty.

A previous version of the documentation explicitly recommended staying at or below 64 active partitions. What kinds of things were they protecting against? In this case, it seems they are just helping you optimize parallelism:

To maximize performance with parallel operations, we recommend that you use the same number of partitions as processor cores, up to a maximum of 64 (which is the maximum number of parallel processors that SQL Server can utilize).

This isn't really the focus of my concern either; I had some other things

in mind to test. For example, Cathrine lists a metadata query to poll information

about partitions. I modified this query to only gather data about our

BareMinimum table, since it has the fewest number

of partitions:

SELECT

OBJECT_SCHEMA_NAME(pstats.object_id) AS SchemaName

,OBJECT_NAME(pstats.object_id) AS TableName

,ps.name AS PartitionSchemeName

,ds.name AS PartitionFilegroupName

,pf.name AS PartitionFunctionName

,CASE pf.boundary_value_on_right WHEN 0 THEN 'Range Left'

ELSE 'Range Right' END AS PartitionFunctionRange

,CASE pf.boundary_value_on_right WHEN 0 THEN 'Upper Boundary'

ELSE 'Lower Boundary' END AS PartitionBoundary

,prv.value AS PartitionBoundaryValue

,c.name AS PartitionKey

,CASE

WHEN pf.boundary_value_on_right = 0

THEN c.name + ' > ' + CAST(ISNULL(LAG(prv.value)

OVER(PARTITION BY pstats.object_id

ORDER BY pstats.object_id, pstats.partition_number),

'Infinity') AS VARCHAR(100)) + ' and ' + c.name

+ ' <= ' + CAST(ISNULL(prv.value, 'Infinity') AS VARCHAR(100))

ELSE c.name + ' >= ' + CAST(ISNULL(prv.value,

'Infinity') AS VARCHAR(100)) + ' and ' + c.name

+ ' < ' + CAST(ISNULL(LEAD(prv.value)

OVER(PARTITION BY pstats.object_id

ORDER BY pstats.object_id, pstats.partition_number),

'Infinity') AS VARCHAR(100))

END AS PartitionRange

,pstats.partition_number AS PartitionNumber

,pstats.row_count AS PartitionRowCount

,p.data_compression_desc AS DataCompression

FROM sys.dm_db_partition_stats AS pstats

INNER JOIN sys.partitions AS p

ON pstats.partition_id = p.partition_id

INNER JOIN sys.destination_data_spaces AS dds

ON pstats.partition_number = dds.destination_id

INNER JOIN sys.data_spaces AS ds

ON dds.data_space_id = ds.data_space_id

INNER JOIN sys.partition_schemes AS ps

ON dds.partition_scheme_id = ps.data_space_id

INNER JOIN sys.partition_functions AS pf

ON ps.function_id = pf.function_id

INNER JOIN sys.indexes AS i

ON pstats.object_id = i.object_id

AND pstats.index_id = i.index_id

AND dds.partition_scheme_id = i.data_space_id

AND i.type <= 1 /* Heap or Clustered Index */

INNER JOIN sys.index_columns AS ic

ON i.index_id = ic.index_id

AND i.object_id = ic.object_id

AND ic.partition_ordinal > 0

INNER JOIN sys.columns AS c

ON pstats.object_id = c.object_id

AND ic.column_id = c.column_id

LEFT JOIN sys.partition_range_values AS prv

ON pf.function_id = prv.function_id

AND pstats.partition_number = (CASE pf.boundary_value_on_right

WHEN 0 THEN prv.boundary_id ELSE (prv.boundary_id+1) END)

WHERE pstats.object_id = OBJECT_ID(N'BareMinimum.BareMinimum')

ORDER BY TableName, PartitionNumber;

Like buffer pool, plan cache, and other DMVs that struggle with predicate pushdown,

I wondered how much slower that query would run at scale. I wasn't wrong. Graphing

the output would be silly because it got some 2,000 times slower, according to

avg_duration in sys.query_store_runtime_stats:

- Before

Ludicrous.Ludicrousexists, it takes 221.8 microseconds. - After

Ludicrous.Ludicrousexists, it takes 420,035.7 microseconds.

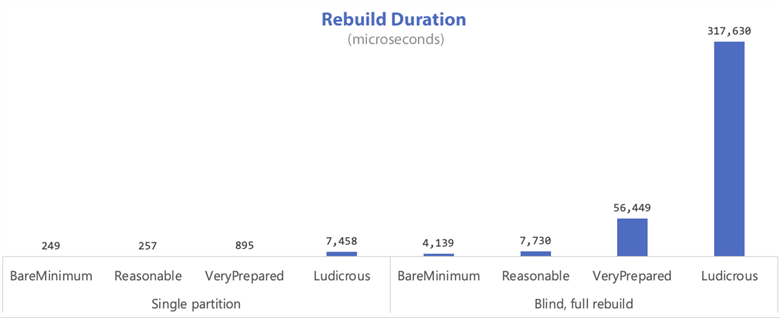

I also expected rebuilds to take a hit for the extra partitions, even though they're all empty. Sure enough, I timed these operations for each table in the following example:

-- rebuild only a specific and populated partition

ALTER INDEX cx ON <table> REBUILD PARTITION = 2;

GO -- rebuild all blindly:

ALTER TABLE <table> REBUILD;

Here's how that turned out:

Again, these are in microseconds, so on my system (with plenty of memory) and in this limited scope, these are not disastrous deltas. In a bigger system and with more data, though, it could become a big deal – something I plan to test in the future.

What else might go wrong?

- In general, more partitions may require a lot more memory for DML, DDL, and index build/rebuild operations. This can hamper concurrency and, if the system is memory-bound, can really throttle I/O performance. This is because the process builds a sort table for each partition before it knows which partitions it will write to.

- Creating or rebuilding non-aligned non-clustered indexes on more than 1,000 partitions is not supported; this is potentially much worse because all the sort tables have to be created in advance, instead of one at a time like with an aligned index.

- Other notes from the documentation:

"The minimum size for each sort table, for each partition, is 40 pages, with 8 kilobytes per page. For example, a nonaligned partitioned index with 100 partitions requires sufficient memory to serially sort 4,000 (40 * 100) pages at the same time. If this memory is available, the build operation will succeed, but performance may suffer. If this memory is not available, the build operation will fail. Alternatively, an aligned partitioned index with 100 partitions requires only sufficient memory to sort 40 pages, because the sorts are not performed at the same time.

For both aligned and non-aligned indexes, the memory requirement can be greater if SQL Server is applying degrees of parallelism to the build operation on a multiprocessor computer. This is because the greater the degrees of parallelism, the greater the memory requirement. For example, if SQL Server sets degrees of parallelism to 4, a nonaligned partitioned index with 100 partitions requires sufficient memory for four processors to sort 4,000 pages at the same time, or 16,000 pages. If the partitioned index is aligned, the memory requirement is reduced to four processors sorting 40 pages, or 160 (4 * 40) pages. You can use the MAXDOP index option to manually reduce the degrees of parallelism."

Next Steps

When planning out a table partitioning strategy, consider a balance between minimizing ongoing future maintenance and not hampering performance in the meantime. There is not much advantage, and potentially many performance pitfalls, if you create too many extra, empty partitions in advance. Don't do it.

See these tips and other resources:

- Handling Large SQL Server Tables with Data Partitioning

- Implementation of Sliding Window Partitioning in SQL Server to Purge Data

- SQL Server Database Partitioning Myths and Truths

- How to Partition an existing SQL Server Table

- Kendra Little : Why Table Partitioning Doesn't Speed Up Query Performance

- Cathrine Wilhelmsen : Table Partitioning in SQL Server

- Partitioned Tables and Indexes

- All partitioning tips

- SQL Server T-SQL Window Functions Tutorial

- What are the SQL Aggregate Functions

- SQL Aggregate Functions in SQL Server, Oracle and PostgreSQL

- SQL Server Window Aggregate Functions SUM, MIN, MAX and AVG

- How to Partition a SQL Server Table with a Columnstore Index

About the author

Aaron Bertrand (@AaronBertrand) is a passionate technologist with industry experience dating back to Classic ASP and SQL Server 6.5. He is editor-in-chief of the performance-related blog, SQLPerformance.com, and also blogs at sqlblog.org.

Aaron Bertrand (@AaronBertrand) is a passionate technologist with industry experience dating back to Classic ASP and SQL Server 6.5. He is editor-in-chief of the performance-related blog, SQLPerformance.com, and also blogs at sqlblog.org.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2022-06-21