By: Koen Verbeeck | Updated: 2024-03-08 | Comments | Related: 1 | 2 | > Microsoft Fabric

Problem

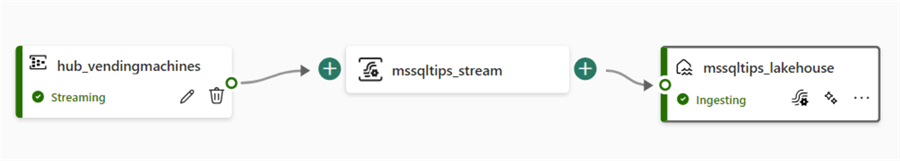

In part 1 of this series, we introduced the concept of an Eventstream in Microsoft Fabric. We are now implementing a use case in which telemetry data from vending machines is sent through Azure Event Hubs to a Fabric Eventstream, which then ends up in a Fabric lakehouse.

Solution

If you haven't already, please read part 1 of this tip, which focuses on setting up Azure Events Hubs and implementing the source part of an Eventstream in Fabric.

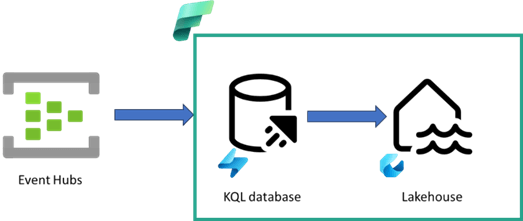

For Part 2, we will implement the destination part of the following architecture:

We will also configure and run the PowerShell scripts needed to generate the sample data. Follow along with this tip: both Azure and Fabric offer free trials.

Reading Data from Event Hubs into Eventstream and a Lakehouse Table

Running the PowerShell Script to Send Data to Azure Event Hubs

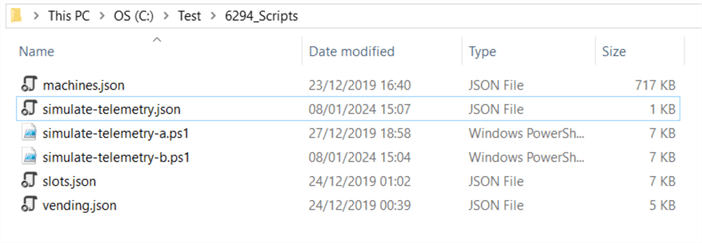

Download the PowerShell scripts here. Unzip the archive and store all the scripts in a folder on your computer. They should look like this:

It consists of the following files:

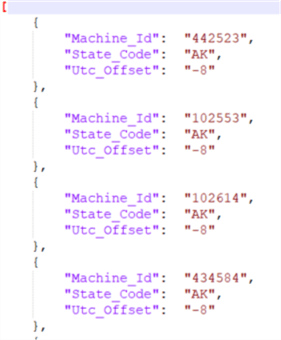

- machines.json. The configuration files of the vending machines. A JSON array of almost 3,400 machines (each vending machine is located in a particular educational institution). Each vending machine has a unique ID, an assigned state (geographical state of the USA), and a UTC offset.

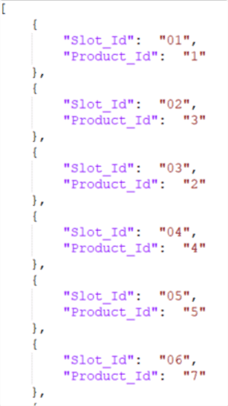

- slots.json. A vending machine has several "slots," each holding a beverage of a specific brand. This file has a list of 45 slots. Each slot has a unique ID and a product randomly assigned to it.

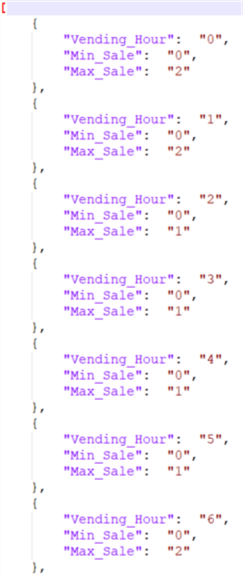

- vending.json. This file lists how many beverages can be sold on a machine or each hour of the day. Each hour has a minimum and a maximum value. Some hours will be "busier" than other hours.

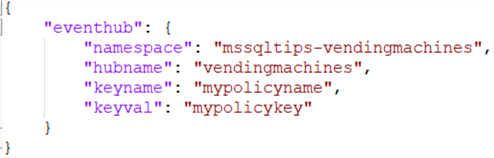

- simulate-telemetry.json. This is a configuration file for the connection to the Azure Event Hub namespace and hub. It contains the namespace name, the hub name, the name of the shared access policy, and the key of this policy. In this tip, we used the same policy for both the Eventstream (a reader of events) and the PowerShell script (a writer of events). In an actual implementation, creating separate policies might be a better option. In the original file, we used the RootManageSharedAccessKey, which was created by default on the Azure Event Hubs namespace level. Here, I opted for a shared access policy on the Event Hub itself.

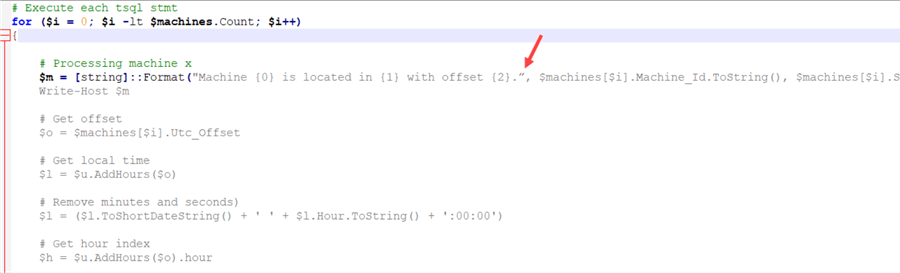

- simulate-telemetry-a.ps1. The actual PowerShell script that will use the sample data from the JSON files and the configuration file to send JSON events to the Azure Event Hub. The script will take a random value between the provided minimum and maximum values as the total sales for a specific hour. This value is then distributed over the 45 slots. This is the so-called vending grid. This is repeated for each machine, and the results are sent as a JSON file to the Azure Event Hub using the REST API method Send Event. For more detail on how this script works, I urge you to read the tip Azure Event Hub Service Telemetry Example with PowerShell, where John Miner explains the different steps.

- simulate-telemetry-b.ps1. This is an optimized version of the first PowerShell script. The first script sends out an event per machine and per slot (3,400 machines * 45 slots = 153,000 events). To optimize this, a single message per machine containing the data for all slots is sent instead. The first script runs in about an hour, while the second should finish in about 5 minutes.

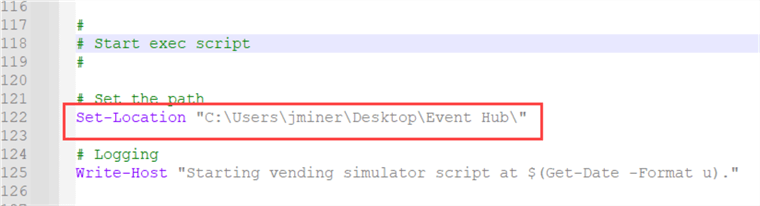

Unfortunately, the scripts don't work right from the start. The configuration file needs to be adjusted to the details of your Event Hub, obviously, but we also need to change the working directory of the script. Assuming we're using the optimized version of the script, we can find this at line 122:

Make sure to change this to the folder where you saved the PowerShell script and the JSON config files.

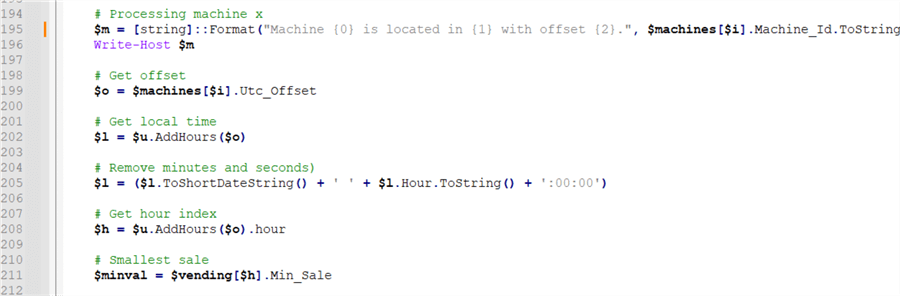

There are also some bugs that need fixing. The first is at line 195, where a double quote messes up the script. It's a "special double quote" (probably a curly quote copied from Microsoft Word), so you must replace it with a normal double quote.

Once fixed, the remainder of the script should no longer be greyed out. (In the screenshot, I use the color formatting of the free Notepad++ tool, but you can see the effect in other IDEs, for example, in Visual Studio Code.)

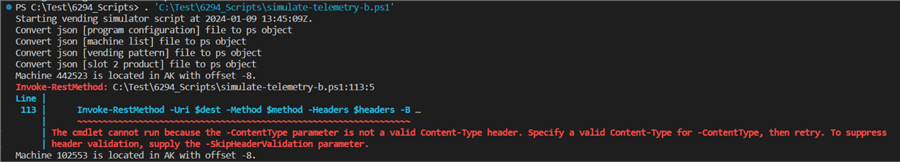

When you try to run the PowerShell script, you will get an error saying the content-type of the REST API header is incorrect: "The cmdlet cannot run because the -ContentType parameter is not a valid Content-Type header." (Personally, I use Visual Studio Code to do this. Make sure PowerShell is installed on your machine.)

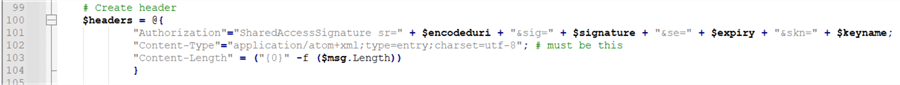

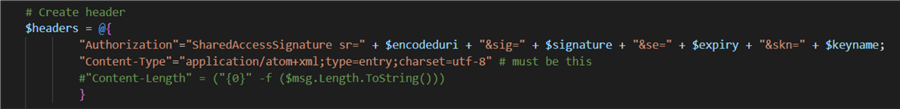

This error is thrown for every machine, so unless you want to see over 3,000 errors, you might want to hit CTRL+C to abort the execution of the script. The content-type is set on line 102:

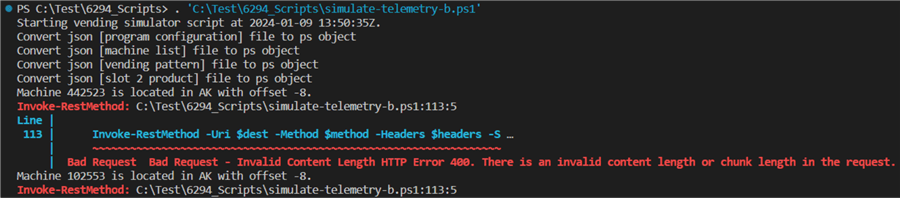

Even though we're sending JSON, the content-type needs to be set to "application/atom+xml;type=entry;charset=utf-8". The content-type is configured correctly in the script, so we need to force PowerShell to skip the validation of the headers. This can be done by adding the -SkipHeaderValidation parameter switch to the Invoke-RestMethod call. However, when we execute the script now, we get the following error: "Bad Request - Invalid Content Length HTTP Error 400. There is an invalid content length or chunk length in the request."

This error is also thrown for every machine, so remember to abort the script. At first sight, it seems the content length is set properly (see line 103). I'm not sure what the problem is. Luckily, this is an optional parameter; we can remove it from the header. Also, remove the semicolon at the end of line 102.

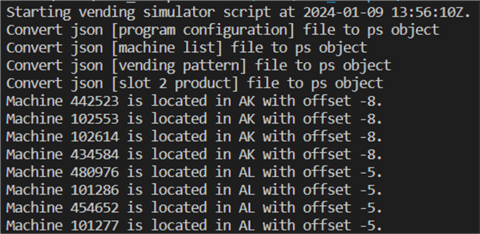

Save the script and run it. Now, the script should be sending event data successfully to the Azure Event Hub.

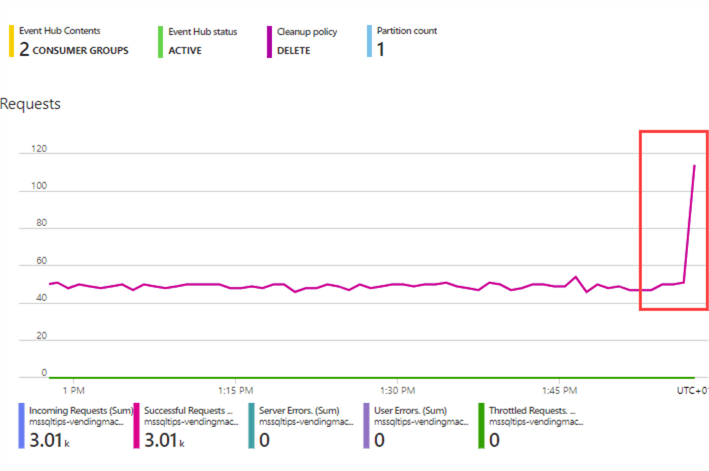

When we open our Event Hub in the Azure Portal, we should see events coming in:

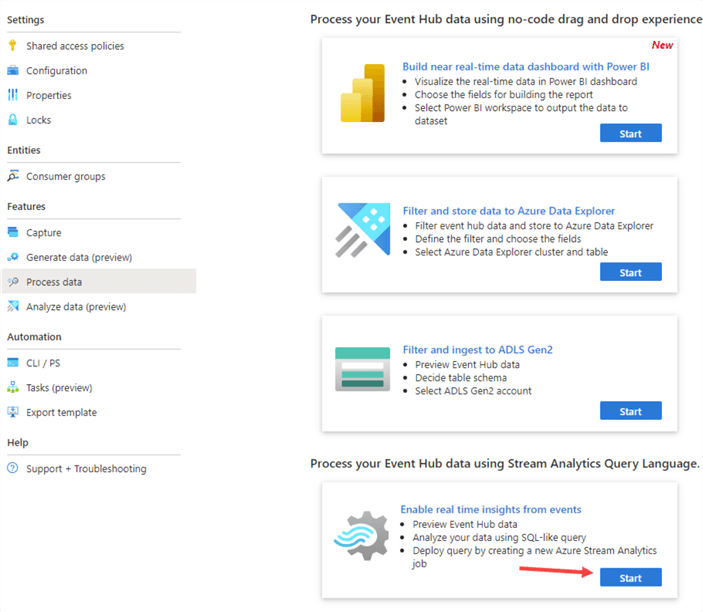

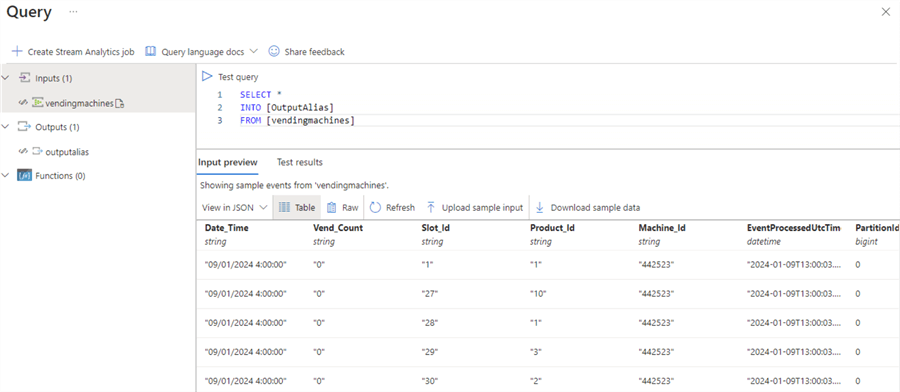

If we want to look at the data, we can go to Features > Process data. It will show several tools and service options to process Event Hub data. We're interested in the Stream Analytics Query.

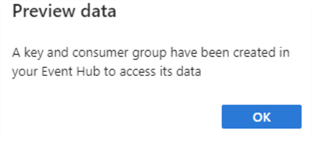

When you open the query editor, a message will display saying a key and a consumer group have been created for you:

Run the test query to see the data from the different vending machine events:

Configuring the Destination in the Fabric Eventstream

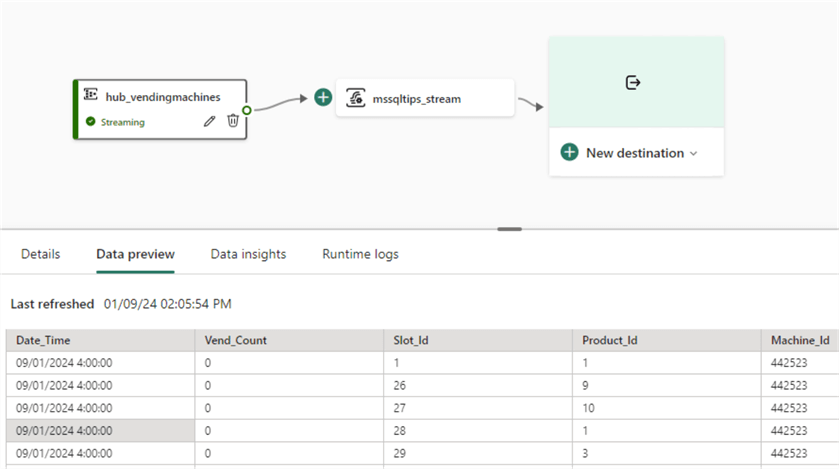

Now that we have data flowing through our Event Hub, we can finish our Eventstream. When we go back to the canvas, we should be able to see a preview of the data:

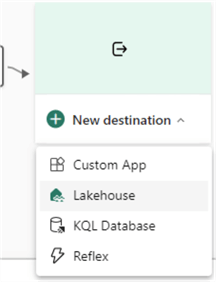

Click on New destination and select Lakehouse from the list:

Note: We can also send data to a custom app, a KQL database, or a Reflex item.

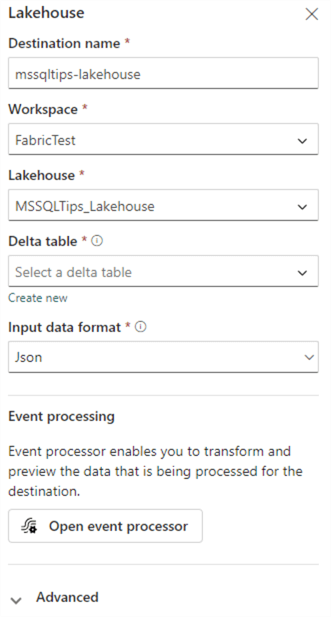

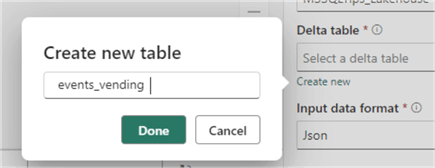

In the configuration of the lakehouse destination, specify a name for the destination, the workspace that contains the lakehouse, and the lakehouse itself.

We're going to send the event data to a new delta table. Click Create new and specify the name of the new table.

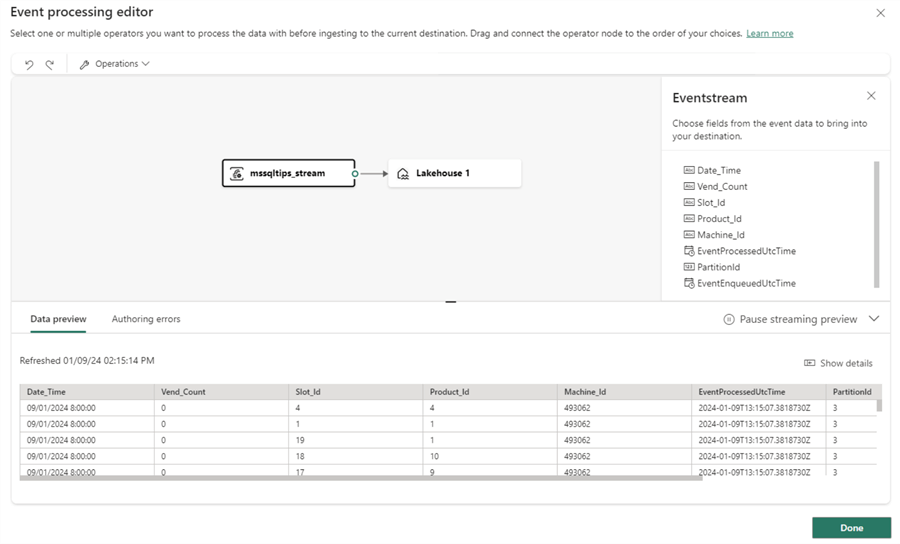

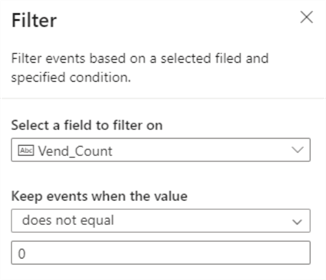

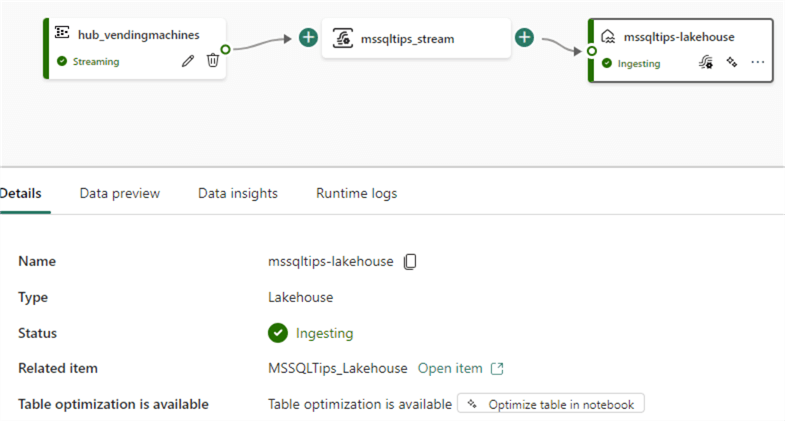

Eventstreams have the capability to perform some transformations on the event data before it is sent to the destination. To add a transformation, click Open event processor. This will open another editor where you can add transformations in a no-code canvas, similar to Integration Services and Azure Data Factory.

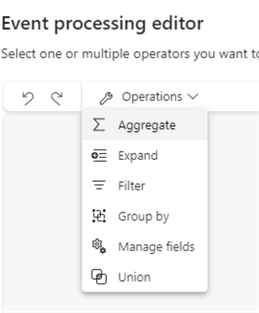

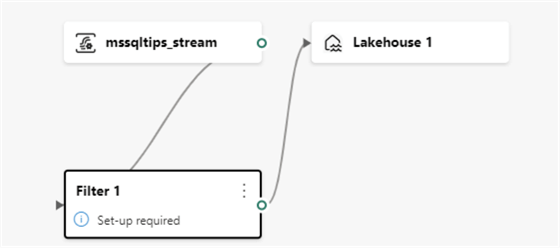

There are a lot of events where no beverages have been sold (vend_count equals 0). Since these do not provide us with real information, we can filter them out. From the Operations menu, select the Filter operation:

Delete the arrow between the Eventstream object and the lakehouse destination and connect the filter operation with both:

Configure the filter to throw events away when the vend_count column is equal to zero.

Click Done to exit the editor. Finally, click Add to finish the config of the lakehouse destination. Once added to the canvas, ingestion of the event data to the delta table should start. This might take a while; use the refresh button for the latest status).

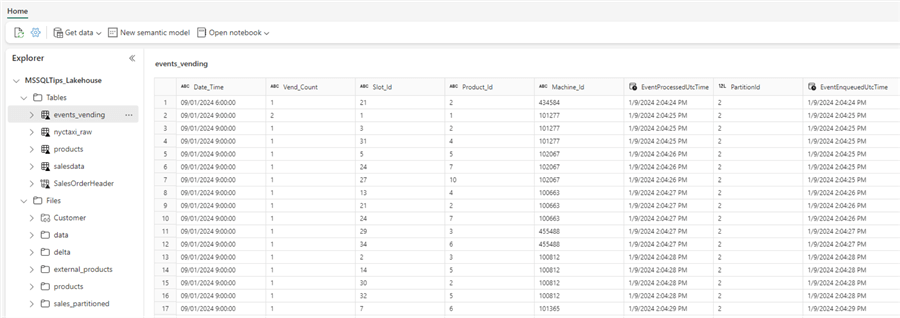

In the lakehouse, we can see the new table and event data:

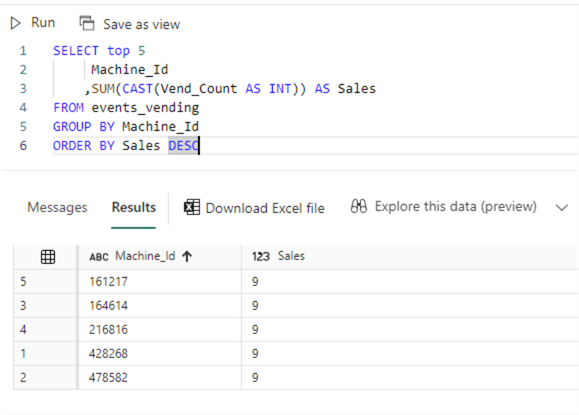

If you don't see the table being created, run the PowerShell script again to make sure data is flowing through the Event Hub to the Eventstream. In the SQL analytics endpoint, we can now query the new table using plain SQL:

You may get different results as some data is random, and the min/max values depend on the hour of the timestamp when the script is run.

Conclusion

Part 2 in this series explained how to send telemetry data through Azure Event Hubs to a Microsoft Fabric Eventstream. In the Eventstream, data can be transformed and sent to one or more destinations. For this use case, the destination was a delta table in a Fabric Lakehouse. In reality, Eventstreams can be more complex with multiple sources and destinations and with more complex transformations than this example.

An alternative is available in Microsoft Fabric: a KQL database can also read directly from Azure Event Hubs. However, the data cannot be transformed while it is ingested into a KQL table. In a KQL database, you can sync a table to a delta lakehouse table. You can opt for this solution if you don't need to transform the events and want the option to analyze the data with KQL, which is well-suited for time-series analysis.

Next Steps

- Try it out yourself! Don't forget to read part 1 of this tip first, get the PowerShell scripts, and set up your own real-time analytics solution!

- You can find more PowerShell-related tips in this overview.

- Learn more about Fabric Event streams in the official documentation.

- More Fabric tips can be found here.

About the author

Koen Verbeeck is a seasoned business intelligence consultant at AE. He has over a decade of experience with the Microsoft Data Platform in numerous industries. He holds several certifications and is a prolific writer contributing content about SSIS, ADF, SSAS, SSRS, MDS, Power BI, Snowflake and Azure services. He has spoken at PASS, SQLBits, dataMinds Connect and delivers webinars on MSSQLTips.com. Koen has been awarded the Microsoft MVP data platform award for many years.

Koen Verbeeck is a seasoned business intelligence consultant at AE. He has over a decade of experience with the Microsoft Data Platform in numerous industries. He holds several certifications and is a prolific writer contributing content about SSIS, ADF, SSAS, SSRS, MDS, Power BI, Snowflake and Azure services. He has spoken at PASS, SQLBits, dataMinds Connect and delivers webinars on MSSQLTips.com. Koen has been awarded the Microsoft MVP data platform award for many years.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2024-03-08