By: Ron L'Esteve | Updated: 2022-02-14

Problem

As big datasets within Lakehouses continue to grow, challenges around enforcing reliable data governance, security, and CI/CD become more prevalent. The lowest level of access control within Data Lakes is typically granted at the file level, which makes it difficult to provide row- or column-level access to certain stakeholders. Also, when the layout of the data changes, the corresponding security model needs to be updated as well. Some Lakehouses have corresponding metastores, such as the Hive Metastore, which may end up out of sync with the data in the Lakehouse. SQL databases and ML models may also be introduced into the Lakehouse as assets with their own independent governance and security models, which need to be maintained and kept in sync. As the data products within the lake grow, organizations need a way to share, govern, and apply CI/CD promotion patterns to tables, files, dashboards, and models within the Lakehouse.

Solution

Security and Data Governance in Databricks includes the policies for securely managing data within your Lakehouse. Databricks offers robust capabilities for governing data at the row and column levels, and extends to cluster, pool, workspace, and job access controls, which you will learn more about in this article. You will also learn about visibility features, including workspace, cluster, and job visibility controls. Finally, you will learn about Azure Purview and how it integrates with Databricks from a data governance standpoint, along with tools that can be used to promote continuous integration and deployment best practices for Databricks.

Security & Governance

Databricks Unity Catalog addresses many organizational challenges around sharing and governing data in the Lakehouse. It provides a single interface to govern all Lakehouse data assets, with a security model based on the ANSI SQL GRANT command, along with federated access and easy integration with existing catalogs. It offers fine-grained permissions on tables, views, rows, and columns from files persisted in the Lakehouse. It streamlines access to a variety of Lakehouse assets, including Data Lake files, SQL databases, Delta Shares, and ML models. It also offers centralized audit logs to support compliance management.

Databricks offers numerous security features, including tightly coupled integration with the Azure platform, with access through Azure Active Directory (AAD) credential passthrough. Databricks also supports SSO authentication with other identity providers and with technologies such as Snowflake. Audit logging can be enabled to capture detailed logs of actions and operations on the workspace.

Databricks offers numerous tools to safeguard and encrypt data through row- and column-level encryption, along with a variety of functions to mask or anonymize PII data. Keys and secrets can be integrated with Azure Key Vault. Databricks also supports creating secret scopes, which are stored in an encrypted database owned and managed by Databricks.
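As a rough illustration of the column-level protection described above, the following is a minimal sketch in plain Python, not a Databricks built-in. The helper names `pseudonymize` and `mask_email` are hypothetical, and the secret scope and key names in the comment are placeholders. It assumes the salt would be read from a secret scope with `dbutils.secrets.get` when run inside a notebook.

```python
import hashlib
import hmac


def pseudonymize(value: str, salt: str) -> str:
    """Return a keyed SHA-256 digest (hex) so the raw PII value is never stored."""
    return hmac.new(
        salt.encode("utf-8"), value.encode("utf-8"), hashlib.sha256
    ).hexdigest()


def mask_email(email: str) -> str:
    """Keep the first character and the domain; hide the rest of the local part."""
    local, _, domain = email.partition("@")
    if not domain:
        # Not a well-formed address: hide everything.
        return "*" * len(email)
    return local[:1] + "*" * max(len(local) - 1, 0) + "@" + domain


# Inside a Databricks notebook the salt would typically come from a secret scope:
#   salt = dbutils.secrets.get(scope="pii-scope", key="pii-salt")  # hypothetical names
# A literal is used here only so the sketch runs anywhere.
salt = "example-salt-not-for-production"

token = pseudonymize("jane.doe@example.com", salt)
masked = mask_email("jane.doe@example.com")
print(masked)
```

A keyed hash gives the same token for the same input, so pseudonymized columns can still be joined, while the salt stays in the secret scope rather than in notebook code. On large tables the same idea would more likely be expressed with Spark column functions than with a Python loop.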

Databricks supports a number of compliance standards, including GDPR, HIPAA, HITRUST, and more. With its support for virtual networks (VNets), you retain full control of network security rules, and with Private Link, no data is transmitted through public IP addresses.

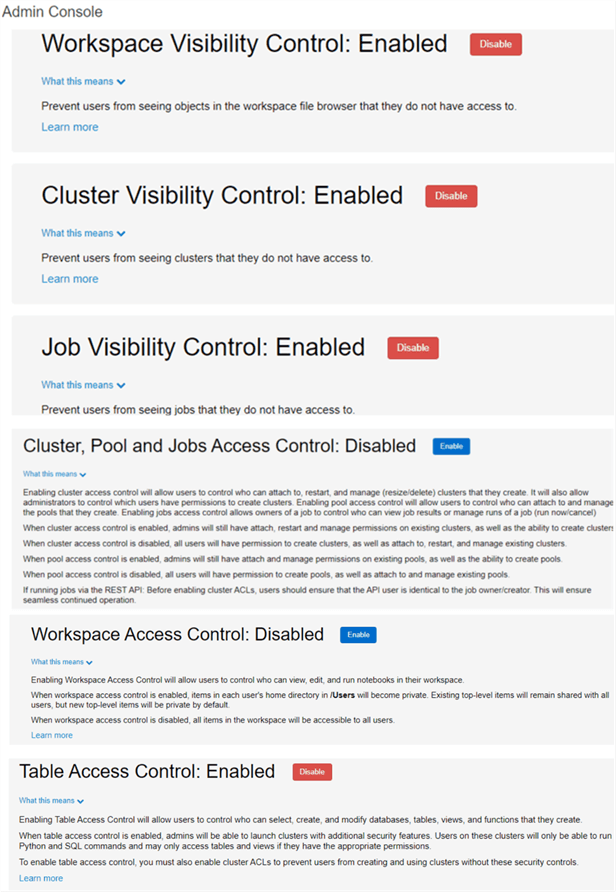

When working with SQL in Databricks, the security model follows a pattern similar to that of traditional SQL databases, which allows fine-grained access permissions to be set using standard SQL statements such as GRANT and REVOKE. With access control lists (ACLs), administrators can configure and manage permissions to access Databricks SQL alerts, dashboards, data, queries, and SQL endpoints. The figure below shows how the available visibility and access controls are presented within the Databricks Admin Console. The following list outlines some of these visibility and access controls:

- Workspace Visibility Control: Prevents users from seeing objects in the workspace that they do not have access to.

- Cluster Visibility Control: Prevents users from seeing clusters they do not have access to.

- Job Visibility Control: Prevents users from seeing jobs they do not have access to.

- Cluster, Pool, and Jobs Access Control: Applies controls related to who can create, attach to, manage, and restart clusters, who can attach to and manage pools, and who can view and manage jobs.

- Workspace Access Control: Applies controls related to who can view, edit, and run notebooks in a workspace.

- Table Access Control: Applies controls related to who can select from, create, and modify databases, tables, views, and functions from Python and SQL. When enabled, users can set permissions for data objects on that cluster.

- Alert Access Control: Applies controls related to who can create, run, and manage alerts in Databricks SQL.

- Dashboard Access Control: Applies controls related to who can create, run, and manage dashboards in Databricks SQL.

- Data Access Control: Applies data object related controls using SQL commands such as GRANT, DENY, and REVOKE to manage access to data objects.

- Query Access Control: Applies controls related to who can run, edit, and manage queries in Databricks SQL.

- SQL Endpoint Access Control: Applies controls related to who can use and manage SQL Endpoints in Databricks SQL. A SQL endpoint is a computation resource that lets you run SQL commands on data objects within the Databricks environment.

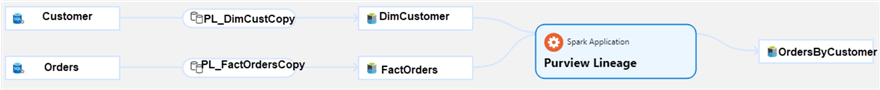
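The GRANT, DENY, and REVOKE statements mentioned under Data Access Control can be sketched as follows. This is a minimal, hedged example that only builds the SQL strings. The helper names, the `sales.orders` table, and the `analysts` group are hypothetical, and the privilege and object lists are an illustrative subset rather than the full set Databricks supports. In a notebook, each statement would then be passed to `spark.sql(...)`.

```python
# Illustrative subsets only; the platform supports more privileges and object types.
PRIVILEGES = {"SELECT", "CREATE", "MODIFY", "USAGE", "ALL PRIVILEGES"}
OBJECT_TYPES = {"DATABASE", "TABLE", "VIEW", "FUNCTION"}


def quote_principal(principal: str) -> str:
    """Wrap a user or group name in backticks, escaping embedded backticks."""
    return "`" + principal.replace("`", "``") + "`"


def _check(privilege: str, object_type: str) -> None:
    if privilege not in PRIVILEGES:
        raise ValueError(f"unsupported privilege in this sketch: {privilege}")
    if object_type not in OBJECT_TYPES:
        raise ValueError(f"unsupported object type in this sketch: {object_type}")


def grant(privilege: str, object_type: str, name: str, principal: str) -> str:
    _check(privilege, object_type)
    return f"GRANT {privilege} ON {object_type} {name} TO {quote_principal(principal)}"


def deny(privilege: str, object_type: str, name: str, principal: str) -> str:
    _check(privilege, object_type)
    return f"DENY {privilege} ON {object_type} {name} TO {quote_principal(principal)}"


def revoke(privilege: str, object_type: str, name: str, principal: str) -> str:
    _check(privilege, object_type)
    return f"REVOKE {privilege} ON {object_type} {name} FROM {quote_principal(principal)}"


statements = [
    grant("SELECT", "TABLE", "sales.orders", "analysts"),
    deny("MODIFY", "TABLE", "sales.orders", "analysts"),
    revoke("SELECT", "TABLE", "sales.orders", "user@example.com"),
]

for stmt in statements:
    print(stmt)
    # In a Databricks notebook: spark.sql(stmt)
```

Generating the statements from one place makes it easier to keep permissions under source control and to replay them when a table layout changes.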

Azure Purview is a data governance service that helps manage and govern a variety of data sources, including Azure Databricks and Spark tables. Using Purview’s Apache Atlas API, developers can programmatically register data lineage, glossary terms, and much more into Purview directly from a Databricks notebook. The figure below illustrates how lineage from a Databricks transformation notebook could be captured in Purview when notebook code registers it programmatically through the Apache Atlas API.
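To make the idea of registering lineage programmatically more concrete, here is a minimal sketch that builds an Apache Atlas style entity payload for a process with inputs and outputs. It is an assumption-laden illustration: the helper names, type names, and qualified names are placeholders, the input and output entities are assumed to already exist in the catalog, and the authenticated HTTP call that would submit the payload to Purview is deliberately left out.

```python
import json


def entity_ref(type_name: str, qualified_name: str) -> dict:
    """Reference an existing catalog entity by its unique qualifiedName."""
    return {
        "typeName": type_name,
        "uniqueAttributes": {"qualifiedName": qualified_name},
    }


def build_lineage_entity(
    name: str,
    qualified_name: str,
    inputs: list,
    outputs: list,
    process_type: str = "Process",  # assumed generic process type
) -> dict:
    """Build an entity payload linking input datasets to output datasets."""
    return {
        "entity": {
            "typeName": process_type,
            "attributes": {
                "name": name,
                "qualifiedName": qualified_name,
                "inputs": inputs,
                "outputs": outputs,
            },
        }
    }


# Placeholder type and paths; real values depend on how the sources were registered.
DATASET_TYPE = "azure_datalake_gen2_path"

payload = build_lineage_entity(
    name="transform_orders_notebook",
    qualified_name="databricks://example-workspace/notebooks/transform_orders",
    inputs=[entity_ref(DATASET_TYPE, "https://examplelake.dfs.core.windows.net/raw/orders")],
    outputs=[entity_ref(DATASET_TYPE, "https://examplelake.dfs.core.windows.net/curated/orders")],
)

print(json.dumps(payload, indent=2))
```

The payload is plain JSON, so it can be inspected or version-controlled before any client library or REST call sends it to the catalog.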

Continuous Integration and Deployment

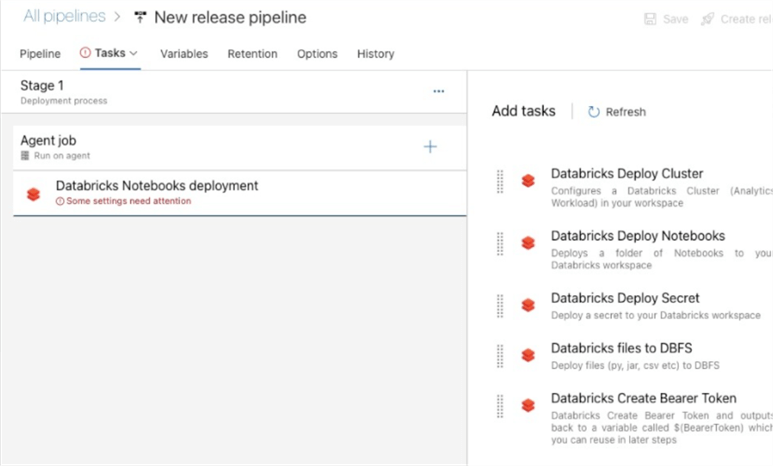

Continuous integration and deployment standards are prevalent across a variety of Azure data services that use Azure DevOps (ADO) for the CI/CD process. Similarly, Databricks supports CI/CD within Azure DevOps by relying on Repos, which store the code that needs to be promoted to higher Databricks environments. Once these repositories are connected and synced to Azure DevOps, the CI and CD pipelines can be built using YAML code or the classic editor. The build pipeline uses the integrated source repo to build and publish the artifact based on automated continuous integration, and the release pipeline continuously deploys the changes to the specified higher environments.

The Databricks release pipeline tasks require the installation of the Databricks Script Deployment Task by Data Thirst from the Visual Studio Marketplace. These tasks support the deployment of Databricks files such as .py, .csv, .jar, and .whl to DBFS. In addition, there are tasks available for the deployment of Databricks notebooks, secrets, and clusters to higher environments. As with any ADO CI/CD process, once the pipelines are built, manual approval gates, code quality tests, and more can be added within the pipelines to ensure that only high-quality code is promoted to higher environments.
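The marketplace tasks themselves are not reproduced here. As a hedged illustration of the kind of step a release pipeline performs when copying a small file to DBFS, the sketch below prepares a request for the Databricks REST endpoint `/api/2.0/dbfs/put`. The helper name, workspace URL, token, and paths are placeholders, and the request is only constructed, not sent.

```python
import base64
import json
import urllib.request


def build_dbfs_put_request(
    host: str,
    token: str,
    dbfs_path: str,
    content: bytes,
    overwrite: bool = True,
) -> urllib.request.Request:
    """Prepare (but do not send) a request that uploads a small file to DBFS."""
    body = {
        "path": dbfs_path,
        # The endpoint expects the file contents base64-encoded.
        "contents": base64.b64encode(content).decode("ascii"),
        "overwrite": overwrite,
    }
    return urllib.request.Request(
        url=f"{host.rstrip('/')}/api/2.0/dbfs/put",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values; in a pipeline these would come from pipeline variables or secrets.
request = build_dbfs_put_request(
    host="https://adb-0000000000000000.0.azuredatabricks.net/",
    token="dapi-EXAMPLE-TOKEN",
    dbfs_path="/FileStore/jobs/etl_job.py",
    content=b"print('hello from the release pipeline')\n",
)

print(request.get_method(), request.full_url)
# To actually deploy, a pipeline step would call: urllib.request.urlopen(request)
```

Keeping the request construction separate from the send makes the step easy to unit test in the build pipeline before it ever touches a real workspace.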

Next Steps

- Read more about Azure Databricks Access Controls and Row Level Security (mssqltips.com)

- Read more about Best practices: Data governance - Azure Databricks | Microsoft Docs

- Read more about Metadata and Lineage from Apache Atlas Spark connector - Azure Purview | Microsoft Docs

- Read more about How to Implement CI/CD on Databricks Using Databricks Notebooks and Azure DevOps - The Databricks Blog

- Read more about Continuous integration and delivery on Azure Databricks using Azure DevOps - Azure Databricks | Microsoft Docs

About the author

Ron L'Esteve is a trusted information technology thought leader and professional author residing in Illinois. He brings over 20 years of IT experience and is well-known for his impactful books and article publications on Data & AI Architecture, Engineering, and Cloud Leadership. Ron completed his Master's in Business Administration and Finance from Loyola University in Chicago.

This author pledges the content of this article is based on professional experience and not AI generated.
