By: Derek Colley | Comments (1) | Related: > Amazon AWS

Problem

You have been tasked with setting up an automatic method to import data from an AWS (Amazon) DynamoDB database, which is a NoSQL data store, into SQL Server. There are no direct connectors available nor is DynamoDB directly supported in most ETL tooling.

Solution

In this tip we present a solution to import data directly from DynamoDB within SQL Server 2017 by using in-line, natively-supported Python scripting to communicate with the AWS service using access key pairs. We show how you can set up a simple table in DynamoDB, then use SQL Server to retrieve the contents of the table and put it into a table in a procedure that can be automated for future use. We also outline the differences between relational and non-relational databases and point you towards further reading.

Introduction

AWS DynamoDB is a key-value data store native to AWS, Amazon Web Services. This collection of services rivals Azure for cloud services with some services like RedShift (for BI/analytics), RDS (for relational) and DynamoDB (for non-relational) data gaining traction. DynamoDB is an ideal key-value store which can be queried easily and from an object-oriented language. However, it is not a relational data store. There is no fixed schema nor constraints other than basic data types. While it's ideal for web developers to store state values, session information or other pertinent facts, there are sometimes cases where it would be useful to import this data into a relational database in order to query it more effectively and join it to other useful data to gain business insights.

Prerequisites

To follow this tip, you will need:

- An Amazon AWS account. You can sign up for free, and the DynamoDB product is available under the Free Tier. Sign up at https://aws.amazon.com.

- SQL Server 2017 with Machine Learning services (R, Python) installed. If you have 2017 and this isn't installed, don't worry. You can use the SQL Server Installer to add these features to your instance. If you're operating on 2016 this tip should also work for you although you may need to modify the Python script accordingly if any syntax returns as invalid. Unfortunately, this tip is not suitable if you're operating on SQL Server 2014 or below.

Creating a table and record in DynamoDB

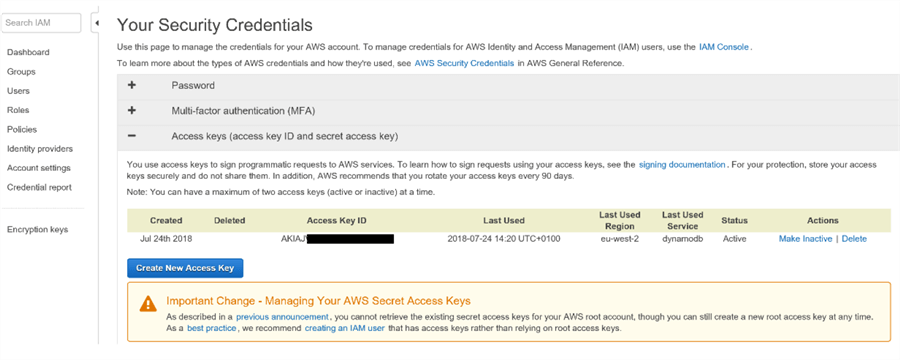

Okay, so you've signed up with Amazon and you have a shiny new AWS account. You will first need to configure your access keys. There's a link to do this on the email AWS sent you when you signed up, or you can use the console to find Your Security Credentials. When you reach a screen that looks similar to the below, expand 'Access Keys' and follow the on-screen prompts to download your access key and secret key pair. Save these somewhere secure.

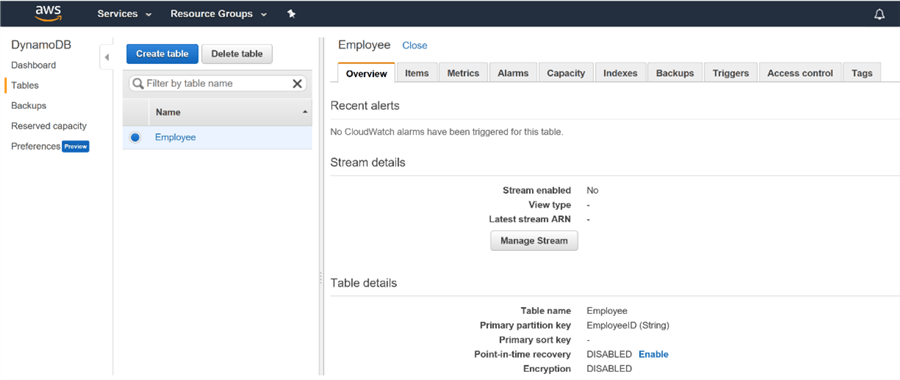

Next, let's create a new DynamoDB table. On the Console home page, type 'DynamoDB' and find the DynamoDB resource page. From there, you'll see the link to Create Table. In DynamoDB, every table has a primary key (much like a clustered index in SQL Server) but at this point you don't need to specify any other columns. There's also some settings you can play with around scale-out (which incur a cost), encryption and backup.

Below is a screenshot of my empty Employee table. This table has the EmployeeID primary key.

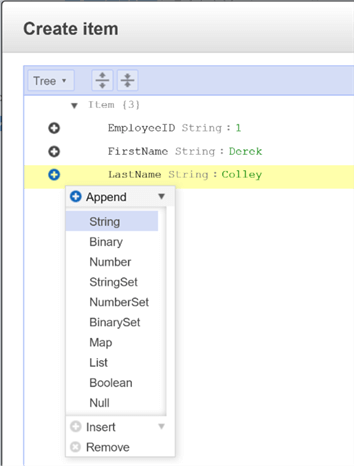

Now, let's add an item to the table - by item, we mean row. Bear in mind this is a key-value store, NOT a relational table so we have, effectively, two columns - the key, and the value. So, the key is the column name of our data, and the value is the value! But what about the datatype? In DynamoDB, each key or value of each row of data can have its own datatype. Datatype is NOT mandated by column as in a relational database - to put it formally, each column of a relational database is constrained by a domain of possible values. In DynamoDB, this domain is applied at the individual field level, not the column level.

This means we need to pick some data types for each value. Below, you can see my selections. I'm creating a single record of employee data and choosing intuitive data types as I go.

You can also create an item if you prefer to write out the content in key-value form, or even in JSON. Use the drop-down menu (see 'Tree' in the screenshot above) to change the format between tree, key-value and JSON. Here's the full key-value segment for our example record:

{

"EmployeeID": 1,

"FirstName": "Derek",

"LastName": "Colley",

"Position": "Database Administrator",

"Location": "Manchester",

"DateStarted": "01/01/2015",

"PassedProbation": true,

"TrainingBudget": 250

}

Hit Save. Sometimes when you hit Save you'll see an error crop up. If this happens to you check that you haven't inadvertently renamed your primary key field - the first key in your record should be the primary key, and identical in name (case-sensitive) to the table primary key you defined.

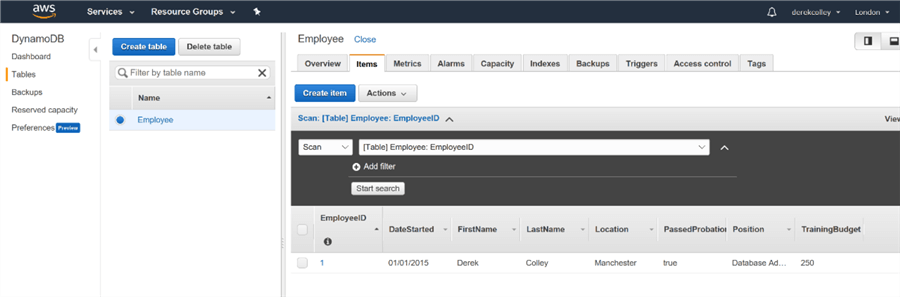

Now we have our record safely ensconced in DynamoDB. How on earth do we access it from SQL Server?

Modifying the default Python library in SQL Server

Okay, we need to do a couple of things before we get started. AWS provide several ways of accessing a DynamoDB instance - from the console, from the command-line interface and via an API. We'll be using the latter, using SQL Server to access the AWS DynamoDB API. However, to do this we will need to use a Python package within SQL Server that doesn't come natively bundled with it. This package is called boto3 - it's owned and published by Amazon and available from the normal Python repositories. Python in SQL Server is a variant published by Anaconda, but still comprises of the same open-source code as used in other distributions. This means we can add our own packages to Python in SQL Server even if it isn't obvious how!

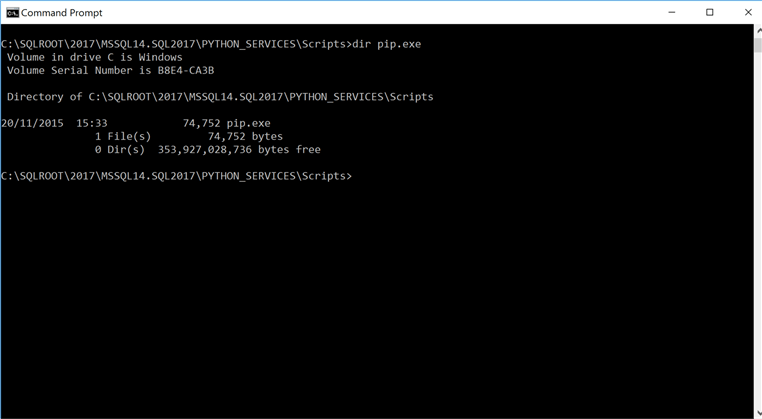

To do this you'll need to open a command prompt. Navigate to your SQL Server root directory - by default, C:\Program Files\Microsoft SQL Server\<Version>.<Instance>. Mine is C:\SQLROOT\2017\MSSQL14.SQL2017. Now navigate to the subfolder 'PYTHON_SERVICES', then the subfolder 'scripts'. Issue dir pip.exe. You should see pip.exe in the directory listing (if you can't see it, you're in the wrong folder. Try using dir /s or File Explorer to search for the file).

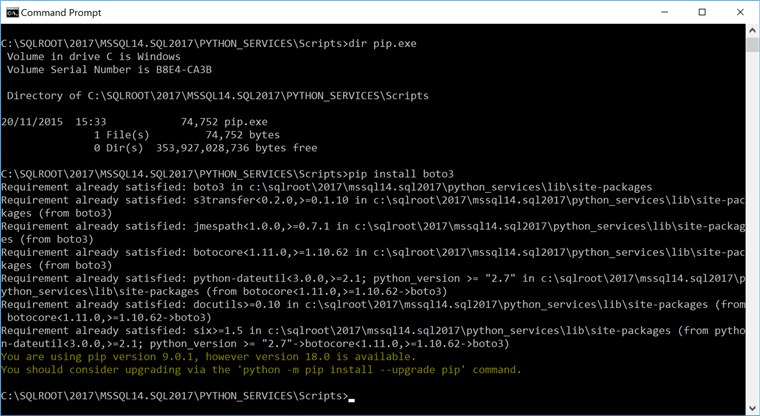

Now we need to install boto3. The syntax is very easy:

pip install boto3

If all goes well, you'll see a screen indicating that the installation was successful. When I try it, it shows I've already installed it:

Now, we can import the contents of this package within our Python script. Let's turn our attention to SQL Server.

Accessing DynamoDB from SQL Server

Our goal is to import this record from DynamoDB into SQL Server. First, let's create the destination table.

drop table if exists Employees create table Employees ( EmployeeID INT, FirstName VARCHAR(50), LastName VARCHAR(50), Position VARCHAR(100), Location VARCHAR(100), DateStarted DATE, PassedProbation BIT, TrainingBudget NUMERIC(16,2) )

Now we need to write a Python script which can access DynamoDB. Here it is - we'll discuss it line-by-line below:

declare @rScript NVARCHAR(MAX)

set @rScript = N'

import boto3

import pandas as pd

dbConn = boto3.resource(''dynamodb'',

aws_access_key_id =''AKIAJVBHxxxx'',

aws_secret_access_key =''xxxx'',

region_name=''eu-west-2'')

table = dbConn.Table(''Employee'')

table = table.scan()

tableContents = table[''Items'']

contentsList = []

for item in tableContents:

contentsList.append(item)

od = pd.DataFrame(contentsList, columns=["EmployeeID", "FirstName", "LastName", "Position", "Location", "DateStarted", "PassedProbation", "TrainingBudget"])

od.TrainingBudget = od.TrainingBudget.astype(str)

OutputDataSet = od

'

- import boto3 - we import the package we just installed

- import pandas - a special package that comes bundled and supports Data Frames, a type of data object.

- dbConn = boto3.resource ... - we call the 'resource' method to set up a new DynamoDB connection. I've blanked out some of the key details - substitute your own! Note here that your region might be different - I'm based in the UK so my default location is London, which means my region is eu-west-2. You can check your region by looking in the URL while you're navigating the console.

- table = dbConn... - Here, we create a new object called 'table' of class Resource.Table named Employees, to match our existing table.

- table = table.scan() - read the contents of the table and overwrite the variable table with the results. It’s exactly the same method called as viewing the table contents in the console.

- tableContents = table[''Items''] - the table object, prior to this line, contains 4 columns. Only one of these columns contains 'items' which are actual data values - the rest are metadata. Here, we specify that the variable tableContents should be used to hold all the items of the object 'table'.

- contentsList[]... in this line and the subsequent for loop, we take every item of type Response.Table.Item and append it to a simple list. This creates an ideal object to transform into a data frame.

- od = pd.DataFrame ... - here, we convert the contentsList list into a data frame and specify column names. This is a powerful procedure, because it will also attempt to convert the data into the correct types automatically.

- od.TrainingBudget ... - unfortunately the type numpy.Decimal.Decimal, while a valid type, isn't compatible with the way data is rendered back out to SQL Server, so we need to convert this to a string.

- OutputDataSet = od - 'OutputDataSet' is a special keyword indicating the output of the script. We use this keyword to contain the output objects that we pass to the wrapper that calls this script.

Next, we need to do a little DBA work. We can't use external scripts in SQL Server unless this feature is explicitly turned on, and it won't take effect without restarting the instance. So, we do:

exec sp_configure 'external scripts enabled', 1 reconfigure with override

Now restart the instance. If you check exec sp_configure 'external scripts enabled' the run value should equal 1.

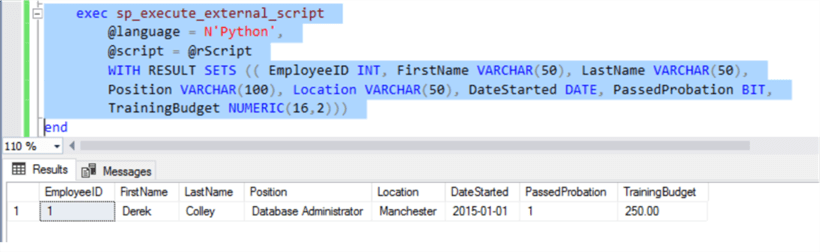

Now, we write the wrapper. Here's the code:

exec sp_execute_external_script @language = N'Python', @script = @rScript WITH RESULT SETS (( EmployeeID INT, FirstName VARCHAR(50), LastName VARCHAR(50), Position VARCHAR(100), Location VARCHAR(50), DateStarted DATE, PassedProbation BIT, TrainingBudget NUMERIC(16,2)))

This executes our Python script and outputs the results as a normal result set:

You can see from the wrapper that we cast the result set back out to a known set of columns and types, which means we can elegantly convert our troublesome TrainingBudget column back out to a NUMERIC(16,2) using implicit conversion.

Now to get the results into a table, we're going to run into problems using an INSERT wrapper. INSERT isn't compatible with a subquery that uses WITH RESULT SETS, so we need to proceduralize it. You'll see how this is done in the complete script below.

--exec sp_configure 'external scripts enabled', 1

--reconfigure with override

--** now restart the instance **

drop table if exists Employees

create table Employees (

EmployeeID INT,

FirstName VARCHAR(50),

LastName VARCHAR(50),

Position VARCHAR(100),

Location VARCHAR(100),

DateStarted DATE,

PassedProbation BIT,

TrainingBudget NUMERIC(16,2) )

drop procedure if exists ImportEmployeeData

go

create procedure ImportEmployeeData

as begin

declare @rScript NVARCHAR(MAX)

set @rScript = N'

import boto3

import pandas as pd

dbConn = boto3.resource(''dynamodb'',

aws_access_key_id =''AKIAXXXX'',

aws_secret_access_key =''97nSN8V8XXXX'',

region_name=''eu-west-2'')

table = dbConn.Table(''Employee'')

table = table.scan()

tableContents = table[''Items'']

contentsList = []

for item in tableContents:

contentsList.append(item)

od = pd.DataFrame(contentsList, columns=["EmployeeID", "FirstName", "LastName", "Position", "Location", "DateStarted", "PassedProbation", "TrainingBudget"])

od.TrainingBudget = od.TrainingBudget.astype(str)

OutputDataSet = od

'

exec sp_execute_external_script

@language = N'Python',

@script = @rScript

WITH RESULT SETS (( EmployeeID INT, FirstName VARCHAR(50), LastName VARCHAR(50),

Position VARCHAR(100), Location VARCHAR(50), DateStarted DATE, PassedProbation BIT,

TrainingBudget NUMERIC(16,2)))

end

go

insert into Employees

exec ImportEmployeeData

select * from Employees

Conclusions

In this tip we've demonstrated a simple method of connecting to DynamoDB, an Amazon offering, to fetch data by using the Machine Learning features of SQL Server 2016/2017. Of course, schemaless databases are not the same as relational databases - bear in mind that when you fetch data you will need to predefine your schema (via the table definition, data frame definition and WITH RESULT SETS definition in the example) and the attraction of NoSQL databases like DynamoDB is that they don't *require* a schema. So, you may find your schema changes!

You can also extend the example given to filter your results. Check out the (hefty) API reference for more methods supported by boto3.

You can also check out more tips from MSSQLTips - Rick Dobson presents an excellent overview and demonstration of sp_execute_external_script, the procedure we leveraged here. Siddarth Mehta also provides a tip with a rundown of Python functions in SQL Server and another on exploratory data analysis.

As ever if you have any questions or have trouble getting things set up, leave a comment and I'll get back to you as soon as time allows. Thank you for reading!

Next Steps

- Amazon DynamoDB API Reference

- MSSQLTips - Installing Python and Running Python Scripts from SQL Server SSMS

- MSSQLTips - Python in SQL Server Ecosystem

- MSSQLTips - Exploratory Data Analysis with Python in SQL Server 2017

About the author

Dr. Derek Colley is a UK-based DBA and BI Developer with more than a decade of experience working with SQL Server, Oracle and MySQL.

Dr. Derek Colley is a UK-based DBA and BI Developer with more than a decade of experience working with SQL Server, Oracle and MySQL.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips