By: Fikrat Azizov | Updated: 2021-11-16 | Comments | Related: 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | > Azure Synapse Analytics

Problem

This tutorial is part of the series of posts dedicated to building a Lakehouse solution, based on Delta Lake and Azure Synapse Analytics technologies. In the previous posts (see Building Scalable Lakehouse Solutions using Azure Synapse Analytics and Common Data Warehouse Development Challenges) we've discussed some of the popular cloud ETL platforms, as well as covered common data warehouse build tasks. The data ingestion is typically the first high-level task in the chain of ETL pipelines feeding most Data warehouses. So, we'll be focusing on the ingestion-related tasks in this tip.

Solution

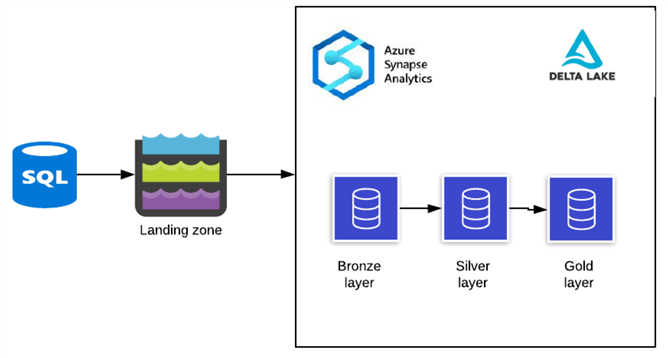

Here's the solution architecture of the data flows we'll build in the next few tutorials:

Figure 1

This architecture involves the following steps:

- Data ingestion into the landing zone in the data lake. Depending on your data sources, this step may or may not be included in your solution. Azure Synapse Analytics can read from different data sources, and write into the Delta Lake, without requiring an intermediate landing zone. However, in some cases, people may prefer to include the intermediate layer, either due to Azure Synapse Analytics' limitations or for some other reasons. Our pipelines will read data from Azure SQL DB, and although I could write directly into Delta Lake, I've included a landing zone, just for the sake of the demonstration.

- Data ingestion into the Bronze layer tables. The three data maturity layers described here, are adopted from Databricks (see Building Scalable Lakehouse Solutions using Azure Synapse Analytics for more details), and the Bronze layer corresponds to the raw layer (or staging) in a typical DWH implementation. This ingestion will bring the data as is, without any transformations.

- Data ingestion into the Silver layer tables. The Silver layer includes cleansed, processed and enriched data and it corresponds to Operational Data Store (ODS) in a traditional DWH solution.

- Data ingestion into the Gold layer tables. The Gold layer represents data in a dimensional model and serves as a source for enterprise reporting.

We'll discuss landing ingestions in this tip and will cover the other steps in the upcoming tips.

Environment Preparation

Please follow the below steps to prepare the environment:

- Provision Azure SQL DB, based on a sample AdventureWorks schema (I've named it AdventureWorks).

- Provision general-purpose v2 Azure Data Lake account (I've named it synstg). This storage will contain landing zone files, as well as our Delta Lake tables.

- Provision Azure Synapse Analytics account and specify the above mentioned storage account as default storage. This step will also require the creation of a container that will serve as the default file system container for Synapse Analytics. Add the Spark pool with the latest Spark version (currently 3.1).

Landing Zone Ingestion

Because the landing zone doesn't require any transformations, the ingestion into it can be automated to a certain degree.

The landing pipeline will read from Azure SQL DB and write into the storage. I'll specify a JSON format for a few tables and select Parquet format for others, for demonstration purposes.

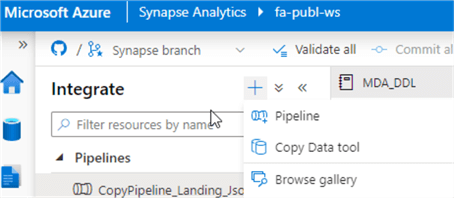

Let's open Synapse Analytics Studio and use the Copy Data tool, as follows:

Figure 2

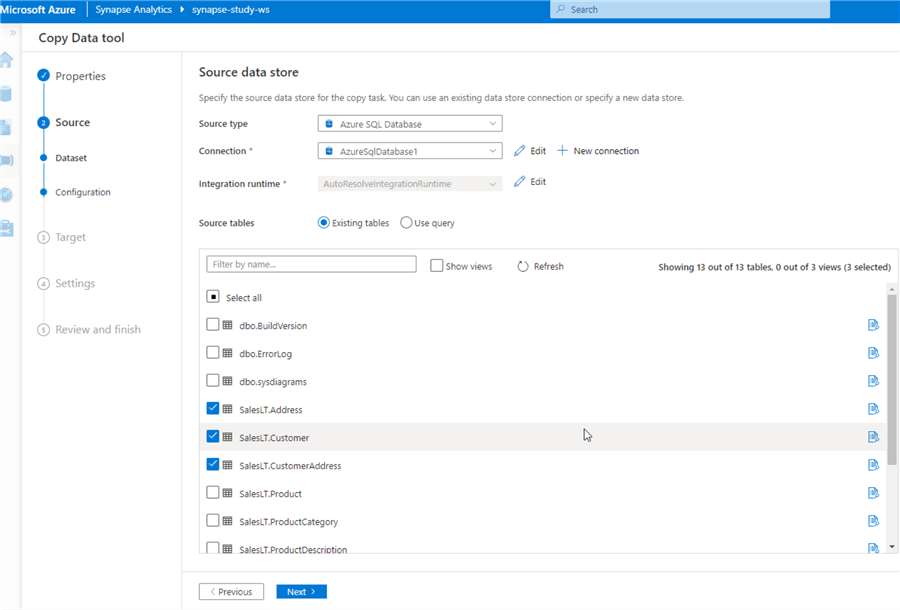

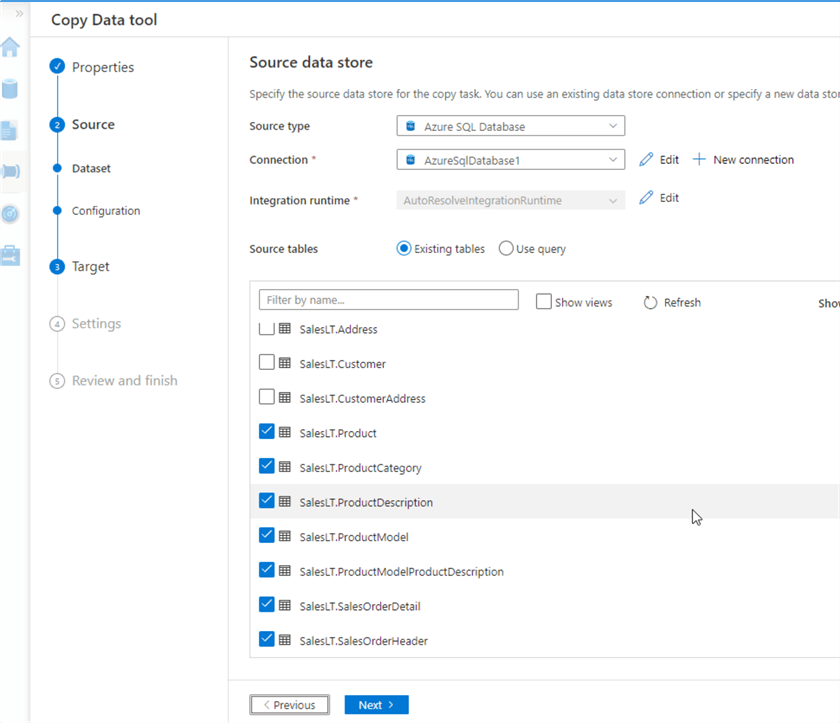

Select Azure SQL DB as a source type and specify the sample AdventureWorks database created earlier. Select three tables, as follows:

Figure 3

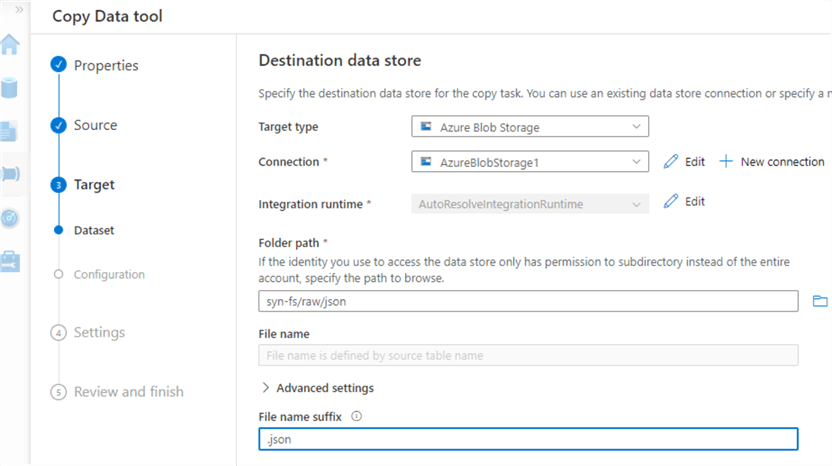

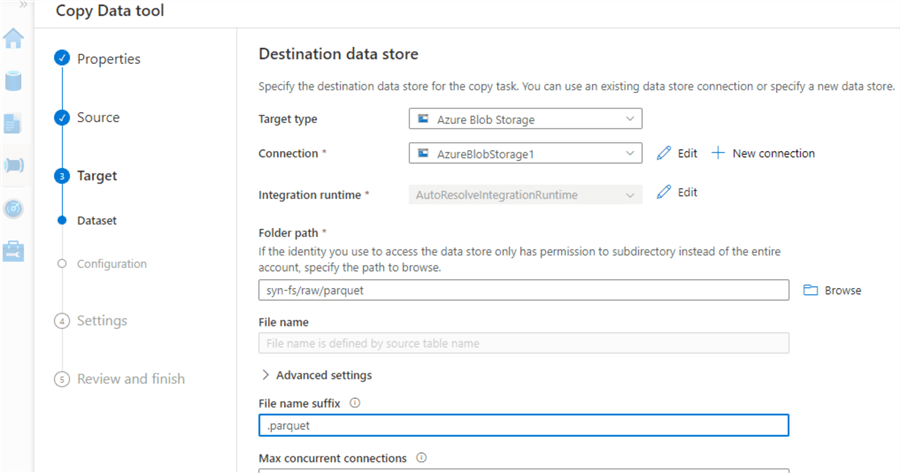

Select Azure Blob Storage as a destination type, and create a connection to the storage account created earlier.

Type syn-fs/raw/json in the Folder path box and .json in the File name suffix box, as follows:

Figure 4

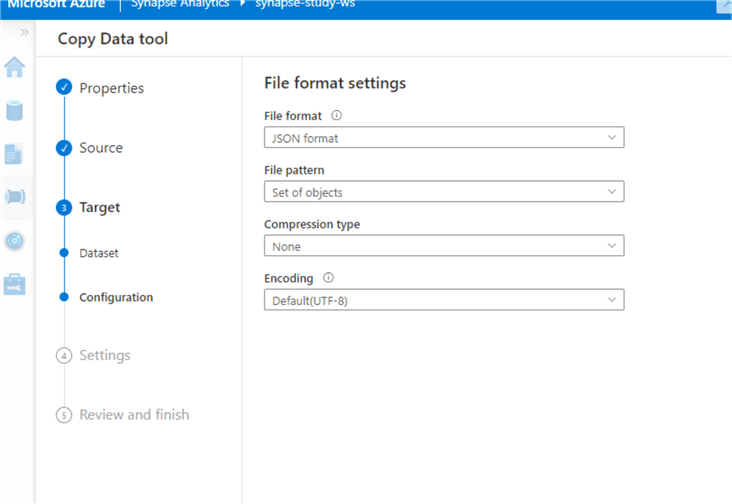

Next, select the JSON format in the File format settings screen:

Figure 5

Leave other settings as default, and assign the name to the pipeline (I've named it as CopyPipeline_Landing_Json).

Now, let's repeat similar steps to extract the remaining tables in the Parquet format. Below are the relevant screenshots.

Source settings:

Figure 6

Target settings:

Figure 7

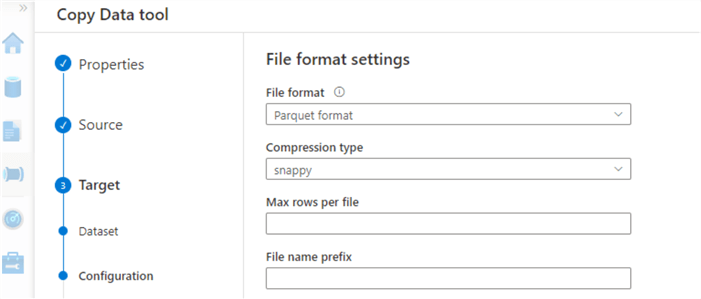

Target format:

Figure 8

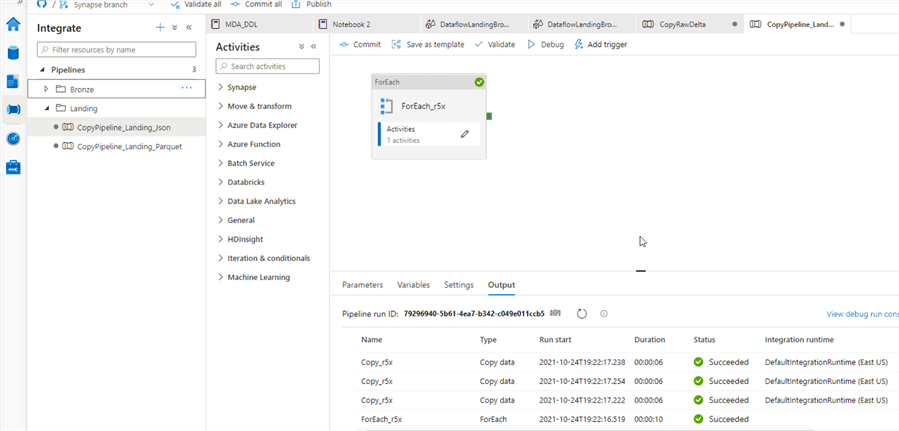

Here are the pipelines, generated by Synapse Analytics:

Figure 9

Note I've also created folders, like Landing, Bronze to organize the pipelines.

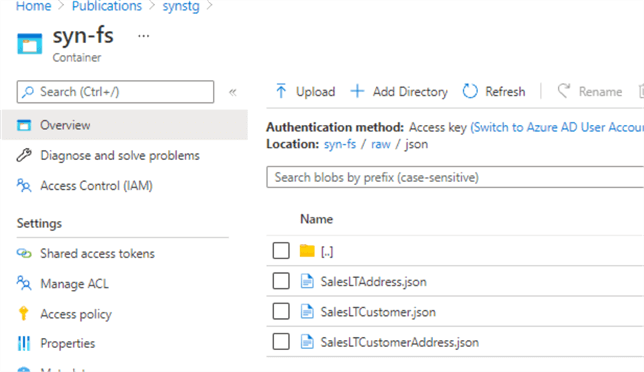

By default, Synapse Analytics will run the pipelines immediately after their configurations are completed. So, let's give them a few minutes to run and check the JSON and Parquet files in their corresponding destination folders:

Figure 10

Next Steps

- Read: Copy Data tool in Azure Data Factory and Synapse Analytics

- Read: Azure Synapse Analytics Overview

- Read: Building Scalable Lakehouse Solutions using Azure Synapse Analytics

- Read: Common Data Warehouse Development Challenges

About the author

Fikrat Azizov has been working with SQL Server since 2002 and has earned two MCSE certifications. He’s currently working as a Solutions Architect at Slalom Canada.

Fikrat Azizov has been working with SQL Server since 2002 and has earned two MCSE certifications. He’s currently working as a Solutions Architect at Slalom Canada.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2021-11-16