By: Fikrat Azizov | Updated: 2021-10-08 | Comments | Related: 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | > Azure Synapse Analytics

Problem

The modern data warehouse concept has evolved significantly in the past few decades. I still remember the times when data warehouses were based only on the on-premises Relational Database Management Systems (RDBMS). However, exponentially increasing data volumes and the necessity to store non-structured data have forced many organizations to move into cloud Big Data platforms in recent years. This move was accompanied by the popularization of Data Lakes. Many organizations have implemented Data Lakes, to store semi-structured and unstructured data, in addition to the structured data. The Data Lakes also allowed them to store large volumes of data without incurring high costs.

However, despite all its advantages, many Data Lake implementation projects had some serious shortcomings, which prevented organizations from leveraging the true power of the Data Lakes. In this series of posts, I'm going to discuss common challenges of implementing Data Lakes, explain the lakehouse concept and build scalable lakehouse solutions, capable of handling large data volumes.

Solution

Common Data Lakes benefits and challenges

The Data Lakes brought many advantages, including:

- Flexibility, of storing the files in a variety of different formats, including structured, semi-structured and unstructured formats.

- Scalability, unlimited capacity, and lower cost. Data Lakes allow ingesting petabytes of data at much lower costs, compared to the RDBMS databases.

- Low upfront development needs - the files could be brought into Data Lakes without the necessity to create table schemas.

However, the traditional Data Lakes also presented some challenges, like:

- Having no schema enforcement often leads to low-quality data.

- Most ETL technologies supporting Data Lakes didn't have built-in transaction support, which forced developers to create their transaction support systems.

- Many Big Data processing systems didn't have row-level update/delete capabilities, which forced developers to re-create the entire table or partition for the sake of updating few rows, which made these ETL jobs costly.

Introducing the Lakehouse concept

The Lakehouse concept, recently brought by Databricks, is designed to solve these problems. This concept is based on a few major ideas, outlined below.

- First, it leverages Spark's Delta Lake technology to store the data in Delta Lake tables residing in the Data Lakes. The Delta Lake tables can control the schema of the data during data persistence. This takes care of the schema enforcement problems, mentioned earlier.

- In addition to this, Delta Lake technology has built-in ACID transactional support, that eliminates the need to build custom transaction support systems.

- Next, the Delta Lake technology provides support for the row-level upsert/delete capabilities, which removes the need to do costly partition/table updates.

- Finally, the Delta Lake technology provides a time-travel functionality, which may be very helpful from audit and troubleshooting standpoints.

- The Delta Lake technology stores data in parquet format and adds series of log files to support row-versioning and time-travel functionality.

To summarize, Delta Lakes includes the best of two worlds - Data Lakes and RDBMS databases.

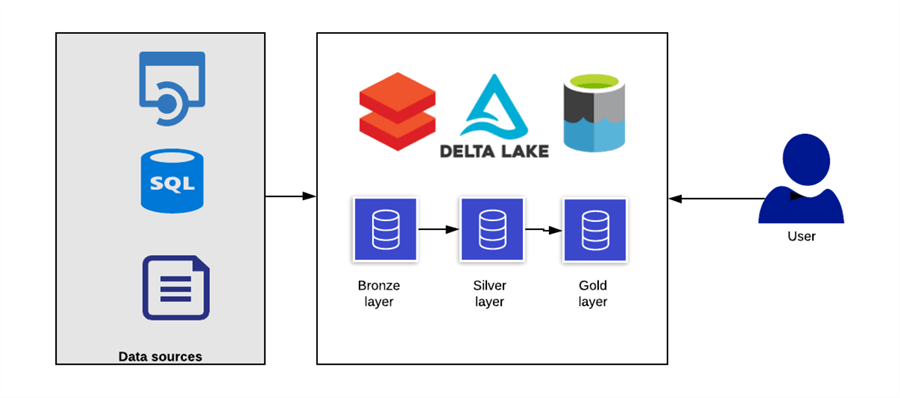

Delta Lake data maturity layers

In a typical data warehousing solution, the data changes its shape, as it passes through different processing stages. The raw data consumed from various sources needs to be cleansed, de-duplicated, enriched and converted into a dimensional model before being brought into a data warehouse. So, it makes sense to have several data zones with different data maturity levels within the Lakehouses. I like Databricks' approach to defining data maturity layers, as outlined below:

- Bronze layer - This layer contains the raw tables with the structures, resembling the source files. Bronze tables typically receive data from source systems as is, with no transformations.

- Silver layer - This layer contains the tables with cleansed, de-duplicated and enriched data.

- Gold layer - This layer represents the data converted into the dimensional model, aggregated and ready to be consumed by business users.

Here's the graphical representation of this approach:

With this approach, the user reports consume the data directly from Delta Lake tables. I should mention that in some cases it makes sense to enhance this model by adding relational or analytical data layers, like Azure SQL Db, Synapse SQL Pool or Azure Analysis Services models, to address specific performance or security requirements.

Data movement and transformation platforms

In the past, when the data were residing on-premises, the SQL Server Integration Services (SSIS) used to be my favorite data movement and transformation tool. Its nice graphical interface allowed building flexible ETL jobs, to address a variety of data warehouse transformation needs. However, scalability limitations of SSIS became apparent when data volumes have grown significantly.

In this series of tips, we're going to discuss the following popular cloud ETL platforms:

- Azure Data Factory/Azure Synapse Analytics - Azure Data Factory (ADF) has many data movement and transformation activities, allowing to move the data between on-premises and cloud data sources. Azure Synapse Analytics has inherited most of these activities. The Mapping Flow is one of these activities, with a graphical interface and flexible data movement and transformation features, that allows you to build data flows resembling SSIS flows. Although the Mapping Flow doesn't require programming skills, it uses a Spark engine under the hood to convert and execute UI-based data flows.

- Spark notebooks - Azure Databricks operates on Spark-based notebooks, based on programming languages, like Python, SQL, R, Scala, etc. Spark is a very powerful, scalable, and open-source technology that covers not just data ETL and data warehousing, but also Data Science needs. Azure Synapse Analytics also includes Spark notebooks.

Comparison of the popular ETL technologies

A typical ETL and data warehousing solution require a few high-level features, like metadata management, data movement and transformation, etc. So, let me compare few popular cloud platforms from the perspective of covering ETL and data warehousing needs:

- Delta Lake metadata management features - Spark notebooks that are part of Azure Databricks and Azure Synapse Analytics, are best suited for creating and changing Delta Lake objects, in my view. You can use Spark SQL to create DDL statements, much like the RDBMS commands.

- Data movement features - These may include fetching data from databases, standalone files, third-party APIs, streaming sources, etc. Although Spark functionality included in Azure Databricks and Azure Synapse Analytics can handle some of these data sources, the Mapping Flow is much more flexible - it has lots of data connectors to well-known data systems, which makes it an ideal data movement tool.

- Data transformation features - The data transformation tasks can be built using either Mapping Flow or Spark notebooks. While the former option represents the code-free experience, the latter option represents the pure code approach, so choosing one tool or another is a matter of developer preference.

- Data flow orchestration features - The ETL jobs for DWH often require job scheduling, complex activity dependencies and job orchestration. Although Azure Databricks has some job scheduling capabilities, Azure Synapse Analytics data integration pipelines are better suited to address complex data flow orchestration needs, in my view.

In the next few tips, I'm going to walk you through common data warehouse build tasks and explain how to build a scalable Lakehouse using the technologies discussed here. Although I'll provide detailed explanation of each step, familiarity with the basics of the Azure Data Factory and Synapse Analytics would help you understand the material better, so please take a look at the links provided below.

Next Steps

- Read: What is a Lakehouse?

- Read: Build Lakehouses with Delta Lake

- Read: Azure Synapse Analytics Overview

- Read: Azure Data Factory Overview

- Read: Mapping data flows in Azure Data Factory

About the author

Fikrat Azizov has been working with SQL Server since 2002 and has earned two MCSE certifications. He’s currently working as a Solutions Architect at Slalom Canada.

Fikrat Azizov has been working with SQL Server since 2002 and has earned two MCSE certifications. He’s currently working as a Solutions Architect at Slalom Canada.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2021-10-08